One of the best features of using FPGAs for a design is the inherent parallelism. Sure, you can write software to take advantage of multiple CPUs. But with an FPGA you can enjoy massive parallelism since all the pieces are just hardware, in the same way that every light switch in your house operates in parallel with the others. There’s a new edition of a book titled Parallel Programming for FPGAs that explores the topic in depth, and it is available under a Creative Commons license. In particular, the book focuses on using Vivado HLS instead of the more traditional Verilog or VHDL.

HLS (high-level synthesis) allows a designer to express a high-level algorithm in C, C++, or SystemC. Given a bit more information, HLS will convert that into an FPGA configuration. That doesn’t mean, though, that you can just cut and paste ordinary C code. HLS has several restrictions because it is compiling to logic gates, not to a sequence of instructions. Under the hood, it actually generates Verilog or VHDL first, but if you do it right, that should be transparent to you.
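To give a feel for what those restrictions mean in practice, here is a minimal sketch of a small FIR filter written the way HLS tools generally expect: array sizes and loop bounds are compile-time constants, and there is no dynamic allocation. The names `NUM_TAPS`, `data_t`, and `fir` are made up for illustration and are not taken from the book. The pragmas are standard Vivado HLS directives; an ordinary C++ compiler simply ignores them.

```cpp
// Hypothetical names throughout: NUM_TAPS, data_t and fir() are illustrative only.
const int NUM_TAPS = 4;
typedef int data_t;

// One call consumes one input sample and produces one output sample.
data_t fir(data_t sample, const data_t taps[NUM_TAPS]) {
    // Static storage persists between calls, like registers in hardware.
    // The size is fixed at compile time because the tool must know how
    // much hardware to build (no malloc or new).
    static data_t shift_reg[NUM_TAPS] = {0};
#pragma HLS array_partition variable=shift_reg complete

    data_t acc = 0;
    // Constant loop bound, so the loop can be unrolled into parallel hardware.
    for (int i = NUM_TAPS - 1; i > 0; i--) {
#pragma HLS unroll
        shift_reg[i] = shift_reg[i - 1];   // shift older samples along
        acc += shift_reg[i] * taps[i];     // multiply-accumulate
    }
    shift_reg[0] = sample;
    acc += sample * taps[0];
    return acc;
}
```

Feeding an impulse (a 1 followed by zeros) through the function should return the taps one after another, which is an easy sanity check in plain software before any synthesis.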

After the introduction, the book is more like a series of monographs on very specific topics, but the depth of each is very impressive. There are plenty of DSP examples, of course. There’s also general math, so if you ever wondered how to compute a sine or cosine in an FPGA, read chapter 3.
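The classic hardware technique for sine and cosine is CORDIC, which rotates a vector through a fixed sequence of ever smaller angles. The following is a rough sketch of the idea rather than the book’s code: it uses `double` to keep it readable, assumes the angle is in radians and within about ±π/2, and the names `NUM_ITER`, `ATAN_TABLE`, and `cordic_sincos` are invented here.

```cpp
// Hypothetical names: NUM_ITER, ATAN_TABLE, cordic_sincos(). Illustrative sketch only.
const int NUM_ITER = 16;

// atan(2^-i) for i = 0..15, precomputed so hardware only needs a small ROM.
static const double ATAN_TABLE[NUM_ITER] = {
    0.7853981634, 0.4636476090, 0.2449786631, 0.1243549945,
    0.0624188100, 0.0312398334, 0.0156237286, 0.0078123411,
    0.0039062301, 0.0019531225, 0.0009765622, 0.0004882812,
    0.0002441406, 0.0001220703, 0.0000610352, 0.0000305176};

// Computes sin(theta) and cos(theta) for theta in radians, |theta| <= pi/2.
void cordic_sincos(double theta, double &s, double &c) {
    double x = 0.6072529350;  // 1 / CORDIC gain, so the result has unit length
    double y = 0.0;
    double factor = 1.0;      // 2^-i; in fixed point this multiply is just a shift
    for (int i = 0; i < NUM_ITER; i++) {
        // Rotate toward the remaining angle: clockwise or counterclockwise.
        double sigma = (theta < 0) ? -1.0 : 1.0;
        double x_next = x - sigma * y * factor;
        double y_next = y + sigma * x * factor;
        theta -= sigma * ATAN_TABLE[i];
        x = x_next;
        y = y_next;
        factor *= 0.5;
    }
    c = x;
    s = y;
}
```

The appeal for an FPGA is that a fixed-point version needs only adders, shifts, and a 16-entry table, with no multipliers at all.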

Chapter 9 with its discussion of video processing will be of great interest, and there are discussions of sorting algorithms and Huffman encoding, too.

HLS is a great tool, but we fear it will confuse people even further. FPGAs don’t run software (unless you build a CPU on one), but many people mistake HDL descriptions for software because they look superficially similar. HLS makes it even worse because it really is C or C++ code. But it doesn’t compile to object code like a normal program. Of course, that’s only a problem when you have to convince a client or a manager that you can’t apply certain software methodologies to FPGA development. For actual use, HLS is great and can simplify development considerably.

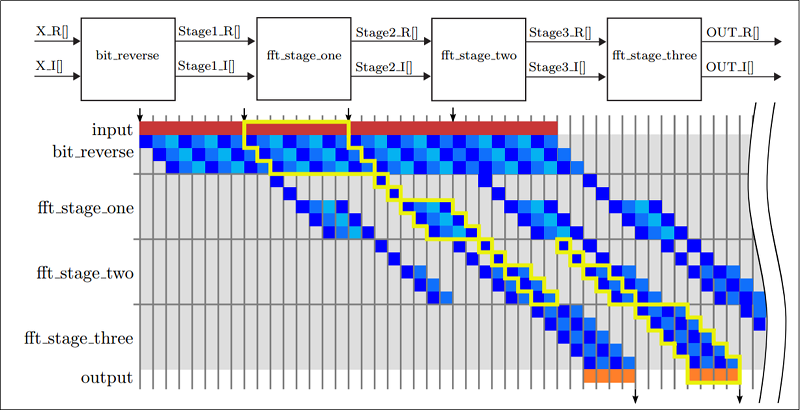

As an example of what HLS looks like, here’s an FFT which, admittedly, relies on some other C functions that aren’t shown. But it is much more intuitive, especially if you are a programmer, than similar code would be in VHDL or Verilog.

    void fft(DTYPE X_R[SIZE], DTYPE X_I[SIZE], DTYPE OUT_R[SIZE], DTYPE OUT_I[SIZE])
    {
    #pragma HLS dataflow
        /* Intermediate buffers between the pipeline stages */
        DTYPE Stage1_R[SIZE], Stage1_I[SIZE];
        DTYPE Stage2_R[SIZE], Stage2_I[SIZE];
        DTYPE Stage3_R[SIZE], Stage3_I[SIZE];

        /* Each call feeds the next; the dataflow pragma lets them overlap */
        bit_reverse(X_R, X_I, Stage1_R, Stage1_I);
        fft_stage_one(Stage1_R, Stage1_I, Stage2_R, Stage2_I);
        fft_stages_two(Stage2_R, Stage2_I, Stage3_R, Stage3_I);
        fft_stage_three(Stage3_R, Stage3_I, OUT_R, OUT_I);
    }
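The helper functions aren’t shown above. As a rough idea of what the first one might look like, here is a sketch of the bit-reversal step, which reorders the input so the later butterfly stages can work in place. The values of `SIZE` and `DTYPE`, the constant `LOG2_SIZE`, and the helper `reverse_bits` are assumptions for illustration; the listing above does not define them.

```cpp
// Assumed values: the listing above does not define SIZE or DTYPE.
const int SIZE = 8;        // must be a power of two
const int LOG2_SIZE = 3;   // log2(SIZE)
typedef float DTYPE;

// Reverse the low LOG2_SIZE bits of an index, e.g. 001 -> 100.
// (reverse_bits is a hypothetical helper, not from the article.)
unsigned reverse_bits(unsigned index) {
    unsigned rev = 0;
    for (int b = 0; b < LOG2_SIZE; b++) {
        rev = (rev << 1) | (index & 1);  // move the lowest bit onto the result
        index >>= 1;
    }
    return rev;
}

// Copy each input sample to the position given by its bit-reversed index.
void bit_reverse(const DTYPE X_R[SIZE], const DTYPE X_I[SIZE],
                 DTYPE OUT_R[SIZE], DTYPE OUT_I[SIZE]) {
    for (int i = 0; i < SIZE; i++) {
        unsigned r = reverse_bits(i);
        OUT_R[r] = X_R[i];
        OUT_I[r] = X_I[i];
    }
}
```

Because every function reads one set of buffers and writes another, the dataflow pragma in the top-level function can let each stage start on new data while the next stage is still busy with the previous block.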

The book is meant to be a university text, so it isn’t light reading. Still, it is a great treatment of the topic and the price is right.

If you want a gentler introduction to classic FPGA development, we have that. There are other ways to do C to FPGA, too.

In school they taught us some methods of using VHDL to do parallel programming in computing itself (not just in FPGAs).

But I never dived into it.

Parallel designs in FPGAs are a massive incentive to use an FPGA where you ordinarily would not … With some debugging to get propagation delay perfect you can do some surprisingly large and fast calculations in real time using only the signal clock

Unless you have a multi-core processor, computers are serial processors only, and programming one with a hardware description language (VHDL) is nonsensical. I wonder at your understanding of the language and the processes that turn it into FPGA config files if you are tweaking things to get propagation delays right. Do you mean propagation delays? As in ‘intra clock cycle timing’ as opposed to ‘inter clock cycle timing’? The former is a compile/fit tools problem (assuming you have described the hardware appropriately) and the latter is purely a design problem.

My wondering continues with ‘using only the signal clock’: there are millions of applications today that have multiple signal clocks and other clocks. Many FPGAs include complex clocking functions, PLLs for example, to generate clock frequencies higher and lower than the external clocks, or clocks with a specific phase relationship to a reference clock, perhaps for latching input and output data to avoid metastable states on inputs or to avoid setup and hold time issues on synchronous serial logic blocks.

Just had to clear up that first point and then couldn’t stop myself.

I missed out on FPGAs first time at uni. The first data processing semiconductor our classes were exposed to was the NS SCMP 8-bit micro (1977 or so), needing support chips and trundling along at around 1 MHz or so… First application was radio fox hunting – had a 4-element yagi on top of my 1972 Ford Escort with a hand crank on the passenger side – that’s where we expected to use the scamp to offer directional info at first then “maybe” more the array, too much to do :/

It was a few years later that GALs from AMD came along with their use in the 4054 storage screen tape drive computers. Nifty stuff back then for bipolar programmable logic, some interesting protocol projects…

There are all sorts of benefits of FPGAs, especially in handling multiple HDMI streams with a slave processor handling a few of the more complex aspects. I will need a refresher as I still dream in 8051 code occasionally, which is a heck of a lot better than the SCMP code I’ve mostly forgotten :-(

I imagine one of the advantages this way is bringing to bear all the conventional programming tools to the field.

FPGAs are a cancer that is killing the DIY electronics scene.

Uh, why? This seems like a really good time for hobby electronics.

That’s a really stupid take. FPGAs can perform tasks that processors cannot. Processors can also perform tasks that FPGAs cannot. The only alternative to an FPGA is an ASIC or building the specific circuit yourself like we’re back in the 1950s. This would take dramatically more time, money, and power, and be flat-out infeasible for a DIYer. FPGAs, and CPLDs especially, are a way that a DIY person can reasonably attain ASIC-level performance without having to spend tens of thousands to have a series of them manufactured.

Here’s the proper link: http://kastner.ucsd.edu/hlsbook/

I hope the parallel programming in the book is a bit clearer than this…

FFT data is usually tapered, or modified by a “window function” – to account for the sample size not perfectly matching the signals in the sample. For an example, see: https://en.wikipedia.org/wiki/Window_function#Cosine-sum_windows

You can also have “overlap” in samples. 25% is a value I hear a lot.

So you have: raw data, being copied, re-sized by factors of a window function, sorted / evaluated by one FFT.

[bit reverse etc. in your diagram]

At the SAME time, the SAME raw data is ALSO being copied, re-sized by DIFFERENT factors, and sorted / evaluated by a second FFT.

This being an FPGA, it is also quite possible the same raw data is also being fed into 1 or more DSP filters, with the results feeding a 3rd and 4th FFT, giving you nice spectrum displays of “before” and “after” the DSP filter.
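As a rough illustration of the windowing and overlap steps described in this comment, here is a sketch that copies one frame out of the raw data and scales it by a Hann window (one of the cosine-sum windows on the linked page). The frame size, the names `FRAME`, `HOP`, and `window_frame`, and the choice of Hann are all assumptions made for the example.

```cpp
#include <cmath>

// Assumed values and names for illustration only.
const int FRAME = 8;                 // samples per FFT
const int HOP = FRAME - FRAME / 4;   // 25% overlap: successive frames share FRAME/4 samples

// Copy one frame starting at raw[start] and scale it by a Hann window.
// In hardware the window coefficients would normally sit in a small ROM
// instead of being computed with cos() each time.
void window_frame(const float raw[], int start, float out[FRAME]) {
    const float PI = 3.14159265358979f;
    for (int n = 0; n < FRAME; n++) {
        float w = 0.5f - 0.5f * std::cos(2.0f * PI * n / (FRAME - 1));
        out[n] = raw[start + n] * w;
    }
}
```

Frame k would start at sample `k * HOP`. Two copies of this function with different window tables have no data dependence on each other, so on an FPGA they could both read the same raw samples and feed separate FFTs at the same time.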

Am I the only one that finds the term “parallel programming for FPGAs” confusing? I might be a bit old school because to me that sounds like ‘parallel loading configuration files’ as opposed to the intended meaning ‘parallel processing in FPGAs’. It seems the article talks a lot about the languages available for programming FPGAs which only exacerbates the confusion and the only place parallel processing is discussed in clear terms is in the comments. Inherent ‘parallelism’ just sounds like 80s buzz word speak to me.

If the article had briefly defined this ‘parallelism’ and then expanded on that topic, it would have been of far more benefit both to newbies who haven’t toyed with these things yet and to the more seasoned who may not be aware of new languages that make it easier to coordinate separate interacting processes and processes that operate in parallel. As it is, I think there may be many for whom the book would be useful who won’t go looking because they read this article, which would be a shame.

I get your confusion. One of the major goals of the book is to get people (in particular, ‘programmers’ rather than ‘circuit designers’) excited about using FPGAs in new ways. FPGAs are a great parallel processing platform, but the development model (RTL design) just wasn’t accessible to most people with a software background. In addition, although RTL design scales up reasonably well for large design teams working on large projects, it hasn’t worked as well for moderately sized projects without well-defined requirements. For lack of a better word, it’s just not very ‘agile’.

Hopefully the title gets at this idea.

In terms of the book, it focuses on using High Level Synthesis to extract parallelism from sequential programs. It’s not uncommon to have programs that execute the equivalent of thousands of instructions each clock cycle. The key difference with RTL design is focusing on function-level and loop-level parallelism, rather than just cycle-level parallelism. As for synthesis from *concurrent* programs, rather than sequential programs, SystemC is one option. However, effectively using SystemC at a higher level than RTL design requires a good understanding of how concurrent processes will interact, otherwise it becomes very difficult to write correct, understandable programs.
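To make loop-level parallelism a little more concrete, here is a small sketch of a matrix-vector multiply written as an ordinary sequential loop nest, with Vivado HLS directives asking the tool to run it in parallel. The size `N` and the function name `matvec` are picked for illustration, and the code is not taken from the book.

```cpp
// Illustrative sketch: N and matvec() are hypothetical names.
const int N = 4;

void matvec(const int A[N][N], const int x[N], int y[N]) {
    // Split the arrays into individual registers so that all elements
    // of a row (and of x) can be read in the same clock cycle.
#pragma HLS array_partition variable=A complete dim=2
#pragma HLS array_partition variable=x complete
    for (int row = 0; row < N; row++) {
        // Ask for a new row to start every clock cycle. Pipelining this
        // loop causes the tool to fully unroll the inner loop, giving
        // N multipliers working side by side.
#pragma HLS pipeline II=1
        int acc = 0;
        for (int col = 0; col < N; col++) {
            acc += A[row][col] * x[col];
        }
        y[row] = acc;
    }
}
```

The source still reads as a plain sequential program, and compiles as one with a normal C++ compiler; the parallelism comes entirely from how the tool is told to schedule the loops.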

LabVIEW is not always well received, especially in the hacker space, since it is a closed-source programming language that regularly locks you into specific hardware. But the major thing it has going for it is that, being a graphical programming language, the compiler can recognize places where things can happen in parallel and just does it that way without any extra work. This is why programming for an FPGA in LabVIEW is pretty easy and doesn’t require any additional training over programming for a Windows target or an ARM target. That being said, NI is a hardware company and at the moment only allows for programming on their FPGA boards. Here was the first video I saw on YouTube related to LabVIEW and FPGA.

https://youtu.be/4MYrtynlWxg