The future, if you believe the ad copy, is a world filled with cameras backed by intelligence, neural nets, and computer vision. Despite the hype, this may actually turn out to be true: drones are getting intelligent cameras, self-driving cars are loaded with them, and in any event, a smart camera makes a great toy.

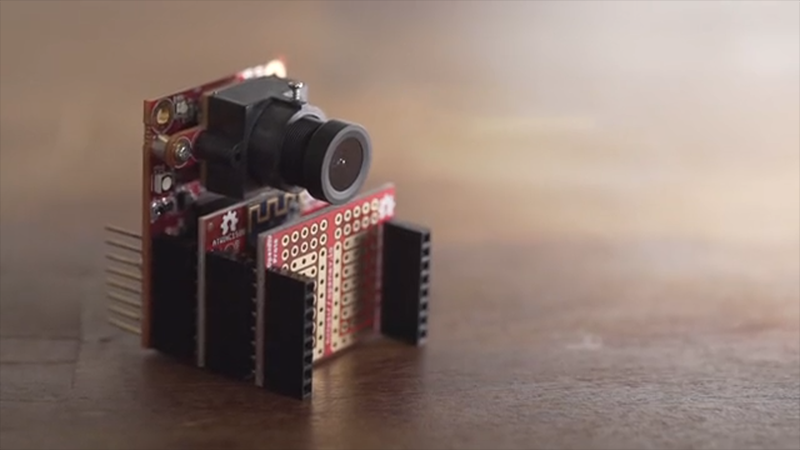

That’s what makes this Kickstarter so exciting. It’s a camera module, yes, but there are also some smarts behind it. The OpenMV is a MicroPython-powered machine vision camera that gives your project the power of computer vision without the need to haul a laptop or GPU along for the ride.

The OpenMV actually got its start as a Hackaday Prize entry focused on one simple idea: cheap camera modules are everywhere, so why not attach a processor to the camera and do the image processing on board? The first version of the OpenMV could do face detection at 25 fps and color detection at more than 30 fps, and it became the basis for hundreds of different robots loaded up with computer vision.

This crowdfunding campaign is financing the latest version of the OpenMV camera, and there are a lot of changes. The camera module is now removable, so in addition to the usual color rolling-shutter sensor, the OpenMV now supports global-shutter and thermal sensors. A faster microcontroller lets this version do multi-blob color tracking at 80 fps. With the addition of a FLIR Lepton sensor, the camera does thermal sensing, and thanks to a new library, the OpenMV also does number detection with the help of neural networks.
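At its core, multi-blob color tracking means checking each pixel against a color range and then grouping matching neighbors into connected regions. Below is a minimal pure-Python sketch of that idea. It assumes an RGB image stored as a list of rows of `(R, G, B)` tuples. The names `find_color_blobs` and `in_range`, the dictionary fields, and the thresholds in the demo are hypothetical illustrations. This is not OpenMV's API or implementation; on the camera itself, this work happens inside the firmware.

```python
from collections import deque
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def in_range(p: Pixel, lo: Pixel, hi: Pixel) -> bool:
    """True if every channel of p lies within [lo, hi] inclusive."""
    return all(low <= c <= high for c, low, high in zip(p, lo, hi))


def find_color_blobs(img: List[List[Pixel]], lo: Pixel, hi: Pixel,
                     min_pixels: int = 1) -> List[Dict[str, object]]:
    """Group in-range pixels into 4-connected blobs, scanning row by row."""
    height = len(img)
    width = len(img[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    blobs: List[Dict[str, object]] = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or not in_range(img[y][x], lo, hi):
                continue
            # Breadth-first flood fill starting from this seed pixel.
            seen[y][x] = True
            queue = deque([(x, y)])
            xs: List[int] = []
            ys: List[int] = []
            while queue:
                cx, cy = queue.popleft()
                xs.append(cx)
                ys.append(cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and in_range(img[ny][nx], lo, hi)):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            # Drop specks smaller than min_pixels (simple noise rejection).
            if len(xs) >= min_pixels:
                x0, y0 = min(xs), min(ys)
                blobs.append({
                    "pixels": len(xs),
                    "rect": (x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1),  # x, y, w, h
                    "centroid": (sum(xs) / len(xs), sum(ys) / len(ys)),
                })
    return blobs


if __name__ == "__main__":
    # A 6x4 synthetic frame: black background, a 2x2 red square and one red speck.
    frame = [[(0, 0, 0)] * 6 for _ in range(4)]
    for px, py in [(1, 1), (2, 1), (1, 2), (2, 2)]:
        frame[py][px] = (255, 0, 0)
    frame[0][5] = (250, 10, 10)
    for blob in find_color_blobs(frame, (200, 0, 0), (255, 60, 60), min_pixels=2):
        print(blob)
```

Per-pixel Python is far too slow to reach anything like 80 fps, so treat this as a sketch of the logic only. A real-time tracker running on a microcontroller would need a much more optimized implementation.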

We’ve seen a lot of builds using the OpenMV camera, and it’s getting to the point where you can’t compete in an autonomous car race without this hardware. This new version has all the bells and whistles, making it one of the best ways we’ve seen to add computer vision to any hardware project.

Also check out jevois.org

I have no idea if it’s smaller than this cam, but it’s pretty damn small. And the code is all open and on GitHub. I modified it and compiled it myself after I bought one, because there was a newer version of the module I wanted to use.

Hi Miles, this is Kwabena, OpenMV’s creator. Yeah, the JeVois is about the same size as the OpenMV Cam. JeVois is targeted more toward folks wanting a full Linux experience, versus just getting simple color tracking and similar things working for hobbyist robotics. If you watch our Kickstarter video, you’ll notice the heavy focus on simple robotics projects, which is what we optimized the camera for.

“The future, if you believe the ad copy, is a world filled with cameras backed by intelligence, neural nets, and computer vision.”

Just regular surveillance cameras. Moving the smarts closer to them has advantages.

I’m only getting dystopian Skynet’ish vibes.

>Moving the smarts closer to them has advantages.

I wonder if this wouldn’t lead to a Gibson-esque disagreement among a cluster of cameras about what actually happened with some particular incident.

Very good sci-fi premise.

Rashomonitors?

Bravo

Actually, simple intelligence is creeping towards the edge, and is likely to become the norm.

The Google AIY kit is actually pretty nice if you want to make a simple autonomous system that can do basic vision tasks for under $100. And you can run a decent image classifier on low-end Android phones, so there are readily available devices that have crossed from novelty to usefulness, and there are use cases that favor putting the intelligence at the endpoint.

That said, I’m not sure edge AI is the sweet spot for this device: the toolchain for developing a classifier and putting it on the Google AIY Vision Kit or an Android phone is pretty straightforward.

The tiniest hacker-friendly, affordable computer vision platform is http://www.jevois.org, and it’s way more powerful and even tinier than this one.

Hi Walter, the JeVois draws 800 mA running at full power. The OpenMV Cam draws 140 mA at full power. Sure, the JeVois is more powerful, but you need a fan and a battery with it. So if you’re using it for your project, you’re looking at doing something completely different from what we built the OpenMV Cam for. In particular, our goal with the product was just to be something like a CMUcam (or Pixy), i.e. a simple color-tracking camera that could be programmed to control devices in the real world, removing some of the problems folks like beginner students have with making things work.
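To see what the 800 mA vs. 140 mA difference means in practice, a rough runtime estimate is battery capacity divided by average current draw. The sketch below uses a hypothetical 2000 mAh pack, which is not a figure from the thread. It assumes both currents are measured at the voltage the pack's mAh rating refers to, and that the full-power figures are sustained. `efficiency` is an assumed fudge factor for regulator losses. With these assumptions, the pack lasts 2.5 h on the JeVois and about 14.3 h on the OpenMV Cam.

```python
def runtime_hours(capacity_mah: float, draw_ma: float, efficiency: float = 1.0) -> float:
    """Estimate runtime as usable capacity divided by average current draw.

    Assumes draw_ma is measured at the same voltage the battery's mAh rating
    refers to; efficiency (0-1] is an assumed factor for conversion losses.
    """
    if draw_ma <= 0 or not 0 < efficiency <= 1:
        raise ValueError("draw_ma must be positive and 0 < efficiency <= 1")
    return capacity_mah * efficiency / draw_ma


if __name__ == "__main__":
    pack_mah = 2000  # hypothetical battery, not a figure from the thread
    for name, draw in (("JeVois", 800), ("OpenMV Cam", 140)):
        print(f"{name}: {runtime_hours(pack_mah, draw):.1f} h at {draw} mA")
```

Either way the ratio stays the same: 800 / 140 is roughly 5.7, so the OpenMV Cam runs several times longer on any given battery.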

I bought one of the earlier OpenMV devices and it was a lot of fun to play with. I had it up and running in minutes.

Did you build anything relevant with it?

The Pixy2 (https://pixycam.com/) is advertised with googly eyes, so it must be pretty great too.

The Pixy camera remains a great camera for color tracking using the Arduino. It really works well as an attachment to the Arduino. The goal with the OpenMV Cam was to merge the two devices into one.

I do hope these vision systems can deal with the “elephant” in the room.

https://www.quantamagazine.org/machine-learning-confronts-the-elephant-in-the-room-20180920/

What an interesting article!

I started building “smart” cameras in 2013 using dual-core Android sticks with Linux on them. You can build a more generic, universal system for the same or less money. I built one based on the $9 CHIP (remember that?) for about $35 (https://github.com/sgjava/opencv-chip). It includes the full OpenCV stack, not some cheesed-down software stack.

The issue of false detections in CV (as described in the “elephant in the room” article linked above) can be addressed through training or by adding other sensors like ultrasonic, PIR, radar, etc. Just as we do not rely on one sense, neither should your CV/ML projects.
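One simple way to act on that advice is to count a vision detection as real only when an independent sensor agrees. The sketch below assumes a hypothetical classifier that emits timestamped detections with a confidence score, and a PIR sensor that logs its trigger times. `Detection`, `confirmed`, `min_conf`, and `window_s` are illustrative names, and the default thresholds are arbitrary. This is a plain agreement (AND) rule, not a probabilistic fusion method.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Detection:
    t: float           # timestamp in seconds
    label: str         # class name from the (hypothetical) vision model
    confidence: float  # model score in [0, 1]


def confirmed(detections: Sequence[Detection], pir_times: Sequence[float],
              min_conf: float = 0.6, window_s: float = 2.0) -> List[Detection]:
    """Keep confident vision detections that a PIR trigger corroborates.

    A detection survives only if its score is at least min_conf AND some PIR
    event occurred within window_s seconds of it.
    """
    kept = []
    for d in detections:
        if d.confidence < min_conf:
            continue  # the camera alone is not sure enough
        if any(abs(d.t - p) <= window_s for p in pir_times):
            kept.append(d)  # a second, independent sensor agrees
    return kept


if __name__ == "__main__":
    dets = [Detection(1.0, "person", 0.9), Detection(10.0, "person", 0.95)]
    print(confirmed(dets, pir_times=[0.5]))
```

Requiring agreement trades some sensitivity for fewer false positives. A PIR sensor responds to changes in infrared, so a person standing still may not trip it, and the window and thresholds would need tuning for any real setup.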

Yeah, CHIP was really cool, and we wanted to build around it. But they went out of business. It would have been really awesome to use. However, the Pi Foundation killed them with the Pi Zero. CHIP was awesome because they offered package-on-package stacked DRAM with the CPU, along with driver support.

Note that quoting super-low prices based on parts you can assemble from different providers online does not make for a product when you have to manufacture thousands. Your ability to source parts effectively with the capital you have really limits what you can build.

For example, unless you start out with a large amount of cash, it’s impossible to manufacture at volumes large enough to reach an ultra-low price. Then you have to add distributor overhead to your selling price, which is on the order of a 1.3x increase.

I was looking at it as more of a DIY project for the price, not scaling it up to a mass-produced item. I try to keep all the software generic, so I can deploy it on most ARM-based SBCs (and the specific hardware becomes less significant). My new favorite is the NanoPi Duo because I can get it for ~$15.00 shipped when ordering 4 or 5 at a time. Being quad-core really helps when you cannot leverage the GPU on the SBC for CV or ML. I had this stuff working in my security system long before Ring came along :) Too bad I only thought of it as a hobby and didn’t monetize it :(

I apply the same concept to sensor interfacing by doing it generically in user space (https://github.com/sgjava/userspaceio). That way I’m not stuck using some hacked-up WiringPi or RPi.GPIO for a particular SBC.