Compared to incandescent lightbulbs, LEDs produce a lot more lumens per watt of input power — they’re more efficient at producing light. Of course, that means that incandescent light bulbs are more efficient at producing heat, and as the days get shorter, and the nights get colder, somewhere, someone who took the leap to LED lighting has a furnace that’s working overtime. And that someone might also wonder how we got here: a world lit by esoteric inorganic semiconductors illuminating phosphors.

The fact that diodes emit light under certain conditions has been known for over 100 years; the first light-emitting diode was discovered at Marconi Labs in 1907 in a cat’s whisker detector, the first kind of diode. This discovery was simply a scientific curiosity until another discovery at Texas Instruments revealed infrared light emissions from a tunnel diode constructed from a gallium arsenide substrate. This infrared LED was then patented by TI, and a project began to manufacture these infrared light emitting diodes.

But infrared light is invisible to the human eye, and not useful for any sort of indication or lighting. The first visible-spectrum LED was built at General Electric in 1962, with the first commercially available (red) LEDs produced by the Monsanto Company in 1968. HP began production of LEDs that year, using the same gallium arsenide phosphide used by Monsanto. These HP LEDs found their way into very tiny seven-segment LED displays used in HP calculators of the 1970s.

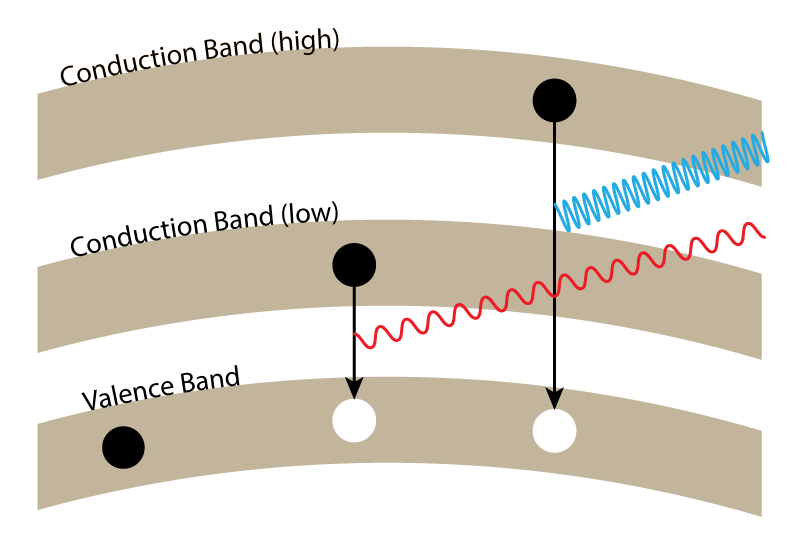

From the infrared LEDs of the early 1960s to the red LEDs of the late 1960s, the 1970s saw orange-red, orange, yellow, and finally green LEDs. There’s a trend to these developments, and it has to do with band gaps. For a diode to generate light, you must first put energy into an electron. This energy makes the electron jump from its natural state in a valence band to a conduction band. This energy isn’t enough to keep the electron in the conduction band, so it will eventually fall back into the hole it left in the valence band. In doing so, it releases energy back again in the form of a photon.

The more energy it took to move the electron into a conduction band, the more energy that is released as a photon, in the form of higher frequency light. The reason that infrared LEDs came before red LEDs, and green LEDs came after that is that it’s simply harder to climb these bandgaps and find an LED substrate that will emit higher frequencies of light.
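To put rough numbers on that trend, the Planck relation (wavelength = hc / energy) turns a bandgap into an emission wavelength. The sketch below is only an illustration: the bandgap values are approximate figures assumed here, not taken from the article, and a real LED emits a band of wavelengths rather than a single line.

```python
# Photon wavelength from bandgap energy: wavelength = h*c / E.
# With E in electron-volts and wavelength in nanometres, h*c is about 1239.84 eV*nm.
HC_EV_NM = 1239.84

def wavelength_nm(bandgap_ev: float) -> float:
    """Approximate emission wavelength for a given bandgap energy."""
    return HC_EV_NM / bandgap_ev

# Approximate, assumed bandgaps for illustration only.
examples = {
    "GaAs (infrared)": 1.42,
    "red emitter": 1.9,
    "green emitter": 2.3,
    "blue emitter": 2.7,
}

for name, gap in examples.items():
    print(f"{name}: {gap:.2f} eV -> {wavelength_nm(gap):.0f} nm")
```

The larger the gap, the shorter the wavelength, which is the same ordering in which the colors arrived historically.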

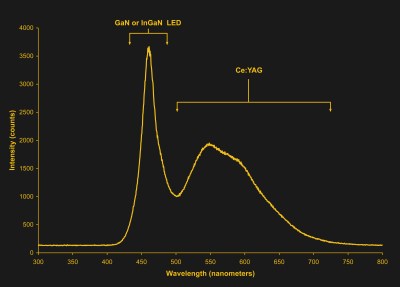

Infrared, red, and even green LEDs were “easy”, but blue LEDs require a much larger bandgap, and therefore required more exotic materials. The puzzle behind making a high-brightness blue LED was first cracked in 1994 at the Nichia Corporation using indium gallium nitride. At the same time, Isamu Akasaki and Hiroshi Amano at Nagoya University developed a gallium nitride substrate for LEDs, for which they won the 2014 Nobel Prize in Physics. With red, green, and blue LEDs, the only thing stopping anyone from building a white LED was putting all these colors in the same package.

Red, Green, but mostly Blue LEDs

The first white LEDs weren’t explicitly white LEDs. Instead, red, green, and blue LEDs were packed into a single LED enclosure. If you mix red, green, and blue light, you will get white light; it’s really just a matter of getting the correct proportions of different colored photons.

This remains the standard for RGB LEDs, and some have even experimented with improving the range of color these LEDs can produce. The human eye is extremely sensitive to green frequencies of light, and by adding a fourth LED to a package (it’s best called ‘emerald’, or a slightly bluer shade of green than what we’re used to in green LEDs) you can make an LED with a wider color range, or if you prefer, a whiter white.

This was the first method of developing a white LED, and while the LED light bulbs you pick up at the hardware store don’t have individual red, green, and blue LEDs inside, it is still a fantastically popular way of creating more colors with LEDs. Those neopixels, WS2812s, or APA101s, all have red, green, and blue LEDs tucked inside one enclosure. Some of the more advanced individually addressable RGB LEDs even add a fourth LED, for white. But how are those individual white LEDs made?

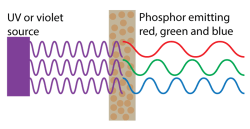

The first white LEDs, made without three individual LEDs, were made with the magic of phosphors. Phosphors are a well-understood science, most commonly found in lighting applications in fluorescent bulbs. Fluorescent bulbs don’t produce white light on their own; they produce ultraviolet light by exciting mercury vapor. However, by coating the inside of a fluorescent bulb with a powder, this ultraviolet light can be converted into red, green, and blue light. The result is a fluorescent bulb that lights your garage or workshop.

This can be done with LEDs as well. With an ultraviolet or violet LED packaged inside a phosphor-coated enclosure, you can make a white LED. This is known as a full-conversion white LED.

In 1996, the Nichia Corporation announced the production of white LEDs, and the rest is history. For the past twenty years, the power, efficiency, and brightness of these LEDs have increased. Now, or in the very near future, the default for home lighting won’t be incandescent bulbs, but powerful LEDs consuming a mere fraction of the energy an old Edison bulb would, shifting more of the task of keeping your house warm onto the heater.

Should probably be mentioned that in most (all?) homes the furnace is much more efficient at converting energy into heat than an incandescent lightbulb would be. The net effect of converting to LEDs is still a huge gain in energy efficiency. (Also the heat from the furnace comes out at floor level and rises, while the heat from incandescents starts at the ceiling and stays there. This from long experience doing theater lighting design with incandescent bulbs a decade or so ago.)

Meh. If these things aren’t obvious to a person already then I doubt they can be helped much. Just let it go and natural selection will work it out. Besides, who here doesn’t want to see the article about how some sap turned their house into a giant easy-bake oven?

Things as this aren’t obvious to many, but it would be a mistake to make assumptions. Personally I go ahead and state the facts as I know them, give them the opportunity to counter, anf if it’s obviouse they are cleless I drop it.

Dammit Hackaday it’s well past time to come up with a commenting system that allows us to edit commitments. Because your first attempt was a fail is no reason not to try again until you succeed.

Hear Her!

Sorry, meant to say Hear, Hear!

AMEN!! Hackaday: Make it sew.

Maybe the Hackaday postings are on a blockchain where publications are absolutely irremovable. Just kidding :-)

>” the furnace is much more efficient at converting energy into heat than an incandescent lightbulb would be”

Not really. Your furnace loses a lot of heat up the flue, and also cools down your house by pulling in air from the outside to replace what was drafted up the chimney.

Meanwhile, an electric heater turns 100% of the energy it takes in, into heat. Can’t beat that.

Put the efficiency in terms of £ per BTU of heat output and it’s a gain in favor of proper heating (unless you live somewhere with dirt-cheap electricity). FWIW, when I’m cold in the workshop and don’t fancy running the heating, a 500W halogen work-light on a tripod is a superb comfort. I hope incandescents stick around for a while longer.

My furnace fuel produces CO2 – my electricity comes from hydro and nuclear.

Ever hear of a heat pump?

Space heater?

Modern gas furnaces do neither of these things. They have their own outside air supply, and their heat exchangers are so efficient that they can use PVC pipe for a flue. This design has been in common use for 20+ years here in the US.

Unless you also condense all the water in the flue gasses and bring it down to the starting temperature, you always lose energy. Hence the difference between HHV and LHV of fuels. For natural gas, the maximum efficiency you can get out of a furnace is 91%, and some more loss of energy is necessary to provide the draft (or, to run an exhaust fan).

91% in an ultra-efficient house* is nothing to sneeze at.

*https://www.energy.gov/energysaver/energy-efficient-home-design/ultra-efficient-home-design

@Ostracus: I think that Luke is not talking about the “efficiency” of the house but the “efficiency” of the combustion.

As he said, there is a difference between the Higher Heating Value (HHV) and the Lower Heating Value (LHV, if I’m understanding the abbreviations correctly). Basically, you can’t get all of the energy released by the combustion because some of that energy is used to heat the products. Therefore, the only way to get all the energy of the combustion would be to condense the water and cool it. That way you can get back the sensible enthalpy of the products and the phase change enthalpy of water.

Another way to put it:

Reaction enthalpy (100%) = Energy that you get (91%) + Phase change & sensible enthalpy (9%)

Of course, these percentages depend on the fuel.

(I hope I’m remembering everything correctly)
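For anyone who wants to check the split above, here is a rough sketch. It assumes pure methane as a stand-in for natural gas (real gas varies in composition) and uses approximate heating values of 55.5 and 50.0 MJ/kg, which are figures brought in here and not from the comments.

```python
# Rough HHV vs. LHV comparison for methane (assumed stand-in for natural gas).
# Approximate heating values in MJ per kg of fuel.
HHV_MJ_PER_KG = 55.5  # water vapour in the exhaust condensed, latent heat recovered
LHV_MJ_PER_KG = 50.0  # water leaves the flue as vapour

def non_condensing_limit(hhv: float, lhv: float) -> float:
    """Upper bound on efficiency (HHV basis) when the flue water stays as vapour."""
    return lhv / hhv

limit = non_condensing_limit(HHV_MJ_PER_KG, LHV_MJ_PER_KG)
print(f"Recoverable without condensing: {limit:.1%}")
print(f"Carried away as latent heat:    {1 - limit:.1%}")
```

With these assumed values the limit comes out near 90%, in the same neighbourhood as the 91% quoted above.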

This is exactly what our furnace does, or tries to do. It measures the temperature of the gas running out of the top of the furnace and reduces the gas/air into the burn chamber such that “no heat” comes out the bottom. For this it needs a blower in order to eject gases, as the fresh air is injected at the top and the burned air is pulled from the bottom. The chimney is a double pipe, with the inner pipe containing the exhaust, and the outer pipe containing fresh air, resulting in a contained system not pulling cold air into the house.

Of course it’s not perfectly efficient. I haven’t looked at the values but I believe the air going up the chimney is equal in temperature to the ‘cold water’ coming into the boiler to be heated.

Also, yes it does condense water and collect it — it has a large drain on the bottom of the burn chamber to collect the condensed water and runs that into the drain. It’s on my project todo list to put a rain gauge here.

This was installed in 2005, but the model was not new/special then — I think it actually started production in 1998 or so.

Except that electricity is more expensive, which is why no one in cold northern climates runs pure electric heat in North America. Modern gas furnaces don’t put heat up the flue anymore. They exhaust barely warm gases through a PVC pipe. It does not matter if your furnace is 100% efficient in energy conversion if the energy cost is too high in the first place.

“Meanwhile, an electric heater turns 100% of the energy it takes in, into heat. Can’t beat that.” Heat pumps will give you way more than 100% of their electrical input as heat, since they’re also able to tap energy from the outside air or a geothermal source. And gas-fired furnaces, while not 100% efficient, don’t suffer from the lost efficiency in running a turbine and electrical generator, and transmission losses to the house.

Using a light bulb as a heating element is going to be as power-hungry as a space heater.

>”Meanwhile, an electric heater turns 100% of the energy it takes in, into heat. Can’t beat that.”

Ahhh… but you can. Heat pumps, moving heat from one place to another, have a modest gain over this 100% number.

Actually you can beat that, but not by directly converting electricity into heat, but by using a heat pump. They can move much more heat for the same amount of energy by using a phase change to bring in energy from the outside.
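The efficiency-versus-cost argument running through these replies can be sketched as cost per kWh of delivered heat. Every number below is a hypothetical placeholder (the prices, the 90% furnace, and a coefficient of performance of 3 for the heat pump), so plug in local values before drawing any conclusion.

```python
# Cost of one kWh of delivered heat for three heating options.
# All prices and efficiencies below are hypothetical placeholders.
ELECTRICITY_PRICE = 0.15  # currency units per kWh of electricity (assumed)
GAS_PRICE = 0.05          # currency units per kWh of gas energy (assumed)

def cost_per_kwh_heat(price_per_kwh_input: float, heat_out_per_unit_in: float) -> float:
    """Input energy price divided by heat delivered per unit of input energy."""
    return price_per_kwh_input / heat_out_per_unit_in

options = {
    "resistive heater (or incandescent bulb)": cost_per_kwh_heat(ELECTRICITY_PRICE, 1.0),
    "gas furnace at 90%": cost_per_kwh_heat(GAS_PRICE, 0.90),
    "heat pump, COP 3": cost_per_kwh_heat(ELECTRICITY_PRICE, 3.0),
}

for name, cost in options.items():
    print(f"{name}: {cost:.3f} per kWh of heat")
```

A device can be 100% efficient at turning electricity into heat and still be the most expensive way to heat a room, which is the point several commenters are circling.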

And then there’s cryptocurrency mining that “subsidizes” the cost of electricity, good for apartments where installing a heat pump is not realistic. I remember spinning up a few earnhoney mining VMs to stay warm during the winter…

Some may laugh, but I remember just how warm a room full of computers could get even before GPUs became a thing. Now just one card can put out quite a bit with the proper program.

As is often the case, it’s all relative. For decades now, mobile home central heating units have used a sealed combustion chamber that consumes air drawn from the exterior of the home, so no heated air goes out the flue. There were wall furnaces that used the same design and were installed in many other types of residences. Where I live, electrical power has remained steady at ten cents per unit for 40 years, but gas, even propane, prevails as the energy source of choice. Clearly being more efficient doesn’t translate into affordable. I didn’t mention heat pumps because I don’t know of anyone using them for space heating.

“Meanwhile, an electric heater turns 100% of the energy it takes in, into heat. Can’t beat that.”

Although true one ends up comparing apples to oranges if comparing electric heating to natural gas for instance. The natural gas heating process is not 100% but neither is the process for generating the electricity used by the electric heater. (usually optimal assuming the power plant is well engineered and managed, but not 100%). Now heat pumps, especially those that circulate underground water perform better than both but cost a lot more to set up.

ummm, no?

Every even remotely efficient furnace uses its own air supply (a pipe run from the outside), which apart from better efficiency makes it safer, not to mention that modern condensing furnaces have the exhaust temperature so low, you could comfortably hold your hand over the chimney. Not sure how available they are in the US, but in the EU, it’s very common.

Yes, but if it’s warm and dark, I do not need any heat at all.

What are you? A mushroom?

>”while the heat from incandescents starts at the ceiling and stays there. ”

That’s another common myth and misconception. An incandescent lightbulb is basically an infrared heater. The infrared light is absorbed by the floor and the furniture, so it too heats the room from the floor up.

In fact, it’s somewhat popular these days to have heating cables above the ceiling panels, backed with aluminium reflectors to beam the heat down into the room through the panels, like a low-temperature version of those red glowing heater bars people install on their patios.

>” This from long experience doing theater lighting”

Maybe that’s because theater lighting is typically done with light fixtures that enclose the bulb inside a steel box and put a lens or a gel in front of it, keeping all the IR light inside the box, which gets hot. The hot box then heats the air around it.

A naked incandescent bulb radiates 98% of the heat it produces evenly around it, and with a suitable reflector you can direct almost all the heat down onto the floor. But, if you put a thick glass lens in front, that blocks most of the IR and the lens gets hot instead.

I might be being dumb here but if an incandescent lamp puts most of its heat into the floor, how come you burn your hand if you try to unscrew one that has been on for more than a couple of seconds?

You have, so to speak, interrupted the bullet right at the barrel. In this case, it’s a sort of machine gun. Get thy hand off of the muzzle. Let the bullets fly. Or photons, if you will. But don’t look directly at them or they will change their nature.

Heat and IR radiation are not the same thing. The light bulb will heat the reflector. The reflector will absorb, not reflect, heat.

Yup .. I use a 250 watt halogen work-light for my “space heater” .. the radiant energy heats me up w/o the need to heat up the entire room.

Your incandescent light bulb basically sucks at making the light you want. As you say above, your incandescent light bulb makes infrared, which is not what you want your light bulb to be doing. A light bulb is meant to produce visible light and anything else is an inefficiency. It’s like saying your electric oven in your kitchen is producing light. Well, it is, but it would be better if it wasn’t.

So you mean that my floor should be hotter than touching the light bulb? I’m pretty sure a lot of heat stays up at the ceiling. I’ve only installed thousands of light fixtures and they were all pretty warm. In fact, you have to use special fixtures if they are going to be in direct contact with insulation because they are hot. An incandescent bulb is a pretty bad infrared heater, which is why they make…infrared heaters.

>”The net effect of converting to LEDs is still a huge gain in energy efficiency.”

Household lighting consumes about 15% of peoples’ electricity bills on average, and household electricity bills cover about 15% of the total electricity demand. It’s not really a huge savings.
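Multiplying those two 15% figures out gives a sense of scale. This is a back-of-the-envelope sketch that takes the comment’s numbers at face value; the 60 W to 13 W swap is an assumed example of an 800-lumen bulb replacement, and it treats the share of the bill as a share of energy.

```python
# Share of total electricity demand that goes to household lighting,
# using the two 15% figures quoted in the comment above.
LIGHTING_SHARE_OF_HOUSEHOLD = 0.15
HOUSEHOLD_SHARE_OF_TOTAL = 0.15

lighting_share_of_total = LIGHTING_SHARE_OF_HOUSEHOLD * HOUSEHOLD_SHARE_OF_TOTAL

# Assumed for illustration: every bulb goes from 60 W to 13 W for the same light.
reduction = 1 - 13 / 60

print(f"Lighting share of total demand: {lighting_share_of_total:.2%}")
print(f"Per-bulb reduction:             {reduction:.0%}")
print(f"Cut in total demand:            {lighting_share_of_total * reduction:.2%}")
```

On these assumptions household lighting is a little over 2% of total demand, so even a large per-bulb saving moves the total by less than 2%.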

And if you wanted to save electricity, long fluorescent tubes have greater lm/W figures and better light quality compared to the common LED offerings, at a lower price. The main point of the LEDs is replacing the common lightbulb, which was becoming too cheap to manufacture and too cheap to sell, so Philips and pals lobbied a global ban on it.

Well, there is durability in places where fluorescents would be difficult to change.

There are types of heavy-duty fluorescent bulbs that outlast LEDs.

Especially since in most LED products, there’s an electrolytic capacitor that cooks well before the diode itself.

Then again, the actual lifespan of LEDs depends on how much you allow the light output and efficiency to drop. Some manufacturers count to 70%, others to 50%.

It’s almost never the LED, but the power supply that fails.

Those fluorescent tubes have a lot of hazardous materials in them, which is why there’s the push to LEDs.

Some houses use electric heat, as well, in which case, the efficiency is identical, other than a small amount that leaves through the windows as visible light.

If you have electric heat, and it’s cold enough that it’s running regularly, there’s no real advantage to saving energy on your lighting.

Of course, in the summer, when everyone’s running their air conditioners, that lighting inefficiency counts double, since you’re using more energy to produce the light, and then even more to pump that heat outside.

Wrong. Not all electric heaters are equally efficient which is why you don’t see electric heaters using incandescent bulbs. Do you read what you are saying? Are you telling me an incandescent light bulb is just as energy efficient as an electric furnace? If your incandescent light bulb is designed to emit visible photons then it cannot be as efficient as an electric heating element consuming the same power and not emitting light.

Efficient LEDs are a great thing but I wish the FCC would enforce their own rules regarding unintentional radiators on their manufacturers and importers. We are replacing lights that make more heat than light with ones that produce radio waves instead!

The FAA doesn’t care about emissions below 450 kHz, which many LED drivers don’t operate above.

That doesn’t mean they can’t puke all over the spectrum above their switching frequency. The EU is looking into LED lamps because they are causing interference with VHF signals…

I can’t use LED bulbs in my garage door opener. The interference they produce “jams” the receiver, so when they’re lit, the door won’t always close until they go out and you hit the remote. If you’re in the driveway, they’re unpredictable; on the curb, they just don’t work. I wrote an article about this at:

http://www.buchanan1.net/chamberlain.html

Yes, I have also observed this. An LED lamp with resistors or a linear driver could have been an option, but I used a “staircase light” (timer) relay and connected a control output of the opener box to it and that to the normal ceiling lights.

That addressed the main reason for using an LED bulb there: We wanted to increase the light output by changing from that faint orange glowing 24V incandescent lamp to proper LEDs. But the cheap Chinese LED bulb did not help much in this. It had only 5W instead of the 15W or 25W the incandescent bulb had and flickered like hell on the 24V AC supply, although it was rated for AC and an electrolytic cap was visible.

Actually, the FAA doesn’t care about emissions below 450 feet AGL… correct units are important.

I’m not a radio guy at all, but could these “leaky” LEDs be picked up for short range passive radar?

Long wavelength = shitty resolution; that’s why all jet fighter radars work in the 10GHz range, and short-range anti-missile stuff even higher, despite having to pay dearly for that choice…

“Now, or in the very near future, the default for home lighting won’t be incandescent bulbs, but powerful LEDs consuming a mere fraction of the energy an old Edison bulb would, shifting more of the task of keeping your house warm onto the heater.”

Possibly serving the role of lighting and communications. Never mind lower temperatures allow for more freedom in lighting design.

Note that the different color LEDs each are made from different materials. If you look at an RGB LED under a microscope, you’ll see leads going to 3 separate “chips”. This is why you can’t make a single semiconductor wafer that emits all 3 colors, since each color requires a different base material. This is why we use OLEDs instead of “regular” LEDs in displays: it’s easier to deposit into a fine pattern the liquid OLED material (and let it dry) than it is to deposit the solid semiconductor materials of regular LEDs.

Oh the irony!

No offense to [Benchoff] but …

People who are colorblind study color extensively. People who are not colorblind just take it for granted.

Quote: “A whiter white”! I never heard a truer truth lol, It’s called color temperature.

Quote: “if you mix red, green, and blue light, you will get white light”. Sure if there is a medium to mix the colors (which is very complex) but in this case there is no medium so you get red, green and blue (not white) but human eyes will perceive this combination of colors to be white only if the red, green and blue match closely with the centers of the sensitivity of the cone structures in their eyes.

Quote: “they’re more efficient at producing light. Of course, that means that incandescent light bulbs are more efficient at producing heat”

You might want to check that. LEDs create a lot of heat. One does not necessarily translate to the other.

Heat produced is just whatever portion of input power hasn’t been converted to light. LEDs produce waste heat, yes, but nowhere near as much as a hot tungsten filament. Compare an 800-lumen LED bulb at 13 watts to an 800-lumen incandescent bulb at 60 watts; I don’t know what the exact efficiencies are, but the LED is producing a fraction as many watts of waste heat.
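The comparison above works out as follows. This sketch only uses the lumen and watt figures from the comment; it does not try to estimate how much of each bulb’s input becomes visible light, since that depends on the spectrum.

```python
# Luminous efficacy and an upper bound on heat for the two 800-lumen bulbs above.
def efficacy_lm_per_w(lumens: float, watts: float) -> float:
    """Lumens of light output per watt of electrical input."""
    return lumens / watts

led = efficacy_lm_per_w(800, 13)
incandescent = efficacy_lm_per_w(800, 60)

print(f"LED:          {led:.1f} lm/W")
print(f"Incandescent: {incandescent:.1f} lm/W")

# Even if every input watt ended up as heat, the LED bulb cannot exceed 13 W,
# so the incandescent bulb has at least 47 W more power available to become heat.
print(f"Difference in input power: {60 - 13} W")
```

Whatever the exact conversion efficiencies are, the LED bulb simply has far fewer watts going in, so it has far fewer watts to lose as heat.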

On the other hand, LEDs are much more vulnerable to the heat since they’re not radiating the waste away.

In the mean while:

http://www.sciencemag.org/news/2016/01/how-get-old-fashioned-light-bulb-glow-without-wasting-so-much-energy

IR-reflecting glass recycles the heat back to the filament and raises incandescent filament efficacy up to 40 lm/W.

The common “white” LEDs with the ballast circuitry losses come around 65 lm/W.

LEDs are more vulnerable to heat because the properties of doped semiconductors vary greatly with temperature. At 200 degrees C most semiconductor diodes stop working as diodes.

Incandescent lamps can’t even work without the filament being hot. The maximum ambient temperature is limited by the parts that make up the lamp: glass, tungsten, copper, solder, etc..

Quote: “The energy consumed by a 100-watt GLS incandescent bulb produces around 12% heat, 83% IR and only 5% visible light. In contrast, a typical LED might produce 15% visible light and 85% heat”

https://www.ledsmagazine.com/articles/2005/05/fact-or-fiction-leds-don-t-produce-heat.html

Best technology LEDs are now over 50% efficient at turning electric power into light power. LED lamps that can be bought at a hardware store are nowhere near that good, but things have improved from your 2005 citation.

IR in this context has to be counted as heat.

You can also get light out of a 1N4148 / 1N914 with enough current … for a short time!

Same with two carbon electrodes with enough power.

Yes, we also call those Sound Emitting Diodes (SED’s).

Just before they transform into Dark Emitting Diodes (DEDs)

That cracked me up lol. When I see a car park accident, I call it acoustic parking.

Students of the Braille driving method.

Smoke emitting diodes

I’d suggest making a musical instrument, but it might get a wee bit expensive, and the FCC might get a bit pissed.

A 1N4148 or 1N914 can also carry 100 amps… for about a microsecond.

Fluorescent lights are history; nobody is installing them anymore. I have gotten rid of most in my house, and as the ones in the basement go they will be changed out.

Blue LEDs did exist before GaN – Silicon Carbide blue LEDs were briefly a thing, but expensive and not very bright.

I came here to say this. When I got married in December of 1991, the “Something New” and “Something Blue” my wife carried was a garter into which I had wired one of these Silicon Carbide LEDs and a small battery. We were the toast of our nerdy friends.

:) !!

One vital thing to keep in mind is the quality of the light, as explained so well here: https://lumicrest.com/cri-quality-of-light-explained/ (I have a 50W(!) ‘white’ light that is useless because it tinges everything a horrible purple. I may not need to spend $160 for Lumicrest LED strips, but I am getting $40 ones that have a CRI of 95+.)

That may be a UV band area and may not be healthy for eyes.

That is something that I am concerned about as well.

No, it’s probably just a shitty phosphor, cheap or bad mix. Take a CDR as a (reflective) diffraction grating and look at the spectrum. You will for sure see a bright peak in the usual blue region. UV LEDs are much more expensive than ordinary blue chips. Why should anybody use them for ordinary lighting?

IIRC Nichia literally cracked the problem of making blue LEDs. They were attempting to make side emitting blue LASER diodes but the dies kept cracking and not producing a beam. Then one of the researchers noticed while examining a failed diode that the cracks on top were *glowing blue*. He had an ah-ha moment, what if they deliberately induced more cracking to increase the light output? Their failed blue LASER became blue LEDs.

Of course he didn’t get rich off it because he was an employee of the company.

One of the startups that came from our makerspace makes LED light fixtures with a very specific light frequency profile. In addition to ‘white’ light, it has a narrow peak in the near UV that kills off viruses and bacteria on the surfaces it illuminates without giving you skin cancer or cataracts.

They have done Very Well. We are quite proud of them.

If it is enough UV to kill off viruses and bacteria, Then it is dangerous to ANY living being!

The Sun kills viruses and bacteria the same way. I’d have to see the numbers but I’d be willing to bet that the surface cleansing LEDs are less dangerous than the sun by orders of magnitude. Humans have a thick layer of dead skin cells to protect them from mild UV but most bacteria and viruses have no such protection.

No, for proper germicidal action you need “hard” UVC light, like 254nm mercury vapor light. That gives you sunburn and damages your eyes. The long exposure needed for sunlight, with its mostly UVA and UVB (at sea level), to kill germs will also give you sunburn and accelerated skin aging.

I’m sure there’s plenty of 254nm radiation to go around with Sunlight, especially for the very long duration that things go through being left outside constantly. We are dealing with black-body radiation which covers all frequencies. (minus whatever the atmosphere absorbs) Fast?..no, but it gets there. It’s amazing how much degradation solar UV can cause to materials given even just a few months. (Imagine what Elon Musk’s first roadster must look like now) The Sun destroyed the LCD display on the power meter on the side of my house making the meter unreadable. I had the utility company replace it and then proceeded to protect the new one by covering it with a plastic Folgers coffee can. In a month or two, what was red had become pink and after a bit later what was resilient was falling apart. It’s all solar UV at work here. I’m sure that dosage could kill almost any bacteria growing on that can. :-) (I miss the metal Folgers cans. They were more useful after the coffee is gone. – …perhaps Master Chef?…the transition would feel like betrayal…a loyal Folgers customer/addict speaking ) …My coffee cup is empty!! Must fix!!……

[foxpup]

I’m still keeping a couple of the plastic Folgers cans to (someday!) attach to an old ice cream stirrer as a parts cleaner/tumbler. The handle indents will hopefully act as stirring paddles. Even if the cans have a short life in that use, they are plentiful.

They certainly are plentiful around my place. (hope your ice cream project goes well for you :-) ) I’ve got a bunch of them filled with failed popcorn kernels that I plan to grind up and feed the birds/squirrels with. I hate to waste all that food. (I’m not making nearly as many now though since I repaired the kettle on my glass sided popcorn popper – up to a point, hotter is better) Now there’s a context where LED lighting is stupid, inside popcorn poppers. The light bulb is both a light source and a heater. (and hermetically sealed which is needed with all that cooking oil getting everywhere)…lava lamps too

I’ve done many projects with long LED light strips with 300 SMD chips on each 5 meter roll. You can build some great stuff with them but you really aren’t saving much energy. Those suckers are less efficient than they should be. They are designed to take 12V/24V on each color “channel” which requires compensating resistors throughout. The heat of the resistors and the inefficiency in the LED can be a heat problem with the wrong design. In fact you sometimes need a current limiting power supply to avoid thermal runaway which is a real problem with semiconductors. If it was up to me I’d have strips with no resistors and apply appropriate voltages to each channel and make it more efficient. I guess I could short out all the resistors on the strips I buy and do just that, but that does not sound fun since there are as many resistors as there are SMD LED chips. …not my idea of a good time. :-) If you didn’t have the resistors you could pack LEDs in more tightly and bring it up to 500 or more SMD chips on a 5 meter roll (might get hot) or leave it at 300 and make it possible to trim the tape at each LED chip.
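How much a strip’s resistors cost in efficiency can be estimated with Ohm’s law. The sketch below is built on assumptions, not on any particular strip: a 12 V supply, three LEDs in series per segment, 3.0 V forward voltage each, and a 20 mA target current.

```python
# Share of input power burned in the series resistor of one LED strip segment.
# Assumed values (not from any datasheet): 12 V supply, three LEDs in series,
# 3.0 V forward voltage each, 20 mA target current.
SUPPLY_V = 12.0
LEDS_IN_SERIES = 3
FORWARD_V = 3.0
TARGET_A = 0.020

def series_resistor_ohms(supply_v, n_leds, forward_v, current_a):
    """Resistor that drops the leftover voltage at the target current."""
    return (supply_v - n_leds * forward_v) / current_a

def resistor_loss_fraction(supply_v, n_leds, forward_v):
    """Fraction of the segment's input power dissipated in the resistor."""
    return (supply_v - n_leds * forward_v) / supply_v

r = series_resistor_ohms(SUPPLY_V, LEDS_IN_SERIES, FORWARD_V, TARGET_A)
loss = resistor_loss_fraction(SUPPLY_V, LEDS_IN_SERIES, FORWARD_V)
print(f"Resistor: {r:.0f} ohms, loss: {loss:.0%} of input power")
```

Under these assumptions a quarter of the power never reaches the LEDs. A lower forward voltage (red LEDs, for instance) would leave more voltage across the resistor and push the loss higher.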

LED’s should be current limited and not voltage.

They’re not linear when it comes to voltage.

“LED’s should be current limited and not voltage.” Yes, every time to do it properly, but how many readers here haven’t taken to heart what 330 ohm resistors are most likely used for? At least for me, I think 5V source, red LED, apply 330 ohm resistor and it works. As long as the current is way below that thermal runaway tipping point you can get away with going cheap. As for eliminating resistors in the long LED strips, yes I would end up doing current limiting. I just didn’t mention it in the spirit of brevity. :-) I wonder how many readers here learned that rule by working with LED Lasers that absolutely demand current limiting.
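The 5 V and 330 ohm rule of thumb is easy to check. The forward voltage of about 2.0 V for a red LED is an assumed typical value here; the real figure depends on the part and the current.

```python
# Current through an LED with a series resistor: I = (V_supply - V_forward) / R.
# The forward voltage is an assumed typical value for a red LED.
def led_current_ma(supply_v: float, forward_v: float, resistor_ohms: float) -> float:
    """LED current in milliamps for a simple series-resistor circuit."""
    return (supply_v - forward_v) / resistor_ohms * 1000

current = led_current_ma(supply_v=5.0, forward_v=2.0, resistor_ohms=330)
print(f"{current:.1f} mA")

# If the forward voltage drifts by 0.1 V either way, the current barely moves,
# which is why the resistor approach is forgiving.
for vf in (1.9, 2.0, 2.1):
    print(f"Vf = {vf:.1f} V -> {led_current_ma(5.0, vf, 330):.2f} mA")
```

Roughly 9 mA is comfortably below the 20 mA that many indicator LEDs are rated for, which is why this combination tends to just work.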

When visible laser diodes became available to hobbyists in the early ’90’s (~$80 each) the “rule of thumb” was,

“buy 3, the first 2 you’ll destroy stupidly, with the 3rd one you’ll follow the application notes for the power to supply”.

Which is not good, when these diodes were €3000,- blue laser diodes from Nichia.

…please tell me you didn’t?…… :-)

Many cheap Chinese LEDs like those in the main article picture have suffered a premature death from my monkeying around and believing that voltage regulating without a series resistor is good enough.

Thankfully they’re a dime a dozen and the lesson was eventually learned.

This is a technicality vs practicality thing.

Yes current limiting should always be used to at least compensate for the change in junction voltage that results from changes in temperature.

However the series resistors still need to be used for a practical reason.

You could simply series all the LEDs without resistors and use a current regulated supply. The downside of this configuration is that one dead LED (O/C) will result in none of the LEDs working.

The solution is to put all the LEDs in parallel. That introduces a different problem. As the junction voltage varies between LEDs, in this configuration (without resistors) all the LEDs will have different intensities and some will not function at all.

That is why there needs to be individual current regulation for each LED and hence the resistors. Resistors are the cheapest solution.
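A rough way to put numbers on the parallel problem, as a sketch only: it uses the ideal Shockley diode equation, an assumed ideality factor of 2, and ignores the LED’s internal series resistance (which softens the effect in real parts). The function names and the 50 mV mismatch are invented for illustration.

```python
import math

THERMAL_VOLTAGE = 0.02585  # kT/q in volts at roughly 300 K

def parallel_current_ratio(delta_vf, ideality=2.0):
    """Current ratio of two LEDs wired directly in parallel, when their
    forward voltages at the same test current differ by delta_vf volts.
    Ideal Shockley model; the ideality factor is an assumed value."""
    return math.exp(delta_vf / (ideality * THERMAL_VOLTAGE))

def resistor_current_ratio(supply_v, vf_low, vf_high):
    """Current ratio when each LED has its own, equal series resistor,
    treating each forward voltage as a fixed drop (a simplification)."""
    return (supply_v - vf_low) / (supply_v - vf_high)

mismatch_v = 0.05  # hypothetical 50 mV spread between two LEDs
print(f"no resistors:   {parallel_current_ratio(mismatch_v):.2f}x")
print(f"with resistors: {resistor_current_ratio(5.0, 2.0, 2.0 + mismatch_v):.2f}x")
```

Under these assumptions a 50 mV spread lets one LED hog more than twice the current of its neighbor when they are hard-paralleled, while individual resistors on a 5 V supply keep the two within a few percent of each other.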

Producing white with RGB is also only about half as efficient as modern white LEDs. And you have a bad color rendition, as they have a gap in the spectrum, where yellow should be. That’s the reason why RGB-amber and RGB-white LEDs exist.

“That’s the reason why RGB-amber and RGB-white LEDs exist.” I’ve seen those and they have seemed interesting to me but controllers aren’t wonderfully cheap like the standard RGB ones and cost is a big deal with most of my projects. :-) I’ll keep watching the market. Perhaps I’ll find cheaper controllers or do something crazy like make my own. I can certainly understand why that fourth color would be useful….lots of options…fun stuff actually :-)

FYI, a number of birds have a 4th type of cone in their eyes, which detects a different frequency of blue.

errr…

“cone cell”

LEDs are great replacements for incandescent lights, but my lava lamp has stopped working.

if you use enough power, the light alone will make it work again…just not sure you’ll be able to look at it :D

What happened to the CFL? I was trying to buy some big ones (~55W) to use in a pole barn with standard light sockets, but the good brands are gone, and all the LEDs that produce that much light use more power!

Was hoping to read something about the longevity or useable lifespan of white LEDs. I recall reading somewhere that there are inferior white LEDs whose phosphors will degrade long before the LED diode is done.

As others have observed, the LEDs running from AC often expire early due to the circuitry, not the LED itself.

in real life use, my incandescent GU5.3 lights and even some common bulbs have systematically outlasted the LEDs, and light quality is a lot better.

i use dozens of leds but they have their own limitations and quirks. neon lights are imho superior in many respect, and very cheap. for some apps. CFL are also very good. but disappearing from the market.

no point in enforcing by law an extremist view of the matter like governments have done. there are applications where LEDs have an edge, others where they suck.

im stuck with a very poor lighting in my old house, as the electric plant was designed for incandescent lights and LEDs of suitable power keep failing and are poorly placed for efficient light distribution. rewiring the house would cost an arm and a leg.

it’s true that most often it’s not the LED failing but the PSU. but they can’t be fixed.

2019, and living in darkness….

In my shop, I use quite a lot of white LEDs (most spotlights use 9x3W LEDs) and can save a lot of power, but these LEDs have never reached their advertised 50.000 hours of MTBF, because most of the spotlights have 9×3W LEDs in series, so the chain only gets about 50.000 h/9. I am repairing most spotlights myself and need about 200 pcs per month…
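The divide-by-9 figure holds under specific assumptions: every LED fails independently at a constant rate (exponential lifetimes) and one open LED kills the whole series string. A minimal sketch under those assumptions, with a small simulation as a cross-check; the function names are made up for illustration.

```python
import random

def series_mtbf_hours(unit_mtbf_hours, led_count):
    """MTBF of a series string where any single failure kills the string.
    Assumes independent LEDs with constant failure rates, so rates add."""
    return unit_mtbf_hours / led_count

def simulate_series_mtbf(unit_mtbf_hours, led_count, trials=20000, seed=1):
    """Monte Carlo check: average time until the first LED in the string dies."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # The string lasts only as long as its shortest-lived LED.
        total += min(rng.expovariate(1.0 / unit_mtbf_hours)
                     for _ in range(led_count))
    return total / trials

print(f"formula:    {series_mtbf_hours(50000, 9):.0f} h")
print(f"simulation: {simulate_series_mtbf(50000, 9):.0f} h")
```

Real LEDs mostly wear out rather than fail at a constant rate, so treat this as a ballpark for the string, not a prediction for any particular spotlight.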

LEDs dimming and failing too soon….I think most products overdrive their LEDs to meet brightness specs at the expense of their lifetimes. If we brought back the current 33% and increased the number of LEDs by 50% we’d get the same brightness, more nicely diffused and much, much, longer MTBF. Heat is also a huge killer that can be dealt with this way. It’s all in the engineering and the will not to just go cheap chasing only short term specs and price. LED light bulbs are the most stupid of LED products. SMD LED’s are happiest spread out over a large surface with lots of room to cast off heat. The light is more diffused and one may not even need current regulation if confidence in cooling is practical. It all makes so much more sense than cramming a bunch of LED’s tight in close to each other, overdrive them, and hope all the heat doesn’t kill the unit before it can be returned.
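The 33%/50% arithmetic checks out if you read “33%” as one third and assume light output scales linearly with current (a simplification). A quick sketch, with a made-up helper name:

```python
from fractions import Fraction

def derated_design(current_cut, extra_leds):
    """Relative per-LED current, LED count, and total light output,
    assuming light output is proportional to drive current."""
    per_led_current = 1 - current_cut
    led_count = 1 + extra_leds
    return {
        "per_led_current": per_led_current,
        "led_count": led_count,
        "total_light": per_led_current * led_count,
    }

# Cut the current by one third, add half again as many LEDs
design = derated_design(Fraction(1, 3), Fraction(1, 2))
for name, value in design.items():
    print(f"{name}: {value}")
```

Each LED then runs at two thirds of its original current, so (treating the forward voltage as constant) each one also dissipates roughly two thirds of the heat, while the total light stays the same.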

” SMD LED’s are happiest spread out over a large surface with lots of room to cast off heat. ”

Kitchen lights are like that. Attached to a big metal plate. Still going strong. Edge lit fixture by a strip, not so much.

Really sucks trying to see what color an LED traffic signal is during a snowstorm. I wonder if the reduced energy costs make up for the road workers who get to use a broom to clean them off.

The old-fashion incandescent traffic signals never had these issues.

(gosh darn it, needed the missing edit feature)

This guy covers the LED traffic light problem in extreme detail. Snow can be quite a problem. This is why I’m wary of any company’s products whose engineering department is a place where snow is rare/absent and their products have to be used outside. (Tesla Motors, I’m looking at you. – strangely, I feel pretty confident their products are OK in the winter. I know a few happy owners up here in Nebraska which rhymes with Alaska but isn’t nearly as cold – most of the time, but our traffic lights do sometimes get caked over with wet snow)

https://www.youtube.com/watch?v=GiYO1TObNz8

That’s not the worst feature of LEDs being used for traffic.

The old incandescent indicators used a color filter and white light at a lower color temperature – probably about 3500 to 4000 kelvin.

In those days everything including road signage was deliberately made so that color blind people had no trouble seeing or interpreting them. Even the resistor color code was also a grey scale to colorblind people so that they could read resistors too … until China started making them.

Filtered white light provides a color of one specific frequency or extremely narrow band. Which in that case was a color that was detectable by colorblind people.

On the other hand LEDs mix frequencies so that people of normal color perception perceive the intended color and unfortunately colorblind people don’t see the color or even worse can’t see the illumination at all.

In my case there was only one model car with a strip of separate LEDs in an arc for the front indicator that I couldn’t see at all in daylight to start with. Now there are many models of cars that have LED indicators that I can’t see in daylight.

People of normal color perception generally refuse to become involved with this issue in an attempt to effect change within the manufacturing industry.

Instead they simply shift the blame onto colorblind people (10% of the population).

Colorblind people can’t fix the problem by correcting their personal biology so they have to find another solution.

In my case the solution has been a large 4WD with a large bull-bar and insurance. It’s not an ideal solution by any means. It’s simply that it’s the best solution “available” to me.

Now that’s a new one for me. I’ve never heard of anyone using that excuse to buy one of those wide-arse, loud, smelly, FTW-mobiles. I drive a quick nimble electric in the small town that also hosts the world’s largest pickup dealership. I often feel like a border-collie among huge, smelly, loud cows. Plus, nothing destroys the usefulness of a pickup like jacking it up so high you can’t load or unload it. There must be a better solution for the color blind. (augmented reality with special overlay of content the viewer would normally miss?) Color blindness is a real issue for many people. I remember my mom shopping for and choosing clothes for my dad to wear. Then there’s the time my Dad ordered a blue Chevy and they shipped him a green one which he kept anyway. Now that was a real FTW-mobile, big old ’70s station wagon with a V8 where the 8 meant 8mpg. :-) Color blindness stinks and you can’t fix it by using the other hand like left-handers can. What stinks even more is people’s refusal to acknowledge it. (Come to think of it, it all makes sense considering my Dad’s favorite car color is Blue considering he has Red-Green color blindness. That way he can actually see his car for the color it actually is. :-) )

We had a solution before. Now it’s that people are no longer interested in assisting colorblind people.

For the curious that have LED indicators just get an old yellow/orange (or whatever color it is) indicator lens and hold it over your LED indicator. If you can’t see the LED flash through the lens (or it’s very dull) then a colorblind person can’t see it either.

All my cars have been white or grey. One car I ordered from a (color) sales brochure without knowing what color it was and it turned out to be grey.

That reminds me of the closing scene in the movie “Up!”.

The old man and the boy are sitting on the curb and counting the cars by their color. “Red one!”,

“Blue one!” and the talking dog calls out “Gray one!” for each car!

B^)

I had the same thought and you said it. :-) It was a pleasant final scene for the movie. :-)

Even lower for the color temp; the 116W bulbs come in at 2850 K.

Move to Boston.

You’ll be a completely average driver, nobody uses indicators, so nobody can see them.

Massholes ‘block’ if you indicate, even if it forces them into an exit only lane they don’t want. They’ll just cut you off coming back at the absolutely last inch.

To be fair to massholes: It’s hard to signal while flipping someone off with one hand, drinking a beer with the other and steering with a knee.

Who invented the process that made a blue LED possible? Herb Maruska at RCA in 1972. https://spectrum.ieee.org/rcas-forgotten-work-on-the-blue-led

The three guys who got the Nobel Prize for physics made it more efficient and mass producible at low cost.

“Now, or in the very near future, the default for home lighting won’t be incandescent bulbs, but powerful LEDs”

Hi, from the future. You were correct. As of today, August 1st 2023, shoppers in the United States will no longer be able to purchase most incandescent bulbs. And yes, it was a complete coincidence that I ended up here, today. I was going down a rabbit hole researching the first commercially available LED, made by Monsanto.

Incandescent ‘heat bulbs’ will still be available.

100% efficient, it’s the law.

This article fails to mention the true inventor of the blue LED and white LED, who was Dr. Shuji Nakamura.