For the vast majority of us, Gigabit Ethernet is more than enough for daily tasks. The occasional big network file transfer might drag a little, but it’s rare to fall short of bandwidth when you’re hooked up over Cat 6. [Brian] has a thirst for saturating network links, however, and decided only 10 Gigabit Ethernet would do.

Already being the owner of a Gigabit Ethernet network at home, [Brian] found that he was now regularly able to saturate the links with his existing hardware. With a desire to run intensive virtual machines on his existing NAS without causing bandwidth issues, it was time for an upgrade. Unfortunately, the cost of rewiring the existing home network to Cat 6 and procuring hardware that could run 10 Gigabit Ethernet over copper twisted pair was prohibitively expensive.

Instead, [Brian] decided to reduce the scope to connecting just 3 machines. Switches were prohibitively expensive, so each computer was fitted with twin 10 Gigabit interfaces, such that it could talk to the two other computers. Rather than rely on twisted pair, the interfaces chosen use the SFP+ standard, in which the network cable accepts electrical signals from the interface, and contains a fiber optic transceiver.

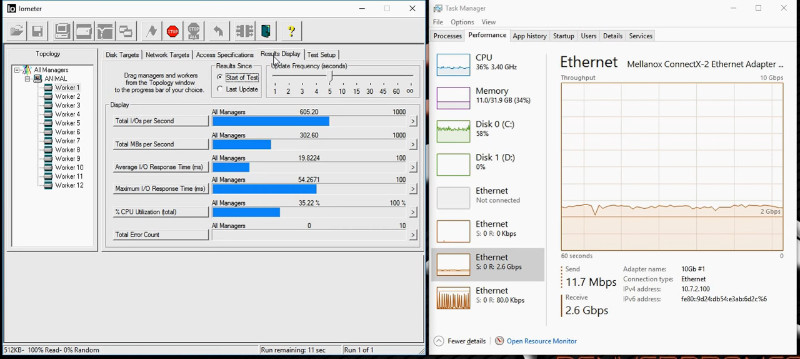

[Brian] was able to get the 3 computers networked for just $120, with parts sourced from eBay. It’s an approach that doesn’t scale well; larger setups would be much better served by using a switch and a less zany network topology. But for [Brian], it works just fine, and allows his NAS to outperform a 15,000 RPM server hard disk as far as read rates go.
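To put a number on why this approach doesn't scale, here is a minimal sketch of the arithmetic for a switchless setup where every machine links directly to every other one. The function name and the dictionary keys are made up for illustration; the only facts taken from the build are that 3 machines need two ports each.

```python
def full_mesh_requirements(machines: int) -> dict:
    """Ports and cables needed to link every pair of machines directly.

    Hypothetical helper for illustration: it assumes one dedicated
    point-to-point cable per pair of machines and no switch.
    """
    if machines < 2:
        raise ValueError("need at least two machines")
    return {
        "machines": machines,
        # Each machine needs one port for every other machine.
        "ports_per_machine": machines - 1,
        # One cable per unordered pair of machines.
        "cables": machines * (machines - 1) // 2,
    }


if __name__ == "__main__":
    for n in (3, 4, 6, 8):
        print(full_mesh_requirements(n))
```

With three machines that works out to two ports each and three cables, which matches the build. By eight machines each box would need seven ports, which is where a switch starts to look sensible.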

If you’re curious about improving your own network performance, it might pay to look at your cables first – things are not always as they seem.

“Guy buys some equipment and plugs it into his computer”

hackerman.jpg

Seriously, what is this?

Yeah, I do agree on that part. How is buying stuff and plugging it in a hack?

He just…networked stuff…with better cables than he used to have before that. Damn, I do that shit for a living.

It reminded me of an article I read a few years ago where the guy just bought cards and went point to point because switches were so expensive back then.

Imagine my surprise to learn that it’s the same article from 3 years ago.

No kidding, a story on a 3 year old article, must be a slow news day

4x SFP+ & 1Gb LAN Mikrotik switch (CRS305-1G-4S+IN) is about 120 EUR without VAT. I would not call this prohibitively expensive. It has 82Gbps switching capacity. Well within requirements.

did u read the article?

Yes. Still, if he limits himself to only NAS, server and his computer, he can use cheaper one interface network cards, connect the computers to switch and connect the switch with 1Gb Network. All 3 computers are practically next to each other, so re-cabling is not necessary. This way he would have much simpler network.

10 gigabit EtherWave should be a thing. There are dual port 10 gig network cards. Any way to configure them like the old Farallon product?

EtherWave* could daisy chain up to 7 PC or Mac computers off a single port on a 10 Base-T hub, switch, or router. On the Mac side 10 megabit was only possible with NuBus, PDS, or PCI EtherWave adapters. The printer port (serial) adapters were limited to 690 kilobit.

*Google insists you must be looking for Moog Etherwave Theremins or accessories, or various other things named Etherwave or Ether Wave so add Farallon and -theremin to somewhat trim down the useless results.

Brian’s post is about three years old, and pricing has changed a lot since then. I remember shopping for components with him. We were able to find some used gigabit switches with one or two 10 gbps uplink ports for a few hundred bucks, but I recall even small 10 gigabit switches starting at over $1,000.

At the time, I went a different route than Brian. I only had two machines that needed a network performance boost, so I ordered a pair of 2-port 20 gbps Infiniband cards and a CX4 cable for about $120. In practice, it isn’t any faster than Brian’s setup, because I’m hitting the limits of PCIe on one end of the connection.

At the time, I thought Infiniband was interesting, because 8-port 20 gbps Infiniband switches were around $100 used. That aspect is less interesting today, because 10gbe switches have gotten so cheap, except that even faster Infiniband hardware has dropped in price over the last three years.

I imagine you could replicate my Infiniband setup today with a newer generation of hardware that’s twice as fast as my gear for roughly the same cost. Of course, Infiniband comes with its own disadvantages that the average person wouldn’t want to have to deal with!

I also have some D-Link DGS-1510 which have 2xSFP+ and loads of gigabit ports. I haven’t actually linked the 10Gb part just because I haven’t got around to it but these managed switches come up for not much on eBay and work great.

Y’know you can buy Mellanox CX3 cards on eBay for $20 each, right? And they have working drivers for almost every version of Windows or Linux? And you can run them as IB native at 40Gb/s or run them by VPI at 32Gb/s?… And the cables are about $5-10 …

Well, I paired a set and now have a home network that can crank out 64Gb/s transfers doing SMB multipathed. Bit pointless though…Hard to keep the lines saturated for that long.

“Where’s your article?”

:-/

Maybe Mike, unlike our editors, thinks *buying* stuff to do what it is supposed to do isn’t hack worthy.

It literally is:

Buy cards.

Buy cables.

Plug in cards into PCs.

Plug cables in.

???

Profit

Mellanox CX for $20? I just checked and I found two different ones are about 30€…and they’re both defective and have 20€ in shipping-fees as well. The non-defective ones begin at 100€.

Don’t think 20 bucks is a regular offer, but I got two CX3 (311A, so single port) cards off eBay NL for 55€ exactly 12 months ago. 1.5m of FS DAC cable was another 17€, so connecting selected machines via 10GbE or even faster isn’t all that expensive for quite some time now. Running mine on Solaris, which would not be possible with cheapo 10GBASE-T cards like the Tehuti ones.

Eh… there are tons of Mellanox cards out there that work great. I set up two computers, with 2 mellanox cards and a cable, and now I have a 40Gbit/s link between them. Total was $80, and that was several years ago.

“Rather than rely on twisted pair, the interfaces chosen use the SFP+ standard, in which the network cable accepts electrical signals from the interface, and contains a fiber optic transceiver.”

Fiber optic? Which part of the word “Copper” in “SFP+ Direct Attach Copper (10GBSFP+Cu).” was the complicated one?

Brilliant

Came to say the same. There is absolutely nothing optical here. It’s directly over copper.

That would be a hack though, optical with those transceivers.

It was badly written. The SFP+ standard does not dictate media-type, so there is indeed no reliance on twisted pair. As such, it also doesn’t mandate optical fiber; Direct Attach Copper is cost-competitive for distances less than 7m. Longer than that and you need to select your transceivers and fiber according to the distance.

You can get older Cisco Nexus models (eg 5020) for under $200 on ebay now. With redundant hot-swap PSUs and fans, and a 1Tb/s backplane, it’ll be more switch than you’ll ever need at home. It’s big and loud though, not exactly bedroom-friendly.

you guys beat me to it ..

I don’t see where a normal person would need more than 100Mbit.

I upgraded my home network to 1Gb/s only because my DSL got upgraded to 100Mb/s|40Mb/s (runs at 107|44 yay) and my network gave a slight bottleneck. Apart from big downloads there is nothing to notice.

And the only thing I’ve seen 1Gb/s too slow was with Software Defined Radio.

You’re right. Your average person doesn’t need more than 100Mb. Your average person has no idea what linux is, wonders if SDR is FDR’s unmentioned brother, and thinks the cloud is magic, not someone else’s computer. Not to mention your average person probably thinks everyone who reads hackaday must be criminals because hack is in the name.

Internet != Intranet.

E.g. if you’re doing stuff with videos and storing them on a NAS, then 1Gbit isn’t going to cut it at some point.

To put it simple: Your use case is not THE use case. Other people, other requirements.

for Internet stuff in regional Australia 10Mbit will do you but internal network with only a couple of computers I max out 100Mbit easily and often, need to upgrade Gigabit soon…

Backing up to a NAS is the biggest consumer.

You think backups to a NAS server are slow, try running ordinary, everyday system backups on an IBM midrange system. Be it a 9370, AS400, or even an ES9000, tell the wife you’ll be home late (again), & you’ll microwave dinner when you (eventually) get home.

Try running rsync to a USB memory stick to a WRT54 class router. :P Between weak CPU, crappy Broadcom USB implementation it is really slow. It saved my bacon half a dozen times over the years. Upgraded to a dual core ARM router a few years ago because the dual band won’t interfere with bluetooth.

Normal people don’t visit hackaday :P

But you are very mistaken. I’ll bet money that your computer’s hard drives are connected over SATA and not 100Mbit anymore. Storage servers to virtual machine hosts are the same deal, and the only way to have certain forms of redundancy.

Even just backups can slow down access to storage, so the less time those run the better.

Once your computers can access a share on the NAS at the same speed as a local disk, you can centralize all of your files there, and focus your raid, backups, and redundancy on the NAS in one place instead of over many desktops, some of which may not even support two hard drives let alone a proper redundant setup.

Internet speed doesn’t even come into play.
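For a rough feel of what the link speed means for a backup or a big video copy, here is a back-of-the-envelope sketch. The 50 GB file size is just an example figure, the function name is made up, and the calculation assumes the link is the only bottleneck (no protocol overhead, no slow disks), so real transfers will take longer.

```python
def transfer_seconds(size_gigabytes: float, link_gbps: float,
                     efficiency: float = 1.0) -> float:
    """Idealised time to move a file over a link.

    size_gigabytes: file size in decimal gigabytes (1 GB = 8e9 bits).
    link_gbps:      nominal link rate in gigabits per second.
    efficiency:     assumed fraction of the link actually usable (1.0 = ideal).
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link rate must be positive and efficiency in (0, 1]")
    bits = size_gigabytes * 8e9
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    size_gb = 50  # hypothetical backup or video project size
    for name, rate in (("100 Mbit", 0.1), ("1 Gbit", 1.0), ("10 Gbit", 10.0)):
        minutes = transfer_seconds(size_gb, rate) / 60
        print(f"{name:>8}: {minutes:6.1f} minutes")
```

Each step up in link speed cuts the ideal transfer time by a factor of ten, which is the whole argument for faster links between a NAS and the machines that use it.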

Just wait until 802.11ay and wireless VR take off, then all of a sudden even 10Gbps might not be enough…

> Just wait until 802.11ay and wireless VR take off, then all of a sudden even 10Gbps might not be enough…

We can barely do proper IBSS or Direct WiFi(c) in 802.11n, so…

But yeah, users push everything to the cloud and rarely retrieve their stuff.

While 10Gbit is nice for fast backups, or slinging multiple GBs of files around quickly… it is kinda overkill for home use. However, if you’re wired for CAT6 you can run 10Gb over copper for ~50meters. Unless you’re living large, that should easily cover most homes. No need for fiber.

If you have decent quality CAT5e you can run 10Gb for at least ten meters or so. The spec that calls for CAT6 is overkill.

Well since most “ordinary” people have run conduit, keeping up with the Jones should be easier.

3 year old news is so exciting!

Honestly though I did something similar, only the cards came with two copper SFP+ cables so I started with 10Gbps between my file server and VM server. I was able to find a Dell 24 port switch with 2 SFP+ ports for under $100 on eBay and dropped that in. I’m not sure the speed increase is all that much – it’s not like I’m doing multi-GB transfers all the time, my Internet service is 100/100, and the fan in the switch can be heard in our kitchen since the ‘server room’ is in the basement. It is a fun experiment but not one that’s really needed for most people.

Isn’t 10gbase-t actually able to give you 10Gbps over CAT5e cable?

Category 6a is required to reach the full distance of 100 metres (330 ft) and category 6 may reach a distance of 55 metres (180 ft) depending on the quality of installation, determined only after re-testing to 500 MHz.

https://en.wikipedia.org/wiki/10_Gigabit_Ethernet#10GBASE-T
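The figures quoted above can be turned into a quick check for a planned cable run. This is only a sketch: the table holds just the two distances from the quote (100 m for Cat 6a, 55 m for Cat 6), the function and table names are made up, and anything else, including Cat 5e, is reported as unknown rather than guessed.

```python
from typing import Optional

# Maximum 10GBASE-T run lengths in metres, taken only from the quote above.
TEN_GBASE_T_REACH_M = {"cat6a": 100, "cat6": 55}


def run_is_within_reach(category: str, run_m: float) -> Optional[bool]:
    """Return True/False if the quoted figures cover this cable category,
    or None when the category is not in the table (e.g. Cat 5e)."""
    key = category.lower().replace(" ", "").replace("-", "")
    reach = TEN_GBASE_T_REACH_M.get(key)
    if reach is None:
        return None
    return run_m <= reach


if __name__ == "__main__":
    for cat, length in (("Cat 6", 50), ("Cat 6", 60), ("Cat 6a", 90), ("Cat 5e", 10)):
        print(cat, length, "m ->", run_is_within_reach(cat, length))
```

Keep in mind the Cat 6 figure comes with the caveat in the quote: it depends on installation quality and re-testing, so a True here is not a guarantee.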

$120.00 seems like a good bargain. I myself have FIOS, but have been running wireless with a standard router & a Tends USB modem. Beyond aggravating. Solution? An upgraded router @ 2.4 GHz, 5 port gigabit hub, & CAT7 RJ45 cable plugged directly into the LAN ports on my PCs which have high speed LAN ports. From what I’ve read, CAT8 RJ45 cable will soon hit the markets. When that happens, another upgrade. But my new setup should be plenty fast for now.

i find the lack of IPv6 addresses in the network diagram disturbing. 10/8 is so late 90-ish… mDNS does the trick anyways and you can skip the addressing part completely with link locals.
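For anyone unsure what separates a 10/8 address from a link-local one, Python's standard `ipaddress` module can classify them. A minimal sketch follows; the `describe` helper and the sample addresses are made up for illustration and are not taken from the network diagram.

```python
import ipaddress


def describe(addr: str) -> str:
    """Classify an address as link-local, private, or global."""
    ip = ipaddress.ip_address(addr)
    # Check link-local first: those ranges also count as private in the
    # ipaddress module, so the order of the tests matters.
    if ip.is_link_local:
        return "link-local"
    if ip.is_private:
        return "private"
    return "global"


if __name__ == "__main__":
    for sample in ("10.0.0.1", "fe80::1", "169.254.1.1", "8.8.8.8"):
        print(f"{sample:>12} -> {describe(sample)}")
```

Link-local addresses (fe80::/10 for IPv6, 169.254.0.0/16 for IPv4) are configured automatically on each interface, which is why a direct point-to-point link can work without assigning anything by hand.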

You know you could use SMB3 multichannel feature. I used 2x pcie 4 ports gigabit intel cards. Gives me a nice 8 gigabit speed on the NAS. Very cheap! Less than 60$ setup

As a professional network engineer, this article and comment thread make me cringe, badly.

If you’re doing video production with 4K video material, good luck running that off a consumer-grade NAS connected with Gig-E. For me, I use Thunderbolt connected to a NUC running mdadm RAID and a bunch of USB-3 drives. ~US$800 for the NUC and total control over the RAID topology, plus I run some low-use VM’s. Thunderbolt networking is not heavily advertised but works great. ‘course, the cables are a bit pricey (and you can’t use a USB-C cable, yay.)

Things I’d love to see, and AFAIK do not exist, at least for consumers:

* Thunderbolt 3 Hubs/Switches (where you can connect multiple Thunderbolt-3 devices together, not these crappy USB-C to USB-3 “hubs”.)

* Thunderbolt 3 to 10G-E adapters.

* USB-3.1g2 (10gb/s) to 10G-E adapters.

But what do you do when you want to provide 10GbE connectivity to a NAS (e.g. QNAP or Synology) that only has an RJ45 1Gb port?

I have lots of movies that are in 4k on my Plex server and moving or streaming them will barely work on 1gbe in native format, however works flawlessly on 10gbe.

Next on hackaday:

-Purchasing and “installing” a USB hard drive. these 3 steps show you how! with pictures!

-Getting free cable by purchasing and installing an indoor antenna, these 3 steps show you how, with pictures!

-Upgrade RAM hacks, purchasing and installing a RAM module, these steps show you how, HP hates this, do this every day!

-How to use and configure a USB bluetooth module, with steps and pictures!

It is 2019 and this article is referring a post that Brian made 3 YEARS AGO in 2016? I don’t get it…

A 16 port 10Gb/s switch can be purchased from China for $50USD delivered and it works quite well. If I remember correctly, each port is 10Gb/s independent of the others. The real joke is that I run it usually with CAT5 – I just wanted to buy one switch that would last a while.

A poor-man’s token ring network to avoid buying an expensive switch? That’s clever! I may have to keep that in mind for the future.

Expanding beyond 3 computers would be hard since I can’t recall seeing 10GbE NICs with more than 2 interfaces. Bridging interfaces might work but seems like IP management would get tedious, or more cards would need to be added to the PCs. At that point, it would seem smarter to dedicate a system to act as a network switch. I would say the same thing if someone were resorting to a quad port NIC (if such a thing exists). But someone pointed out a quad port switch for under $120 in a comment above so unless that quad port NIC was very cheap, it doesn’t seem like a great idea.

Well, if you don’t mind getting your hands dirty with routing tables, then you could chain them together using 2 port interfaces and still reach any computer in the chain (or ring).
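As a rough sketch of what those routing tables would look like, assume the machines are numbered 0 to n-1 around a ring, each with one port facing the next machine ("cw") and one facing the previous ("ccw"). The numbering, the port names, and both function names are invented for illustration; this only picks the shorter direction and does not configure anything.

```python
def ring_next_hop(n: int, src: int, dst: int) -> str:
    """Which port machine `src` should use to reach `dst` on an n-node ring."""
    if not (0 <= src < n and 0 <= dst < n):
        raise ValueError("machine index out of range")
    if src == dst:
        return "local"
    clockwise_hops = (dst - src) % n
    counter_hops = (src - dst) % n
    # Ties (exactly opposite on an even ring) go clockwise by convention.
    return "cw" if clockwise_hops <= counter_hops else "ccw"


def routing_table(n: int, src: int) -> dict:
    """Next-hop port for every other machine, as seen from `src`."""
    return {dst: ring_next_hop(n, src, dst) for dst in range(n) if dst != src}


if __name__ == "__main__":
    print(routing_table(5, 0))
```

Every intermediate machine has to forward traffic for its neighbours, so bandwidth and CPU on the machines in the middle get shared, which is the price paid for skipping the switch.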

This is pretty much the topology I have for 3 computers (but that serves a different purpose). The difference for me is my budget was much smaller and my stuff came from salvage.

It sounds like 10GbE stuff is coming down in price to where it might be usable for home networking without resorting to picking through salvage.

How is it a hack to go out and buy something and use it exactly as intended by the manufacturer? And also the most prohibitive expense is the cost of the 10 gig connection… And he didn’t get around that.

once you have a 10gb network you should also setup a lancache.

https://github.com/nexusofdoom/lancache-installer

My paternal grandmother runs on 40Gbit ethernet. I tweaked her profile to use TCP/IP over SATA3, and now her disk queueueus are always full. So now I need to plug a 10GbaseCx4 cable into her and download all my data, to upload on 100Gb.