A remote Ethernet device needs two things: power and Ethernet. You might think that this also means two cables, a beefy one to carry the current needed to run the thing, and thin little twisted pairs for the data. But no!

Power over Ethernet (PoE) allows you to transmit power and data to network devices. It does this over twisted-pair Ethernet cabling, which lets a single cable carry both. The main advantage of PoE over separate lines for power and data is a simpler installation: there are fewer cables to keep track of and purchase. For smaller offices, the hassle of wiring new circuits or installing a transformer to convert AC to DC can be considerable.

PoE can also be an advantage in cases where power is not easily accessible or where additional wiring simply is not an option. Ethernet cables are often run in the ceiling, while power runs near the floor. Furthermore, PoE is protected against overload and short circuits, and delivers power safely. No additional power supplies are necessary since the power is supplied centrally, and scaling the power delivery becomes a lot easier.

Devices Using PoE

Other devices that use PoE include RFID readers, IPTV decoders, access control systems, and occasionally even wall clocks. If it already uses Ethernet, and it doesn’t draw too much power, it’s a good candidate for PoE.

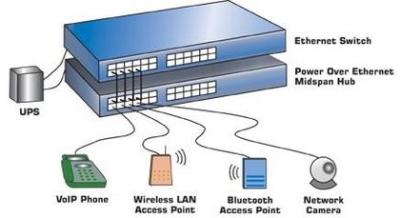

On the supply side, given that most devices that use PoE are networking devices in some form, it makes sense that the main device providing power to a PoE system would be the Ethernet switch. Another option is a PoE injector, which sits between a non-PoE switch and the device and adds power to the cable, so the device can receive power from a source other than the switch.

How it Works

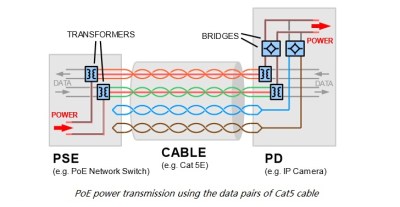

Historically, PoE was implemented by simply hooking extra lines up to a DC power supply. Early power injectors did not use any intelligent protocol; they simply injected power into the cable. The most common method was to put power on the wire pairs not used by 100BASE-TX Ethernet. This could easily destroy devices not designed to accept power, however. The IEEE 802.3 working group started its first official PoE project, IEEE 802.3af, in 1999.
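To make the "unused pairs" idea concrete, here is a minimal sketch that lists which RJ-45 pairs are left free when a link runs 100BASE-TX. It assumes the standard pinout in which 100BASE-TX carries data only on pins 1-2 and 3-6; the names `DATA_PAIRS_100BASE_TX`, `ALL_PAIRS`, and `spare_pairs` are hypothetical, chosen for illustration.

```python
# Minimal sketch: which RJ-45 wire pairs 100BASE-TX leaves unused.
# Assumption: standard pinout where 100BASE-TX uses pins 1-2 and 3-6 for data.
# All names here are hypothetical, for illustration only.
ALL_PAIRS = [(1, 2), (3, 6), (4, 5), (7, 8)]
DATA_PAIRS_100BASE_TX = [(1, 2), (3, 6)]


def spare_pairs(used_pairs):
    """Return the pairs that carry no data and so could carry DC power."""
    return [pair for pair in ALL_PAIRS if pair not in used_pairs]


if __name__ == "__main__":
    # A common passive-injector convention puts one supply rail on 4-5
    # and the other on 7-8.
    print(spare_pairs(DATA_PAIRS_100BASE_TX))
```

Note that this trick only works at 100 Mbit/s and below: 1000BASE-T uses all four pairs for data, which leaves `spare_pairs` with nothing to return.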

Further standards have since been released, and proprietary technologies have also used the PoE term to describe their own methods of power delivery. A newer project from the IEEE 802.3 working group is the IEEE 802.3bt standard, released in 2018, which uses all four twisted pairs to deliver up to 71 W to a powered device.

But this power comes at a cost: Ethernet cables simply don’t have the conductive cross-section that power cables do, and resistive losses are higher. Because power loss in a cable is proportional to the square of the current, PoE systems minimize the current by using higher voltages, from roughly 40 V to 60 V, which are then converted down in the receiving device. Even so, PoE specs allow for 15% power loss in the cable itself. For instance, your 12 W remote device might draw 14 W at the wall, with the remaining 2 W heating up your crawlspace. At the top end, the roughly 70 W IEEE 802.3bt standard can put as much as 30 W of heat into the wires.
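The I²R reasoning above can be checked with a few lines of arithmetic. This sketch assumes a 20 Ω DC loop resistance (roughly the worst case budgeted for a 100 m run under 802.3af) and takes power and voltage as measured at the supply end. The 44 V figure is the bottom of the 44–57 V range PoE supplies use. The function name `cable_loss` is hypothetical.

```python
# Sketch of I^2 * R loss in a PoE cable.
# Assumptions: a 20-ohm DC loop resistance (an assumed worst case for a 100 m run)
# and supply power/voltage measured at the switch or injector end.
# `cable_loss` is a hypothetical helper, not from any library.


def cable_loss(supply_power_w, supply_voltage_v, loop_resistance_ohm):
    """Return (current in A, watts lost in the cable, watts reaching the device)."""
    current_a = supply_power_w / supply_voltage_v        # I = P / V
    loss_w = current_a ** 2 * loop_resistance_ohm        # P_loss = I^2 * R
    return current_a, loss_w, supply_power_w - loss_w


if __name__ == "__main__":
    # 15.4 W sourced at 44 V: 0.35 A flows, about 2.45 W is lost,
    # leaving about 12.95 W for the device.
    i, loss, delivered = cable_loss(15.4, 44.0, 20.0)
    print(f"{i:.2f} A, {loss:.2f} W lost, {delivered:.2f} W delivered")

    # Same power at half the voltage: double the current, four times the loss.
    _, loss_low_v, _ = cable_loss(15.4, 22.0, 20.0)
    print(f"at 22 V: {loss_low_v:.2f} W lost")
```

Halving the voltage quadruples the loss. That is why PoE runs at 40 to 60 V instead of the 5 or 12 V that many devices use internally.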

The bigger problem is typically insufficient power. The 802.3af PoE standard’s maximum power output is 15.4 W at the source (about 13 W delivered), which is enough to power most networking devices. For devices with higher power consumption, such as network PTZ cameras, this isn’t the case.
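A quick way to see whether a device fits is to compare its draw against each standard’s power budget at the device end. This sketch uses the per-port figures as they are commonly quoted: 12.95 W for 802.3af, 25.5 W for 802.3at, 51 W for 802.3bt Type 3, and 71.3 W for 802.3bt Type 4. It ignores the finer power classes within each standard. `smallest_standard` is a hypothetical helper.

```python
# Sketch: find the lowest IEEE PoE standard whose delivered-power budget covers
# a device. Budgets are watts available at the powered device, as commonly quoted;
# per-class detail inside each standard is ignored.
# `smallest_standard` is a hypothetical helper for illustration.
BUDGETS_W = [
    ("802.3af", 12.95),
    ("802.3at", 25.5),
    ("802.3bt Type 3", 51.0),
    ("802.3bt Type 4", 71.3),
]


def smallest_standard(device_power_w):
    """Return the name of the first standard that can power the device, or None."""
    for name, budget_w in BUDGETS_W:
        if device_power_w <= budget_w:
            return name
    return None  # too power-hungry for PoE: plug it into the wall


if __name__ == "__main__":
    for watts in (6.0, 20.0, 60.0, 100.0):  # made-up example loads
        print(watts, smallest_standard(watts))
```

A PTZ camera that draws, say, 20 W (a made-up figure) would land on 802.3at, not 802.3af.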

Although maximum supply power is specified in the standards, a supply that can provide more power than necessary will not affect the performance of the device. The device draws only as much current as it needs to operate, so there is no risk of overload, just hot wires.

So PoE isn’t without its tradeoffs. Nevertheless, there are certainly a lot of advantages to adopting PoE for devices, and of course we welcome a world with fewer wires. It’s fantastic for routers, phones, and their friends. But when your power-hungry devices are keeping you warm at night, it’s probably time to plug them into the wall.

Along with centralized power delivery comes the benefit of centralized UPS batteries for backup.

One beefy UPS is often cheaper and easier to maintain than X number of smaller UPS units scattered around.

This can also make it more worthwhile to pay for one with hot-swap battery packs: only the one UPS carries that additional cost, while many devices benefit from the improved uptime.

buuuut, one beefy UPS is guaranteed to be well over the power limit many EU countries impose in their fire codes… (it has to turn off in case of a fire, because firefighters don’t like being zapped)

Ah, thanks for the memories..

A customer (Name Redacted) had skimped on the cabling install, and left some PoE-enabled Cat5 lying on the deck of a storage area.

Entry-level forklift operators were not aware that crushing the cabling was a bad thing.

Enter stage left, two fully loaded pallets, crushing the cables into a ginormous mess.

Shortly after, certain printers and other Ethernet-connected computer devices started smoking.

I love the smell of napalm in the morning..

Nice story! But I don’t get the connection between crushing cables and smoking printers. Wouldn’t the PoE switch have failed instead of the other equipment connected to it?

All I know for sure is, they were forced to replace the equipment that didn’t like PoE voltage on the Ethernet wires. The VoIP phones seemed to be OK, but other hardware gave up. So now I advise not crushing cabling (PoE or not).

This is BS. Simply rolling over them (even with a heavy load) would not cause this. Good story for the laughs, however the lie is exposed. Nice try on the fiction attempt. lmfao

> “PoE Ethernet extenders enable you to reach these devices, up to 10,000 feet away, and power them over single twisted-pair, coax or any other existing copper wiring,” explains Al Davies, Perle’s director of product management.
>
> The drawback is that most PoE Ethernet extenders operate as simple passive power injectors, applying 44–57 V to the RJ-45 port pins. This allows up to 30 W of power to be transferred efficiently to PoE-compatible equipment via the cable, while remaining at a safe level for users. But it can result in severe damage to non-PoE-compliant Ethernet devices if they are connected accidentally, possibly costing thousands of dollars.

Duh..

“PoE can also be an advantage in cases where power is not easily accessible or where additional wiring simply is not an option.”

That’s not the only usage.

https://planetechusa.com/blog/poe-lighting-how-poe-is-revolutionizing-led-lights-in-smart-homes-and-offices/

VLC is also a possibility.

https://ieeexplore.ieee.org/document/7072557

The PoE to “revolutionize” lights article misses at least one key thing.

One of the big reasons for using LED lights is to reduce energy use and cost. With PoE you are increasing the energy use (higher losses in the cables), counteracting the benefits of using LEDs (as compared to using standard wiring). So one needs to figure out the trade-offs.

(I can just see people not thinking this through, going whole-hog with an idea like that, and wasting a bunch of power.)

It’s better for a few low-power lights (e.g. in a temporary setting), but not what you want to do in general without careful analysis of operational costs and risks.

One of the big cost savers with PoE lighting is labor. Instead of a licensed electrician, you can have a certified tech install the wires, as it’s all low voltage. Plus, with PoE you typically get better lighting control schemes as standard than you might otherwise have gotten where energy codes are lax. There are not a lot of companies doing PoE lighting; most seem to be looking toward line-voltage lights with wireless controls.

And for those who’d like to make their own projects based on PoE, I have to toot my own horn here and mention my wESP32 project: https://hackaday.io/project/85389-wesp32-wired-esp32-with-ethernet-and-poe

70 watts delivered with 30 watts of power loss… WTF, a committee of real geniuses!

We need to improve energy usage, not waste it.

The standard allows for that much loss, but that figure is at maximum run length. If you want to minimize the loss, feel free to make Ethernet cables out of 16 AWG or bigger wire.

Most PoE devices don’t use that much power (usually around 5-10W for an access point). If you want to use the highest power classes at maximum distance it is recommended to buy Ethernet cables with thicker wiring on the PoE pair. It costs more but you make it back in reduced losses.
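The gauge trade-off these comments describe can be estimated from first principles. This sketch computes conductor resistance from the standard AWG diameter formula and copper resistivity. It assumes two-pair power delivery: current goes out on one pair and returns on another, so each leg is two conductors in parallel and the loop resistance works out to one conductor’s resistance over the run. It ignores connectors, stranding, and temperature rise. The helper names are hypothetical.

```python
import math

# Sketch: cable loss vs. wire gauge for two-pair PoE.
# Assumptions: solid copper, resistivity about 1.72e-8 ohm*m at 20 C, no connector
# or temperature effects. Helper names are hypothetical, for illustration only.
COPPER_RESISTIVITY_OHM_M = 1.72e-8


def awg_resistance_per_m(awg):
    """Resistance of one solid copper conductor of the given AWG, in ohms per metre."""
    diameter_m = 0.127e-3 * 92 ** ((36 - awg) / 39)  # standard AWG diameter formula
    area_m2 = math.pi * diameter_m ** 2 / 4
    return COPPER_RESISTIVITY_OHM_M / area_m2


def two_pair_loss_w(awg, length_m, current_a):
    """I^2 * R loss with two conductors in parallel on each of the two legs.

    Each leg is R/2 and there are two legs, so the loop resistance is R
    for a single conductor of the full run length.
    """
    loop_ohm = awg_resistance_per_m(awg) * length_m
    return current_a ** 2 * loop_ohm


if __name__ == "__main__":
    for awg in (24, 22, 16):
        print(f"{awg} AWG, 100 m, 0.35 A: {two_pair_loss_w(awg, 100, 0.35):.2f} W lost")
```

Because resistance scales inversely with cross-sectional area, going from 24 AWG (typical for Cat5e) to 16 AWG cuts the loss by a factor of about six in this model.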

And what do you think wireless charging will do? – It will not improve energy usage.

But anyway, although it is a good thing to improve energy efficiency, we do not NEED to do it. Contrary to the opinion of climate hysterics. As long as somebody pays for the energy he uses, nobody has to interfere with his lifestyle.

That’s the main reason I hate this climate hysteria: they want to question and change our whole lifestyle.

The initial purpose of POE was to solve a niche problem. Setting a standard within the safe tolerances of common data cable for power purposes was the goal. ISO and TIA already figured out the safe tolerances. Anyway, before the IEEE specifications got out the door the niche had already blown wide open with various proprietary standards.

I can’t wait for the Part 2 of this article. Things have changed considerably with the advent of the 4PPOE family of specifications. Some things are better, some weaknesses are accentuated. It’s a bit more complicated considering that multi-gig needs to fit into this equation.

Somewhere in the workshop I have a unit that injects 72 volts into Ethernet and drops it at the other end to power the device. By increasing the voltage you can reduce your losses over the cable. Higher voltage means less current, and therefore less resistive loss in the cable, but then you run into insulation issues.

God it would be awesome to be able to deliver power over Fiber :)

You can deliver power over fiber, just very little of it. It’s used in some instruments where electrical coupling must be avoided (like fuel meters).

What do you mean very little?

That only depends on the thickness of said fiber… Even good ol’ 9/125 µm can take several hundred milliwatts before you start to come close to melting the input side.

The place I work at has a 4kW laser that delivers all that destructive power through a fiber, albeit a fairly thick one.

Power meters in EMC measuring chambers are also sometimes powered via fiber, with a second fiber bringing back the data, to avoid any electrical connection.

Photovoltaics are too inefficient for it to be practical. Check back in 50 years ;)

Not in general. It depends on the application. Btw, I think that if you can irradiate the cell with the best-matched wavelength, you will gain some efficiency.

Tektronix did it for some of their oscilloscope active probes, which are powered from the oscilloscope through a fiber. This lets them increase common-mode voltage isolation and CMRR.

And this is the big daddy version, which may or may not take off:

https://voltserver.com/idea/

It’s intended to be safer than standard AC power as the system senses damage to the cables faster than you can start a fire or electrocute yourself.

But mainly it’s massively cheaper: because it’s been carefully specced so that it doesn’t count as electrical work under US building codes, you don’t need a unionised electrician to install or alter it, meaning companies can save a fortune.

Would love to see a HAD editor do a proper report on it and see if it lives up to the hype.