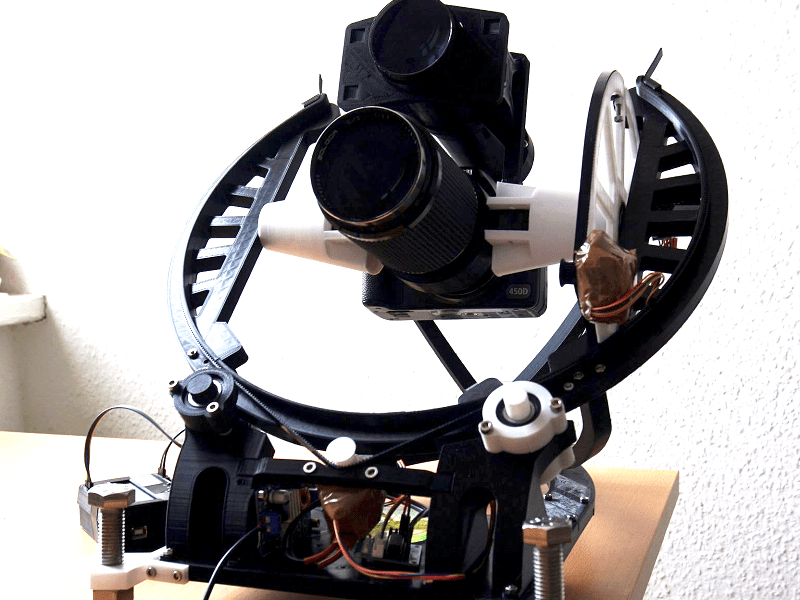

If you want to take beautiful night sky pictures with your DSLR and you live between 15 degrees and 55 degrees north latitude, you might want to check out OpenAstroTracker. If you have a 3D printer, it will probably take about 60 hours of printing, but you’ll wind up with a pretty impressive setup for your camera. An Arduino manages the tracking and also provides a “go to” capability.

The design is over on Thingiverse and you can find code on GitHub. There’s also a subreddit dedicated to the project. The tracker touts its ability to handle long or heavy lenses and to target 180 degrees in every direction.

Some of the printed parts are specific to your latitude to within 5 degrees, so if you live at 43 degrees latitude, you could pick the 40-degree versions of the parts. For now, though, you must be in the Northern Hemisphere between 15 and 55 degrees.

What kind of images can you expect? The site says this image of Andromeda was taken over several nights using a Soligor 210mm f/4 lens with ISO 800 film.

Not bad at all! Certainly not the view from our $25 department store telescope.

If you’d rather skip the Arduino, try a cheap clock movement. Or you can replace the clock and the Arduino with yourself.

Was hoping for an automated baseball-sign camera.

+1

Why would this even need to be built for a specific hemisphere and latitude? I suppose the fact that it uses two-axis movement rather than three has something to do with that.

Astrophotography requires ultralong exposure times. Hours. When you’re exposing for this long, you have to move the camera to compensate for the rotation of the earth.

I know that; my query was more to do with designing it to work from any location and hemisphere rather than designing it to be location-specific.

Earth rotates around only one axis, so only single-axis movement is required. The requirement for a single-axis machine is then that you configure it for your precise latitude: 52.0116N in my case.
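To put a number on that single axis: once the polar axis is aligned with Earth’s axis, it has to turn one full revolution per sidereal day (about 86164.09 s), which works out to roughly 15.04 arcseconds per second. The minimal sketch below computes that rate and the resulting stepper pulse rate. The drivetrain figures in the example (200-step motor, 16× microstepping, 100:1 reduction) are hypothetical and are not taken from OpenAstroTracker’s actual hardware or firmware.

```python
# Sidereal tracking rate for a polar-aligned (equatorial) axis.
SIDEREAL_DAY_S = 86164.0905  # Earth's rotation period relative to the stars, seconds


def sidereal_rate_arcsec_per_s() -> float:
    """Rate the polar axis must turn to cancel Earth's rotation, in arcsec/s."""
    return 360.0 * 3600.0 / SIDEREAL_DAY_S


def ra_steps_per_second(motor_steps_per_rev: int, microsteps: int, gear_ratio: float) -> float:
    """Stepper pulse rate for the polar axis with a (hypothetical) drivetrain.

    gear_ratio is the number of motor revolutions per one revolution
    of the polar axis.
    """
    steps_per_axis_rev = motor_steps_per_rev * microsteps * gear_ratio
    return steps_per_axis_rev / SIDEREAL_DAY_S


if __name__ == "__main__":
    print(f"{sidereal_rate_arcsec_per_s():.3f} arcsec/s")
    # Hypothetical drivetrain: 200-step motor, 16x microstepping, 100:1 reduction.
    print(f"{ra_steps_per_second(200, 16, 100.0):.4f} steps/s")
```

Only one motor rate is needed for tracking; a second axis on a tracker like this is for pointing (“go to”), not for following the sky.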

Yes, you are right. To get away with two axes, you have to precisely align one of the axes with the rotation axis of the earth. This limits its use to a particular latitude. It’s called a parallact mount. Without such an alignment, you could still track an object, but the field of view would rotate as you track the sky over a longer period of time. This could be solved by a third axis to rotate the camera, but almost all astronomical devices avoid this to reduce complexity and increase accuracy.
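How fast does the field rotate without that alignment? For an alt-az mount, a commonly used approximation is rate = ω · cos(latitude) · cos(azimuth) / cos(altitude), where ω is Earth’s sidereal rate and azimuth is measured from north. The sketch below is a minimal illustration of that formula; the sign convention is an assumption, and the magnitude is what matters for judging how much a frame smears over one exposure. The example site, target, and exposure length are illustrative only.

```python
import math

# Earth's sidereal rotation rate in degrees per second (360 deg per ~86164.09 s).
SIDEREAL_RATE_DEG_S = 360.0 / 86164.0905


def field_rotation_rate_deg_s(latitude_deg: float, azimuth_deg: float, altitude_deg: float) -> float:
    """Approximate field-rotation rate on an alt-az (non-polar-aligned) mount.

    rate = w * cos(latitude) * cos(azimuth) / cos(altitude), azimuth from north.
    The sign convention is an assumption; use abs() for the magnitude.
    """
    if altitude_deg >= 89.9:
        raise ValueError("rate diverges near the zenith")
    lat, az, alt = map(math.radians, (latitude_deg, azimuth_deg, altitude_deg))
    return SIDEREAL_RATE_DEG_S * math.cos(lat) * math.cos(az) / math.cos(alt)


def rotation_during_exposure_deg(rate_deg_s: float, exposure_s: float) -> float:
    """Field rotation accumulated over one exposure, assuming a constant rate."""
    return abs(rate_deg_s) * exposure_s


if __name__ == "__main__":
    # Example: latitude 52 N, target due south at 30 degrees altitude, 5-minute exposure.
    rate = field_rotation_rate_deg_s(52.0, 180.0, 30.0)
    print(f"{rotation_during_exposure_deg(rate, 300):.2f} deg of field rotation")
```

The rotation vanishes only for targets due east or west and grows without bound near the zenith, which is why aligning one axis with Earth’s axis is the usual fix rather than adding a third, de-rotating axis.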

Thanks for your explanation!

I’ve not heard/read the term ‘parallact mount’ before, I know it as an ‘equatorial mount’.

I think it means equatorial here, in a plane parallel to the equator. The original meaning of parallactic would seem to imply it corrected parallax error due to the atmosphere bending the light from stars/planets when within a few degrees of the horizon.

Enjoy the pictures while you still can. Soon any new ones will be lined with Muskdots.

Why is there no Muskdot Wikipedia entry…?

This!

Stacking software could remove these easily enough. Stars and planets hardly move in comparison so anything streaking across the frame can be removed.
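One common way stacking software rejects streaks is per-pixel kappa-sigma clipping: for each pixel position, look at its value across all aligned frames, discard values that sit too far from the median, and average the rest. The sketch below is a minimal, pure-Python illustration of that idea, assuming the frames are already registered and stored as flat lists of equal length; it is not the exact algorithm of any particular stacking program, and real tools typically iterate the clipping and work on many more frames.

```python
import statistics
from typing import List, Sequence


def sigma_clipped_stack(frames: Sequence[Sequence[float]], kappa: float = 2.0) -> List[float]:
    """Combine aligned frames pixel by pixel, rejecting outliers such as trails.

    For every pixel, values farther than kappa population standard deviations
    from the median are discarded and the remaining values are averaged.
    """
    if not frames:
        raise ValueError("need at least one frame")
    n_pixels = len(frames[0])
    if any(len(f) != n_pixels for f in frames):
        raise ValueError("frames must all be the same size")

    stacked: List[float] = []
    for i in range(n_pixels):
        values = [f[i] for f in frames]
        med = statistics.median(values)
        spread = statistics.pstdev(values)
        kept = [v for v in values if abs(v - med) <= kappa * spread]
        # Fall back to the median if clipping left nothing (pathological case).
        stacked.append(sum(kept) / len(kept) if kept else med)
    return stacked


if __name__ == "__main__":
    # Five 3-pixel frames; the fourth has a satellite trail through pixel 1.
    frames = [
        [10.0, 12.0, 11.0],
        [10.0, 12.0, 11.0],
        [10.0, 12.0, 11.0],
        [10.0, 200.0, 11.0],
        [10.0, 12.0, 11.0],
    ]
    print(sigma_clipped_stack(frames))
```

Every rejected value is a sample thrown away for that pixel, so the more trails cross a stack, the fewer frames effectively contribute where they cross.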

Couple that with the ongoing work to reduce the satellites’ reflectivity and the turnover of satellites as they age, and the problem is going away before it really gets started.

You’re generally not taking multiple images but very long exposures, which means you’ll have streaks in all of them.

No, you actually take relatively short (for astrophotography) exposures (~5-10 minutes for typical focal lengths) and stack them. The reason is NO tracker is 100% perfect, and given the high pixel density of sensors today small errors creep in pretty quickly.

This is true, but this tracker has autoguiding functionality, which would help make the tracking more accurate.

And it does. It can reject satellites (which were common before Musk) and badly guided frames. 60-second exposures are common as well. (Depending on the subject, you have to keep exposures short enough not to fill the detector wells with electrons.) You total as much time as you need, over several nights if needed and with different filters. The results are spectacular. People with 100mm/4″ refractors and a DSLR routinely produce images that rival old Palomar 200″ results. They can’t do the astrometry of individual stars as finely in a single view, but deep sky stuff is awesome. Check a Deep Sky Stacker gallery.

Oh, come on. They are nowhere near as bad as planes are.

Maybe there is an idea: track all space-based objects in real time, and drop all frames in which known space objects fly through the field.
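The frame-dropping half of that idea is simple interval bookkeeping. The sketch below assumes you already have predicted time windows during which a catalogued object crosses the camera’s field (the hypothetical `passes` input); producing those predictions from orbital elements is the hard part and is not shown here.

```python
from typing import List, Sequence, Tuple

Interval = Tuple[float, float]  # (start, end) in seconds, e.g. Unix time


def overlaps(a: Interval, b: Interval) -> bool:
    """True if two closed time intervals share any instant."""
    return a[0] <= b[1] and b[0] <= a[1]


def frames_to_keep(frames: Sequence[Interval], passes: Sequence[Interval]) -> List[int]:
    """Indices of frames during which no predicted pass crosses the field."""
    return [
        i for i, frame in enumerate(frames)
        if not any(overlaps(frame, p) for p in passes)
    ]


if __name__ == "__main__":
    # Four back-to-back 60 s exposures; one hypothetical pass at t = 130..140 s.
    frames = [(0, 60), (60, 120), (120, 180), (180, 240)]
    passes = [(130, 140)]
    print(frames_to_keep(frames, passes))
```

Dropping whole frames is cruder than clipping only the trail pixels, but it needs no image analysis at all, just accurate timestamps and predictions.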

Although with the number of objects up there now, it would probably be easier just to take a single frame when there is nothing overhead.

You might pick up a few spy satellites by accident, but not much you can do about that.

Elon Musk wants to launch thousands of satellites for worldwide internet and phone service.

It will have an interesting result in China and North Korea (death penalty for possession of WiFi?), among others.

Oh man, imagine something like this with a cantenna and a wifi/bt adapter or maybe some SDR or something on it.

I would feel a lot better about it if it used the tripod mount to hold the camera. Especially since the focus, zoom, and aperture control rings are all on the lens, the camera might be able to rotate.

The tripod mount is not in the appropriate location; it would be a pain to compensate for.

Some corrections to what has been said. The length of an individual astronomical exposure is mostly a function of lens speed, sky darkness and any filtration used. For unfiltered, visual (400-700 nm) shots at f/2 under very dark skies, there is no reason to go longer than 3 minutes; 6 minutes at f/2.8, 12 minutes at f/4, etc. Much shorter for light-polluted skies, and decent results can be obtained in 1/3 of these times. You must correct for the Earth’s rotation in any exposure longer than a few seconds, perhaps 10 seconds with a 35 mm lens, scaled inversely with focal length. It is normal to take several exposures (sometimes dozens) and average them in the computer to greatly improve results. In this process, satellite trails, airplanes and cosmic ray hits can be averaged out, but every time this is done, data is lost. Once the sky is full of Musk pollution, each wide-field image will be filled with dozens of satellite trails, maybe 50-100 for really wide-field images. Averaging out all of these will greatly degrade the image, and there is no way around this other than perhaps to triple the number of images used in the average. In fact, this level of night sky pollution will not be fully reversed even by such drastic measures. Musk is on the verge of destroying the night sky for generations to come, all to line his pockets with even more cash. He will sell you a line about helping out the digitally disenfranchised, but it is just a cover for relentless greed.
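The exposure figures in that comment follow a simple pattern: the light gathered per unit time scales with 1/N², where N is the f-number, so 3 minutes at f/2 becomes about 6 minutes at f/2.8 and 12 minutes at f/4. The untracked limit of about 10 seconds at 35 mm scales inversely with focal length. The sketch below just encodes those two rules of thumb for dark, unfiltered skies; the constants come from the comment, and the function names are illustrative.

```python
def sub_exposure_minutes(f_number: float) -> float:
    """Rule-of-thumb single exposure under dark skies, unfiltered visual.

    Scales 3 minutes at f/2 by the square of the f-number ratio.
    """
    return 3.0 * (f_number / 2.0) ** 2


def max_untracked_seconds(focal_length_mm: float) -> float:
    """Longest exposure without tracking: about 10 s at 35 mm, inverse in focal length."""
    return 10.0 * 35.0 / focal_length_mm


if __name__ == "__main__":
    for n in (2.0, 2.8, 4.0):
        print(f"f/{n}: about {sub_exposure_minutes(n):.1f} min per exposure")
    # For example, the 210 mm lens used for the Andromeda image.
    print(f"210 mm untracked: about {max_untracked_seconds(210.0):.1f} s")
```

The f/2.8 case comes out at 5.88 minutes, which the comment rounds to 6; the 210 mm case shows why anything beyond a second or two with a telephoto lens needs a tracker.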

The way around it is for Musk to provide launch capacity to high orbit for a bunch of small space telescopes of 18 to 24″ Planewave quality, and a way for any of us to book time on them.

If this thing is driving a motor, you should be able to use it in the southern hemisphere too. If you use the south pole instead of North pole as reference, just switch the polarity on the motor and you should be good to go.
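In terms of drive logic, that suggestion amounts to keeping the same sidereal speed and flipping its sign: seen from behind the mount looking along the polar axis, the sky turns counterclockwise around the north celestial pole and clockwise around the south celestial pole. The sketch below only models that sign flip. Which physical rotation “+” corresponds to depends on the motor wiring (an assumption), and the latitude-specific printed parts would still have to match the site’s latitude.

```python
SIDEREAL_DAY_S = 86164.0905  # seconds per rotation relative to the stars


def signed_ra_rate_deg_s(latitude_deg: float) -> float:
    """Polar-axis tracking rate with a sign for direction.

    Positive is the northern-hemisphere direction (axis aimed at the north
    celestial pole); the southern hemisphere (axis aimed at the south
    celestial pole) uses the same speed in reverse.
    """
    direction = 1.0 if latitude_deg >= 0.0 else -1.0
    return direction * 360.0 / SIDEREAL_DAY_S


if __name__ == "__main__":
    print(signed_ra_rate_deg_s(52.0), signed_ra_rate_deg_s(-35.0))
```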

Any current, existing capability for this to be used in satellite tracking?

If this could be modified to mount a dual-band Yagi tuned for 2 m / 70 cm, it would have amazing potential in the amateur radio world for amateur satellite tracking.