Multitasking, quickly learning complex maneuvers, and instantly recognizing objects even as infants are just some of the ways our brains put their billions of neurons and trillions of synapses to use. Biologically, the brain needs fluid-filled cavities, nerve fibers, and numerous other cells and connections in order to function. That isn’t the case for a new kind of brain recently announced by a team of MIT engineers in Nature Nanotechnology. Compared to the size of a typical human brain, this new “brain-on-a-chip” is able to fit on a piece of confetti.

Up close, the chip looks more like a tiny metal carving than any neurological organ. It is built from memristors, here silicon-based components that mimic the way synapses transmit signals. A concatenation of “memory” and “resistor”, a memristor is a passive circuit element that ties together the time integrals of the current through it and the voltage across it (charge and flux linkage). Because its resistance depends on the charge that has already passed through it, a tiny read signal can access the history of applied voltage. This memory effect comes from hysteresis and other non-linear properties of the device.
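To make the “history” idea concrete, here is a minimal Python sketch of the linear ion-drift model HP Labs published in 2008 for its titanium-dioxide memristor. It is not the MIT team’s device model, and the parameter values (`R_ON`, `R_OFF`, `K`) are illustrative assumptions. The resistance is a weighted mix of a low- and a high-resistance region, `M(x) = R_ON*x + R_OFF*(1 - x)`, and the state `x` drifts in proportion to the current, `dx/dt = K*i`, so the resistance tracks the charge that has flowed. Driving the device with a sine wave traces the characteristic pinched hysteresis loop: the current is zero whenever the voltage is zero, yet the same voltage produces different currents on the rising and falling parts of the cycle.

```python
import math

# Illustrative parameters (assumptions, not values from the MIT paper).
R_ON = 100.0       # ohms, resistance when the device is fully "on" (x = 1)
R_OFF = 16_000.0   # ohms, resistance when the device is fully "off" (x = 0)
K = 1.0e4          # state-drift coefficient in 1/(A*s), i.e. mu_v * R_ON / D**2


def memristance(x: float) -> float:
    """Resistance as a weighted mix of the on and off regions, 0 <= x <= 1."""
    return R_ON * x + R_OFF * (1.0 - x)


def simulate(v0: float = 1.0, freq: float = 1.0, x0: float = 0.1, steps: int = 12_000):
    """Drive the device with v(t) = v0*sin(2*pi*freq*t) for one period.

    Uses forward Euler integration and returns a list of (t, v, i, x) samples.
    """
    dt = 1.0 / (freq * steps)
    x = x0
    trace = []
    for n in range(steps + 1):
        t = n * dt
        v = v0 * math.sin(2.0 * math.pi * freq * t)
        i = v / memristance(x)  # Ohm's law with the present memristance
        trace.append((t, v, i, x))
        # Linear ion drift: the state moves in proportion to the charge passed (i*dt).
        x = min(1.0, max(0.0, x + K * i * dt))
    return trace


trace = simulate()
_, v_up, i_up, _ = trace[1000]      # t = 1/12 s, voltage rising through +0.5 V
_, v_down, i_down, _ = trace[5000]  # t = 5/12 s, voltage falling through +0.5 V
print(f"rising:  v={v_up:.3f} V, i={i_up * 1e6:.1f} uA")
print(f"falling: v={v_down:.3f} V, i={i_down * 1e6:.1f} uA")
```

The falling-branch current comes out larger because the positive current of the first half-cycle has pushed `x` up and the resistance down; a brief, small read voltage senses that difference while only slightly disturbing `x`.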

These properties are most pronounced at the nanoscale, where they aren’t dwarfed by other electronic and field effects. In a device like this one, tiny positive and negative electrodes are separated by a “switching medium” that fills the space between them. A voltage applied to one electrode drives ions through the medium, forming a conduction channel to the other electrode, and that channel carries the electrical signal through the circuit.
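The channel-forming behavior described above can be caricatured with a toy model in which the conduction channel is a filament that grows across the gap when the voltage exceeds a set threshold and dissolves when the voltage drops below a reset threshold. This is a hypothetical illustration, not the physics of the MIT device: the `FilamentCell` class, its thresholds, its growth rate, and the exponential resistance-versus-gap law are all assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class FilamentCell:
    """Toy filament-type memristor; every parameter here is an assumption."""
    gap: float = 1.0       # normalized tip-to-electrode distance (1.0 = no channel, 0.0 = bridged)
    v_set: float = 0.8     # volts; above this, ions drift and the filament grows
    v_reset: float = -0.5  # volts; below this, the filament dissolves
    rate: float = 0.25     # gap change per pulse, per volt beyond the threshold
    r_on: float = 1e3      # ohms when the channel bridges the electrodes
    r_off: float = 1e6     # ohms when there is no channel at all

    def pulse(self, v: float) -> None:
        """Apply one voltage pulse and update the filament."""
        if v > self.v_set:
            self.gap = max(0.0, self.gap - self.rate * (v - self.v_set))
        elif v < self.v_reset:
            self.gap = min(1.0, self.gap + self.rate * (self.v_reset - v))
        # Between the two thresholds nothing moves, so reading is non-destructive.

    def resistance(self) -> float:
        # Assumed exponential dependence on the gap, loosely mimicking tunneling.
        return self.r_on * (self.r_off / self.r_on) ** self.gap

    def read(self, v_read: float = 0.1) -> float:
        """Return the current for a small, sub-threshold read voltage."""
        self.pulse(v_read)  # harmless: v_read sits between v_reset and v_set
        return v_read / self.resistance()


cell = FilamentCell()
print(f"fresh cell:  {cell.resistance():.0f} ohm")
for _ in range(10):
    cell.pulse(1.6)   # set pulses grow the filament
print(f"after set:   {cell.resistance():.0f} ohm")
for _ in range(10):
    cell.pulse(-1.5)  # reset pulses dissolve it
print(f"after reset: {cell.resistance():.0f} ohm")
```

Keeping the read voltage between the two thresholds is what lets the stored state be sensed repeatedly without being rewritten.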

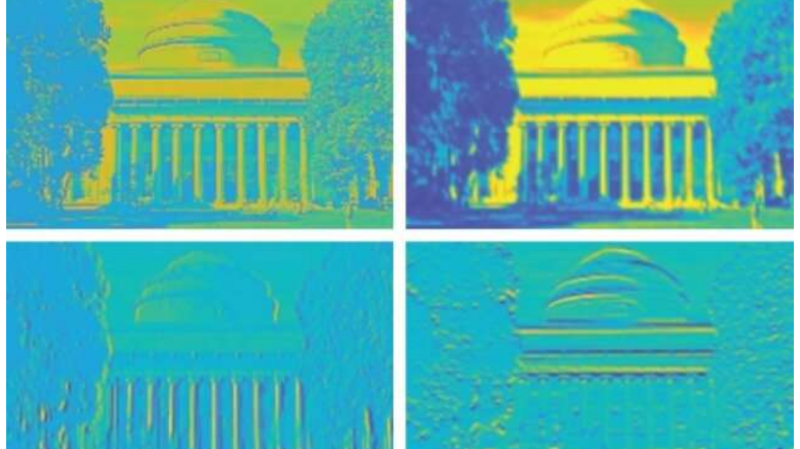

To fabricate these memristors, the researchers used silver alloys for the positive electrode, and copper alongside silicon for the negative electrode. They sandwiched an amorphous switching medium between the two electrodes and patterned this structure tens of thousands of times on a silicon chip to create an array of memristors. To train the memristors, they ran the chips through visual tasks, storing images and reproducing them until cleaner versions were produced. These devices join a growing body of research into neuromorphic computing: electronics that operate in a way similar to the brain’s neural architecture.
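The article doesn’t say how the images were written into the array, so the following is only a hypothetical sketch of the general idea: each pixel’s grey level is stored as the conductance of one memristor, every programming pulse over- or undershoots its target by a random fraction, and repeating the program-and-correct cycle yields a cleaner copy. The conductance window `G_MIN`/`G_MAX`, the noise level `sigma`, and the correction scheme are assumptions, not details from the MIT paper.

```python
import random

# Hypothetical conductance window of one cell, in arbitrary units.
G_MIN, G_MAX = 1.0, 100.0


def pixel_to_conductance(p: int) -> float:
    """Map an 8-bit grey level (0-255) linearly onto [G_MIN, G_MAX]."""
    return G_MIN + (G_MAX - G_MIN) * p / 255


def conductance_to_pixel(g: float) -> int:
    """Invert the mapping and clamp to a valid 8-bit value."""
    p = round(255 * (g - G_MIN) / (G_MAX - G_MIN))
    return min(255, max(0, p))


def store_image(image, passes, rng, sigma=0.2):
    """Program each cell with `passes` corrective pulses; returns the conductances."""
    stored = []
    for row in image:
        stored_row = []
        for p in row:
            target = pixel_to_conductance(p)
            g = G_MIN  # assume every cell starts erased, at its lowest conductance
            for _ in range(passes):
                # Each pulse aims at the remaining gap but misses by a random fraction.
                g += (target - g) * (1.0 + rng.gauss(0.0, sigma))
                g = min(G_MAX, max(G_MIN, g))
            stored_row.append(g)
        stored.append(stored_row)
    return stored


def read_image(conductances):
    return [[conductance_to_pixel(g) for g in row] for row in conductances]


def mean_abs_error(a, b):
    diffs = [abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


image = [[16 * (r + c) for c in range(8)] for r in range(8)]  # toy diagonal gradient
for passes in (1, 2, 4):
    copy = read_image(store_image(image, passes, random.Random(42)))
    print(passes, "pass(es): mean error", round(mean_abs_error(image, copy), 2), "grey levels")
```

Averaged over the toy image, the error should fall sharply as passes increase, because each corrective pulse leaves only a small random fraction of the remaining error behind.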

Electronics capable of making instantaneous decisions without consulting other devices or the Internet open up the possibility of portable artificial intelligence systems. Although software can already simulate synaptic behavior, dedicated neuromorphic hardware could greatly expand what devices can do on tasks once thought to belong solely to the human brain.

OrganoPlate is actually a neural cluster on a chip:

https://www.mimetas.com/page/neurons-on-a-chip

“Compared to the size of a typical human brain, this new “brain-on-a-chip” is able to fit on a piece of confetti.”

I suspect there isn’t a power equivalency.

There isn’t even a size comparison. The confetti-sized piece only contains a few thousand elements, not billions like the human brain.

yeah, like some confetti is little squares, say <1×1 cm, and others can be ribbons meters long!

Well, of course not. But you have to understand that the billions of elements you are talking about are used by the brain for far more than an artificial brain requires. Because it does not need a biological body to function, it doesn’t need billions of elements to be useful in AI. Also, the thinking process would be much more efficient. Without having to deal with emotions, pain, fear, fatigue, and many other things that can interfere with a real brain’s problem solving, an artificial brain doesn’t necessarily need billions of elements to match the logical power of a real brain.

How many does it need? You sound like this is a solved problem.

My understanding is that all the BIOS functions are handled in the hindbrain, which some might call the lizard brain, but analogous structures appeared with the first insects, so it’s really the insect brain. More advanced command processing and task scheduling appear to be handled between the midbrain and the thalamus. Together, these three make up only a small part of a human brain’s volume. We may infer that all the abstract and rational thinking goes on in the rest of it, the remaining 70% or so. However, we may also need a scheduling/switching structure similar to the thalamus to coordinate higher brain function. So if you’re after human-level intelligence, I suspect you will need pretty much 80% of the neurons in a human brain.

My point was that the whole comparison was pointless. Obviously, if you limit the number of elements, a piece of circuitry can be made the size of a piece of confetti.

Whether an artificial net needs emotions, pain, fear, or fatigue depends on the problem you want it to solve.

Many of the problems that AI has to deal with are also poorly understood, or over-simplified for the sake of being able to describe them. The AI that can solve the problem as stated is far simpler than the AI it would take to solve the actual problem in the real world.

Such as driving a car. It is not enough to simply stay on the road and avoid obstacles. To perform fully, the AI needs to be able to tell a fake road sign from a real one, and that requires understanding nuances that go far beyond the immediate task of driving. Another example is language, which is not merely syntax and grammar: understanding it means understanding the culture, its history, its idioms…

Or imagine a self-driving car entering a road where an accident happened, and there’s a police officer who explains: “the road is blocked, but just follow the white truck on the sidewalk to go around”. How would the car understand that these instructions are supposed to override the normal rules of the road?

Nothing new here, no breakthrough.

It’s just a little step toward a memristor-based AI chip that can be mass-produced. Search for “memristor AI chip” and you’ll find tons of links going back more than 10 years.

I have a design capable of instantaneous decisions that’s right 50% of the time, are they doing better than that yet?

Me too, it’s called “coin”

Heh yeah, was thinking of a simple “electronic coin toss” type circuit, but the real thing works too.

I was highly amused a few years back reading an article about a trainable system of some kind, don’t know if they were neck deep in the neural paradigm then, or if it was good old fashioned computer guessing, but they said something like “Scientists have already doubled the accuracy to 22% from 11% and are confident that they will be able to double it again!”

I was worried there for a second; I thought you meant your brain :P

I prefer ketchup on chips. Perhaps blended brains? It might look similar.

I think I saw this in the 1970’s. IIRC, Laminated Mouse Brains control Planoform ships in Norstrilia by Cordwainer Smith :-)

WWII mad scientists be like “Meh, just use the whole pigeon..” https://en.wikipedia.org/wiki/Project_Pigeon

I have doubts that the Wiki is correct. One of my physics professors worked on an IR detector at the time. It was a simple 4-quadrant device, meant to be used at night: under the air-raid blackout, time-fused incendiary flares placed on rooftops by the underground would become the only bright, visible light.

He related all this to me in detail while showing me one of the prototype detectors used in tests. He also said their project lost to the pigeons! A pigeon trained to peck at a quadrant to keep a single light centered sounds a lot easier and more reliable than the daylight projection thing.
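A four-quadrant detector like the one described steers on a sum-and-difference principle: comparing the light on the right and left halves (and the top and bottom halves) gives a steering error that is zero when the spot is centered. Below is a minimal Python sketch of that idea, assuming a hypothetical quadrant layout (`a` top-left, `b` top-right, `c` bottom-left, `d` bottom-right), an idealized uniform square spot, and a simple proportional steering loop; none of these details come from the comment.

```python
def quadrant_signals(a, b, c, d):
    """Normalized steering errors from the four quadrant outputs."""
    total = a + b + c + d
    if total <= 0:
        raise ValueError("no light on the detector")
    x_err = ((b + d) - (a + c)) / total  # > 0: spot is right of center
    y_err = ((a + b) - (c + d)) / total  # > 0: spot is above center
    return x_err, y_err


def square_spot(sx, sy, half_width=1.0):
    """Hypothetical spot model: uniform square spot centered at (sx, sy).

    Returns the light falling on (a, b, c, d); the spot is assumed to stay on
    the detector, so a spot entirely in one half simply saturates the error.
    """
    def frac_positive(s):
        return min(1.0, max(0.0, (half_width + s) / (2 * half_width)))

    right, top = frac_positive(sx), frac_positive(sy)
    left, bottom = 1.0 - right, 1.0 - top
    return left * top, right * top, left * bottom, right * bottom


def track(sx, sy, gain=0.5, steps=30, half_width=1.0):
    """Repeatedly steer against the error; returns the final spot offset."""
    for _ in range(steps):
        x_err, y_err = quadrant_signals(*square_spot(sx, sy, half_width))
        sx -= gain * x_err * half_width
        sy -= gain * y_err * half_width
    return sx, sy


print(track(0.6, -0.4))  # starts inside the linear range
print(track(2.5, 0.0))   # starts with the x error saturated
```

Inside the linear range each step halves the offset with `gain=0.5`; when the spot sits wholly in one half the error saturates at ±1 and the loop simply steps toward the center at a fixed rate until the spot straddles the axes again.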

He didn’t mention the glide bombs, but one can see them as a good way to keep bombers away from the dense searchlights and AAA surrounding major industrial cities. My impression was that these were gravity bombs meant to achieve pinpoint accuracy.

For some background, read “Chua, Leon O. Handbook of Memristor Networks. 2019.” You can find it on libgen if you can’t physically access a university library at the moment.

“A concatenation of “memory” and “resistor””: no, it’s not. It’s a portmanteau; a concatenation would just be “memoryresistor”.