Over the past decade, we’ve seen great strides made in the area of AI and neural networks. When trained appropriately, they can be coaxed into generating impressive output, whether it be in text, images, or simply in classifying objects. There’s also much fun to be had in pushing them outside their prescribed operating region, as [Jon Warlick] attempted recently.

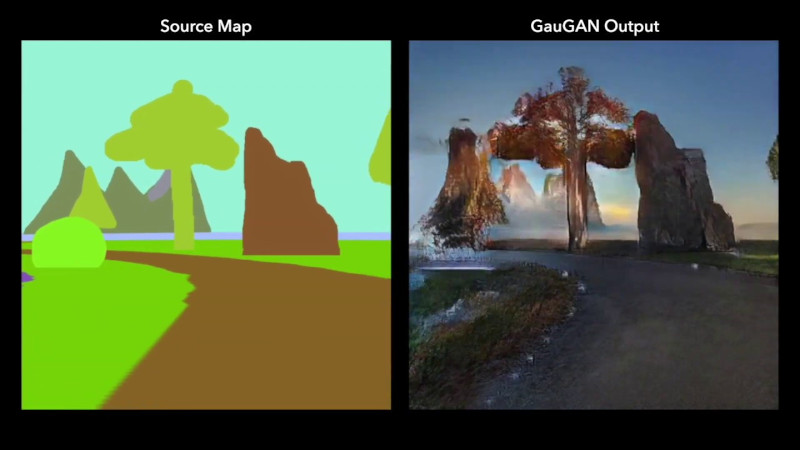

[Jon]’s work began using NVIDIA’s GauGAN tool. It’s capable of generating pseudo-photorealistic images of landscapes from segmentation maps, where different colors of a 2D image represent things such as trees, dirt, or mountains, or water. After spending much time toying with the software, [Jon] decided to see if it could be pressed into service to generate video instead.

The GauGAN tool is only capable of taking in a single segmentation map, and outputting a single image, so [Jon] had to get creative. Experiments were undertaken wherein a video was generated and exported as individual frames, with these frames fed to GauGAN as individual segmentation maps. The output frames from GauGAN were then reassembled into a video again.

The results are somewhat psychedelic, as one would expect. GauGAN’s single image workflow means there is only coincidental relevance between consecutive frames, creating a wild, shifting visage. While it’s not a technique we expect to see used for serious purposes anytime soon, it’s a great experiment at seeing how far the technology can be pushed. It’s not the first time we’ve seen such technology used to create full motion video, either. Video after the break.

I have a different interpretation of photo realistic. Very interesting nonetheless.

yea … photorealistic, I think one does not mean what that word means… I cant even call the results nice, its just a high res tilemap on a camera system, and it looks the part

Nice idea. Interesting results. But could someone show him how to do scripting, to automate the load/generate/save process for each frame?

Or use something like sikulix if to simulate the clicking of buttons and a but of python the generate sequential filenames.

Single image to show how Sikulix works: https://avleonov.com/wp-content/uploads/2017/02/script-2.png

Great. We’ve now got computers as bad as 90% of the population at being creative and talented in art. Yeah team!

I wonder if that algorithm can turn badly taken pictures e.g. bad focus, blurry, under/over exposed etc into better looking ones. Pictures carry much more info than those Windows paint ones.

This is impressive, but it’s not photorealistic nor video. It’s a sequence of somewhat realistic still frames. Turning a method which works well for single frames to produce a coherent video is much harder than a “for loop”.

This is your brain on drugs!

Exactly. Neural networks are a simulation of a severely limited brain, since the real thing is still not fully understood.

Groovy!

Who knew Vincent Van Gogh was a computer program?

Awsome, in the same way that a car with square wheels is. I’ll just be over in the corner having an epileptic fit, let me know when the technology really does work.

Actually they aren’t.

NNs are an ABSTRACTION, of ONE aspect, of the way neurons work.

It’s a VERY important distinction. When you optimize an abstraction, you really REALLY have to stop yourself from generalizing any ideas based off it (or it’s results).

It’s not about whether or not we understand the brain.

It’s about NNs NOT being LIKE brains.

A Car and a skateboard are both “4 wheeled vehicles that can be used for transportation”. But there are things a Car can do that a skateboard can’t. And the reverse is true.

the video you wanted:

https://youtu.be/dqxqbvyOnMY

Worst Mario Kart track ever!

I have been trying to achieve a similar affect with a program called BeCasso. The program that I am using is the exact opposite. It is designed to take photos and turn them into traditional forms of art. By playing with the settings I can re-create different textures and then reassemble the Picture using auto desk sketchbook. I have been using this process to give hand drawn pictures extreme levels of detail. I was planning on running experiments with video the same way that this man did. It is my intention to use this to make 2-D animation realistic enough and easy enough to compete with big Hollywood productions. There are a lot of things that are much easier to do with 2-D animation like animating clothing and hair. If I can get a computer to make the hair photo realistic then all I have to do is make sure that the movements are realistic.

Here is an idea. What if instead of rendering it at 24 frames per second you rented it out five frames per second and then used a separate program to do the work of filling in the missing frames. That should get rid of the jerky look of the video.