What do you do whilst your code’s compiling? Pull up Hackaday? Check Elon Musk’s net worth? Research the price of a faster PC? Or do you wonder what’s taking so long, and decide to switch out your build system?

Clamber aboard for some musings on Makefiles, monopolies, and the magic of Ninja. I want to hear what you use to build your software. Should we still be using make in 2021? Jump into the fray in the comments.

What is a Build Tool Anyway?

Let’s say you’ve written your C++ program, compiled it with g++ or clang++ or your compiler flavor of the week, and reveled in the magic of software. Life is good. Once you’ve built out your program a bit more, adding code from other files and libraries, these g++ commands are starting to get a bit long, as you’re having to link together a lot of different stuff. Also, you’re having to recompile every file every time, even though you might only have made a small change to one of them.

People realised fairly early on that this sucked, and that we can do better. They started to make automated software that could track compilation dependencies, track which bits of code were tweaked since the last build, and combine this knowledge to automatically optimise what gets compiled – ensuring your computer does the minimum amount of work possible.
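The "what changed since the last build" check can be sketched in a few lines. This is a minimal illustration that assumes file modification times are the only signal, and `needs_rebuild` is a made-up helper name, not part of any real build tool:

```python
import os


def needs_rebuild(target, sources):
    """Return True if target is missing or older than any of its sources."""
    if not os.path.exists(target):
        return True  # never built, so it has to be built now
    target_mtime = os.path.getmtime(target)
    # Any source modified after the target was produced makes it stale.
    return any(os.path.getmtime(src) > target_mtime for src in sources)
```

A build tool would run a check like this for every output file and only re-run the compiler where it returns `True`.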

Enter: GNU Make

Yet another product of the famous Bell Labs, make was written by [Stuart Feldman] in response to the frustration of a co-worker who wasted a morning debugging an executable that was accidentally not being updated with changes. Make solves the problems I mentioned above – it tracks dependencies between sources and outputs, and runs complex compilation commands for you. For decades, make has remained utterly ubiquitous, and for good reason: Makefiles are incredibly versatile and can be used for everything from web development to low level embedded systems. In fact, we’ve already written in detail about how to use make for development on AVR or ARM micros. Make isn’t limited to code either: you can use it to track dependencies and changes for any files – automated image/audio processing pipelines anyone?

But, well, it turns out writing Makefiles isn’t actually fun. You’re not adding features to your project, you’re just coercing your computer into running code that’s already written. Many people (the author included) try to spend as little of their life on this planet as possible with a Makefile open in their editor, often preferring to “borrow” others’ working templates and be done with it.

The problem is, once projects get bigger, Makefiles grow too. For a while we got along with this – after all, writing a super complex Makefile that no-one else understands does make you feel powerful and smart. But eventually, people came up with an idea: what if we could have some software generate Makefiles for us?

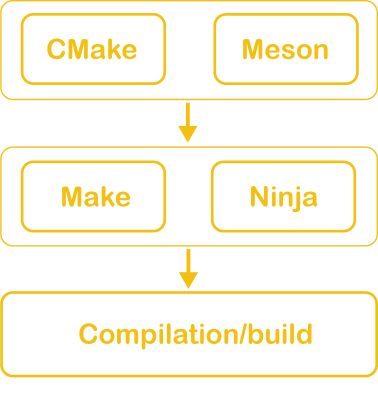

CMake, Meson, Autotools et al

Yes, there are a sizeable number of projects concerned only with generating config files purely to be fed into other software. Sounds dumb right? But when you remember that people have different computers, it actually makes a lot of sense. Tools like CMake allow you to write one high-level project description, then will automatically generate config files for whatever build platforms you want to use down the line – such as Makefiles or Visual Studio solutions. For this reason, a very large number of open source projects use CMake or similar tools, since you can slot in a build system of your choice for the last step – everyone’s happy.
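To make the "one description, many backends" idea concrete, here is a toy sketch. It is emphatically not how CMake works internally: the `PROJECT` dictionary and both function names are invented for illustration, and only the most basic Makefile and Ninja syntax is emitted.

```python
# Hypothetical high-level description: object file -> list of source files.
PROJECT = {
    "main.o": ["main.cpp"],
    "util.o": ["util.cpp"],
}


def to_makefile(objects, compiler="g++"):
    """Emit one Makefile rule per object (recipe lines start with a tab)."""
    lines = []
    for obj, srcs in objects.items():
        joined = " ".join(srcs)
        lines.append(f"{obj}: {joined}")
        lines.append(f"\t{compiler} -c {joined} -o {obj}")
    return "\n".join(lines) + "\n"


def to_ninja(objects, compiler="g++"):
    """Emit a single Ninja rule plus one build statement per object."""
    lines = ["rule cxx", f"  command = {compiler} -c $in -o $out"]
    for obj, srcs in objects.items():
        lines.append(f"build {obj}: cxx {' '.join(srcs)}")
    return "\n".join(lines) + "\n"


print(to_makefile(PROJECT))
print(to_ninja(PROJECT))
```

The project description stays the same; only the last step changes depending on which backend you want to feed.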

Except, it’s really quite hard to tell if everyone is happy or not. As we know, when people selflessly spend time writing and maintaining good quality open source software, others are very kind to them online, do not complain, and do not write passive-aggressive blog posts about 27 reasons why they’re never using it again. Just kidding!

What I’m getting at here is that it’s hard to judge popular opinion on software that’s ubiquitous, because regardless of quality, beyond a critical mass there will always be pitchfork mobs and alternatives. Make first appeared in 1976, and still captures the lion’s share of many projects today. The ultimate question: is it still around because it’s good software, or just because of inertia?

Either way, today its biggest competitor – a drop-in replacement – is Ninja.

Ninja

Ninja was created by [Evan Martin] at Google, when he was working on Chrome. It’s now also used to build Android, and by most developers working on LLVM. Ninja aims to be faster than make at incremental builds: re-compiling after changing only a small part of the codebase. As Evan wrote, reducing iteration time by only a few seconds can make a huge difference to not only the efficiency of the programmer, but also their mood. The initial motivation for the project was that re-building Chrome when all targets were already up to date (a no-op build) took around ten seconds. Using Ninja, it takes under a second.

Ninja makes extensive use of parallelization, and aims to be light and fast. But so does every other build tool that’s ever cropped up – why is Ninja any different? According to Evan, it’s because it didn’t succumb to the temptation of writing a new build tool that did everything — for example replacing both CMake and Make — but instead replaces only Make.
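As a rough illustration of why a dependency graph lends itself to parallel builds, the sketch below groups targets into "waves" that have no dependencies on each other. It uses Python's standard `graphlib` module (Python 3.9+). The `DEPS` graph is hypothetical, and this is not Ninja's actual scheduler: a real tool would likely start each job as soon as its own inputs are ready rather than waiting for a whole wave.

```python
from graphlib import TopologicalSorter

# Hypothetical graph: each target maps to the things it depends on.
DEPS = {
    "app": {"main.o", "util.o"},
    "main.o": {"main.cpp"},
    "util.o": {"util.cpp"},
}


def parallel_waves(deps):
    """Group nodes into batches whose members do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorter.get_ready()  # everything whose dependencies are done
        waves.append(sorted(ready))
        sorter.done(*ready)         # mark the batch finished, unlocking more
    return waves


print(parallel_waves(DEPS))
# [['main.cpp', 'util.cpp'], ['main.o', 'util.o'], ['app']]
```

The source files form the first wave because nothing has to happen before they are available. The two object files can then be compiled side by side, and only the final link has to wait for both.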

This means that it’s designed to have its input files generated by a higher-level build system (not manually written), so it integrates easily with the backend of CMake and others.

In fact, whilst it’s possible to handwrite your own .ninja files, it’s advised against. Ninja’s own documentation states that “In contrast [to Make], Ninja has almost no features; just those necessary to get builds correct. […] Ninja by itself is unlikely to be useful for most projects.”

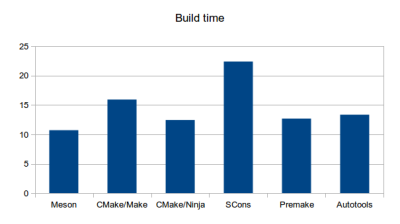

Above you can see the differences in incremental build times in a medium-sized project. The two Ninja-based systems are clear winners. Note that for building the entire codebase from scratch, Ninja will not be any faster than other tools – there are no shortcuts to crunching 1s and 0s.

In his reflections on the success and failure of Ninja, Evan writes that:

“The irony of this aspect of Ninja’s design is that there is nothing preventing anyone else from doing this. Xcode or Visual Studio’s build systems (for example) could just as well do the same thing: do a bunch of work up front, then snapshot the result for quick reexecution. I think the reason so few succeed at this is that it’s just too tempting to mix the layers.”

It’s undeniable that this approach has been successful, with more and more projects using Ninja over time. Put simply, if you’re already using CMake, I can’t see many reasons why you wouldn’t use Ninja instead of make in 2021. But I want to know what you think.

Over to you

It’s impossible to write about all the build tools around today. So I want to hear from you. Have you switched from make to Ninja? Do you swear by Autotools, Buck or something else? Will make ever go away? Will there ever be a tool that can eclipse them all? Let me know below.

WAF best, smallest

WAF is unbelievably slow

The original Perforce Jam is quite small but mostly abandoned in favor of one of the MANY forks.

tup is small and very fast.

I always have GNU make handy, so I just use that even though I really appreciate Jam. Easiest thing to do is use what is on hand and not install extra tools.

I always use make (gnu make these days) and always will most likely. When I leave a project it is a collection of source files and a Makefile. In many ways the makefile is a vital and important piece of project documentation. I or anyone else can come along years later and just type “make” to rebuild the project. All the compiler options, necessary libraries and such don’t need to be rediscovered. No IDE based development for me, there is too much that is not preserved and what do you do if you clone the project and don’t have the requisite IDE?

Build times are truly irrelevant these days, and fretting over them is wasted energy. Unless perhaps you are doing something like rebuilding the linux kernel. In the old days when I was running on 1 mips sun workstations, I had my build system beep and would read a page or two in a book, but those days are long gone. One project I currently work on has 30,000 lines of code or so and compiles in a couple of seconds. Not worth fussing over.

I also use make, due to inertia. Many of the replacements are very bad. I’m exposed to ant/gradle for example and they seemingly break things with every minor release, have far-flung dependencies, are very slow, and (the only one that really matters to me) are fantastically opaque.

But I disagree entirely that build times are irrelevant. Even one second waiting for the compiler to come back will harsh my development pattern. It’s great that modern CPU technology can build a whole large project from scratch in under a minute, but the incremental build to test what I’m working on right now still matters and that should not be any longer than necessary. It’s not that I’m impatient; it’s that I’m less productive when there’s a delay in my conversation with the compiler.

This is well-studied. Programmers gain significant productivity each time the build time is reduced in half, all the way down to single-second builds. I have many processes that I have to iterate and if it’s punctuated by a 10 second build, it’s pulling teeth but if it’s less than a second then the whole process goes quickly and easily.

30K lines of code is pretty small, so it prob doesn’t make much diff what you use.. My current project takes about 30 seconds to make – mostly re linking – and about 15 minutes to do a full compile – and that’s on a fast machine.

Make. GNU Make, at that.

For bigger things, autotools. Once I discovered how easy it is to manage cross-build (build on x86_64 for Arm, for example), I realised those pay off big time for the investment (which does seem high at first!).

I am aware that those tools (Make, Autotools) carry a lot of legacy, and that it’d be desirable to streamline things a bit.

But all alternatives I looked at are great at throwing out lots of babies with the bathwater; sometimes the whole bathroom is gone, too.

There is a ton of multi-architecture fiddling baked in in Autotools. Whoever wants to sell me an alternative has to prove that (s)he has taken that into account. Things like “but it’s in Python!” are just irrelevant frills to me.

For specifically Clojure, Leiningen is very well made. I wish more build tools could learn from their example.

I thought you were going to talk about mechanical tools.

+1

WAF (Wife Acceptance Factor) for tools also.

When I read this headline, I was all set to tell the world about my most favoritest hardware hacking implement. So here it is:

I couldn’t live without my little handheld Dremel.

Perhaps the most used tool in my shop!

8200 FTW. Cutting, sanding, grinding, drilling pilot holes. It really is the most used tool.

Step bits revolutionized my mechanical builds. And, surprisingly, the Harbor Freight bits have outperformed many expensive ones at a fraction of the price.

In my part of the world we call them Step-Drills.

My first one was a set of 3 sizes in a display pack. Once I had tried them I was sold! And you don’t need a box in which to store numerous bits of many sizes.

I usually work with Stainless Steel (Inox) which is very hard (especially the common 304 grade).

The last Step Drill I bought is coated with some miraculous material that extends drill life by months. I managed to locate a Step Drill whose smallest size is 1.5 mm with 0.5 mm steps up to 5 mm. I bought 10 of them when I popped up to China a couple of years ago and still have 8 left.

Worth every Yuan I spent.

Visual Studio project is pretty good because it can be configured by clicking and it works.

But its support outside of Windows isn’t so great, the project and solution files aren’t easy to tweak manually, and it is so slow.

I worked on a large Windows-based project that consisted of about 40 separate .sln files which could be built in sequence using a custom perl script. Each .sln file was manageable individually, but the dependencies between them became a problem, so we switched to a single .sln file encompassing everything. That was better, but it took around 15 minutes to load the .sln into the IDE! Once it was loaded, performance was ok. A full build from scratch took around eight hours, but a dependency-based build with a trivial change took minutes.

The long load time discouraged developers from frequently syncing their code with the main repository.

SCons is oft criticized due to build times that are typically one or two orders of magnitude slower. Does it matter that cmake or ninja does it in 0.1 sec while SCons does it in 1 or 2 seconds? Unlike some other stuff mentioned in the article, SCons is a complete build system.

Autotools is a dark art and seldom suitable for the discerning engineer that knows and appreciates the difference between an ale and a lager.

Meson is an installation nightmare – at least for me on my Slackware boxes. It seems to be targeted at the windoze community where ‘fire-and-forget’ technology is pro forma.

Cmake is, for me, staid and reliable.

Ninja is a ‘pure’ make, so does not generate build scripts. Ninja is typically faster than make.

My choice has been, for about 10 years, SCons and CMake. SCons is profoundly maintainable. SCons is reliable, robust, and easy to implement for any and all tools I use. A nephew, who is much smarter than I am, uses SCons + Ninja, but I suspect that is for the ‘cool’ factor, and because his girlfriend uses Ninja.

Autotools is a dark art and seldom suitable for the undiscerning engineer that doesn’t know or appreciate the difference between an ale and a lager. It’s for people who care about which strain of yeast will be used for making the ale, dependent upon geographic region, type of local grains, available brewing equipment, time of year and dialect spoken by local brewers.

Claiming that Autotools users can’t tell the difference between an ale and a lager? As a heavy user of Autotools, I find your analogy about as far from reality as it gets. Autotools is for when you care about way too much detail.

Simple make here. Once you have a working ‘template’ it is easy to modify and use on other projects. So far it is all I’ve needed. Works well for all the C/C++ and other projects I ever worked on.

Now the RPi Pico C SDK infrastructure is built around CMake. Seems overly complicated, but then I am not used to it. Might grow on me….

Make for me. (Cmake is always telling me, “you got the wrong version, bro.” I’ve learned to hate it for that reason.)

I’m waiting 10-20 years for CMake to feature stabilize before I use it for anything too serious. I hate coming back to an idle project a few years later to find out that even the build system needs to be rewritten. Kind of sucks all the motivation out of me.

Make for the past 40-odd years, and GNU make more recently. And when the project is large enough and needs to be built on multiple architectures, autotools, though I do sometimes feel autotools is a write-once language if you don’t use it frequently.

I recently dug up some early genetic algorithm C code from 30 years ago, untarred it on an Ubuntu box, typed make and it mostly just compiled and ran.

Cmake is ok, but version rot drives me slightly nuts.

We had a largish project we had a contractor write around 15 years ago in Java (shudder), and he used an IDE with Ant. After a few years it stopped building due to multiple hidden dependency hell and the nightmare of Java versioning.

On Windows, Visual Studio is ok, and I’ve successfully imported ancient projects that mostly still build with minor tweaks.

I’m going to take a look at ninja though, the speed up for larger applications sounds appealing.

I’m old-school, GNU Make, and a bit of autotools for me. If I encounter something else, I try and find an alternative. Managing all those damned libraries, dependencies, and other cruft that things like ninja require (And don’t get me started on rust). I still have nightmares from trying to get a library to build and one of the first lines in the build instructions contained the command “docker pull ….”.

I work on embedded OS development, so simplicity and size are far more important to me than speed of compiling. Not that compiling actually takes that long either. I go all unix on my code and make a bunch of tiny binaries that just do a single thing each. Each of them takes less than 20 seconds to compile, even without cache. A while back, as an exercise, I built a working web browser that was really just a pile of small binaries that either called each other or other utilities like wget and awk.

At this point, my development workstation is pretty much just 1 GB (compiled size) of various stuff that takes a whole 3 hours of wall time to compile. And that is everything from my boot loader and kernel all the way through to my IDE, web browser, and office suite. My server version takes less than 1 hour and 250 MB of disk to compile. (Really, it’s just a Linux kernel, busybox, openssl plus whatever service is intended to run on top, so dnsmasq/git/dovecot+opensmtpd/openldap/nfs-utils.)

30 years ago there was imake to build X & Motif. The O’Reilly book very appropriately had a snake on the cover. Imake attempted to use the C preprocessor to modify Makefiles. Except that not all C compilers had the preprocessor as a separate program. So imake carried around its own C preprocessor. Absolutely pathological complexity.

I simply installed Gnu make, wrote a single Makefile with includes for the peculiarities of different platforms. By doing that I easily supported any platform brought to me and actually did a few ports that no one asked for just to have something to do while I waited for something else to complete.

As Stu is famous for remarking, he made a terrible error by distinguishing between tabs and whitespace. And worse, not trimming off trailing whitespace. But we learned to live with it and I built tools to check after spinning my wheels for much of a day because of a trailing blank in a symbol definition.

Autotools is imake reinvented. Octave will not compile on a Solaris system or derivative because the Octave team has borked the configuration. Octave blames autotools and autotools blames Octave. R is as large and complex as Octave and I had no trouble building it, so I’m sure who is to blame.

The idiocy of autotools is it’s not the 1990’s. There are not a couple dozen different proprietary flavors of *nix. There are the BSD, Linux and Solaris families left with the latter slowly dying out. Autotools tests for differences which no longer exist and hides errors behind a dozen layers of obfuscation just as imake did.

Autotools is the analog of buggy whips for people with self driving cars.

I do love those configure scripts that test to see if you are running on a Cray or a VAX.

I do a ton of cross-compiling and setting up projects for other people to cross-compile. So our host build machine may be Ubuntu or Windows, but the target may be any number of embedded (ARM-32, ARM-64, MIPS) or traditional PC (Windows 32, 64, Linux) platforms. For this kind of flexibility, you just can’t beat CMake! There was a little bit of a learning curve, but not much. WAY easier than making Makefiles manually. You don’t need to keep Visual Studio Projects / Solutions in sync with the Linux Makefiles. Toolchains, C flags, etc., are always correct. I find generating a new build for a new target very satisfying when CMake makes it easy!

Google’s open-sourced build system, Bazel.

Encourages stating dependencies explicitly to produce hermetic builds.

Support for remote execution

and remote build cache.

The remote cache can really speed up builds when you switch between e.g. git branches or if your colleague has already built the lib you needed to depend on.

It has support for querying your build rules for dependencies. And provides good feedback when there are syntax errors in the build files.

And there’s a large and growing community around it.

https://en.wikipedia.org/wiki/Bazel_(software)
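The build cache idea mentioned in the comment above can be sketched as a store keyed by a hash of the command and the contents of its inputs. This is a toy, in-memory illustration only: `BuildCache` and its methods are invented names, and nothing here reflects Bazel's real cache protocol or API.

```python
import hashlib


class BuildCache:
    """Toy content-addressed cache: key = hash of command + input contents."""

    def __init__(self):
        self._store = {}  # stand-in for a shared remote store

    @staticmethod
    def key(command, inputs):
        h = hashlib.sha256()
        h.update(command.encode() + b"\0")
        for name in sorted(inputs):  # sorted so the key is order-independent
            h.update(name.encode() + b"\0")
            h.update(inputs[name] + b"\0")
        return h.hexdigest()

    def get_or_build(self, command, inputs, build):
        """Return the cached output, calling build() only on a cache miss."""
        k = self.key(command, inputs)
        if k not in self._store:
            self._store[k] = build()
        return self._store[k]
```

If a colleague has already built the same command with byte-identical inputs, the key matches and the work is skipped. This only holds if every input that affects the output is declared, which is where explicit dependencies and hermetic builds come in.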

My favorite build tool is the Arduino IDE. It does everything very well. There are no separate makefiles to consider, it has library package management, creating and using libraries is nearly frictionless.

For everything except embedded, I’ll leave C/C++ work to those brave men and women who seem to actually enjoy low level infrastructure work, they’re just not languages I’d choose to use for applications.

I’ll just stick to modern languages like Python and JS, which often don’t need separate build tools and fully specify everything about how packages work. C/C++ almost gives you the impression they don’t *really* want you to be using too many dependencies, or writing anything all that big.

The fact that we need all this build management crap in C reflects the fact that the language itself is based on header files and lots of globally available names and doesn’t focus on explicit modules and namespaces like Python. Managing builds is inherently complex with C/C++.

Modern languages seem like they were designed from the ground up for million line, 30 library projects, and they handle it all very well.

C/C++ is at its best when you don’t need anything like ninja or CMake. When you have something that’s both big enough to need it, and performance critical enough to need C in the first place, the build system is probably pretty far down on your list of challenges.

My favorite tool is RNA.

mRNA

ATP

Hate makefile with a passion.

This is the biggest reason I don’t use gentoo.

It seems that when a program gets big enough, you will always get a makefile error and ruin the minutes or hours… that you put into compiling.

I love Make for its simplicity and flexibility. And that it is completely language and os agnostic. It is just as happy generating code from a DSL as checking things out of a repository as running testcases as generating documentation as compiling C.

The main drawback of Make is that it is very difficult to compile C code that supports multiple platforms. For those cases, and when no-op builds take longer than a second or two, I have used CMake and SCons. For other cases, the added complexity of the tools is just not worth it.

I hate IDEs with built-in make systems as these make the code non-portable. They also hide many details that are incredibly important for serious developers.

I suggest Ruby’s Rake. It’s very similar to make so it’s easy to transition. It’s also a very mature tool. It’s the main build tool used by Ruby on Rails projects, and comes preloaded with Ruby.

Here’s a base stm32 project using it: https://github.com/Ackleberry/stm32f3xx_c_scaffold

I suggest Rust’s Cargo

If it ain’t broken, don’t fix it.

First, I start with a command line, and the up arrow to do it again. cc foo.c ; a.out

Second, when I get a few files and a library, a batch file or shell script buildme{,.bat}

Lastly, when there’s enough time, and enough files in the project, bog standard make.

And if I had my way, K+R C, none of this new-fangled crap.

Well… here’s the thing.

Makefiles are just recipes. You tell it how to build a program. You can tell it what targets to use. You have to tell it what dependencies you have. You have to tell it what order to build it. GNU Make is fairly smart, but sometimes you gotta tell it what to do down to “Use this to compile that.”

Autoconf adds some system configuration probing, and will write out a Makefile from a pattern, as well as a config.h include file. It’s fairly intelligent at finding stuff but you gotta babysit it, clean it out between runs, etc etc etc. It is GNU’s answer to “We got too many Unixen that aren’t even POSIX compliant. We gotta probe for those quirks.”

And you thought Perl’s build process was bad enough — it builds it three times over! One with a minimal set that works everywhere, one with all of the system configuration suss’ed out but no plugin system, then one with everything and those plugins.

CMake tries to automatically work all that out, but it’s not quite as smart as Visual Studio. You have to tell it about libraries, what configuration is needed, etc. It works fairly well. Raspberry Pi Pico’s C/C++ SDK uses this. But you still have to edit a CMake file.

But I think to truly get things right, you have to pre-process the code with some hints. Visual Studio does this — you have to tell it the libraries (references) the project needs, and it knows about the language you’re using, so it’ll scan and make that order for the build process. This reference mapping is updated in real-time, so building is fast.

(defsystem …. :patchable T) From the MIT-derived Lisp machines. It had the ability to do all sorts of fancy dependencies between files, which most people didn’t bother with. The thing that did it for me was the patch management system.

Since the system supported dynamic replacement of individual functions, you could add just changed functions to a tracked lump, that could be loaded into running systems, to fix things. The editor was tied into the system, and it would find only the individual functions you changed, and lead you by the hand thru constructing the patch file.

Eventually you would recompile a system, and declare a new major version. Since this was 30 years ago, the machines were slower, so a complete recompile of a system with 250K lines of code could take 24 hours or more.

My favourite is allowing the experienced devs to do builds, along with their (our) friend BuildBot. I am best used elsewhere. Personally, I enjoy the mind and wisdom that has been given to me as my best tool.

I don’t understand why “jam” never caught on. It looks much like Make, but much smarter: it generates dependencies, which make does not.

It looks like a straightforward upgrade, unlike many of the other competing build systems, which are very different to make.

I found out about “jam” only after Perforce dropped support for it, so I guess that’s a blessing in disguise. I would have been all Don Quixote championing jam otherwise.

I might still use it myself, I don’t know. But I won’t expect it to take over the world.

CMake isn’t a tool; it’s a horrendous atrocity, a nightmare of bad design. It’s the worst excuse for a build system that has ever existed. In fact it’s probably the worst piece of software ever conceived. I’ve never seen worse for certain. CMake learned nothing from the past, insisting on making all possible mistakes in one poor excuse for a program. There couldn’t be a bigger pile of steaming ugly C++ to do what is in the end a simple job any shell script can do.

It makes Metaconfig look awesome, and it makes GNU Autotools (though maybe not including libtool) look like a sleek racing car. Imake is an elegant delight in comparison. I’m serious.

Please do not use CMake! CMake is a mistake.

I like Make, and Make all by itself is very good, if you use it in the “pull” way, letting dependencies drive the action, and not in the “push” way, as a sort of a script driver. I find this upside-down thinking is the main cause of problems in build setups. People want to force things from the top, and “build it all”, rather than just focussing on one target that is the end result, and letting its dependencies (on its sub-components) cause everything else to happen. Yes, you have to learn Make, but it’s rational, and it can be learned, it’s not that big. I don’t use half of the “new” (20 year old) features. My projects are not as complex as some, but I’ve seen plenty of large projects that use only make and work very well. Yes, some use autoconf and such to sort out *nix differences, but in the end the whole project is a tree of makefiles, and I don’t see why anything more is needed.
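The "pull" style described above can be sketched as a walk that starts from one goal and only visits what that goal depends on. The `RULES` table and `build_order` function are hypothetical names for illustration, and the sketch does no cycle detection or staleness checking.

```python
# Hypothetical rules: target -> list of prerequisites.
RULES = {
    "app": ["main.o", "util.o"],
    "main.o": ["main.c"],
    "util.o": ["util.c"],
    "docs": ["manual.md"],
}


def build_order(target, rules, done=None):
    """Return the targets needed for one goal, prerequisites first."""
    if done is None:
        done = []
    for dep in rules.get(target, []):
        build_order(dep, rules, done)  # pull in prerequisites first
    # Plain source files have no rule, so they are never "built".
    if target in rules and target not in done:
        done.append(target)
    return done


print(build_order("app", RULES))
# ['main.o', 'util.o', 'app']
```

Note that `docs` never appears: nothing the goal depends on pulls it in, which is the opposite of a "push" script that runs every step from the top regardless.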

Some of the best build systems I have ever encountered are the busybox/buildroot system and the Linux kernel. Both are Makefile-based. Autotools, though horribly complicated (I created one of the first .m4 files for cross-compiling python and boost), works... mostly. People didn’t like these tools, so they made scons and cmake, both of which *STILL* use autotools and makefiles. Then along comes meson, which breaks backwards compatibility with each release and STILL uses autotools and makefiles. Now if you want to compile new code on an older OS, you have hell because meson/ninja are too old, cmake is too old, and you have a complete disaster on your hands. All these build tools do is add more complexity and more layers and more dependencies and less backwards (and forwards) compatibility. There are good reasons people cannot run the latest code (Red Hat, anyone:)? I’ve gone back to a simple, well constructed Makefile based on either buildroot or Linux, because they still just work and don’t have horribly complicated build stacks that work fine until they don’t. When you make a new build system, if you have to rely on the build system you don’t like, guess what? You’ve just failed miserably. I’m all for a better build system, but not at the cost of compatibility and simplicity.

Stay away from automake/autoconf. It is not backward compatible once they up the version.