IEEE Spectrum had an interesting post covering several companies trying to sell voice programming interfaces. These aren’t programming APIs for speech recognition, but replacements for the traditional text editor as the way you produce programs.

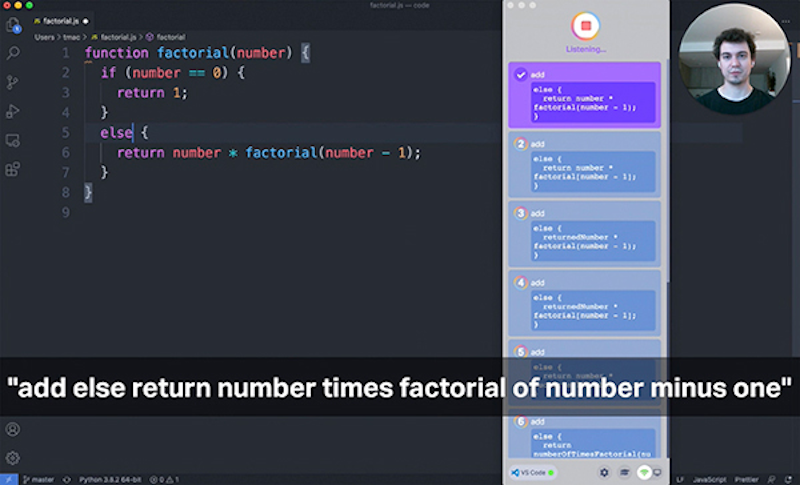

The two companies featured, Serenade and Talon, have very different styles. Serenade accepts fairly normal-sounding language, whereas Talon has you use very specific phrases and can even use eye tracking to figure out what you are looking at when you issue a command. There’s also mention of two open-source projects (Aenea and Caster) that require a third-party speech engine.

For an example of Talon’s input, imagine you want this line of code in your program:

`name=extract_word(m)`

You’d say this out loud: “Phrase name op equals snake extract word paren mad.” Not exactly how Star Trek envisioned voice programming.
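To make the mechanics of that utterance concrete, here is a minimal Python sketch of a toy translator. It assumes a hypothetical grammar loosely modeled on the example: `phrase` inserts the following words as-is, `snake` joins them with underscores, `op equals` emits `=`, `paren` emits a bracket pair with the cursor between the two halves (as an auto-pairing editor would), and `mad` is the letter m. The command tables, the `translate` function, and the cursor model are all assumptions for illustration, not Talon’s actual grammar or API.

```python
# Hypothetical, simplified voice-to-code translator.
# The command words imitate the Talon example in the post, but this is
# NOT Talon's real grammar or API.

ALPHABET = {"air": "a", "bat": "b", "mad": "m"}  # assumed spoken letter names
SYMBOLS = {"op equals": "="}                     # multi-word operator commands
FORMATTERS = {
    "phrase": lambda words: " ".join(words),     # insert words as spoken
    "snake": lambda words: "_".join(words),      # snake_case the words
}


def translate(utterance: str) -> str:
    """Turn a spoken command string into a line of code."""
    tokens = utterance.lower().rstrip(".").split()
    before, after = "", ""  # text left and right of a simulated cursor
    i = 0

    def starts_command(j: int) -> bool:
        # A command begins at j if the word is a formatter, a letter,
        # "paren", or the first word of a multi-word symbol command.
        word = tokens[j]
        if word in FORMATTERS or word in ALPHABET or word == "paren":
            return True
        return any(tokens[j:j + len(s.split())] == s.split() for s in SYMBOLS)

    while i < len(tokens):
        word = tokens[i]
        if word in FORMATTERS:
            # Collect free-form words until the next command word.
            j = i + 1
            while j < len(tokens) and not starts_command(j):
                j += 1
            before += FORMATTERS[word](tokens[i + 1:j])
            i = j
        elif word == "paren":
            # Assume the editor auto-closes brackets: "(" left, ")" right.
            before += "("
            after = ")" + after
            i += 1
        elif word in ALPHABET:
            before += ALPHABET[word]
            i += 1
        else:
            for spoken, symbol in SYMBOLS.items():
                n = len(spoken.split())
                if tokens[i:i + n] == spoken.split():
                    before += symbol
                    i += n
                    break
            else:
                raise ValueError(f"unrecognized word: {word!r}")
    return before + after
```

Calling `translate("Phrase name op equals snake extract word paren mad.")` returns `name=extract_word(m)`. Every symbol and every change of formatting needs its own spoken keyword, which is why the dictation sounds so little like ordinary speech.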

For accessibility, this might be workable. Otherwise, it is hard for us to imagine a room full of developers all talking at their computers to enter C or Python code. Until we can say, “Computer, build a graphic using the data in file hackaday-27,” we don’t think this is going to go mainstream.

The actual speech recognition part is pretty much a commodity now. Making a reasonable set of guesses about what people will say and what they mean by it is something else. It seems like this works best when you have a very specific and limited vocabulary, like operating a 3D printer.
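One way to see why a small vocabulary helps: when only a handful of commands are valid, even a misheard transcript can be snapped to the nearest legal phrase. The sketch below assumes a hypothetical three-command table for a 3D printer and uses Python’s standard `difflib.get_close_matches` as a stand-in for whatever matching a real product does. G28, M106, and M107 are standard RepRap-style G-codes (home, fan on, fan off); the spoken phrases and the `match_command` helper are illustrative only.

```python
import difflib
from typing import Optional

# Hypothetical spoken-command table. The G-codes are standard RepRap/Marlin
# commands; the phrases are made up for this example.
COMMANDS = {
    "home all axes": "G28",
    "fan on": "M106",
    "fan off": "M107",
}


def match_command(heard: str, cutoff: float = 0.6) -> Optional[str]:
    """Snap a (possibly misrecognized) transcript to the closest known phrase.

    Returns the G-code for the best match, or None if nothing in the
    vocabulary is similar enough to be trusted.
    """
    matches = difflib.get_close_matches(heard.lower(), list(COMMANDS), n=1, cutoff=cutoff)
    return COMMANDS[matches[0]] if matches else None
```

For instance, `match_command("home all access")` still resolves to `G28`, while a transcript that resembles none of the phrases falls below the cutoff and returns `None`, so nothing is sent to the printer. With an open-ended vocabulary like a programming language, there is no short list to snap to.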

Interesting approach. I would suggest Forth as the language: you can start with simple blocks and build your application up from small pieces that are later connected.

What I would love to find is speech recognition software that works with Eagle, even if it handled only the dozen most popular things you would normally type into the command line…

Nice. Streamline the common operations rather than attempting to replace the entire UI. Or replace the operations that would otherwise require you to move your mouse somewhere and then move it back to where it was.

Most coders I know can type faster than they talk. I’m actually pretty sure a few of them can even type faster than they think.

Can you imagine a room full of coders talking to their computers and creating the next big application? The noise in that room is going to get a bit out of control. Then you have the issue of privacy. Are you going to start burying your coders in the bowels of the building so that the critical system they are working on can’t be copied by your competition with a simple boom mic?

Yep, talking is SLOW compared to typing! Even if the tech were perfect, that alone would make it unusable.

What’s more, it’s much harder to describe where to go than to get there with the keyboard and mouse.

So I can’t see it being used much.

Don’t get me wrong, voice is great for some things, but writing code won’t be one of them.

It probably has a place, though. Just as even heavy users of the command line tend to also use their mouse for some things, there is probably a niche it can serve. We are just not used to it in our workflow yet.

Maybe for some system commands, like swapping windows and running something, but not for programming as described in this article.

That’s what I thought! I guess a good example is using a SpaceMouse when CAD’ing. You can easily multitask, turning and twisting your model with your left hand while using the “real” mouse and the keyboard with your right hand.

I guess using a few spoken commands could help here and there, too.

Will it run in emacs?

http://ergoemacs.org/emacs/using_voice_to_code.html

“Meta x control s”

Even if this would replace typing, at least I would need to have the code visible in front of me. I’m a visual thinker (not to be confused with similarly named tools) and if I don’t see the code, it is very difficult to grasp what it’s doing.

What, and no reference to the HaD article where this guy invented his own language to optimise coding by speech?

Can’t find the original; could it be Tavis Rudd? https://www.youtube.com/watch?v=8SkdfdXWYaI

Sadly, speech recognition is anything but a commodity. The accuracy achieved in domain-specific tasks such as programming is poor, which is why Talon invested in their own training set. Dragon Dictate may claim 98% accuracy, but that’s still 2 errors per 100 words, or one every few lines…
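The arithmetic behind that claim, as a short Python sketch. It assumes the 98% figure is per-word accuracy and that errors strike words independently; both are simplifications. The Talon utterance earlier in the post takes nine spoken words for one short line of code, so nine is used as the words-per-line figure.

```python
# Back-of-the-envelope error rate for dictated code.
# Assumptions: accuracy is per spoken word, and errors are independent.

ACCURACY = 0.98      # claimed word accuracy
WORDS_PER_LINE = 9   # "Phrase name op equals snake extract word paren mad"


def expected_errors_per_line(accuracy: float, words: int) -> float:
    """Mean number of misrecognized words in one dictated line."""
    return (1 - accuracy) * words


def prob_line_has_error(accuracy: float, words: int) -> float:
    """Chance that at least one word in the line is misrecognized."""
    return 1 - accuracy ** words


if __name__ == "__main__":
    per_line = expected_errors_per_line(ACCURACY, WORDS_PER_LINE)
    print(f"expected errors per line: {per_line:.2f}")
    print(f"lines per error: {1 / per_line:.1f}")
    print(f"chance a line has an error: {prob_line_has_error(ACCURACY, WORDS_PER_LINE):.0%}")
```

Under these assumptions that works out to about 0.18 errors per line, or one misrecognized word every five or six lines, and roughly a 17% chance that any given line needs a correction.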

That, but for OpenSCAD.

I’ll wait for an external neural interface that actually works reliably. Until then, reliable old keyboards, relative pointing devices, and touchscreen tech will suffice. Speech recognition has gotten much better but is still wonky in comparison. Context-aware auto-insertion works but is usually more hassle than it’s worth.

Nothing there I can help you with. I’m not a huge Python programmer, and I wouldn’t call myself a fan. It’s just another scripting language to me, but thanks for the invite.

I’ve played with both Serenade and Talon. Yes, voice is slow compared to typing, but it is nice to have a way to take some strain off your hands, and I found that coding by voice also forces a bit more intentionality and forethought than I normally exercise, especially in an exploratory coding context. I’m glad there are folks working to make these tools better and better, and anything that makes coding more accessible is a big win.

A final note: as code autocomplete gets better (think language models trained on all of GitHub) you can get away with less and less manual input. I was skeptical, but TabNine is already showing me useful suggestions and saving me from typing quite a bit of boilerplate.