For years now we have been told that self-driving cars will be the Next Big Thing, and we’ve seen some companies (yes, Tesla, but others too) touting current and planned features with names like “Autopilot” and “self-driving”. Cutting through the marketing hype to unpack what that really means is difficult. But there is a standard for describing these capabilities, which ranks them in levels from zero to five.

Now we’re greeted with the news that Honda have put a small number of vehicles in showrooms in Japan that are claimed to be the first commercially available level 3 autonomous cars. That claim is debatable: Audi, for example, briefly offered level 3 capabilities on one of its luxury sedans, despite there being few places where the feature could legally be used. But the Honda Legend SENSING Elite can justifiably claim to be the only car currently on sale to the general public with the feature. It has a battery of sensors to keep track of its driver, its position, and the road conditions around it. The car boasts a “Traffic Jam Pilot” mode, which “enables the automated driving system to drive the vehicle under certain conditions, instead of the driver, such as when the vehicle is in congested traffic on an expressway”.

Sounds impressive, but just what is a level 3 autonomous car, and what are all the other levels?

It’s All In The Levels

The Society of Automotive Engineers, or the SAE as they are colloquially known, act as a standards body for the automotive industry. You’ll probably be familiar with them if you have ever changed the oil in your car and noticed a viscosity rating like SAE 10W-40 on the can. Their standards underpin much of what goes into a motor vehicle, so it’s hardly surprising that when a self-driving car is mentioned, it’s their level system that defines it.

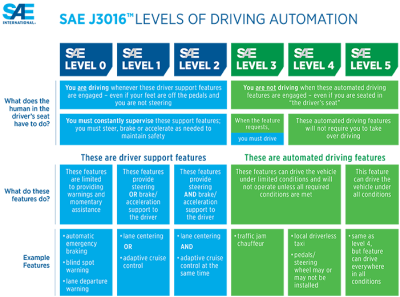

Driving automation is defined by SAE J3016, which sets out six levels numbered from 0 to 5. Level 0 is a normal meat-controlled car with few automatic safety features, and level 5 is the steering-wheel-free auto-taxi from dystopian science fiction.

The first three levels require the driver to stay on the case, with increasing amounts of assistance such as adaptive cruise control and lane centering only aiding them. Level 1 provides helper technologies but requires the driver to remain in control, while level 2 can allow the driver to take their hands off the wheel but requires them to keep their attention on the road.

Meanwhile, the final three levels give the vehicle increasing autonomy. Level 3 cars such as the Honda allow fully autonomous driving in some circumstances, with the driver able to take their attention away from the road, but the car can still require them to take back control when it asks. The final two levels offer full autonomy, level 4 within defined operating conditions and level 5 everywhere, with level 5 going as far as not requiring any human driving controls to be present.
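To make the distinctions concrete, here is a minimal sketch that encodes the six levels along the questions this article keeps returning to: must the driver watch the road, must a human be ready to take over, and does the feature work everywhere? The level names follow J3016 as we understand it, but the field names and the `eyes_off_allowed` helper are our own hypothetical simplification, not terms from the standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SaeLevel:
    number: int
    name: str
    driver_must_supervise: bool  # must the human watch the road at all times?
    human_fallback: bool         # must a human be ready to take over?
    unlimited_domain: bool       # does it work everywhere, in all conditions?


# Simplified summary of SAE J3016. Field names are our own assumption,
# not SAE terminology. For level 0 "unlimited_domain" is not really
# applicable, so it is simply set to False.
LEVELS = [
    SaeLevel(0, "No Driving Automation", True, True, False),
    SaeLevel(1, "Driver Assistance", True, True, False),
    SaeLevel(2, "Partial Driving Automation", True, True, False),
    SaeLevel(3, "Conditional Driving Automation", False, True, False),
    SaeLevel(4, "High Driving Automation", False, False, False),
    SaeLevel(5, "Full Driving Automation", False, False, True),
]


def eyes_off_allowed(level: int) -> bool:
    """True if the driver may look away from the road while the feature is engaged."""
    return not LEVELS[level].driver_must_supervise


if __name__ == "__main__":
    for lvl in LEVELS:
        print(lvl.number, lvl.name, "eyes off:", eyes_off_allowed(lvl.number))
```

The jump between levels 2 and 3 is the one that matters for the Honda: it is the first row where `eyes_off_allowed` flips to `True`, while `human_fallback` stays `True` until level 4.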

The Current Generation Doesn’t Have As Much Self-Driving As Marketing Hype Wants Us to Believe

What matters about the new Honda being level 3 is that it’s the first car put on sale to the general public with a mode in which the driver is not required to keep their attention on the road. By comparison, the much-publicised Tesla Autopilot remains at level 2 and has so far required the driver to stay alert and ready to take over. Even the California carmaker’s upcoming ‘Full Self-Driving’ technology is also still at level 2, stretching misleading language to breaking point. We’ve all read stories of Tesla self-driving going wrong, but these have invariably involved drivers who failed to respect this requirement. So Honda have stolen a march on their competitors, but given that many countries still prohibit level 3 cars or restrict them to testing only, it’s more of a PR victory than a commercial one.

The SAE have produced a handy chart to show the subtleties of the different levels, and it does a pretty good job of explaining them. Perhaps it will be a while before we see the higher levels in the real world, but at least it should help bust the sales jargon when we do.

Will we also need many levels of autonomous motoring insurance?

By that system, I’ll accept levels -1 or 5. You can keep your auto braking.

And if I am not driving the car, then I am not driving the car. There is no god damn sense in expecting someone to take over after they’ve already phased out. I think that’s been proven with the Tesla crashes already (yeees yees yes I know some of those are extreme cases of stupidity).

And where I live, level 4 ain’t enough.

Ah, the joys of having your car decide that something that isn’t an automobile is a reason to hard-brake on the highway. That was more than a bit scary when driving.

I’ll take a pass on that, too.

I will never ride in a car that drives itself. As you said, hard pass.

One thing I recently saw that reinforces my long-standing hard stance against self-driving cars was a short video of a Subaru running its steering wheel rapidly from lock to lock with no human input. Why would anyone want a car that can do this? What happens when a car’s computer suddenly decides to do this sort of thing at 75 mph? I for one don’t wish to find out.

IMHO, self-driving cars don’t belong on public roads, period.

What I would have no problem with is self-driving cars on roads that are designed for, and only to be used by, self-driving vehicles and nothing else: no humans allowed except as passengers.

I think this is a general truth we’ve been reaching for a while. We’re at the point where humans are only better than robots because the environment is designed for humans. Design a warehouse, fast food restaurant, or assembly line meant for robots, and they’ll do a much better job. Same goes for roads.

This is right, but the whole goal here is to avoid rebuilding infrastructure, b/c that’s the expensive bit. (Or at least the expense is less distributed.)

> We’re at the point where humans are only better than robots because the environment is designed for humans.

That’s a specious argument.

We have applied the least effort to its design, putting in only enough features that humans need to drive safely. We don’t always have road markings, signs, crash fences etc. and in places where it snows for half a year even what is a “road” or a “lane” is sometimes up to interpretation.

We’re better than robots because we don’t need as much help to successfully navigate the environment as it is. The environment is not “designed for humans”, we’re evolved to deal with it.

> Design a warehouse, fast food restaurant, or assembly line meant for robots, and they’ll do a much better job.

Technically, they don’t do any better a job; you just constrain them in a way that they can’t fail at it. Basically, if you can’t make a car that navigates along a road reliably, make the road into rails, and then it can’t make a wrong turn. Is this better?

Interestingly enough, Tesla is giving up on radars and relying only on cameras.

The reason is probably twofold: 1) radars interfere with other radars, just as lidars do with lidars, so having more than a few such cars on the road will make a mess; 2) the radar and visual data can conflict, and the AI is not smart enough to second-guess them, so it has to default to one or the other anyway. When they don’t conflict, the information is superfluous.

Relying on radar/lidar/sonar is a hack, because the information it produces is limited, but it doesn’t throw as many false positives or negatives (except for point 1), so it works when you have next to nothing for AI. However, Tesla still doesn’t have anything better for AI either, so they’re taking two steps back, and then what?

current tesla cars employ forward facing radar.

graceful sensor fusion is a worthy and challenging endeavor.

forward facing radar works.

camera for perception makes a lot of sense. that’s what the original driver uses.

ultrasonic sensors are also employed in current tesla cars.

have a nice day.

Sensor fusion works if the type of information is non-overlapping, if you can patch the information provided by one with the other. If the information is of the same type, it’s like your left eye sees the object and your right eye doesn’t – so which eye is telling you the truth?

Radar/lidar/sonar is just another type of camera that you use to see the environment, so it all reduces to “vision”.

That’s why you have three sensor sources, or have systems that return confidence levels not just true/false.

That’s kinda like saying “well, just use fuzzy logic”, but in the end it just comes down to the same binary go/no-go logic.

Simple. Both sensors are telling the truth from their perspective. You solve for both truths. If I see something with my left eye only, it could be that something is blocking the view from the right eye. This is useful information.

For you, but the AI isn’t that smart and it doesn’t have the power budget to solve both cases.

If you put a bubble gum foil on the radar and it stops reporting objects, the AI does not think “Oh no, I seem to have gone blind!”, it just doesn’t get reports of objects and therefore doesn’t react to them.

The difference between you and the AI is that you operate on the direct experience of what you see, whereas the AI operates on symbolic data it gets from a separate visual recognition algorithm, or some other algorithm that deals with the radar image, already chewed up and spat out for it. It doesn’t have access to the raw sensor input, so it can’t second-guess what it means.

And even if it did have access to the raw sensor data, you’d need a supercomputer on-board to make heads or tails of it. That’s the entire point of today’s self-driving robots: they are trained offline using a lot more computational power than is ever possible in the actual car, and the result is simplified and “frozen” so it can run in a power limited computer roughly equivalent to a laptop PC.

You can also think of it like having a collision warning system that sometimes beeps randomly and sometimes fails to beep when there’s a real object. You would find it useless.

If you had direct access to the sensor signals yourself, you could tell the difference between a false positive or negative because you have enough brain power to learn why it does that, but the computer doesn’t, so you’re left with an annoying beeper that cannot be trusted, and would be better if it was turned off entirely.
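The “three sensor sources, or confidence levels” idea raised earlier in this thread can be sketched in a few lines. This is a hypothetical illustration only: the function names, weights, and the 0.6 braking threshold are arbitrary assumptions, and real automotive sensor-fusion stacks are far more elaborate than a majority vote or a weighted average.

```python
def two_out_of_three(a: bool, b: bool, c: bool) -> bool:
    """Majority vote: report an obstacle if at least two of three sensors see it."""
    return (int(a) + int(b) + int(c)) >= 2


def fused_confidence(confidences: list[float], weights: list[float]) -> float:
    """Weighted average of per-sensor obstacle confidences, each in the range 0..1."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(c * w for c, w in zip(confidences, weights)) / total


def should_brake(confidences: list[float], weights: list[float], threshold: float = 0.6) -> bool:
    """Hypothetical go/no-go decision on top of the fused confidence."""
    return fused_confidence(confidences, weights) >= threshold


if __name__ == "__main__":
    # Camera and lidar fairly sure, radar not: equal weights still trigger braking.
    print(should_brake([0.9, 0.2, 0.8], [1.0, 1.0, 1.0]))
    # Trust the radar five times more and the same readings no longer trigger it.
    print(should_brake([0.9, 0.2, 0.8], [1.0, 5.0, 1.0]))
```

Note that the final `should_brake` threshold is exactly where the binary go/no-go decision the other commenter objects to reappears; what fusion changes is how the evidence is weighed before that point, not whether a decision must eventually be made.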

There is actually only one reason Tesla is giving up on radar: Supply chain constraints.

They’re out of parts for the radar and were going to have to cut production if they didn’t remove it. This would have been financially catastrophic, so they removed it and told everybody it was a feature.

The cameras on a Tesla are fixed relative to the vehicle. So they provide significantly less data about depth and object position than a pair of human eyes attached to the head of a person in the driver’s seat. They don’t have enough data for level 3 without the radar. They might not have had enough data for level 3 _with_ the radar, but now they definitely don’t.

>they provide significantly less data

Why would that be?

We are a loooong way off level 5 where I live in rural UK, with single-track lanes. If you encounter an oncoming car, who reverses? It depends on very fuzzy rules: whether one of you has just come around a corner, who passed a passing place most recently, whether one of you is towing, whether one of you is a little old lady in her Morris Minor who last reversed in 1963, whether one of you is a van with restricted rear view, how aggressive each party is…

This. Also, how does it decide whether to clip the hedge and scratch the paintwork, or hit the pothole and risk bottoming out?

We probably need to consider some type of level 6, where one system drives more than one car in a way that is beneficial to all. There’s no reason cars have to stop at intersections, provided this system can deliver a sufficient level of precision and accuracy.

I’ll go no further than level 2 until every car on the road is at level 5 and talking to each other deciding on speed, routing, and right of way without unreliable, wishy-washy, flakey grey matter involved. As long as there is even one driver like me on the road, no one is safe!

Exactly. I think the best option is pretty much to skip from level 2 right to level 5.5 or 6.

I think that’s level 7 or maybe even higher. A supervisory system that can take input from a human supervisor in a control room would be useful too.

Then they’ll start removing pavements (sidewalks) to “keep us safe” and prevent people jumping in the way of the driverless cars. And they can decide where and when you can drive.

Walking somewhere under your own initiative will be civil disobedience.

Just remember that because self-driving won’t work in all situations doesn’t mean it won’t work in some situations. I expect it will become normal to encounter fully self-driving cars within campuses and certain downtown areas of cities before long. They won’t be privately owned or operated, and they’ll be limited to their operating areas, which will be carefully managed to allow the cars to function autonomously. Perhaps other cars will be prohibited or at least limited in these areas.

Eventually, they’ll be able to handle more and more difficult situations, but that still doesn’t mean they’ll be able to handle everything. For instance, off-roading in the zombie apocalypse? Probably not.

Yes. These are called trams.

Trams are obviously limited to where the tracks go, and the current driver-less ones don’t let people anywhere near the tracks. Autonomous cars could go door-to-door and ought to be able to deal with pedestrians. They could co-exist with other transports (such as trams) that are intended for larger volumes and/or longer distances.

“…and they’ll be limited to their operating areas…”

Or trolley buses if you wish. Same difference, although the latter can go short distances off-route on battery power.

I do not think that I’ve ever been on a fully automated tram. Trams store an insane amount of kinetic energy and operate in spaces that overlap with trucks, vans, cars, cyclists and pedestrians. I would be very impressed if one could be fully automated, as long as, when faced with trolley-problem decisions, the machine opted to destroy any amount of property, regardless of cost, to save any life.

They don’t always, or necessarily, have to overlap with those other services. When they do, it’s usually a simple lack of planning or foresight.

Atlanta airport

The voice that makes the announcements on that shuttle always reminded me of the Cylons. “Stand clear of the closing doors, human.”

All I ask is to let me drive, or let me sleep. Don’t bore me to sleep when I’m supposed to be paying attention. Level 2 is the worst of both worlds.

And it will get worse when technology improves. When your car can drive flawlessly for 1000 miles and then makes a fatal mistake, there is no way that you will be alert enough to prevent it.

Consider malware released into an ecosystem full of level 2+ cars. Mal-Adaptive lane changes? We can hardly secure our critical infrastructure so that worries me.

Or relies on GPS. Someday there will be a solar event that will knock out those satellites and your car will not go anywhere.

Two ideas:

Self-driving cars should use video from static cameras installed above road crossings, not only their onboard cameras. A steady image from a street camera is easy to process. The environment must cooperate with self-driving cars. This would be a more secure, cheap, low-power solution, and such a car would see the intersection from multiple views.

One man from Habrahabr (https://habr.com/ru/company/itelma/blog/497740/#comment_21579284) says that self-driving cars are not the thing humanity should start from. The right order is this:

1. self-driving subway trains

2. self-driving trams and trains (still a fixed route, but they have to deal with surrounding cars and humans)

3. self-driving buses (can change lanes, but the whole route has some equipment installed along the road)

4. self-driving taxis (in a single town)

5. self-driving trucks

6. full-featured self-driving cars

Most engineers try to start from step 6. I don’t know why.