After decades of improvements to hard disk drive (HDD) technology, manufacturers are now close to taking the next big leap that will boost storage density to new levels. Using laser-assisted writes, manufacturers like Seagate are projecting 50+ TB HDDs by 2026 and 120+ TB HDDs after 2030. One part of the secret recipe is heat-assisted magnetic recording (HAMR).

One of the hurdles with implementing HAMR is finding a protective coating for the magnetic media that can handle this frequent heating while also being thinner than current coatings, so that the head can move even closer to the surface. According to a recent paper by N. Dwivedi et al. published in Nature Communications, this new protective coating may have been found in the form of sheets of graphene.

The Need for a Protective Coating

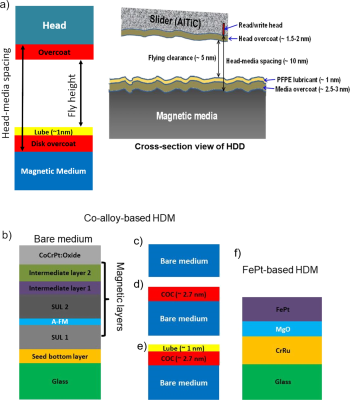

Perhaps the irony in calling HDDs ‘spinning rust’ is that corrosion is one of the major enemies of the long-term operation of these devices. The thin magnetic layer on top of a platter’s base material would readily corrode if left exposed to the atmosphere. Cobalt, the Co in the CoCrPt alloys, is especially susceptible to this process. Once corroded, that part of the platter would be far less effective at retaining a magnetic orientation. One of the primary purposes of the protective coating, or overcoat, is to prevent this from happening.

The other use of the overcoat is as a protective measure against mechanical damage. Despite all the fright about ‘head crashes’, mechanical contact with the platter is fairly common, and one of the uses of the overcoat is to provide protection against this, as well as reduce friction. The latter usually involves the use of lubricants, which adds another layer on top of the overcoat. Together with the overcoat, the lubricant layer forms a significant part of the head-media spacing (HMS), and thus how close the head can get to the recording media.

For the areal density (AD) of hard drives to keep increasing, this HMS has to decrease even further, which means a thinner overcoat. Today’s overcoats are carbon-based (COCs) and generally between 2.5 and 3 nm thick. For upcoming high-density magnetic media that are compatible with HAMR, the COC has to fulfill the following requirements:

- Provide all features with a <1 nm coating

- Full corrosion protection

- Anti-friction equivalent to current COCs

- Wear resistance and stretch resistance (elasticity, i.e. Young’s Modulus)

- Lubricant compatibility (e.g. PFPE).

Of note here is that the magnetic recording material itself is also likely to change during the transition from PMR (perpendicular magnetic recording) to HAMR. This is similar to what happened during the transition from linear magnetic recording (LMR) to PMR as detailed in this paper by I. Kaitsu et al. from 2005.

With LMR, platters had used a coating of CoCrPt for the magnetic media, but for PMR this coating had to be more granular. The solution here was found in adding SiO2, as its grain boundaries neatly subdivide the CoCrPt into magnetic grains which work well with a PMR read/write head.

For HAMR magnetic media, the platter stack is changing again, this time to use FePt for the recording media, as this alloy is generally stable with the use of heat-based recording. This adds another two requirements to the coating for FePt-based platters:

- Thermally stable with HAMR cycles.

- Compatible with not only CoCrPt, but also FePt.

An ongoing issue with HAMR-based drives is that the application of local heat rapidly degrades the COC. Finding a new, more thermally stable overcoat material is paramount in their further commercialization.

Eventually, films of FePt will likely give way to bit-patterned media (BPM), in which magnetic islands are patterned into the magnetic recording layer. This would be similar to the CoCrPt-SiO2 magnetic grains, only on an even smaller scale. Ideally, the same <1 nm COC that works with FePt will also work with such new and upcoming technologies.

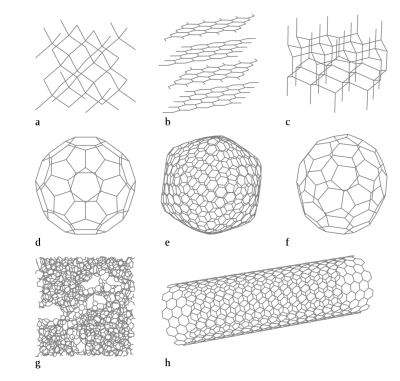

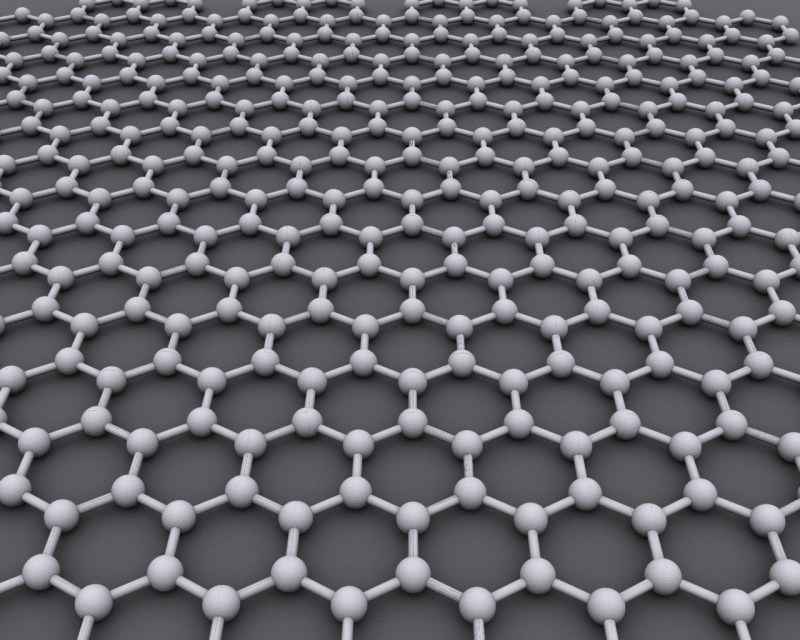

The Many Faces of Carbon

As the name of COC indicates, it too is based on carbon atoms, just like graphene. So what’s the difference between current COCs and new, graphene-based COCs? The main difference is in the way that the carbon atoms are linked together, also known as the allotropes of carbon. In graphene, the carbon atoms are linked together in a regular hexagonal lattice.

This regular lattice is part of the reason why graphene is so stable, but producing it has been a major challenge for a long time. It wasn’t fully isolated and characterized until 2004, when Andre Geim and Konstantin Novoselov at the University of Manchester used the world-famous ‘Scotch tape’ technique to pull graphene layers from graphite.

Since that time, the search for commercial applications of graphene has been ongoing, with the use in HDD COCs one of the more recent ones. As a two-dimensional material that is thermally and otherwise stable, it seems rather ideal for any situation where full coverage of a surface is required. Even if said surface is regularly being blasted by a laser as in the case of HAMR-based hard disk drives.

Science Checks Out

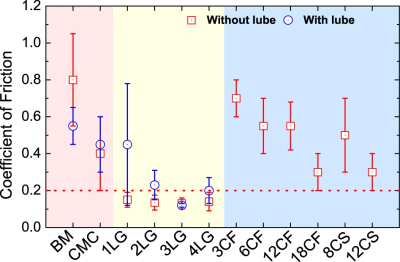

N. Dwivedi et al. used chemical vapor deposition (CVD) and a wet transfer process to coat the bare media with 1-4 layers of graphene (1-4LG). They then subjected these graphene COCs, along with existing commercial COCs, to various tests, including mechanical (friction and wear), laser-based heating, and corrosion protection.

Although even a single layer of graphene (1LG) managed to significantly reduce corrosion and show good mechanical, friction, and thermal stability properties, it was found that >2LG-based coatings would likely provide the greatest benefit, not just with existing CoCrPt-SiO2 media, but also with FePt with HAMR, and HAMR plus BPM. What this essentially means is that a >2LG COC should be suitable for hard drives today and well into the future.

Another interesting finding was that graphene-based COCs do not require lubrication, as current commercial COCs do. This matters because the PFPE lubricant used commercially in hard disk drives is not thermally stable under HAMR. Being able to omit it in favor of just the heat-resistant graphene means that two problems are solved simply by using >2LG instead of non-graphene COCs.

It’s the Little Things

The most interesting thing about this paper is the insight into how the development of new technologies is often held up by what seem like small details. Even though a suitable magnetic recording medium had already been found in FePt, and even though integrating semiconductor lasers in read/write heads was already a more or less solved problem, the lack of a suitable overcoat material could have derailed all of that effort, or at least postponed it for years.

We can’t celebrate and put in orders for HAMR HDDs just yet, of course. The fun part with materials science comes after a concept has been demonstrated in the laboratory and it has to be scaled up to mass-production. There’s a massive difference between the CVD of graphene in laboratory settings to produce a few platters for a test and producing thousands upon thousands of them in an automated factory setting.

There are a dizzying number of ways to produce graphene at this point, which is both good and bad news. Not all methods create the same quality of graphene, and not all methods lend themselves to mass production or to integration into the hard drive manufacturing process. Figuring out the best way to take the results from this recent paper and make them work in a factory setting will be the next step, which can take many more years.

But as with all things in science, good things come to those who are patient.

(Heading image: visualization of single sheet of graphene. Credit: AlexanderAlUS, CCA-SA 3.0)

Spinning disks. How… quaint.

Still can’t get a single 50TB SSD this decade unless they go with 3.5″ form factor and multiple PCB layers to fill out 3.5″ drives

Bring back the Bigfoot drives, but this time spin them faster and apply the latest bleeding edge technology.

SSDs aren’t going to get this cheap and large any time soon.

Imagine having to actually form each bit using multiple, complicated transistor structures when you could just spray them on?

They don’t make each transistor one-by-one. Modern CPUs have billions of transistors, and SSD chips must be similar to store billions of bits (even with multi-level cells)—imagine how long that would take. Instead, they make them in parallel, all at once, using photolithography. There are several steps to form a transistor, but those steps are done for every transistor simultaneously. Also, you might have noticed “BPM” mentioned in the article, which sounds like a similar level of structuring applied to HDD platters, so they’re going to be just as finely featured soon.

Also, you can buy a 100 TB SSD right now. It’s not cheap, though—maybe you meant there won’t soon be one that’s simultaneously that big and reasonably cheap?

There’s an open question out there to see if anybody actually wants hard drives this big. The primary customers are big storage servers, but if the transfer rates can’t keep up with size (historically, they haven’t), then it will take a long time to rebuild a RAID volume after a failure. If another drive goes down while that process is underway, the entire volume could be a loss, so this causes anxiety in sysadmins. A lot of places are signaling that they’re not interested in hard drives much bigger than 20TB; they’re willing to give up density in favor of less worry over the risk of catastrophic failure.

It helps address the whole “what do I backup my large amount of data to” question. Tape is both an enterprise solution and an expensive one at that. And a lot of people don’t trust the cloud.

Tape is actually fairly cheap per TB.

As long as one handles a fair bit of tape that is, the drives reading/writing to them are costly as hell….

That’s one of the problems. Tape is not too expensive – and much, much better to store than a hard disk, and much more robust. I recently read a tape from 30 years ago without a problem; try that with a 30-year-old hard disk (actually, I tried that too, with varying degrees of success..)

But the problem is tape DRIVES are absolutely absurdly expensive, i.e. they cost more than a reasonable entire PC.

The second problem is that tape technology seems not to be progressing. Most (even expensive) ‘consumer’ tape drives are smaller than disks now, i.e. an LTO-7 is notionally ‘15TB’ but that is just marketing – in reality it has 6TB if you are storing data that has already been compressed. Want to back up a 14TB drive with video on it? You’ll need three (not cheap) tapes and a very expensive drive to do it.

LTO-8 doubles that – but the drive will cost the same as a reasonable used car…
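The tape count above is just a ceiling division on native (uncompressed) capacity. A minimal sketch, taking the 6 TB and 12 TB native figures from the comments at face value and assuming the data is already compressed (video), so tape-side compression gains nothing; the function name is made up for illustration:

```python
import math

# Native capacities as quoted in the comments (TB, uncompressed).
LTO7_NATIVE_TB = 6.0
LTO8_NATIVE_TB = 12.0


def tapes_needed(data_tb: float, native_tb: float) -> int:
    """Number of tapes needed for data that will not compress any further."""
    return math.ceil(data_tb / native_tb)


if __name__ == "__main__":
    for name, native in (("LTO-7", LTO7_NATIVE_TB), ("LTO-8", LTO8_NATIVE_TB)):
        print(f"{name}: {tapes_needed(14, native)} tape(s) for a 14 TB drive")
```

For a 14 TB drive this gives three LTO-7 tapes, matching the comment, or two LTO-8 tapes.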

Personally, I’ve had much worse luck with tape than hard drives. And if your data is really that important you should have it backed up off site anyway. One problem with tape, no random access, which makes it largely only suitable for backup and restoration of an entire volume.

Tape (LTO) is only expensive because that segment of the industry refuses to expand the market by making affordable SATA and USB 3 LTO drives.

If they had a LTO8 (12 TB uncompressed) internal SATA drive for $500, it would sell to the small business market, home office, independent video editors and studios, and regular folks who have craptons of data on hard drives they’d like to backup, but the only single piece of media capable of doing that (without a 4 or 5 figure price) is another hard drive.

LTO can do it, the manufacturers simply refuse to.

In the ’90s, I paid 310 euros (converted from the previous currency) for a 300 MB HDD, mainly full of DOS/Windows games and some videos. Ten years ago I paid 107 euros for a 1.5 TB HDD, mainly filled with photos, videos, and films. Now (2021), 10 TB HDDs are priced at around 300 euros. Maybe in 2025 I will get a 50 TB HDD for 50 euros, and there is always a way to fill it with data, e.g. local storage of your Netflix series or whatever.

Interesting and very valid point, though I think that also goes hand in hand with average file size and thus the required storage volume – you can give up density to improve rebuild times etc, but only up to the point you run out of space.

And as storage mediums get bigger so do file sizes, even when it really isn’t needed. Like video files – getting more and more common in 4K and above, but at a sensible viewing distance and screen size it’s going to be barely noticeable as an improvement over 480p (assuming resolution is all that changed – lots of old 480p is also much lower framerate and vastly inferior in compression methods, which is very noticeable), and even on larger screens going beyond HD is going to be tricky to spot by eye – it’s only when you sit very close to a larger screen so that it fills your field of view that the level of resolution change is really noticeable in moving images. Static images are a whole different thing though.

My point really being that when they have to build a new building or four and pay the extra electric bill for spinning even more disks the bigger drives will start to look good again, even if they are slower than they would like.

I can also see such disks being godsends for the offsite backups – big enough to hold everything, small enough to transport – good as the internet speeds are it probably will never keep up with transferring physical media with mass transit, and undoubtedly would be more inconvenient using up network resources for so long.

And if they have the same sort of safe data storage lifespan as existing spinning rust, they’re good for archival and offline backups too.

I agree – the two biggest problems I’d like to see addressed with drives are transfer speed and reliability, not size.

It already takes a long time (a reasonable fraction of a day) to sequentially write to a 14TB drive – at the same data rates it will take an appreciable fraction of a week to fill a 50TB one…
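A quick back-of-the-envelope version of that estimate, as a sketch only. The 250 MB/s sustained sequential write rate is an assumption picked for illustration (the comment gives no figure), and real drives slow down towards the inner tracks:

```python
ASSUMED_RATE_MB_S = 250.0  # assumption: sustained sequential write rate, not from the comment


def fill_time_hours(capacity_tb: float, rate_mb_per_s: float) -> float:
    """Hours needed to write a whole drive sequentially at a constant rate."""
    capacity_bytes = capacity_tb * 1e12  # decimal terabytes, as drive vendors count them
    rate_bytes_per_s = rate_mb_per_s * 1e6
    return capacity_bytes / rate_bytes_per_s / 3600


if __name__ == "__main__":
    for tb in (14, 50):
        hours = fill_time_hours(tb, ASSUMED_RATE_MB_S)
        print(f"{tb} TB: {hours:.1f} h ({hours / 24:.2f} days)")
```

Under that assumption a 14 TB drive takes roughly 15.6 hours to fill and a 50 TB drive roughly 55.6 hours, or about 2.3 days.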

Failure rates are still around 0.5% to 2%-ish, and that’s in an ideal managed environment with good disks (see Backblaze).

Error rates are still (in good drives) about 1 in 1e15 bits read – but the drives are on the order of 1e13 bytes already. Do the maths! And ‘consumer’ drives have error rates of 1 in 1e14!
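Doing the maths, as a rough sketch. It assumes the quoted figures mean one unrecoverable read error per 1e15 (or 1e14) bits read, that errors are independent, and that the spec-sheet rate is what a drive actually delivers. Spec sheets state a worst-case bound, so real drives may well do better:

```python
import math


def p_at_least_one_error(capacity_tb: float, bits_per_error: float) -> float:
    """Chance of hitting one or more unrecoverable errors when reading a full drive.

    Uses a Poisson approximation: P = 1 - exp(-expected_errors).
    """
    bits_read = capacity_tb * 1e12 * 8  # decimal TB -> bits
    expected_errors = bits_read / bits_per_error
    return -math.expm1(-expected_errors)  # numerically stable 1 - exp(-x)


if __name__ == "__main__":
    for label, rate in (("1 in 1e15", 1e15), ("1 in 1e14", 1e14)):
        p = p_at_least_one_error(14, rate)
        print(f"14 TB full read at {label} bits: {p:.1%} chance of an error")
```

Under these assumptions, one full read of a 14 TB drive has roughly a one-in-ten chance of an error at the 1e15 rating and about two-in-three at 1e14, which is why a full-drive read during a RAID rebuild is the nervous moment.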

So faster read/write & more reliable please…

Graphene has a lot of potential in quite a few use cases. If only they can get a breakthrough in mass production.

I wanted a 4TB SSD recently… but when a spinning rust disk drive is 1/4 the cost, I picked up a 4TB HDD instead. I really didn’t need the speed as my use case is a file server…. But would have been nice :) .

Not sure what good a >= 20TB drive would be, as I’d be nervous about that much info on a single disk. It would also take a while to ‘rebuild’ a RAID system (or even a single disk from backup), as the access speed really can’t change, being mechanical. Too bad SSD technology is so expensive (relatively), as really this is where you get that needed ‘write speed’ for massive data needs.

I’d rather have a single large disk, and proper backups (which may be on many smaller offline disks, or even a roll of tape if you feel oldschool – it really doesn’t matter) as that is vastly less energy consumption on the running system(s), means those systems can be physically smaller, and in many cases won’t change the file access speed and performance much, if at all, as many chipsets won’t be able to feed/consume from multiple SATA drives (which they probably are when not talking SSD) at full speed anyway – though there certainly will be hardware and tasks where multiple disks can be very much faster.

All down to your needs, but I did just replace my failed 6TB with a 14… 16… damned if I remember – it was the best looking price per TB in my budget from a brand I hope will prove reliable, and it was large enough to actually do the job: a very useful increase in space for the archive, enough extra to keep more snapshots of the data changes, and finally enough extra space to keep filesystem images for every computer project that might need it – mostly remote pi projects, perhaps battery powered, so corruption is a big enough risk and reconfiguring everything would take long enough to be very annoying..

As long as you have a ‘need’ for it and can wait for restores, that is all that matters. I would think splitting data into, say, two 10TB drives would be better…. That said, my total ‘home data’ is 1.7T, so going bigger than a 4TB HDD makes no sense for my use case. A waste of money. Now I do have an 8TB external drive for quarterly backups, but that is different from an ‘in use’ server drive. I can see possibly bigger drives in this case. No cloud-based backups for me, ever. Off-site physical backup of course, just not cloud-based.

Agreed, the nearest I’d get to cloud is an internet-based backup I was part of setting up and running – so perhaps for my family/friends we all act as each other’s remote backup in our own little cloud.. Not done that, but it’s not a bad idea…

I end up with quite a bit of data – heck, my music library is over 500GB now – I like reasonable quality, and have wide and eclectic tastes – including a great deal of NWoBHM thanks to my Dad and Uncle, as it ceased being ‘New Wave’ rather a long time before I was listening to music..

I have 3 small SSD’s (2x500GB, 1×250, Samsung EVO) as System, Work and Temp partitions and 16TB HD (ST16000) with 3 partitions for porn and such. HD is mostly off and it takes a few seconds to spin up, but if I need a few TB for something, it’s there. Cloud and USB backups for what’s important to me. Works nicely for my needs.

Have they actually found a use for graphene yet? All I’ve seen are a bunch of ‘potential applications’ that could happen within five years.

Easy way to get more speed from an HDD would be to give it two full sets of heads and interleave the tracks. It’d be like a RAID 0 striped pair in a single drive, double the read/write speed.

I have learned to love my spinning rust drives a lot more since the advent of affordable solid state drives. Now that they are not the only option, that makes them all the more appealing.

I use SSDs in all my desktops, laptops, and home server. The only place that the SSD doesn’t work for me (only because of cost) is for the data drive on the servers. Wouldn’t be without the SSDs (SATA or m.2) for OS and general purpose! Right now 1TB SSD is the sweet spot at $100 or so.

I used to hate spinning disks because they ruined the vision that we’d all have growing data crystals in the future, but instead were stuck with ugly rattling beige boxes.

But now we have something a little like that, I find my old clattering drives rather charming. Plus I mostly tinker with archaic hardware anyway, and physical disks can be got so cheap. I do have a couple SSDs, including one in this 13 year old Thinkpad I’m typing this on.

The benefits are obvious, but at the same time I am a long way from the bleeding edge.

All this talk about how great Graphene is and all these things it can be used for but no-one has figured out how to make it in large enough quantities and cheap enough to turn any of these applications into products you can buy.

You might look at graphene aluminum-ion cells, also. I think we may see some advancements there sooner than the hard drives.

From this discussion (and others) it appears that what’s really needed is a response surface optimizing speed, cost, size, and actual (not perceived) risk of error/failure. Somewhere in all that is a “sweet spot” though with SSDs linked to Moore’s Law and Spindizzy Disks progressing in occasional order-of-magnitude leaps, the answer might be surprising.

Interesting content . However…..

For most readers not familiar with this stuff (like most Hackaday readers I am sure) the acronyms are really irritating.

HMS – head-media spacing, COC carbon-based overcoats etc. Really?

For this audience a simple search and replace in your editor would make it a lot better to read.

Well, first of all, this is a hacker space blog for hackers. Anyone is welcome, and if they have trouble understanding an unusual acronym, they can always look it up on Wikipedia or just Google it. But as for graphene, I’m thinking that it is starting to look a lot like nuclear fusion. You know, just another few years down the road.

Well. Not one “It’s HAMR time!” or “Can’t touch this!” joke.