An essential tool that nearly all of us will have is our laptop. For hardware and software people alike it’s our workplace, entertainment device, window on the world, and so much more. The relationship between hacker and laptop is one that lasts through thick and thin, so choosing a new one is an important task. Will it be a dependable second-hand ThinkPad, the latest object of desire from Apple, or whatever cast-off could be scrounged and given a GNU/Linux distro? On paper all laptops deliver substantially the same mix of performance and portability, but in reality there are so many variables that separate a star from a complete dog. Into this mix comes a newcomer that we’ve had an eye on for a while, the Framework. It’s a laptop that looks just like so many others on the market and comes with all the specs at a price you’d expect from any decent laptop, but it has a few tricks up its sleeve that make it worth a glance.

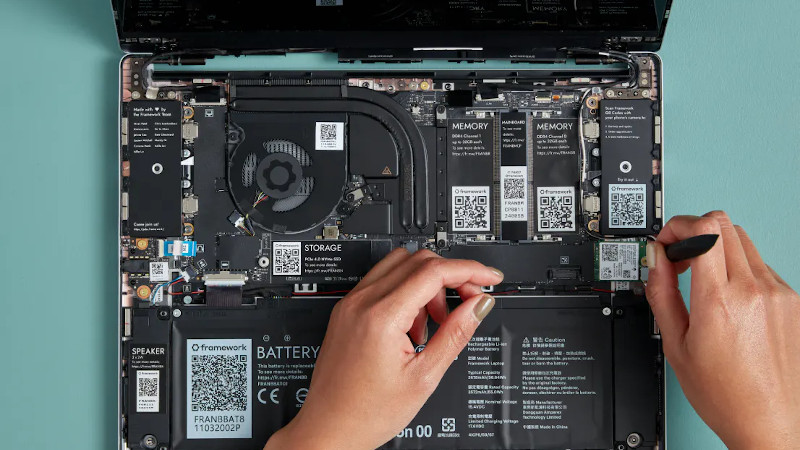

Probably the most obvious among them is that as well as the off-the-shelf models, it can be bought as a customised kit for self-assembly. Bring your own networking, memory, or storage, and configure your new laptop in a much more personal way than the norm from the big manufacturers. We like that all the parts are QR coded with a URL that delivers full information on them, but we’re surprised that for a laptop with this as its USP there’s no preinstalled open source OS as an option. Few readers will find installing a GNU/Linux distro a problem, but it’s an obvious hole in the line-up.

On the rear is the laptop’s other party trick, a system of expansion cards that are dockable modules with a USB-C interface. So far they provide USB, display, and storage interfaces with more to come including an Arduino module, and we like this idea a lot.

It’s all very well to exclaim at a few features and party tricks, but the qualities that define a hacker’s laptop are only earned through use. Does it have a keyboard that will last forever? Can it survive being dropped? Will its electronics prove to be fragile? These are all questions that can be answered only by word of mouth from users. It’s easy for a manufacturer to get those wrong (the temperamental and fragile Dell this is being typed on is a case in point), but if they survive the trials presented by their early adopters and match up to the competition, they could be on to a winner.

Mmmm… it sounds like a very good idea: to define a module specification for laptops based on USB-C.

We’ve also released reference designs and documentation under an open source license for the Expansion Card system to enable community developed modules: https://github.com/FrameworkComputer/ExpansionCards

What I’d like to see is an expansion to ExpressCard to have a connector using the full 54mm width, with the addition being more PCI Express lanes and the additional lines for USB 3.x, plus an update to at least PCIe 2.0.

The current ExpressCard connector is 34mm wide and is basically just a combination of PCIe 1.0 x1 and a USB 2.0 port. Having an expanded ExpressCard (that’s still compatible with existing cards) would be ideal for laptop expansion modules, better than a USB C connection for many things, especially mass storage. It would also make adding an external GPU easy and inexpensive.
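For a rough sense of the numbers behind this comparison, here is a back-of-the-envelope sketch in Python. The line rates and the 8b/10b encoding overhead are the published figures for PCIe 1.x/2.0 and 5 Gbit/s USB 3.0. The function name and the choice of links are only for illustration, and real-world throughput is lower once protocol overhead is counted.

```python
# Rough per-direction payload bandwidth after line encoding, in MB/s.
# Packet and protocol overhead are ignored, so real throughput is lower.

def payload_mb_per_s(gigatransfers_per_s, lanes=1, encoding=8 / 10):
    """Raw line rate (GT/s per lane) -> usable megabytes per second."""
    bits_per_s = gigatransfers_per_s * 1e9 * lanes * encoding
    return bits_per_s / 8 / 1e6

links = {
    "PCIe 1.0 x1": payload_mb_per_s(2.5),
    "PCIe 2.0 x1": payload_mb_per_s(5.0),
    "PCIe 2.0 x2": payload_mb_per_s(5.0, lanes=2),
    # USB 2.0 does not use 8b/10b, so its 480 Mbit/s signalling rate is taken as-is.
    "USB 2.0": payload_mb_per_s(0.48, encoding=1.0),
    "USB 3.0 (5 Gbit/s)": payload_mb_per_s(5.0),
}

for name, mb in links.items():
    print(f"{name:20s} ~{mb:.0f} MB/s")
```

On these figures a single PCIe 2.0 lane and a 5 Gbit/s USB link have the same raw payload rate, so the case for a wider connector rests on extra lanes and on PCIe's direct device access rather than on the per-lane line rate.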

I like that the modules are basically dongles by another name and form factor

The form factor is important. The LTT video on this laptop points out a lot of actual, real-world use cases that are improved by having an internal dongle. There’s nothing wrong with putting an interchangeable connector within the profile of the laptop. The idea is to make it normal or standard, so you can just pick up a module for whatever you want. Some laptops have done this in the past, where there was a standardized SATA connector, and there actually was a standard where you could hook up power as well, so you could swap out an extra battery for an SSD or an optical drive. But the SATA standard was not fast/good/flexible enough. Part of the problem was that almost nobody included the power connectors. You could jam in anything that could run over SATA. By adding in something as universally exploited as a fast USB interface, plus space to install a controller, you no longer need dongles and you aren’t limited by a SATA interface. This is the dongle, and it doesn’t “dong”, so it doesn’t hang out, so it doesn’t “dongle”, it “dingles” *trademark reserved by me*, which is REQUIRED for most daily ease of use. I have always hated laptops and other mobile devices for completely ignoring expansion, when the only ‘good’ reasons to do so were twofold: cost, and selling a new device with slightly more capability for boatloads more profit. Look at the whole laptop a little deeper and you’ll understand that they had a spot-on list of things to tackle, and they did it great right out of the gate.

The best part about the dongle IS THE NAME…I mean, it’s got “dong” in it!!!

Looks interesting – I would suggest the lack of an option for a Linux-based OS is adequately covered by the NONE option for a supplied OS. Could you imagine the outcry if Debian was installed and not Ubuntu or whatever other flavor one prefers – the safest option is to leave it blank, and then the end user can install whatever they like without having to first remove windoze…

I don’t trust USB, it’s UNRELIABLE. Gimme PCIe everywhere!

While on the whole I think you are correct as PCIe is simpler and still more than fast and effective enough, that ship has sailed. These ‘USB’ based modules are a good choice for now – as the current USB that does everything spec has been taken up so enthusiastically that most anything will be available or at least easy to make available for the connector (as it really does try to do everything)..

I hope they design a clip in that just carries a cable – so when you are using the laptop as a more permanent installation you can have the dongle full of connections you need plugged into that internal space rather than sticking out of the machine as much.

I disagree, USB still sucks.

Yes, it is fast enough now.

Yes, it is reliable enough now.

But the problem comes in when you have more than one of the same device. Each time you boot up they get re-numbered randomly.

For example that really sucked for me during work-at-home because I had three video devices, my webcam, a tv card and a security camera mounted over my driveway. Each time I started our meeting software I had to go into setup and cycle through cameras until I found the right one.

For some kinds of devices, like serial devices, this can be handled by writing udev rules. But should someone really have to write udev rules just to name their hardware? I didn’t find any udev solution for my problem though. Sure, there were options to auto-generate persistent aliases, but the OS would still create random /dev/video_x devices and our meeting software only looks for those, not /dev/whatever_i_named_my_camera.
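One possible workaround, sketched here in Python: many Linux systems already ship udev rules that create persistent symlinks under `/dev/v4l/by-id`, so a small wrapper can at least report which `/dev/videoN` node each camera landed on this boot. The function name is made up for illustration, and the directory will simply be missing on systems without those rules or without any video devices. This does not make meeting software accept a custom device name; it only tells you which index to pick.

```python
import os

def stable_video_names(by_id_dir="/dev/v4l/by-id"):
    """Map persistent udev symlink names to the /dev/videoN node they point at today."""
    mapping = {}
    if not os.path.isdir(by_id_dir):
        return mapping  # no persistent V4L symlinks on this system
    for name in sorted(os.listdir(by_id_dir)):
        link = os.path.join(by_id_dir, name)
        if os.path.islink(link):
            # Resolve the symlink to the real device node, e.g. /dev/video2
            mapping[name] = os.path.realpath(link)
    return mapping

for name, node in stable_video_names().items():
    print(f"{node}  <-  {name}")
```

A launcher script could call this before starting the meeting software and print (or pre-select) the node whose symlink name contains the webcam's model string.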

“Each time you boot up they get re-numbered randomly.”

Not if the device is serial numbered in its firmware, and the OS should be ordering and loading drivers for devices based on the port they are plugged into, (root/downstream) hubs identify port by number, so the devices should be loaded in order.

The tree is no different than a PCI bus at that point, so it’s usually a software or driver limitation when multiple (identical?) devices of the same type don’t work as expected when all are connected at the same time.
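To make the port-numbering point concrete: on Linux, sysfs names each USB device by its bus number and the chain of hub ports leading to it (for example `1-1.2` under `/sys/bus/usb/devices`). The Python sketch below sorts such names by topology. The helper names are hypothetical, and whether a given driver or application actually enumerates devices in this order is a separate question that this sketch does not answer.

```python
import re

# "<bus>-<port>[.<port>...]", e.g. "3-1.4.2"; root hubs ("usb1") and
# interfaces ("3-1:1.0") deliberately do not match.
PORT_PATH = re.compile(r"^(\d+)-(\d+(?:\.\d+)*)$")

def parse_port_path(name):
    """'3-1.4.2' -> (3, (1, 4, 2)); returns None for anything that is not a device entry."""
    match = PORT_PATH.match(name)
    if match is None:
        return None
    bus = int(match.group(1))
    ports = tuple(int(p) for p in match.group(2).split("."))
    return bus, ports

def sort_by_topology(names):
    """Order device names by bus number, then by port chain, dropping non-device entries."""
    parsed = [(parse_port_path(n), n) for n in names]
    return [n for key, n in sorted(p for p in parsed if p[0] is not None)]

print(sort_by_topology(["1-10", "1-2", "usb1", "1-2.1", "1-2:1.0", "2-1"]))
```

Sorting numerically rather than as strings matters here: port 10 should come after port 2, which a plain string sort would get wrong.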

Also, I haven’t run into the problem with multiple video devices you mentioned in years, and I have 4 USB-based devices connected and close to a dozen sound devices, most of them USB-based. The only sound devices I have issues with are the dynamically assigned DP/HDMI ones (monitors) via the Nvidia driver, but that’s a driver issue since it’s not labeling them with the monitor serial number from EDID…

The 3d files for expansion cards are available. You could totally make an empty sleeve for cable storage! Great idea

https://github.com/FrameworkComputer/ExpansionCards

No reason that modules couldn’t use PCIe in the future.

Having PCIe over a USB-C connector is known as thunderbolt, and is supported by a lot of laptops out on the market, wouldn’t be too much of a stretch for a future motherboard to have thunderbolt support for the modules if needed.

You mean like USB 4?

PCIe hotplug is far more spicy than USB, and USB 3.0 PHY is very similar to PCIe anyway. Anyone with a Thunderbolt laptop dock can attest to how finicky PCIe-over-hotpluggable-cables can be.

Connector-wise USB-C is decent, and in this application the module is retained to keep it connected.

Besides, you probably use tons of embedded USB devices and don’t know it: laptop webcams and laptop bluetooth are almost always internal USB devices.

Man, make it an OCP 3.0 module standard. And you can even have a GPU on it or some fancy networking. Imagine cabling your laptop directly into an IB cluster :)

£100 says there is no mobo replacement for the next CPU gen let alone the one after that.

As in you think the company is going to go bust or that they’ll introduce an incompatible second generation?

The easiest way to tell this isn’t going to work is to compare your current to last laptop. Is it roughly the same physical size? For most people the answer is no. Did you upgrade your screen to a higher res? Often yes.

High res screen can be fitted to this no trouble it seems – the cable routing and mounting for such a new screen is well thought out. Looks like the easiest replacement I’ve ever seen. Though at the same screen size going up more really isn’t worth it – it’s going to be so small an improvement from the normal viewing distance that you just won’t see it – a better colour space, fancy touch stuff or more brightness for daylight viewing maybe, more resolution not likely..

Laptops generally are getting smaller historically – but we have hit the point now where they can’t get any thinner and still have the IO connectors. Plus getting any thinner will get uncomfortable to handle – the hand isn’t going to magically shrink to match the device…

So I really can’t see a reason it can’t have a mobo replacement with future tech. The thermal management in such a case is likely to be overkill for future mobile chipsets as everyone gets more concerned about efficiency, and even if there is a few chips out there too hot for it to cool there will be some of the new family that are not.

About the only thing that would kill that idea is the modular connection bays using that USB connector once it is a dead standard in favour of some new hotness that isn’t compatible. Which isn’t going to happen for quite some time, even if there is a new not compatible system it will take years for this older connector to really die (and in fact it only has to be the footprint of similar size so it fits in the same chassis – which is almost certain to be true – connectors are not going to suddenly go back to serial cable size)..

Now the company going bust, getting bought out and quietly shut down etc is very believable, but on a technical standing there is no reason at all you can’t build with future tech to fit this frame, and no reason you wouldn’t want to on the horizon either.

Look at repair manuals for laptop series that span 13.3″ to 17″. Usually the motherboard is the same and only the chassis changes, and the ports on one side of the chassis go through ribbon cables or extender PCBs.

The screen is also user replaceable, and the whole point here is to standardize a “mother board standard” for laptops like atx is on desktops, I believe they’ll make it work, there’s already a ton of hype around it, and yes the motherboard has the ability to accept touch input so a touch screen upgrade is likely coming down the pipeline. The bezels of the laptops snap off with magnets and the panel is held on by 4 screws.

Will they make a different standard motherboard for different sizes of laptops?

My laptops have standardized to 14″ as that’s a convenient size for a bag. As such, the screen resolution hasn’t changed from standard 1080p either because things would become too tiny to see. Yeah, maybe you could double the res and look at photos at the equivalent of mediocre print resolution, but then you’d have to scale the UI and text by 200% so what’s the point? I’d rather pay less money.

Hauling a 17″ “desktop replacement” around became very old very fast, because they’re bulky and they can’t be made light without compromising mechanical durability. They’re just too big and too fragile to be used, except bolted down to a desk, in which case: buy a real computer at half the price.

And “gaming laptops” are still a total gimmick. Buy a real computer at half the price if you want to play.

Actually, with current desktop GPU prices, gaming laptops are a good deal..

How does that figure? A gaming laptop can’t sustain the power draw of a modern GPU from both power supply and cooling perspective anyways, so you’re basically using a hobbled throttled version of the chip that runs with fraction of the performance, which is nevertheless sold to you at desktop model prices.

Same as with the CPUs. You nominally have the latest and greatest, but the cooling system can’t sustain more than a few dozen Watts continuous power draw so it starts throttling when you try to do anything with it.

So you pay for top shelf stuff, plus an extra premium because it’s a laptop and the parts are not standard, and you get bottom shelf performance. At least with a desktop machine you get proper cooling and power supply, so it’s a better machine even if the GPU eats up the rest of the budget.

Yeah, I’m with Jack here, the current market makes a laptop for gaming a steal.

And even without the current wonky prices I would say any of the last and current gen gaming beasts are really quite good – the power consumption to performance has shifted a fair bit so the low end GPU for gaming are really not bad giving pretty high performance even with slim’n’light cooling.

While most of the slightly larger laptops with real performance parts now share cooling between a CPU and GPU that each individually pushes their power and thermal budget with dynamic power control – so you can actually run and cool that big 200W+ GPU flat out in a game, at the cost of CPU power budget and so performance – it is just finding the balance to get as much power into the system as the cooler can take split as well as possible between the two big heat generators, and many gaming laptops do that pretty well automatically while giving you controls you can dial in if needed…

A desktop rig still has some advantages of course, but the current crop of gaming laptops are really impressive and even at suggested MRRP are not terrible value. Just much less upgradeable.

>that big 200W+ GPU

Yeah, except a typical gaming laptop doesn’t have a 200 Watt GPU, it’s running at 80W max (E.g. GeForce RTX 3060) which is limited to 60 W + 20 W “dynamic boost”. It’s basically a crippled version of the desktop part.

The limits come from the fact that you only have a 75 Wh battery, which is optimized for high energy density instead of high discharge current. With the CPU and the MB and everything else, you’re already killing it at full power.

Also, the wall power supply maxes out at around 180W so there’s that as well.
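The battery argument can be put into numbers with a short Python sketch. The 75 Wh capacity and the 80 W GPU figure come from the comment above; the CPU and "everything else" wattages are assumptions picked purely for illustration, and the runtime ignores conversion losses and battery ageing.

```python
BATTERY_WH = 75.0  # capacity quoted in the comment

def c_rate(load_w, capacity_wh=BATTERY_WH):
    """Discharge rate in multiples of capacity per hour (1C empties the pack in one hour)."""
    return load_w / capacity_wh

def runtime_minutes(load_w, capacity_wh=BATTERY_WH):
    """Idealised runtime in minutes at a constant load."""
    return capacity_wh / load_w * 60

gpu_w = 80.0   # 60 W + 20 W dynamic boost, from the comment
cpu_w = 45.0   # assumed, not from the comment
rest_w = 25.0  # assumed: display, mainboard, storage, fans
total = gpu_w + cpu_w + rest_w

print(f"{total:.0f} W -> {c_rate(total):.1f}C, about {runtime_minutes(total):.0f} min")
```

With those assumed figures the load is 150 W, which is a 2C discharge and would empty the pack in roughly half an hour. It also sits under the 180 W adapter figure mentioned above.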

Yeah many don’t push that 200W hard, and you are right even the real powerhouses don’t push that much on battery – its just not feasible, but when plugged into the wall it becomes possible – heck some of the really powerful ones have two charging bricks to let you run it flat out at your desk, but will work off one very well – just not as good as it can do, or off the battery in limp home style mode for a little while..

But even the cards at 80W and below now have really solid performance. Yeah, they are not the same performance or even the same chips as the similarly named/numbered desktop cards – which is a scummy naming con they have been using for eons. But they are not millions of miles away, and are very definitely able to run even the latest games at good framerates and settings at normal laptop resolutions – just don’t try to game in 4K on ultra on them and you won’t know it’s not a desktop for most games (at least without telemetry up to compare – once you get over the refresh rate of the monitor, or even just hit 60fps-ish, it becomes nearly impossible to actually tell)…

>even the real powerhouses don’t push that much on battery

It’s a typical design choice to play by the battery limitations at all times, because they don’t want to demand that you have to have the power brick in to play games, and also, they don’t want to run down the battery while its plugged in – so first they can’t exceed the battery specs, and secondly they have no reason to include a power adapter that is more powerful than what the battery can provide.

The special laptops that have two power adapters are even more expensive, and they’re not useful as laptops anymore because you have to keep them plugged in to play, and haul around two huge power bricks, so that’s getting in the territory of special pleading because they’re basically more expensive desktop machines in the form factor of a laptop.

>But they are not millions of miles away

That is not required. Only that the laptop parts are equally expensive or more so, despite being some fraction of the performance of their desktop counterparts. You pay almost the same price for a quarter or a third of the computational power at a best guess.

Dude the serious gaming laptops of today are very much more like fairly high end desktops that happen to be portable with inbuilt battery backup. On those really high end gaming ‘desktop replacement’ styles in laptop form nobody expects superb battery life and full performance on battery – its just not what they are for – but what they will do on battery is function with still greater performance than the thin’n’light macbook wannabe crowd for long enough to be useful. Though with how good low power CPU and GPU have gotten recently noticing that performance difference can be much harder than it used to be…

The only things that limit the maximum power a gaming laptop can handle while plugged in are cooling and the power brick, and even that is not a hard and fast limit: it’s not impossible to spike over the brick’s capacity and draw from the battery to deal with it short term – the battery is still charging on average, albeit very slowly, in that scenario.

Remember it’s a computer, and modern computers are all about dynamic scaling – power and thermal management. It’s pretty trivial to run a 100W CPU at nearly any power budget you care to set, and it will still perform well enough – there is no need at all to put in only components the battery can run flat out – all the battery must be able to do is provide enough juice to run the device at all for whatever you decide the minimum time should be, which for desktop replacements under load is usually at most a few hours. For a modern and popular example look at the Switch: far greater performance when plugged in, as the battery just wouldn’t handle that. Even laptops from the pretty dim and distant past would scale to lower power budgets on battery power, and be deliberately scaled back further if battery life was a high priority, so that really isn’t an issue at all..

Same thing with cooling – many many desktops even can’t cool their components flat out for long, but they can run at that peak speed for useful periods of time before having to throttle back. Sure something like a macbook barely lasts at top speed long enough to notice and has to throttle very aggressively its cooling is so poor, but many of the gaming oriented laptops won’t throttle down much and can stick there still at a very high speed, as the cooling is actually good enough.

Obviously there are downsides to a laptop vs desktop, but in recent years price to performance in the real world, especially if you are only gaming hasn’t really been much of a downside – for some price points gaming laptops have actually become superior to the desktop by meaningful margins..

Actually, windows allows adjustments of icons, based on the resolution being used.

I’ve used 4K on big screen, and small (17″) screen as well.

With older versions of Windows, I did experience these problems with appropriate icon sizes at the various screen resolutions being used.

Yeah, they actually have solutions for that… so do most laptop manufacturers, due to the fact that most laptop models are configurable with at least 2 different screen types… I have seen in one series of laptop, the ability to do a 1080p screen, 1080p high refresh rate, 4k, and 4k touch screens. That’s four options you can generally find on the second hand market. To think that a laptop specifically designed for replaceable screens would not do this is illogical.

Not to mention, I don’t doubt that almost every laptop designer is going to copy (or is already copying) these methods to comply with the possibility of right to repair actually having some teeth.

This company, given enough adoption and strong enough patents, could theoretically make enough money just to license the form factor to other laptop manufacturers in the future, or just outright sell it under a different branding so the purchasing company does not have to do in house research.

The reason why you can swap things around is because the screens are not made by the laptop manufacturer, but by some bigger company that manufactures different versions in the same format. It is not necessarily so that you will find a particular panel with particular mounting points and controllers, connectors, etc. in different versions – that depends on whether the original manufacturer wanted to sell different options or not.

Or both. They can introduce an incompatible 2nd gen, then go bust after community backlash before they can make it work with the old chassis.

well, looks like the £100 has misspoken ;-P

The only minor niggles I have against this specific laptop is that it only has modules for all its IO… (Except the headphone jack on the right side.)

It would have been nice to see a USB-C port on either side of the laptop and preferably one on the backside too. Since then we do not need to waste the modules on something we will need regardless, and can instead spend the modules on more useful ports.

I would also not mind seeing a module on the backside of the device, since cables going out the back makes a ton of sense in some areas. And for those who don’t fancy ports on the back, they can use it for a storage device instead.

I think that is a future-proofing thought: data and power connections change generationally, so having a fixed port means the next generation comes out and you are stuck with a port you will at some point not want any more. But headphones and their jack are never going to change; it’s a connector with massive history, well optimised over all its years of service, and about as small as is comfortable for a human hand to use. Once you work your way to something so effective, you don’t invent a new functionally identical one for the sake of it.

IO on the back is nice, but almost impossible on such a thin device – the screen can occupy the same space as the cables plugged into that IO – its a bad idea. So you either need to bulk the thing up a little – which I’d be fine with personally or accept side IO only.

Considering how the standard USB Type A port has been around for over two decades without any real changes. (USB 3 came in and added some extra pins on its front, but that is about it.)

USB-C is the new port that is supposedly going to replace type A, for better or worse….

It has already been around for a good while, and is likely to stay for a long time considering that it has six differential pairs in it (even if the two center ones are “supposed” to be the same; nothing rigid in the standard requires this for future implementations, though one should still expect old cables to tie them together).

It wouldn’t surprise me if USB-C is here to stay for a long time into the future. So I can’t see much deficit with adding it as a permanent port. Especially when the modules themselves are just USB-C ports in a glorified recess.

What protocols the port supports is though all up to the motherboard to decide. After all, we are in the fun reality where a USB-C port doesn’t have to support USB, even on a consumer product. (I have seen USB-C ports that are exclusively display outputs/inputs and nothing more.)

Not that manufacturers are incentivized to do this but I think it’s time to start making future USB connectors backwards compatible. There’s a mindset I think, not just with the manufacturers but even some consumers that any standard more than a few years old has to be replaced.

I like to compare it to power outlets (here in the US anyway). I can go to an antique store, purchase an electric device that is over 100 years old and it still can be plugged into a modern wall outlet and work. Obviously one would want to inspect the wires and mind the polarization if the chassis is metal but it can be done and it works. And if you find something so old it actually pre-dates the current outlets.. no biggie, the previous standard is still what we screw our light bulbs into today.

Standards older than USB-C did lack some things. The ability for the same device to be either host or client is great. (Firewire should have won). Variable-voltage power is awesome, as is the high speed and the ability to send video.

I would like to see a second, larger version of USB-C for non-portable accessories, something that is a little harder to pull out and durable like a tank. Kind of like the old USB-A, not mini, not micro. That and the current USB-C can coexist for the next 100 years. Or at least until we abandon copper for fiber-optics.

Reversible Type A connectors are finally getting out there. I bought a Dewalt branded USB 2.0 to Type-C cable for my phone and surprise, surprise, the Type A end is reversible, with contacts on both sides of a thin blade in the center. Can that be done with USB 3 Type A?

It would not surprise me either – but usb/thunderbolt type stuff hasn’t really stabilised yet, is quite messy with compatibility and standards compliance, so a change or two to how its used would not be a big surprise either.

Using it for power as well as data means fixing that port to the mobo, which then limits future mobo features for that case, every mobo from now till doomsday must use USB-C – the chassis has the current slot in not some future connector shape.. Where the modules themselves can cease being usb-c and move on to that new standard easy enough (assuming the connectors exist in similarly small form factor – nearly a certainty) – as all the modules are is an alignment and latching lump to push the usb male into the female one directly on the motherboard – so you keep battery, keyboard, mouse and screen but move on to the new connector for everything on mobo Vers 2/3/4 – whatever point it comes in.

POWER. Reams of it.

Yeah who needs batteries? Where’s that 20A outlet? Need lots of power to compile those Arduino sketches!

That’s no “Hacker’s Laptop”, it has no keyboard drainage holes. Real hacker’s equipment will endure the occasional donation of beverages without getting messed up inside.

It’s a laptop. Do you want the beverage draining into the motherboard?

My Panasonic Toughbook has what amounts to a “drainage ditch” for the keyboard. Then again, it’s about 2″ (5cm) thick.

Some of them are even water tight enough to be rated for use in the rain… Love me a Toughbook, always solid, and usually far lighter than you would think for their durability.

I thought ALL toughbooks were rated to be used in the rain? Or have they gone soft these days?

My old CF27 / CF25 you could bang nails into a wall with, the CF18 was a bit more delicate but still very much usable “in the field” in all conditions.

Had an Itronix IX250, which was a CF27 clone; those things were equally tough.

Our XPlore was brought down by dirt getting in the pogo-pin-array-external-docking-connector thing, and tech reached a point where a cheap Android tablet in a Peli case was starting to look like an easier and cheaper option.

There are fully rugged and semi-rugged Toughbooks, or at least there used to be. I don’t actually know if the current models are all rain proof and ‘fully’ rugged; I can’t afford new Toughbooks so I don’t look – it’s like the Apple tax, just for good reason!

But there are older ones that while still tougher than a normal laptop are not rated for the 6ft drop onto concrete, etc.

Thinkpads have such holes that go straight thru to the bottom side, they are even appropriately marked with a drop symbol. Such small details make the difference between consumer toys and professional gear.

I’ve seen some Lenovo Thinkpads that very obviously had their keyboards designed to contain some pretty large spills, direct them down to a gutter then out drain holes through the bottom of the laptop. At worst one would have to replace the keyboard if anything worse than water was spilled.

They were also made so that everything ‘top loaded’ into the case. Remove 3 or 4 screws holding the keyboard and palm rest then turn it upright to remove the palm rest, keyboard, and everything else as desired.

There is a lot to like here. We know that processor upgrade-ability can be a crap-shoot (how many times has Intel changed pins/sockets). But, “the Framework Laptop is the only high-performance notebook with a replaceable mainboard that lets you upgrade to new CPU generations if you ever need a boost in the future.”

My nit is the display resolution: 2256×1504. There are no options here or maybe we can use a different panel. The website configuration options do not show a choice. However, the pictures and the descriptions imply that it should be feasible to change the display.

What’s wrong with it?

It’s almost literally half to a third of the resolution it should be?

4K @ 4096×2160 = 8.8 million.

This is only about 3.4 million.

No touch screen or pen inputs either.

Should be?

on a 13.5″ screen 2256×1504 results in 201 DPI which is perfectly good. Much better than most printed documents, considering you also have ClearType. Anything more and you’d have to upscale your UI which takes more GPU time (more battery) and results in blurring of all the fixed resolution content (bitmaps) to make small print legible again. A higher resolution panel at the same size lets less light through the grid so it’s also dimmer (or uses more power again).
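The pixel counts and the density figure quoted in this exchange are easy to check with a few lines of Python. The resolutions and the 13.5 inch diagonal come from the comments above; the 13.5 inch 4096×2160 panel is a hypothetical comparison point rather than a real option for this laptop.

```python
import math

def megapixels(width, height):
    """Total pixel count in millions."""
    return width * height / 1e6

def pixels_per_inch(width, height, diagonal_in):
    """Pixel density from the resolution and the diagonal size in inches."""
    return math.hypot(width, height) / diagonal_in

print(f"2256x1504: {megapixels(2256, 1504):.1f} MP, "
      f"{pixels_per_inch(2256, 1504, 13.5):.0f} PPI")
# Hypothetical: the same diagonal with a 4096x2160 panel
print(f"4096x2160: {megapixels(4096, 2160):.1f} MP, "
      f"{pixels_per_inch(4096, 2160, 13.5):.0f} PPI")
```

Both sides of the argument have their arithmetic right: the panel is about 3.4 million pixels against 8.8 million, and it works out to about 201 PPI.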

You end up paying more for a “better” panel and get worse results.

The display resolution race is the same stupid contest as the megapixel race in digital cameras, where everyone stuffed more pixels in the same tiny sensor and got nothing but noise out of it.

Also, whose stupid idea was it to make Windows 10 default to 150% UI scaling on laptops?

I see it everywhere, and people don’t know how to turn it off or even realize it’s on, so everything looks like the monitor was set for the wrong resolution – which it basically is.

FYI the ideal dot pitch (inverse of DPI) of a monitor is approximately 1/60 of a degree for most people. Anything less and you can’t really see it.

For a typical viewing distance of 70 cm across a desk, that’s about 0,20 mm or 125 DPI. Halve that distance for a laptop screen, and you get 250 DPI. ClearType or any other subpixel rendering technique then boosts this up beyond what you can ever make out with the naked eye. In practice a slightly lower resolution will do. For color information, the eye’s resolving power is much less, so you don’t have to care about missing any details in photos or games.
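The figures above follow from simple trigonometry, sketched here in Python. It assumes the one-arcminute acuity figure from the previous comment and treats a pixel as the smallest detail that needs resolving; the function name is only illustrative.

```python
import math

def acuity_limit_dpi(distance_cm, arcminutes=1.0):
    """DPI at which one pixel subtends the given visual angle at the given distance."""
    angle_rad = math.radians(arcminutes / 60)
    pitch_mm = distance_cm * 10 * math.tan(angle_rad)  # pixel pitch in millimetres
    return 25.4 / pitch_mm                             # 25.4 mm per inch

for distance in (70, 35):
    print(f"{distance} cm: ~{acuity_limit_dpi(distance):.0f} DPI")
```

Because the angle is tiny, the limit scales almost exactly inversely with distance, which is why halving the viewing distance doubles the DPI figure.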

Why does this matter? Why isn’t more better? Because the relaxed state of your eye is far-sighted. It stresses your eyes less the further away from the monitor you are, so you don’t want to use a resolution that is so sharp you have to bend in to read the fine print, because you do end up hunching your nose up to the screen automatically.

The good thing is that they claim anyone can make a module that fits that slot. That could solve one thing that seems odd to me: the USB-C module clearly has room for two ports, not just a single pass-through. I can also see someone making one that takes up two slots and houses a complete hub, working almost like a dock.

Buy an existing USB-C hub dongle.

Remove from enclosure / reduce cable length.

3D print compatible module enclosure.

Profit!

No nipple mouse, no sale.

No actually decent quality touchpad that doesn’t cause nerve damage like a keyboard nipple mouse does? No sale.

True, it has its downsides, but a touchpad is tedious and downright useless for anything other than basic web browsing.

I can play Homeworld II on my laptop on the train with a trackpoint because you can actually use all three buttons while moving the cursor.

Also, a trackpoint doesn’t exclude a touchpad.

That would have been awesome! Some years ago.

These days I’d rather have my phone, a NexDock and a truly customizable and powerful desktop sitting at home that I can remotely access when I need more than my phone provides.

Maybe my next phone will be one of those open-linux phones I’ve been hearing about, maybe with that I won’t even need the desktop so much.

The NexDock sure looks like a nice idea, although I don’t know why it doesn’t have a place to attach a phone securely rather than having it dangling off on a cable. I seem to remember a Motorola phone dock laptop thing that was very similar from several years ago; maybe being USB-C will make this more of a success.

Yeah, except a phone has terrible software support, so if I were to use it I would be permanently remote desktopping with horrible latency.

Although it wouldn’t work anyways, because I don’t have one of those expensive phones that cost as much as a laptop, which have the compatible “desktop mode”.

No nipple mouse, no drainage, no idea if IME can be neutered (at least as far as I saw). I’ll hang on to my cheap “disposable” ThinkPads, thanks.

Don’t get me wrong, I like the LEGO approach… but there has to be a WHY to the WHAT. Nothing this thing does addresses any issues I have with my existing “hacker laptops”.

It’s a solution looking for a problem.

The main reason I keep to Lenovos is that they have the nub mouse AND all three clicky buttons under your thumb. HP has some el-cheapo version of the trackpoint which isn’t nearly as good, and the rest just don’t have it.

There’s plenty of software where you need a middle click + drag to e.g. rotate the view of a 3D object, which is just impossible with a touchpad, but alas some models even with trackpoint don’t have the buttons in the right place so they don’t work.

Dell has, or has had, a trackpoint on their Latitude laptops, along with a touchpad. What I really miss is when laptops had trackballs. Then Apple introduced their first touchpad on a PowerBook and all the rest of the computer industry rushed to get rid of trackballs.

^ This. As long as any laptop is relying on Intel or AMD they’re bundling you a backdoor built-in with IME/TPM/PSP. The only hackers that would use this are the ones that don’t mind jail time.

Hang on – that chassis. Are they buying dead Macbooks and hollowing them out? Props if they are.

What is it with half-height arrow keys and essential keys only available in combination with Fn? Are people expected to use an external keyboard most of the time or something? I use an Alienware M11xR3 that’s so old that backlit keyboards were novel. It’s a 13″ laptop that has.. full height arrow keys. And Page Up and Page Down, Home and End as discrete keys in similar locations to a full-size keyboard too.

It’s to fit in those silly island keys that are needlessly large and far apart. I miss netbook keyboards; they were actually really comfortable to type on since you didn’t need to spread your fingers like a piano player.

How does a hacker laptop come with Windows?

The modules seem more of a gimmick or novelty than anything else. You could fit a lot more in a smaller space just by building them into the laptop rather than making them removable. A whole module just to pass through the USB-C port, for instance? I feel it would be better if it just had a good set of peripherals. Imagine having to carry modules about with you on the go because you need more than can fit in the laptop, only to lose one somehow on your way to a presentation and find your laptop now lacks an HDMI port. And then there is the question of how much replacements for lost modules could end up costing.

Also, why add an Arduino module? Just why? This one is definitely a gimmick. Why add a very low-performance beginner’s microcontroller to a laptop like this in the form of a removable module? What benefit is there to having a rubbish microcontroller in a laptop? If you want to use one, just plug one in. Or if you insist on having a microcontroller module, make it worthwhile and use something better than an Arduino, such as an STM32 chip from the H7 series, something you can buy and not feel how slow it is doing basic tasks, so you haven’t got an expensive laptop with a cheap, low-performance microcontroller in it. Anyway, who wants to have Dupont wires hanging out of their laptop?

There should be at least one screw or some kind of latch to hold these modules. If they are just a press fit, I can imagine pulling the module out along with your connector when you unplug something.