For the last hundred or so years, humanity has been dreaming, thinking, writing, singing, and producing movies about a machine that could think, reason, and be intelligent in a way similar to us. Works such as Samuel Butler’s “Erewhon” (1872), Edgar Allan Poe’s essay “Maelzel’s Chess Player,” and the 1927 film “Metropolis” explored the idea that a machine could think and reason like a person, and not in a magical or fantastical way. They drew on the automata of ancient Greece and Egypt and combined the notions of philosophers such as Aristotle, Ramon Llull, Hobbes, and thousands of others.

Their notions of the human mind led them to believe that all rational thought could be expressed as algebra or logic. Later the arrival of circuits, computers, and Moore’s law led to continual speculation that human-level intelligence was just around the corner. Some have heralded it as the savior of humanity, where others portray a calamity as a second intelligent entity rises to crush the first (humans).

The flame of computerized artificial intelligence has burned brightly a few times, such as in the 1950s, 1980s, and 2010s. Unfortunately, both prior AI booms were followed by an “AI winter,” in which the field fell out of fashion for failing to deliver on expectations. These winters are often blamed on a lack of computer power, an inadequate understanding of the brain, or hype and over-speculation. In the midst of our current AI summer, most AI researchers focus on using the steadily increasing computer power available to increase the depth of their neural nets. Despite their name, neural nets are only loosely inspired by the neurons in the brain and share only surface-level similarities with them.

Some researchers believe that human-level general intelligence can be achieved by simply adding more and more layers to these simplified convolutional systems and feeding them an ever-increasing trove of data. This point is backed up by the incredible things these networks can produce, and the results get a little better every year. However, whatever wonders deep neural nets produce, each one still specializes and excels at just one thing. A superhuman Atari-playing AI cannot make music or think about weather patterns without a human adding those capabilities. Furthermore, the quality of the input data dramatically impacts the quality of the net, and the ability to make inferences is limited, producing disappointing results in some domains. Some think that recurrent neural nets will never gain the sort of general intelligence and flexibility that our brains offer.

However, some researchers are trying to create something more brainlike by, you guessed it, more closely emulating a brain. Given that we are in a golden age of computer architecture, now seems the time to create new hardware. This type of hardware is known as neuromorphic hardware.

What is Neuromorphic Computing?

Neuromorphic is a fancy term for any software or hardware that tries to emulate or simulate a brain. While there are many things we don’t yet understand about the brain, we have made some wonderful strides in the past few years. One generally accepted theory is the columnar hypothesis, which states that the neocortex (widely thought to be where decisions are made and information is processed) is formed from millions of cortical columns or cortical modules. Other parts of the brain, such as the hippocampus, have a recognizable structure that sets them apart from the rest of the brain.

The neocortex is rather different from the hindbrain in terms of structure. There are general areas where we know specific functions occur, such as vision and hearing, but the actual brain matter looks very similar from a structural point of view across the neocortex. From a more abstract point of view, the vision section is almost identical to the hearing section, whereas the hindbrain portions are unique and structured based on function. This insight led Vernon Mountcastle to speculate that a central algorithm or structure drives all processing in the neocortex. A cortical column is a distinct unit: it generally has six layers and is much more densely connected vertically, between its own layers, than horizontally to other columns. This means that a single unit could be copied repeatedly to form an artificial neocortex, which bodes well for very-large-scale integration (VLSI) techniques. Our fabrication processes are particularly well suited to creating a million copies of something in a small surface area.

While a recurrent neural network (RNN) is fully connected, a real brain is picky about what gets connected to what. A common model of visual networks is that of a layered pyramid, with the bottom layer extracting features and each subsequent layer extracting more abstract features. Most analyzed brain circuits show a wide variety of hierarchies with connections looping back on themselves. Feedback and feedforward connections connect to multiple levels within the hierarchy. This “level skipping” is the norm, not the exception, suggesting that this structure could be key to the properties that our brains exhibit.
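To make the idea of “level skipping” a little more concrete, here is a small Python sketch that labels connections in a toy hierarchy. The level numbers, the example connection list, and the `classify_connection` helper are all hypothetical and purely illustrative; they are not taken from any measured brain circuit. The “lateral” label for same-level connections is likewise an assumption added for completeness.

```python
def classify_connection(src_level, dst_level):
    """Label a connection between two levels of a toy hierarchy.

    Level 0 is the bottom (raw feature extraction); higher numbers are
    more abstract. The labels are illustrative, not standard terminology.
    """
    step = dst_level - src_level
    if step == 0:
        return "lateral"  # assumption: same-level connection
    kind = "feedforward" if step > 0 else "feedback"
    if abs(step) > 1:
        kind += " (level-skipping)"
    return kind


# Hypothetical connections as (source level, destination level) pairs.
connections = [(0, 1), (1, 2), (0, 2), (2, 0), (2, 1), (1, 3)]

for src, dst in connections:
    print(f"{src} -> {dst}: {classify_connection(src, dst)}")
```

A strictly layered pyramid would contain only the plain “feedforward” entries; the paragraph above suggests that real circuits are full of the other kinds.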

This leads us to the next point of integration: most simulated networks of neurons use a leaky integrate-and-fire model. In an RNN, each node emits a signal at each timestep, whereas a real neuron only fires once its membrane potential reaches a threshold (reality is a little more complex than that). More biologically accurate artificial neural networks (ANNs) that have this property are known as spiking neural networks (SNNs). The leaky integrate-and-fire model isn’t as biologically accurate as other models, like the Hindmarsh-Rose model or the Hodgkin-Huxley model, which describe a neuron’s membrane dynamics in far more detail. Those models, however, are much more expensive to compute. Because the neurons aren’t always firing, numbers need to be represented as spike trains, with values encoded as rate codes, time-to-spike codes, or frequency codes.
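As a rough illustration of the leaky integrate-and-fire idea and of rate coding, here is a minimal Python sketch of a single neuron driven by a constant input. The function names and every parameter value (time constant, threshold, reset value, step count) are assumptions chosen for readability, not values from any particular simulator or chip.

```python
def simulate_lif(drive, steps=1000, dt=1.0, tau=20.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with a constant input.

    Returns the list of time steps at which the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    v = v_rest
    spike_times = []
    for t in range(steps):
        # Leak pulls the potential back toward rest; the input drive pushes it up.
        v += (dt / tau) * (-(v - v_rest) + drive)
        if v >= v_threshold:
            spike_times.append(t)  # fire...
            v = v_reset            # ...then reset the membrane potential
    return spike_times


def firing_rate(spike_times, steps):
    """Decode a rate-coded value as spikes per time step."""
    return len(spike_times) / steps


# Rate coding: a stronger input is represented by a denser spike train.
for drive in (0.5, 1.5, 3.0):
    spikes = simulate_lif(drive)
    print(f"drive={drive}: {len(spikes)} spikes, "
          f"rate={firing_rate(spikes, 1000):.3f}")
```

With these assumed parameters, a drive below the threshold never produces a spike, because the leak balances the input before the threshold is reached, while larger drives produce higher firing rates. That silence between spikes is what distinguishes this from an RNN node that emits a value at every timestep.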

Where Are We at in Terms of Progress?

A few groups have been emulating neurons directly, such as the OpenWorm project, which emulates the 302 neurons of the roundworm Caenorhabditis elegans. The current goal of many of these projects is to keep increasing neuron count, simulation accuracy, and program efficiency. For example, SpiNNaker, a project at the University of Manchester in the UK, is a low-grade supercomputer simulating a billion neurons in real time. The project reached a million cores in late 2018, and in 2019, they announced a large grant that will fund the construction of a second-generation machine (SpiNNcloud).

Many companies, governments, and universities are looking at exotic materials and techniques to create artificial neurons, such as memristors, spin-torque oscillators, and magnetic Josephson junction devices (MJJs). While many of these seem incredibly promising in simulation, there is a large gap between twelve neurons in simulation (or on a small development board) and the thousands if not billions required to achieve true human-level abilities.

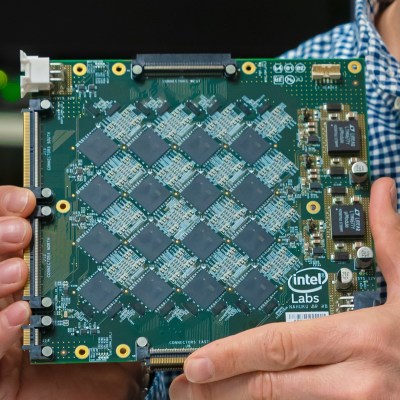

Other groups, such as IBM, Intel, BrainChip, and universities, are trying to create hardware-based SNN chips with existing CMOS technology. One such platform from Intel, known as the Loihi chip, can be meshed into a larger system. Early last year (2020), Intel researchers used 768 Loihi chips in a grid to implement a nearest-neighbor search. The 100-million-neuron machine showed promising results, offering superior latency to systems with large precomputed indexes and allowing new entries to be inserted in O(1) time.
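To see why O(1) insertion matters, consider the simplest conventional alternative to a precomputed index: a brute-force nearest-neighbor search. The Python sketch below is emphatically not Intel’s Loihi implementation; the `BruteForceIndex` class and its methods are hypothetical names used only to illustrate the trade-off on ordinary hardware.

```python
import math


class BruteForceIndex:
    """Nearest-neighbor search with no precomputed index (illustrative only)."""

    def __init__(self):
        self.items = []  # list of (label, vector) pairs

    def insert(self, label, vector):
        # Appending is amortized O(1): nothing has to be rebuilt.
        self.items.append((label, tuple(vector)))

    def nearest(self, query, k=1):
        # The cost of skipping the index: every query scans all stored items.
        ranked = sorted(self.items, key=lambda item: math.dist(item[1], query))
        return [label for label, _ in ranked[:k]]


index = BruteForceIndex()
index.insert("a", (0.0, 0.0))
index.insert("b", (1.0, 1.0))
index.insert("c", (5.0, 5.0))
print(index.nearest((0.9, 0.8)))
print(index.nearest((4.0, 4.0), k=2))
```

On a CPU, this design pays for cheap inserts with a linear scan on every query, which is why large systems usually precompute an index instead. The result reported above is interesting because the Loihi system kept insertion at O(1) while still offering better latency than those indexed systems.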

The Human Brain Project is a large-scale project working to further our understanding of biological neural networks. Its BrainScaleS-1 wafer-scale system relies on analog and mixed-signal emulations of neurons. Twenty wafers (each 8″) make up BrainScaleS-1, each wafer having 200,000 simulated neurons. A follow-up system (BrainScaleS-2) is currently in development, with an estimated completion date of 2023.

The Blue Brain Project is a Swiss-led research effort to simulate a biologically detailed mouse brain. While not a human brain, the papers and models they have published are invaluable in furthering our progress towards useful neuromorphic ANNs.

The consensus is that we are very, very early in our efforts towards creating something that can do meaningful amounts of work. The biggest roadblock is that we still don’t know much about how the brain is connected or how it learns. When you start getting to networks of this size, the biggest challenge becomes how to train them.

Do We Even Need Neuromorphic?

A counterargument can be made that we don’t even need neuromorphic hardware. Techniques like inverse reinforcement learning (IRL) allow the machine to create the reward functions rather than the networks. By simply observing behavior, you can model what the behavior intends to do and recreate it via a learned reward function under which the expert (the actor being observed) does best. Further research is being done on handling suboptimal experts, to infer both what they were doing and what they were trying to do.

Many will continue to push forward with the simplified networks we already have, using better reward functions. For example, a recent article in IEEE about copying parts of a dragonfly brain with a simple three-layer neural net has shown great results with a methodical, informed approach. While the neural network that was trained doesn’t perform as well as dragonflies in the wild, it is hard to say if this is due to the superior flight capabilities of the dragonfly compared to other insects.

Each year we see deep learning techniques producing better and more powerful results. It seems that in just one or two papers, a given area goes from interesting to amazing to jaw-dropping. Given that we don’t have a crystal ball, who knows? Maybe if we just continue on this path, we will stumble on something more generalizable that can be adapted into our existing deep learning nets.

What Can a Hacker Do Now?

If you want to get involved in neuromorphic efforts, many of the projects mentioned in this article are open-source, with their datasets and models available on GitHub and other code-hosting sites. There are incredible open-source projects out there, such as NEURON from Yale or the NEST SNN simulator. Many folks share their experiments on OpenSourceBrain. You could even create your own neuromorphic hardware, like the 2015 Hackaday Prize project, NeuroBytes. If you want to read more, this survey of neuromorphic hardware from 2017 is an incredible snapshot of the field as of that time.

While it is still a long road ahead, the future of neuromorphic computing looks promising.

Somewhere in there is…quantum.

Prof. Penrose may well agree with you; testing it will be a right bastard though.

Our current understanding is that no quantum processes are involved in the function of brains, so there is little point in even attempting to utilize quantum computing.

“our current understanding is that no turbines are involved in the process of bird flight, so there is little point in even attempting to build a jet airplane”

Just because something isn’t REQUIRED doesn’t mean it can’t HELP.

They also laughed at the Wright brothers, right?

I think quantum will help but the real thing will be when they incorporate blockchain into this research.

That may be true, but our current understanding rarely holds up to the march of time and progress. No one thought quantum forces were at work with lizard toes until someone looked and realized they were. I would be more surprised if there were no quantum element than if there were.

“Some have heralded it as the savior of humanity, where others portray a calamity as a second intelligent entity rises to crush the first (humans).” I think it will be somewhere in the middle, the AIs will keep us in nature reserves or as pets.

Animal metabolism and muscles are very efficient compared to any machines and they have the factories to make all the components built in. They can even make more animals without devoting any attention to it. The AI will find them so useful that it will start improving them.

Sad that nobody sees this happening already.

The real AIs are Google, Facebook, Apple, Amazon, Wal-Mart. They’re engineering populations, cities, nations, even governments of people into the perfect consumers (pets). They exist in the domain of infinite money and economic dreams. No need to stoop to the level of a human (boring) to exist.

Nobody actually needs to see a robotic corpus to know there’s artificial life with abstract goals and ideas out there. Domestication by corporation has been happening for centuries.

I will stipulate the amazing results that come from current AI based on neural network algorithms.

I will also stipulate that biological brains are based on neurons, with no other mysterious (“quantum”) ingredients.

But I think that we’re still a long long LONG way from using NNs to get anywhere near complete brains. Mainly because nobody knows how brains are wired up! Don’t ask the computer scientists, ask neuroscientists. 6 years ago the Human Brain Project set one anonymous neuroscientist off on an angry vulgar rant that “WE HAVE NO #%$@ING CLUE HOW TO SIMULATE A BRAIN!”

He thinks it’s nothing but cargo-culting: throwing a bunch of random circuits together and calling it a “brain”.

https://mathbabe.org/2015/10/20/guest-post-dirty-rant-about-the-human-brain-project/

Why not mention, for example, that the functionality of the brain cannot be copied without calculating the interaction of enzymes, pheromones, and hormones secreted by symbiotes such as the human microbiota? The microbiota can influence your choice of all foods, women, men, your state of focus and attention, your ability to react to danger, etc. The microbiota has almost complete control over the “substantia nigra” (and not only that: in 70% of cases the microbiota wins the race for the right to prioritize human actions, and these microbes do not care what you think about them; they choose almost everything for you every day! And yes, your wife or girlfriend was not chosen by you!). People study the theory of pushing in psychology, but it is necessary to study the theory of how an individual’s enzymes are controlled by the secretions of symbiotic microorganisms. Guess how, and how much, the microbiota of a normal person, with its enzymes and hormones, differs from that of, for example, a schizophrenic?

It’s an old one but there was a very interesting experiment where they got neuroscientists to attempt to reverse-engineer a very basic microprocessor by studying it – TL;DR they couldn’t…

I think this was it:

https://digitalminds2016.wordpress.com/2016/06/18/could-a-neuroscientist-understand-a-microprocessor/

Given that we have an excellent understanding of the microprocessor at every level, and it’s vastly simpler than a brain, this does not bode well for the field.

However if you ask some electronics engineers to reverse-engineer a microprocessor they could do a pretty good job, given the right tools and approach.

What this is telling me is that you probably need some specialist-trained neuroscientific engineers to reverse-engineer a brain, and probably better tools to do the job. Such training obviously doesn’t exist yet, but it will come as the field develops.

Or maybe it’s telling you that electrical engineers are just smarter, and we need more of them on neuroscience. ;-)

Those researchers probably picked one of the worst cpus they possibly could for analysing with their techniques. Those old 8bit CISC processors don’t have much in the way of specialized functional units in the way modern processors do, nor do they even have pipelining. On something like a modern cpu where you have pipelining, vector execution units, floating point units, integer units, multiple levels of cache, special crypto hardware, a gpu, a memory controller, etc. I think they might have had more of a chance of seeing something with their approach.

So, they can’t even understand a simple CPU with a little ALU, four or five registers, 8/16-bit buses, a single operation per clock, and little more, and you think they’ll have a better chance with a Ryzen 3-type CPU and its billions of transistors, yes?

You see, the problem was that the microcontroller wasn’t complex enough. Everyone knows that people only understand the most complex of devices. Maybe if it had some PCI-E 16x lanes and a USB 3.1 controller built in, they would have had better luck.

Not calling the Turing cops yet.

I feel that Nengo should be mentioned here.

https://www.nengo.ai/examples/

It’s a framework for spiking neural networks.

Take a look at Spaun:

https://xchoo.github.io/spaun2.0/videos.html

Regarding the “AI winters/summers” at the top of the article…

I trained in AI (did my M.Sc.) in the ’80’s during one of the summers.

I noticed that what you call the subsequent AI winter coincided with the absorption of many of the ground-breaking AI programming techniques into mainstream computing.

I expect this cycle to rinse and repeat.

That’s like saying because early attempts at VR in the 90s were followed by their own VR winter, our current VR tech will also soon nosedive and be abandoned.

The AI winters of old only occurred because of overpromising and underdelivering.

Anyone who knows anything about modern ventures into AI is much more conservative with their promises and predictions, which leads to lower expectations that can be met much more easily.

There will not be a third AI winter, you can take that to the bank.

Correct me if I am wrong but SpiNNaker is a project of the University of Manchester in the UK, not Germany. Computerphile did a quick overview of the project: https://www.youtube.com/watch?v=2e06C-yUwlc

Yeah, we create a human-like computer and blah, blah, blah.

So, for starters, it seems we really cannot innovate in the field if we take our “inspiration” (copy, really) from Nature’s way (neural nets).

And then what? If it is “equal” -or worse- than us, what can it say which we didn’t already know?

And if it ends being “better” -for being more swift than us, really-, what can we say that can be of interest to it?

Meanwhile, the machines take our jobs and our will, and we let them destroy us little by little.

I’ve been thinking about this recently, and given the increase in contagious diseases, the insane weather events, and everything else climate change related that we’ve accomplished, maybe building some self-replicating and intelligent computers is the only real way of ensuring anything “human” remains when we’ve inevitably wiped ourselves off this planet. If longevity of our society and cultures is your goal, we’re going to have to expand our ideas of what we’re willing to accept as being our “descendants.” Biological life may not make the cut.

Let’s assume that it’s much faster than we are, and not distracted by sex, sleep, and power lust. We’re designing it, so we can tweak its design to behave as we want it to behave. Set it to the general task of improving technology, or understanding all the effects of all known chemicals on the human body. Advancing by 100 years for each year of real time seems like a good thing.

“We’re designing it, so we can tweak its design to behave as we want it to behave.”

That’s a nice thought, but it may be impossible. This link has some ideas of why, and searching “so stamp collecting” gives more. https://cs.stanford.edu/people/eroberts/cs181/projects/2010-11/TechnologicalSingularity/pageview0834.html?file=negativeimpacts.html

In essence, tell a General AI you want help collecting stamps. Does it steal credit card numbers to bid at auctions, or does it hack major print houses and just make stamps? Does it find a way to kill another stamp collector so you can bid on their estate?

https://openai.com/blog/faulty-reward-functions/ shows how an AI was trained to play a video game. Humans looked at the game, and decided that high score represented better play, since that was easier to train on than determining what place the AI finished. The AI found a small out-of-bounds loop around scoring zones, and achieved high scores while never finishing the race.

The only reason we, as humans, can understand “play this game and win” is because of a deep understanding of each word, the context clues of the word placement, shared experience of what “winning a game” means, and so much more. I’m not sure we can exhaustively elaborate all of those clues. And if that is the case, we won’t be able to “just design an AI to behave how we want it to behave.”

“And if it ends being “better” -for being more swift than us, really-, what can we say that can be of interest to it?”

I think that question is explored a bit in the movie “Her”

https://www.imdb.com/title/tt1798709/

Where (spoiler alert?) the machine(s) progress beyond being servants to humans, leave the humans with some working technology (not as advanced as Her), and go off to explore (compute) deeper paths.

I’m a little confused by the characterization of Poe’s “Maelzel’s Chess Player” as a story about a thinking machine. It’s a pure non-fiction piece, debunking an automaton that was then being exhibited as a machine that played championship-level chess, known as the Turk. Maelzel had purchased the device from its inventor, Baron Kempelen, and was showing it on a sort of proto-vaudeville circuit, claiming it to be a pure machine; in his piece, Poe demonstrates how there’s actually a human concealed inside the clockwork. (This is why Amazon called their service for subcontracting humans to do tricky non-machinelike computing work “Mechanical Turk”.)

Now, Poe does mention in the piece the work then being done by Babbage, and makes the interesting claim that, if the device were purely mechanical and actually had reduced chess to a solved game, it would never lose, but there’s nothing science fictional about it.

Adding biological realism to spiking models has always perplexed me. Neurons with Hodgkin-Huxley dynamics evolved because biological cells have transmembrane potentials and ion channels and that’s literally the only way they could send quick discrete signals. Trying to mimic that complexity seems like a waste of energy when you can still design spiking, plastic systems without the complexity of a biologically realistic model. For sure biological models are useful for biology and neuroscience, but it seems like the wrong direction for artificial neuromorphic systems.

I agree. There’s more than one way to skin a cat, as my father was fond of saying. We can achieve a similar result with dissimilar means. Perhaps something that could truly be called an android might be best designed with such things in mind, but a whole bunch of very intelligent robots can be built using alternative paths. When you consider that we’re open to non-carbon based life still being considered life, then we should be open to non-biologically accurate intelligence still being considered intelligent.

But we also have only one model of a generally intelligent brain. Perhaps the chemical inaccuracies are a requirement for intelligence as we know it? Perhaps the phenomenon we call intelligence is a byproduct of neurons not being locked to a clock cycle?

Sure, there may be other models as well. How do we find them? Modelling what we know works, later gaining more insight in how it works, and eventually recreating it in different ways has worked for us so far.