[Jeff Geerling] routinely tinkers with the Raspberry Pi Compute Module 4, which, unlike the regular Pi 4, exposes a PCIe lane. With some luck, he obtained an AMD Radeon RX 6700 XT graphics card and decided to try plugging it into the Compute Module.

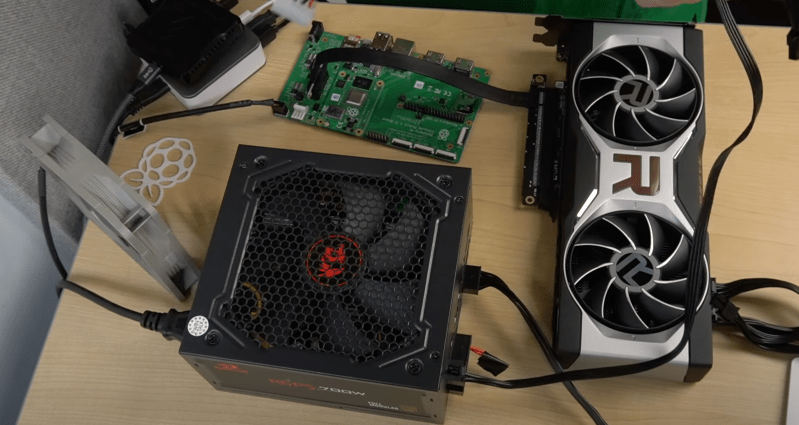

While you likely wouldn’t be running games on such a setup, there are many kinds of unique and interesting compute workloads that can be offloaded onto a GPU. In a pairing akin to putting a V8 on a lawnmower, the Raspberry Pi 4 draws around 5-10 watts while the GPU can pull 230 watts. Unfortunately, the PCIe slot on the IO board wasn’t designed with a power-hungry card in mind, so [Jeff] brought in a full-blown ATX power supply to power the GPU. To avoid problems with differing ground planes, he fashioned an adapter so the Raspberry Pi could be powered from the same PSU. Plugging in the card initially yielded promising results: Linux detected the card and correctly mapped its BARs (Base Address Registers), which had been a problem for him with other devices in the past. A BAR tells the host how large a block of address space a PCI device needs and holds the base address at which that block is mapped into the CPU’s memory space, so the CPU can reach the device’s memory directly.
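To make the BAR explanation concrete: during enumeration, firmware or the kernel sizes each BAR by writing all ones to it and reading it back. Address bits the device hardwires to zero reveal the size of the region, and the low bits carry flags. The following Python sketch decodes such read-backs for memory BARs. It illustrates the standard PCI sizing procedure rather than anything from Jeff’s setup, and the read-back values in the example are hypothetical.

```python
# Sketch of standard PCI memory-BAR sizing: software writes all 1s to a BAR,
# reads it back, and decodes which address bits "stuck" at 1.
# The example read-back values are hypothetical, not from a real RX 6700 XT.

MASK32 = 0xFFFF_FFFF
MASK64 = 0xFFFF_FFFF_FFFF_FFFF


def bar_flags(lo: int) -> dict:
    """Decode the low flag bits of a BAR read-back."""
    return {
        "io": bool(lo & 0x1),                  # bit 0: 1 = I/O space, 0 = memory
        "is_64bit": ((lo >> 1) & 0x3) == 0x2,  # bits 2:1: 00 = 32-bit, 10 = 64-bit
        "prefetchable": bool(lo & 0x8),        # bit 3
    }


def bar_size(lo: int, hi: int = 0) -> int:
    """Return the size in bytes of a memory BAR from its all-ones read-back.

    lo is the read-back of the BAR register itself; hi is the read-back of the
    following register, which holds the upper 32 bits of a 64-bit BAR.
    """
    flags = bar_flags(lo)
    if flags["io"]:
        raise ValueError("I/O BAR; this sketch only handles memory BARs")
    if flags["is_64bit"]:
        value = ((hi & MASK32) << 32) | (lo & 0xFFFF_FFF0)
        width_mask = MASK64
    else:
        value = lo & 0xFFFF_FFF0  # clear the four flag bits
        width_mask = MASK32
    if value == 0:
        return 0  # BAR not implemented by the device
    # The lowest writable address bit gives the size: invert and add one.
    return ((~value) & width_mask) + 1


if __name__ == "__main__":
    # Hypothetical read-back of a 64-bit, prefetchable 256 MiB BAR.
    size = bar_size(0xF000_000C, 0xFFFF_FFFF)
    print(f"{size // (1024 * 1024)} MiB")  # prints "256 MiB"
```

Real GPUs often expose VRAM through a large prefetchable 64-bit BAR like the one in the example, which is one reason a host with a small PCIe address window can struggle to map them.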

AMD kindly provides Linux kernel drivers. [Jeff] walks through cross-compiling the kernel and has a nice Docker container that quickly reproduces the build environment. A bug prevented compilation with the AMD drivers included, so he wasn’t able to get a fully built kernel. Since the video, he has been slowly wading through the issue in a fascinating thread on GitHub. The problems encountered range from the Pi running out of memory space to PSP memory training on the GPU itself.

The ever-expanding capabilities of the plucky little compute module are a wonderful thing to us here at Hackaday, as we saw it get NVMe boot earlier this year. We’re looking forward to the progress [Jeff] makes with GPUs. Video after the break.

Sounds like an effort to bring crypto mining to Raspberry Pis in a way not previously possible. ’Cause really, if you can’t play games on it, why bother? ;) I bet this could be used to get some previously janky emulators to run at full speed.

Could be pretty handy for other GPU compute applications where PCIe bandwidth isn’t important. Raytracing, or other simulations, for example.

GIMP photo editor or Photoshop… hell, video edits with animation, or anything edit-based. This is genius, really. It would be easy for a fabricator/maker to very discreetly make a briefcase-style housing with a built-in laptop screen run on a controller and RC car batteries. Hell, you could make a full-blown editing laptop and even add a touchscreen drawing pad wired into the thing for less than 1/4 the cost of a full rig in today’s market, not to mention a decent drawing tablet. Bravo, good work being done here.

Like, assembling furniture where you neither chopped the wood nor made the tools and screws?

Or grilling a steak on a barbeque where you neither mined the iron nor fed the cow?

Not everything is a waste of time.

Here, it’s even combining things in a somewhat unusual way. (I’m not sure I would call it a hack, and I certainly wouldn’t do it myself, if only for lack of skills, but I’m also not the benchmark.) There’s even lots to learn here.

I’m sure this is a deep rabbit hole.

If a future Pi Compute Module built on a newer SoC comes with more PCIe lanes, this early investigative work will come in handy as the usefulness increases.

For such a small and integrable device, just one lane really is enough for a great deal of impressive things, provided you can actually get all the PCIe devices you might want working on it.

So I don’t actually think more lanes are really needed to change the usefulness, and I wouldn’t be surprised if future CMs don’t have more for a while. One lane is really quite a lot of IO potential for such a small processor/memory unit, and you can use a PCIe switch to run multiple devices off that one lane; in most cases it will be more than enough. So just getting the ARM drivers working will vastly increase its usefulness.
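The single-lane argument can be put in numbers. The CM4’s BCM2711 exposes one PCIe Gen 2 lane; the following sketch computes raw per-direction link bandwidth from the per-lane transfer rate and line-encoding overhead (8b/10b for Gen 1/2, 128b/130b for Gen 3/4). It ignores packet and protocol overhead, so real throughput is lower, and any PCIe switch shares this same bandwidth among all the devices behind it.

```python
# Back-of-envelope PCIe link bandwidth per direction.
# Ignores TLP headers, flow control, and other protocol overhead.

GENERATIONS = {
    # generation: (transfer rate in GT/s per lane, line-encoding efficiency)
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def link_bandwidth_mb_s(gen: int, lanes: int = 1) -> float:
    """Raw usable bandwidth per direction in MB/s (1 MB = 10**6 bytes)."""
    rate_gt_s, efficiency = GENERATIONS[gen]
    bits_per_s = rate_gt_s * 1e9 * efficiency * lanes
    return bits_per_s / 8 / 1e6


if __name__ == "__main__":
    # The CM4 exposes a single Gen 2 lane; a desktop GPU slot is typically x16.
    print(f"Gen 2 x1:  {link_bandwidth_mb_s(2, 1):.0f} MB/s")  # prints 500 MB/s
    print(f"Gen 4 x16: {link_bandwidth_mb_s(4, 16):.0f} MB/s")
```

Roughly 500 MB/s is plenty for an NVMe drive or a network card, and for GPU compute jobs that upload data once and then crunch it on the card, but it is a small fraction of what a desktop slot offers.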

It is, however, definitely something of a rabbit hole: drivers for ARM are less common than you would like, and so are open-source drivers that make tweaking them to run on ARM possible. I’ve been hovering on the edge of really diving in for a while, but haven’t quite found the time and energy to invest yet.

It’s very deep. I was reminded as soon as I saw mention of the security processor doing memory training.

AMD has been shipping chips with Platform Security Processors for a while now. These small embedded systems are its equivalent of the Intel Management Engine and have been included in its CPUs since family 16h. More recently, PSPs have also been embedded in all of its GPUs.

Originally, I think the excuse was along the lines of verifying ROMs in case someone tried to sell an overclocked card on eBay. Since then, they’ve tied them into Digital Rights Management and, more recently, memory initialization (memory training).

On the CPU side, this means coreboot has to let the PSP do memory init, instead of running an Intel FSP-style blob as part of the firmware. As a result, no amount of reverse engineering will ever get you the same level of openness on modern AMD hardware. It’s interesting to see memory training being done by the PSP on their GPUs too.

The mining and gaming are really just an excuse to slap the card in and make a video.

I’m not sure they have addressed the memory map issue with the PCIe, and I assume they haven’t even started implementing an OpenCL-capable driver for ARM… Interesting, though!

I’m the lead developer of vm6502q/qrack, a quantum computer simulation framework (https://github.com/vm6502q/qrack). Our PyQrack pure Python bindings, under the same GitHub organization, have an ARMv7 binary distribution that is literally meant for this (though I think you might need an ARM64 build, which I don’t personally have hardware for at the moment). The ARMv7 binary is linked with our full OpenCL support and tries to use any available OpenCL device, but falls back to pure C++11 CPU simulation if there is none. We’ve even run on the Raspberry Pi 3’s VideoCore IV in the past, thanks to the ICD by doe300 on GitHub.

I’d LOVE to see this running PyQrack! The binary wheels are also less than 4 MB. I’m looking into getting an ARM64 Linux system, likely a Pi, and I’d personally help if anyone is interested.

I’m honestly impressed that this worked.

PCIe GPUs are perhaps the fussiest of peripherals in terms of making assumptions about the platform, and a memory-mapped peripheral with as much or more RAM than the host system is also more fiddly (address remapping, etc.) than peripherals that communicate through just a teeny little sliver of system RAM.

For an SoC whose PCIe root complex is probably intended mostly for system designers to connect a chosen and vetted peripheral, not some shell-shocked PC PCIe root that’s expected to respond sanely to any random card someone shoves in, even getting to the point where driver support is the relevant question is not bad at all.

Next is a GPU with a slot for a Pi compute module. Then you power the GPU with some kind of backplane and ready you are!

“I installed my computer in my GPU”