Tired of that unsettling feeling you get from looking for paywalled papers on that one site that shall not be named? Yeah, us too. But now there’s an alternative that should feel a little less illegal: this new index of the world’s research papers over on the Internet Archive.

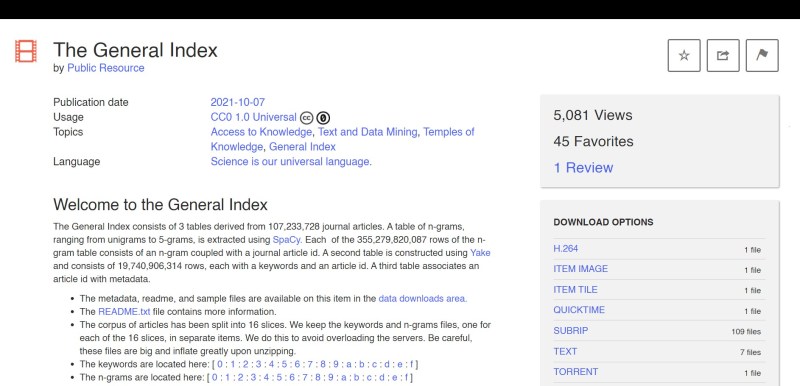

It’s an index of words and short phrases (up to five words) culled from approximately 107 million research papers. The point is to make it easier for scientists to gain insights from papers that they might not otherwise have access to. The Index should also make computerized analysis of the world’s research easier. Call it a gist machine.

Technologist Carl Malamud created this index, which doesn’t contain the full text of any paper. Some of the researchers with early access to the Index said that it is quite helpful for text mining. The only real barrier to entry is that there is no web search portal for it: you have to download 5TB of compressed files and roll your own program. In addition to sentence fragments, the files contain 20 billion keywords and tables with the papers’ titles, authors, and DOI numbers, which will help users locate the full paper if necessary.

Nature’s write-up makes a salient point: how could Malamud have made this index without access to all of those papers, paywalled and otherwise? Malamud admits that he had to get copies of all 107 million articles in order to build the thing, and that they are safe inside an undisclosed location somewhere in the US. And he released the files under Public Resource, a non-profit he founded in Sebastopol, CA. But we have to wonder how different this really is from, say, the Google Books N-Gram Viewer, or Google Scholar. Is the difference that Google is big enough to get away with it?

If this whole thing reminds you of another defender of free information, remember that you can (and should) remove the DRM from his e-book of collected writings.

Via r/technology

Hmmm…interesting legal angle. I’ve read that removing all vowels from text actually SPEEDS UP reading and comprehension for some people…

This General Index [1] appears to be what is commonly called an n-gram database or table [2]. N-gram tables are common, and there are myriad ways to use/mine them. Take the Google Books Ngram Viewer, for example [3][4]: you enter a phrase and it displays a graph showing how often that phrase has occurred in a corpus of books.
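For anyone who has not met an n-gram table before, here is a minimal Python sketch of the idea. It is only an illustration, not the pipeline used to build the General Index: the function names `ngrams` and `build_table` are made up for this example, tokenizing is naive whitespace splitting, and the two "documents" are toy strings.

```python
from collections import Counter

def ngrams(tokens, max_n=5):
    """Yield every run of 1..max_n consecutive tokens as a space-joined string."""
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            yield " ".join(tokens[i:i + n])

def build_table(documents, max_n=5):
    """Count how often each n-gram occurs across all documents."""
    table = Counter()
    for text in documents:
        # Naive tokenizer (an assumption): lowercase, split on whitespace.
        tokens = text.lower().split()
        table.update(ngrams(tokens, max_n))
    return table

docs = ["the quick brown fox", "the quick red fox"]
table = build_table(docs, max_n=2)
print(table["the quick"])    # 2
print(table["quick brown"])  # 1
```

A real table would also record which document each n-gram came from, which is what makes it usable as an index rather than just a frequency count.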

I read some articles about this General Index plus its README.txt file [5]. This database looks a bit half-baked at this point, plus it really would benefit from some usage examples. I expected to see a link to an online tool where you enter some words and get back a list of hits. If something like that exists, I cannot find it.
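To make concrete the kind of lookup I was hoping for, here is a rough Python sketch. Everything in it is an assumption: the `(ngram, doi)` row shape is a guess at what a simplified export might look like, not the actual General Index schema, and the DOIs are placeholders.

```python
from collections import defaultdict

# Hypothetical rows: (n-gram, DOI of a paper containing it). Placeholder data only.
ROWS = [
    ("gene expression", "10.0000/example-a"),
    ("gene expression", "10.0000/example-b"),
    ("protein folding", "10.0000/example-b"),
]

def build_lookup(rows):
    """Map each n-gram to the set of DOIs it appears in."""
    lookup = defaultdict(set)
    for ngram, doi in rows:
        lookup[ngram.lower()].add(doi)
    return lookup

def search(lookup, phrases):
    """Return the sorted DOIs of papers that contain every phrase given."""
    if not phrases:
        return []
    hits = [lookup.get(p.lower(), set()) for p in phrases]
    return sorted(set.intersection(*hits))

lookup = build_lookup(ROWS)
print(search(lookup, ["gene expression", "protein folding"]))
# ['10.0000/example-b']
```

At the real data size a dictionary in memory will not cut it, so the same idea would have to live in a proper database with an index on the n-gram column.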

The bulk of the General Index PostgreSQL data has been split into 16 .zip archive files numbered from 0 to f, totaling 22.5 GB compressed. There is a .zip file download available [6] that contains small examples of the data. The General Index README.txt briefly suggests that tools like spaCy [7] and YAKE [8] may be used for n-gram extraction and keyword extraction respectively against the General Index, but there’s nothing more.

1. Welcome to the General Index

https://archive.org/details/GeneralIndex

2. n-gram

https://en.wikipedia.org/wiki/N-gram

3. Google Books Ngram Viewer

https://en.wikipedia.org/wiki/Google_Ngram_Viewer

https://books.google.com/ngrams

4. What does the Google Books Ngram Viewer do?

https://books.google.com/ngrams/info#

5. The General Index README.txt

https://ia802307.us.archive.org/18/items/GeneralIndex/data/README.txt

6. General Index Samples

https://archive.org/compress/GeneralIndex/formats=TEXT&file=/GeneralIndex.zip

7. spaCy

https://en.wikipedia.org/wiki/SpaCy

8. YAKE! (Yet Another Keyword Extractor)

https://pypi.org/project/yake/

https://github.com/LIAAD/yake

[citations included]

Thanks!

@Ren said: “[citations included] Thanks!” You are welcome. I encounter many fascinating topics here on HaD. When I delve deeper or explain further, I have decided to try and provide some references. A crude way of giving back.

No, the only uneasy feeling I get when using Sci-Hub is from not being able to donate.

Those papers should be free anyway, if public money paid for the research.

It’s an interesting thing. The funding for the research went to the researchers. The researchers submit the papers to publishers who then put them behind the paywall. The researchers aren’t getting any money from those paywalls, just the publishers.

As such, if you e-mail the authors of a paywalled paper they’ll usually be happy to send you a copy.

you forgot one detail – you also have to pay for putting the paper behind a paywall

But, assuming you want to publish it in a journal at all, you generally have to pay more to not put the paper behind a paywall (i.e., to publish in an open-access journal).

This is all very reminiscent of the Dead Sea Scrolls, where the full texts were only made available to selected researchers, some of whom had still not published after decades, while the concordance was published quite early on. Someone reconstructed the missing items by reversing the index and published their results. For more details see:

https://en.wikipedia.org/wiki/Dead_Sea_Scrolls#A_Preliminary_Edition_of_the_Unpublished_Dead_Sea_Scrolls_(1991)
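The "reversing the index" trick is easy to show with a toy in Python. This sketch assumes a concordance that records the exact position of every word, which is a simplification: a real printed concordance gives locations and surrounding context instead of bare integers. The function names are invented for the example.

```python
def build_concordance(words):
    """Map each word to the list of positions where it occurs."""
    concordance = {}
    for position, word in enumerate(words):
        concordance.setdefault(word, []).append(position)
    return concordance

def reverse_concordance(concordance):
    """Rebuild the word sequence from a position-based concordance."""
    if not concordance:
        return []
    length = 1 + max(p for positions in concordance.values() for p in positions)
    words = [None] * length  # None marks a position the concordance never mentions
    for word, positions in concordance.items():
        for p in positions:
            words[p] = word
    return words

text = "in the beginning was the word".split()
concordance = build_concordance(text)
print(" ".join(reverse_concordance(concordance)))
# in the beginning was the word
```

Note that this only works because positions are kept. An index of short phrases without positions would be a much harder puzzle to put back together.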