Audiophiles will go to such lengths to optimize the quality of their audio chain that they sometimes defy parody. But even though the law of diminishing returns eventually becomes a factor, there is something to be said for maintaining a good set of equipment. What if your audio gear is a little flawed, though: can you fix it electronically? Enter HiFiScan, a piece of Python software that analyses audio performance by emitting a range of frequencies and measuring the result with a microphone.
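To make the "range of frequencies" idea concrete, here is a minimal sketch of an exponential sine sweep, a common test signal for this kind of measurement. It is not taken from HiFiScan's source; the function names and the 20 Hz to 20 kHz range are assumptions chosen for illustration.

```python
import math

def log_sweep(f_start, f_end, duration, sample_rate):
    """Return samples (floats in [-1, 1]) of an exponential sine sweep.

    The instantaneous frequency rises from f_start to f_end over
    `duration` seconds, spending equal time in every octave.
    """
    ratio = math.log(f_end / f_start)
    n_samples = int(duration * sample_rate)
    # Phase scale factor for the exponential sweep.
    k = 2 * math.pi * f_start * duration / ratio
    return [
        math.sin(k * (math.exp(ratio * i / n_samples) - 1.0))
        for i in range(n_samples)
    ]

def sweep_frequency(f_start, f_end, duration, t):
    """Instantaneous frequency in Hz of the sweep at time t (seconds)."""
    return f_start * (f_end / f_start) ** (t / duration)

# Illustrative values: a one-second sweep across the audible band.
sweep = log_sweep(20.0, 20000.0, 1.0, 48000)
```

Playing such a signal through the speakers while recording it with the microphone, then comparing what went out with what came back, is the general principle behind a frequency response measurement.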

This is hardly a new technique, and it’s one which PA engineers have used for a long time to tune out feedback resonances, but an easy tool bringing it to the domestic arena is well worth a look. HiFiScan is a measuring tool, so it won’t magically correct any imperfections in your system, but it can export data in a format suitable for digital effects packages.
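As a rough illustration of how a measurement becomes a correction, the sketch below turns a measured response into per-frequency EQ gains. It says nothing about HiFiScan's actual export format; the function name, the clipping limits, and the sample numbers are all made up for the example.

```python
def correction_gains(measured_db, target_db=None, max_boost_db=6.0, max_cut_db=12.0):
    """Map {frequency_hz: measured level in dB} to {frequency_hz: EQ gain in dB}.

    Each gain is the difference between the target and the measured level,
    clipped so the EQ never boosts or cuts by an unreasonable amount.
    """
    if target_db is None:
        # Assumption: aim for a flat response at the average measured level.
        target_db = sum(measured_db.values()) / len(measured_db)
    gains = {}
    for freq, level in sorted(measured_db.items()):
        gain = target_db - level
        gains[freq] = max(-max_cut_db, min(max_boost_db, gain))
    return gains

# Made-up measurement: a bass hump at 125 Hz and a treble roll-off at 8 kHz.
measured = {63: -3.0, 125: 4.0, 1000: 0.0, 8000: -10.0}
gains = correction_gains(measured, target_db=0.0)
print(gains)  # {63: 3.0, 125: -4.0, 1000: 0.0, 8000: 6.0}
```

The boost limit matters in practice: the 10 dB hole at 8 kHz is only partly filled, because large boosts eat amplifier headroom and can push drivers beyond what they handle comfortably.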

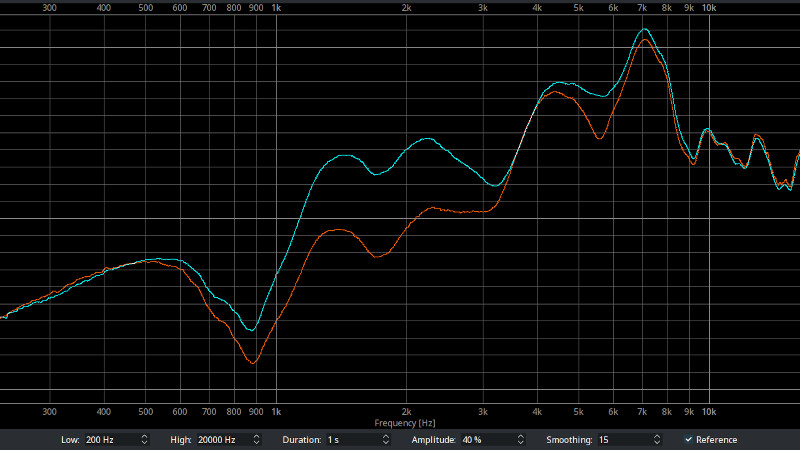

Naturally its utility depends on the quality of the hardware it’s used with, but the decent-quality USB microphone used in the examples seems to give good enough results. We see it used in a variety of situations, of which perhaps the most surprising is a set of headphones that has completely different characteristics over Bluetooth than when wired.

If audio engineering interests you, remember we have an ongoing series: Know Audio.

When your audio setup is so good that you defy parody, does that mean the audio quality is even better?

Man I grew up in my neighborhood with friends whose dads all had mono. sTeReO? Wow man in like maybe 72 I think my dad got stereo. I Wanted Quadraphonic by then! Damnit! 🙂

You have to defy parody. If all you do is equal it, that’s just parody parity.

REW (“Room Equalization Wizard”) is another popular free tool for performing detailed room acoustic analysis on win/mac/linux.

Good recommendation, thank you. Might be handy for gigging as well? Wonder if the sound guys ever use that.

For a venue you’d need a system capable of optimizing individual long FIR filters for a ton of speakers and a ton of listening positions. Bela could do the zero latency convolution with open source, but the open source software to create the filters doesn’t exist.

For closed source there is AFMG FIR maker.

“For a venue you’d need a system capable of optimizing individual long FIR filters…”

Kids these days. Back in my day, you’d hang some blankets on the walls, maybe point the speakers more this way or that way, and call it good. These days you need a DSP PhD just to tweak your tweeter.

I’m kidding. My experience is that show sound improves with the technological sophistication of the engineers. I remember when 9:30 in DC pulled all its audio cable and replaced it with ethernet direct to speaker/amp combos. Saw two shows shortly after that really blew my mind.

But I’m also not kidding — you can do a lot with simpler means as well. Punk rock style.

Especially as long as you don’t care that the song text is unintelligible for most of the audience. This is the reality with traditional setups: sound from a dozen speakers can’t add up to something intelligible with only simple delay and volume.

Sound engineers seem a bit resistant to FIR though. For fixed venues they are willing to use AFMG’s various software, but for on the fly they want knobs, not measurement and calibration.

For a few decades now, sound systems have often been quickly checked, and EQ and levels tweaked, by playing pink noise through the speaker(s) of interest, picking up the sound with an instrumentation mic at a representative location, and viewing the mic signal on a spectrum analyzer.
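A small sketch of why pink noise is the usual choice for this: its power density falls as 1/f, so every octave band carries the same power and an ideal system shows a flat line on an octave-band analyzer. The code below integrates idealized spectra numerically rather than processing real audio; the band centers and function names are assumptions for illustration.

```python
import math

def band_power(psd, f_low, f_high, steps=10_000):
    """Integrate a power spectral density over [f_low, f_high] (midpoint rule)."""
    df = (f_high - f_low) / steps
    return sum(psd(f_low + (i + 0.5) * df) for i in range(steps)) * df

def octave_levels_db(psd, centers):
    """Level of each octave band in dB, relative to the first band."""
    powers = [band_power(psd, fc / math.sqrt(2), fc * math.sqrt(2)) for fc in centers]
    return [10 * math.log10(p / powers[0]) for p in powers]

def pink(f):
    """Idealized pink noise: power density proportional to 1/f."""
    return 1.0 / f

def white(f):
    """Idealized white noise: equal power per hertz."""
    return 1.0

centers = [63, 125, 250, 500, 1000, 2000, 4000, 8000]
pink_levels = octave_levels_db(pink, centers)    # all close to 0 dB
white_levels = octave_levels_db(white, centers)  # rises about 3 dB per octave
```

With pink noise as the source, any band that sits above or below the rest on the analyzer points at the speaker or the room rather than at the test signal.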

If using Windows you might try one of these once you’ve characterized your setup.

https://github.com/jaakkopasanen/AutoEq

Or:

https://sourceforge.net/projects/peace-equalizer-apo-extension/

Bluetooth sounding different is no surprise. I had a TV, well several, that looked completely different (better) through the VGA port. Maybe color space or some post processing due to the analog signal.

Peace equalizer or github jaakkopasanen AutoEq

We’re missing one final (important) part in the listening chain here. The person’s ears. I’m pretty sure with all the differences between people, hearing is different as well. So maybe that should be measured, and compensated for, as well?

There was a German startup doing exactly this, but I cannot remember their name.

Hörwurm

The only reason for this would be if you have run out of batteries for your hearing aid, and only if you are the only listener.

Compensated, but to what?

Getting things right “by ear” is kinda like wine tasting. You get used to the sound in seconds and then keep twisting the knobs until you’re way off. Then you come back the next day and find it’s all wrong.

There have been some efforts at that. Sony have this thing where they 3D scan your head to pick (or build) an appropriate HRTF for their headphone surround effect. Samsung have a simple wizard built into their phones that lets you tweak EQ through a series of audio tests. Years ago HTC put mics into their bundled earphones, the phone would play test sounds while you’re wearing the earphones and the response the mics picked up is used to correct audio output.

Until there’s a SPDIF input at the base of your skull, your ears are the only sonic inputs you have. Whether you’re in the same room as a string quartet, or listening to a recording of them, you use the same ears. There’s no “right” ear response curve that your ears need to be “equalized” to. If the reproduction system (including the listening room) is capable of faithfully reproducing the sound of the thing that was recorded… job done.

I like the way he does all that work, then minces it through a Class D amp at the end!

Brilliant idea, but you should probably make sure your microphone is plugged in with a mechanically balanced zero-oxygen gold-plated cable or you won’t get the true benefit.

It’s all for nothing if the conductors weren’t braided in reverse fishtail by blind orphans in the Himalayan foothills

Don’t forget the cable’s jacket made of unobtanium for that always missing extra benefit

When I hear the term smart speaker they mean smart microphone and the network it’s on. The speaker is passive and dumb. These speakers and phones are smart. Bose did RTA in a home system years ago? My curb find surround amp has a mic jack for some EQ setting. Having four 10 band EQs, I’ll pass.

Phones-hearing aids will now do some of this for the user, more to come. Over the counter soon.

Bose didn’t have to do any RTA on any room as long as they obtain “Better sound through marketing”

It could use some more cowbell.

Cow 🐄

Bell 🔔

Enough?

Check also these apps:

http://kokkinizita.linuxaudio.org/linuxaudio/