Cryptographic coprocessors are nice, for the most part. These are small chips you connect over I2C or One-Wire, with a whole bunch of cryptographic features implemented. They can hash data, securely store an encryption key and do internal encryption/decryption with it, sign data or validate signatures, and generate decent random numbers – all things that you might not want to do in firmware on your MCU, with the range of attacks you’d have to defend it against. Theoretically, this is great, but that moves the attack to the cryptographic coprocessor.

In this BlackHat presentation (slides), [Olivier Heriveaux] talks about how his team was tasked with investigating the security of the Coldcard cryptocurrency wallet. This wallet stores your private keys inside of an ATECC608A chip, in a secure area only unlocked once you enter your PIN. The team had already encountered the ATECC608A’s predecessor, the ATECC508A, in a different scenario, and that one gave up its secrets eventually. This time, could they break into the vault and leave with a bag full of Bitcoins?

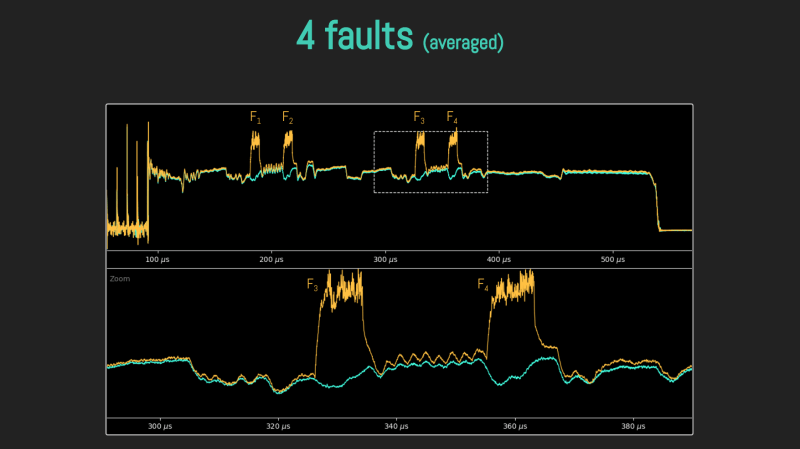

Lacking a vault door to drill, they used a powerful laser, delidding the IC and pulsing different areas of it with the beam. How do you know when exactly to pulse? For that, they took power consumption traces of the chip, which, given enough tries and some signal averaging, let them make educated guesses on how the chip’s firmware went through the unlock command processing stages. We won’t spoil the video for you, but if you’re interested in power analysis and laser glitching, it’s well worth 30 minutes of your time.
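To give a feel for why "enough tries and some signal averaging" helps, here is a minimal sketch on synthetic data. It is not the team's tooling: the trace shape, the noise level, and the names `make_trace`, `average_traces`, and `rms_error` are all assumptions for illustration, and real captures would also need to be aligned in time before averaging.

```python
import random

def make_trace(signal, noise_sigma, rng):
    """Simulate one noisy power capture of the same underlying operation."""
    return [s + rng.gauss(0.0, noise_sigma) for s in signal]

def average_traces(traces):
    """Average many aligned traces sample by sample."""
    n = len(traces)
    return [sum(column) / n for column in zip(*traces)]

def rms_error(a, b):
    """Root-mean-square difference between two equal-length traces."""
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5

rng = random.Random(0)
# Hypothetical "true" consumption: a short bump where the chip does some work.
signal = [0.0] * 40 + [1.0] * 20 + [0.0] * 40
noise_sigma = 2.0  # assumed noise, deliberately larger than the bump itself

single = make_trace(signal, noise_sigma, rng)
averaged = average_traces([make_trace(signal, noise_sigma, rng) for _ in range(400)])

err_single = rms_error(single, signal)
err_avg = rms_error(averaged, signal)
print(f"RMS error, single trace: {err_single:.2f}")
print(f"RMS error, 400 averaged: {err_avg:.2f}")
```

Uncorrelated noise shrinks roughly with the square root of the number of averaged traces, which is why a feature invisible in one capture can emerge after a few hundred.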

You might think it’s good that we have these chips to work with – however, they’re not that hobbyist-friendly, as proper documentation is scarce for security-through-obscurity reasons. Another downside is that, inevitably, we’ll encounter them being used to thwart repair and reverse-engineering. However, if you want to explore what a cryptographic coprocessor brings you, you can get an ESP32 module with the ATECC608A inside. We’ve seen this chip put into an IoT-enabled wearable ECG project, and even a Nokia-shell LoRa mesh phone!

We thank [Chip] for sharing this with us!

> all things that you might not want to do in firmware on your MCU, with the range of attacks you’d have to defend it against

If the crypto processor is configured as an I2C peripheral, you’d still have to deal with a range of attacks against your firmware. If the device is capable of signing a message with a private key, and you take over the MCU, you can have it sign your custom message. The chip won’t know what it is for.

That’s why you need to provide a PIN/password before it can unlock the private key to do the stuff you want.

The I2C bus can still be trivially intercepted to capture this password, or the attacker could wait until the chip is unlocked and then take over control of the bus.

I think these chips are mostly used for cases where the security of an individual device (that the attacker has unlimited physical access to) isn’t the primary concern, but the compromise of a single device shouldn’t result in compromise of all identical devices (a class break).

Yeah, but the PIN blocks the “device found on the street (in a locked car or not…)” kind of attack.

I’m not that familiar with power supply glitching, but what about including some bypass capacitors right in the chip package? Would they have to be too large to have any positive effect in thwarting those attacks? Or has that already been done? Probably not viable for commodity chips though.

Almost all chips have internal bypass capacitors, the ones on the PCB serve to refill them.

However the glitch usually forces the line low, not merely stops feeding power.

Keeping key material in a magical blackbox with promises of security never was an option. And to avoid rubber hose cryptography you should also use a keyfile together with a key in your brain. Only a 2 key system allows you to destroy the other half quickly and avoid having to spill anything.

Good practice also used in SSH keys: Keys at rest are encrypted with a password. Don’t store key material in the same location.
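As a rough sketch of the "keyfile plus a key in your brain" idea, the snippet below derives one key from both halves, so that destroying either half makes the key unrecoverable. This is an illustration only, not the scheme any particular tool uses: the function name `derive_key`, the iteration count, and the way the two halves are mixed are assumptions, and real key storage should go through a reviewed library.

```python
import hashlib
import hmac
import os

def derive_key(passphrase: str, keyfile_bytes: bytes, salt: bytes,
               iterations: int = 200_000) -> bytes:
    """Derive a 32-byte key that needs BOTH the passphrase and the keyfile."""
    # Stretch the memorized passphrase so guessing it is slow.
    stretched = hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode("utf-8"), salt, iterations
    )
    # Mix in the keyfile: without its exact bytes, the result is unpredictable.
    return hmac.new(stretched, keyfile_bytes, hashlib.sha256).digest()

salt = os.urandom(16)      # stored next to the encrypted data, not secret
keyfile = os.urandom(64)   # hypothetical keyfile kept on separate media
key = derive_key("correct horse battery staple", keyfile, salt)
print(key.hex())
```

The derived key would then be used to encrypt the actual key material at rest; the keyfile and the encrypted data should live in different places.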

No lock is proof against a foe who has the entire schematics and precise enough picks. It will always be an arms race.

Oh sweet irony! On the video, he has a thick French accent so YouTube detects his speech as being French and forces the closed caption generation to French! It would be easier for me to understand this man if he actually was speaking French because YouTube is stupid.

Part of the reason why AI will never be useful. Now we need another training model for ALL English videos with any accent of the native original language of the speaker.

I don’t think you are using the word “never” quite right. AI is already successfully used for several things, and even if it struggles with accents it is already way better than not having subtitles in 99% of videos (due to lack of people to manually type it all).

Hmm, I had a kind of weird and off-hand thought– I mean I know most security to date is based off the factoring of primes– But ‘neural nets’ are also very often seen as being ‘black boxes’– or the specific weights determined, well, we can’t quite ‘read’ why they work. So couldn’t a simple neural net be designed for security, either ‘passing through the network’, or at most basic, using weights as your key (obviously that could be copied though)? I wonder if anyone has looked into this.

Maybe, but I think the difference is that with the right piece of information (the secret key) you can decrypt data, while there’s not necessarily an equivalent “secret key” that would allow you to invert a neural net.

Dear Rapvkil,

Well, yes, thus my ‘thought’ and my ‘point’….

Well, then you can only encrypt things, they can never be decrypted, even by you.

You could figure out the weights if you can control the inputs and observe the outputs; for example, you could input zero on all inputs and 1 on a single input, and do that for each input in turn, or change one of the inputs until you see a bitflip on an output.
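The probing idea can be sketched for the simplest possible case, a single linear layer with no activation function. Everything here is hypothetical (`make_secret_layer`, `recover_linear_layer`, the layer sizes), and a real multi-layer network with nonlinearities would take considerably more work than this.

```python
import random

def make_secret_layer(n_in, n_out, rng):
    """Build a hypothetical secret linear layer y = W x + b, exposed as a black box."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    bias = [rng.uniform(-1, 1) for _ in range(n_out)]

    def layer(x):
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(weights, bias)]

    return layer, weights, bias

def recover_linear_layer(oracle, n_in):
    """Recover W and b using only chosen inputs and observed outputs."""
    # An all-zero input reveals the bias directly.
    bias = oracle([0.0] * n_in)
    columns = []
    for i in range(n_in):
        probe = [0.0] * n_in
        probe[i] = 1.0  # a single 1, zeros everywhere else
        out = oracle(probe)
        # Subtracting the bias leaves column i of the weight matrix.
        columns.append([o - b for o, b in zip(out, bias)])
    weights = [list(row) for row in zip(*columns)]  # columns back to rows
    return weights, bias

rng = random.Random(1)
oracle, true_w, true_b = make_secret_layer(4, 3, rng)
guess_w, guess_b = recover_linear_layer(oracle, 4)
max_err = max(abs(g - t) for grow, trow in zip(guess_w, true_w)
              for g, t in zip(grow, trow))
print(f"largest weight error: {max_err:.2e}")
```

With `n_in + 1` queries the whole "key" is recovered, which is the weakness the comment points at.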

One of the key properties of a good encryption algorithm is that any bit change in the input or key changes approximately half of the bits of the output. It must never tell you that you are close to finding the key. I don’t think neural networks fulfill this requirement.
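The "about half of the output bits change" property can be checked empirically. The sketch below uses SHA-256 from Python's standard library as a stand-in, since the standard library ships no block cipher; a hash is not an encryption algorithm, but it is designed to show the same avalanche behaviour. The message and helper names are made up for the example.

```python
import hashlib

def hamming_distance(a: bytes, b: bytes) -> int:
    """Count the bits that differ between two equal-length byte strings."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit inverted."""
    out = bytearray(data)
    out[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(out)

message = b"unlock request for the vault 000"  # arbitrary example input
baseline = hashlib.sha256(message).digest()

# Flip each input bit in turn and count how many of the 256 output bits change.
distances = [
    hamming_distance(baseline, hashlib.sha256(flip_bit(message, i)).digest())
    for i in range(len(message) * 8)
]
mean_flipped = sum(distances) / len(distances)
print(f"average output bits changed per single-bit input flip: {mean_flipped:.1f} of 256")
```

An average close to 128 means a near-miss input looks no more similar to the target than a random one, so the output gives no hint of being "close".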

I wondered why DiodeGoneWild on YouTube did not enable automatic captions on any of his videos. I guess Google would detect that he is uploading from the Czech Republic and screw things up.

I saw the video when it was released; it’s such a good example of using laser FI. The article goes from why you wouldn’t want to roll/run your own crypto, to a bank heist, to right to repair. Each is a valid topic to discuss, and you should write more, but it would be beneficial to all to separate the topics.

Fault injection (FI) is a thing, and there are more projects using the techniques every day. Just the other day, Flipper Zero released a few pictures of an upcoming add-on to allow voltage glitching. There are also plenty of other open source tools, including PicoEMP, so it’s easier now than it has ever been to try it yourself. Don’t expect to repeat the attack demonstrated here, though, as the laser source is likely already in the €10k+ range.

In formal certification, the attacks are rated against the tools required, attacker skill, and time requirements, to name a few. The chip in question doesn’t have certification; possibly they have tried and failed, or it was just too expensive. Anyhow, looking at the video, they used specialized equipment, the guy is an expert, and he spent quite some time on it. Whether this is enough for an advanced basic rating, and whether your assets are well enough protected with that rating, will require some guesswork.

The last one mentioned was how security frustrates the user and makes devices unrepairable. I fully agree that if you own something, you should be able to do what you want to do with it. However, this does not mean it should retain its original functionality when control is released. E.g. Rooting a phone usually means you can’t use the banking applications anymore, as the device is no-longer trusted. Sadly, we can’t have both.

> Rooting a phone usually means you can’t use the banking applications anymore, as the device is no-longer trusted.

That to me is more than sad, it’s kind of bonkers when you think about it – your bank or their app only trusts stuff when Google says it should?!?! If security processes require third and fourth parties without a real need, you are just making everything less secure by introducing so many more unnecessary points of failure that you just have to trust are working properly.

Even more so if you are effectively offloading a large part of the decision on if/when/how far to trust and how to authenticate to these third parties that offer no transparency as to their own security practices, or how they make those decisions. (I’ve not looked recently, maybe they have improved, but banking apps on the smartphone last I looked at them were kind of a nightmare security-wise.)

This is important work. Great to bring it up.

Once again the maxim, “If an adversary has physical access to your device, it is no longer yours.”