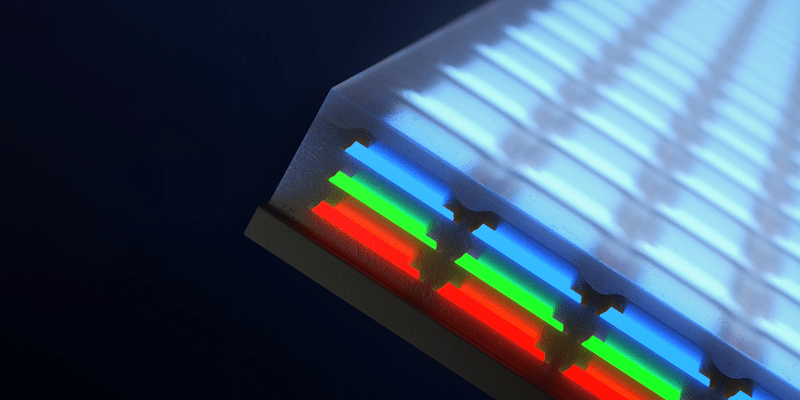

If you zoomed into the screen you are reading this on, you’d see an extremely fine pattern of red, green, and blue emitters, probably LEDs of some kind. This limits the resolution you can obtain, since you have to cram three LEDs into each screen pixel. Engineers at MIT, however, want to do it differently. By growing thin LED films and sandwiching them together, they can make 4-micron-wide LEDs that produce the full range of color, with each color coming from one layer of a vertical stack of LEDs.

To put things in perspective, a standard TV LED is at least 200 microns across. Mini LEDs measure upwards of 100 microns, and micro LEDs are the smallest of all. A key factor for displays is the pitch: the distance from the center of one pixel to the center of the next. For example, the 44mm version of the Apple Watch has a pitch of around 77 microns. A Samsung Galaxy S10 is just over 46 microns. This is important because it sets the minimum size for a high-resolution screen, especially if you are building large screens (such as when you build custom video walls; see the video below for more about that).

For example, consider a 4K screen with 3840×2160 pixels. If you can only do a 0.1mm pitch, your monitor will have to be at least about 15 inches wide (3840 × 0.1mm = 384mm). A 4K TV with a 75-inch screen needs a 432-micron pitch, but a 24-inch screen with the same resolution requires 138 microns. While that means it is easier to make a large high-resolution display, it is harder to view the large screen up close. This can be a problem for computer monitors or VR headsets.

But imagine if these new LEDs allowed, say, a 10-micron pitch. Then you could pack a 4K screen into a bit more than an inch and a half! VR headsets could easily be 4K if this were possible.
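
If you want to check the pitch figures yourself, here is a minimal Python sketch of the arithmetic. It assumes square pixels and a 16:9 panel at 3840×2160; the function names `pitch_um` and `width_in` are made up for this example and do not come from the MIT work.

```python
import math

MM_PER_INCH = 25.4


def pitch_um(diagonal_in, h_px=3840, v_px=2160):
    """Pixel pitch in microns for a given diagonal (inches), assuming square pixels."""
    diagonal_px = math.hypot(h_px, v_px)  # length of the diagonal measured in pixels
    return diagonal_in * MM_PER_INCH * 1000 / diagonal_px


def width_in(pitch_microns, h_px=3840):
    """Screen width in inches for a given pitch (microns) and horizontal pixel count."""
    return h_px * pitch_microns / 1000 / MM_PER_INCH


for diagonal in (75, 24):
    print(f"{diagonal}-inch 4K panel: {pitch_um(diagonal):.0f} micron pitch")

print(f"4K at a 10 micron pitch: {width_in(10):.2f} inches wide")
```

The two panel sizes come out at roughly 432 and 138 microns, and a 10-micron pitch gives a panel about 1.5 inches wide.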

So far, the team has made a single multicolor pixel. Of course, they want to continue to produce a true array. Some of the overhead of that will reduce the pixel density, but it still could offer impressive results.

If you want to read more about microLED technology, [Maya] can help with that. Until this new tech goes mainstream, OLED is still the one to beat. You can actually make your own.

Um… most screens are LCDs with LED backlights these days. Some mobile devices might use OLED, but they aren’t the norm.

They aren’t? Let me guess, you use either an iPhone or the cheapest grocery store garbage phone you could find?

Current market data records that not even half of mobile devices sold in 2022 use OLEDs. So indeed they are not the norm.

My friend, virtually all laptops and desktop monitors are LCDs (with LED backlights), as UT says.

Roughly a third of mobile phones use OLEDs (https://www.counterpointresearch.com/oled-adoption-smartphones-2022/), though iPhones, on the other hand, have used OLEDs almost exclusively for several generations.

That part of the article is misleading at best: only maybe 1/2 of people viewing this site are on mobile, and of those, only about 1/3 have OLED screens… so I’d guess around 1/6th are viewing this on an OLED.

Also, OLEDs are the only type of emissive display currently being used either on mobile devices or computers, so saying “probably some kind LED” is a bit odd; it’s either OLED or not emissive display.

Everyone is forgetting TV counts as a display and there is plenty of oled there. QLED is also an led tech and even lcd displays use leds unless they are quite old.

The vast majority of TVs out there are still LCD. The first sentence of the article is just plain wrong. Also, an LED backlight does not equal RGB emitters in the pixel cells.

True, but I’m not sure an LCD display with LED backlights should be counted as an LED display in any way.

QLED is just a better way to build an LCD (purer colors and lower filter losses). Despite the name, it’s not an emissive display type.

“Light travels through QD layer film and traditional RGB filters made from color pigments, or through QD filters with red/green QD color converters and blue passthrough”

(https://en.wikipedia.org/wiki/Quantum_dot_display)

From your Wikipedia page: QDs are either photo-emissive (photoluminescent) or electro-emissive (electroluminescent)

So a blue LED gets converted by the qdot to red and yellow.

Yes, a blue LED gets converted by the qdot to red and green… and then goes through an LCD panel.

As far as I can tell from these stats (https://www.cepro.com/audio-video/displays/30-percent-tvs-sold-will-be-high-end-displays-by-2025/), by far the majority of LEDs in “LED” TV displays out there are in fact just the white backlights, with an LCD in front for subtractive filtering to form the image.

…and [here] (https://omdia.tech.informa.com/OM029463/Samsung-changes-its-2023-QD-OLED-TV-strategy-which-is-strikingly-different-from-its-2022-approach) if anyone can get behind the paywall? (hackers???)

Smaller pixel size == higher definition for small (i.e. tiny) screens,

I am okay with that, but hopefully it can be accomplished with a low price, reliability, and low power. I’m willing to give it time to get there.

(I’m not an early adopter).

Monitors have already reached the limit of usable resolution. The only reason to make pixels smaller than about 100um is to make them so small that you won’t even see them if there are dead or stuck pixels. My current 4k monitor has a pitch of around 0.24mm. This is a bit on the coarse side, but you have to look pretty closely to notice. And my monitor has several sub pixels per pixel. My 6 year old phone too. If you look at them with a magnifying glass, then you can see that each sub pixel is made out of several “stripes” of the same color, and you need a quite decent magnifying glass to even be able to see that.

There are some exceptions of course. One of them is displays for VR glasses, as already mentioned. Those can benefit from very small high resolution displays.

I see much more room for improvement in other areas such as efficiency, reducing power consumption and improving lifetime. Transflective color LCDs would be nice for example. Do OLEDs still suffer from burn-in as they have for years now?

>but you have to look pretty closely to notice.

The trick is, a single pixel does not make an image. You need two pixels to separate a distinct feature from another – two pixels to make a contrasting edge. The information you see on the screen is not encoded at the pixel level and the actual “resolution” of the image you’re looking at is always more sparse than the pixel pitch of the display.

In order to avoid the “screen door” effect and various moire artifacts, the image is blurred (anti-aliased, de-focused etc.) by a factor of 0.5-0.9 depending on the content. In video production this is known as the Kell-factor. In the general case, the display artifacts start to become unnoticeable at a Kell factor of about 0.7. That is, the actually distinguishable “dots” of information in the image you’re looking at have 0.7 times the effective resolution of your monitor.

People with average vision can see the pixels at roughly 100 DPI at 1 meter (0.254 mm). If you want a picture that is actually as sharp as the average person can make out, you need 100 / 0.7 DPI ≈ 143 DPI from 1 meter distance, which means a pixel pitch of 0.178 mm. If you halve the distance, you need to halve the pixel pitch for the same effect, which means you need it to go under 100 µm for laptops and tablets, but also for desktop monitors that are viewed closer up.
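
A rough sketch of that arithmetic in Python, for anyone who wants to plug in their own viewing distance. The function name and parameters are made up for illustration; the 100 DPI at 1 meter figure, the 0.7 Kell factor, and the assumption that the required DPI scales inversely with distance are all taken from the comment above rather than from any standard.

```python
MM_PER_INCH = 25.4


def required_pitch_mm(distance_m, visible_dpi_at_1m=100.0, kell=0.7):
    """Pixel pitch (mm) at which image detail matches what the eye resolves.

    Assumes the DPI the eye can resolve scales inversely with viewing distance.
    """
    # Pixel density the eye resolves at this distance
    visible_dpi = visible_dpi_at_1m / distance_m
    # The display needs more pixels than that, because blurring costs resolution
    display_dpi = visible_dpi / kell
    return MM_PER_INCH / display_dpi


for distance in (1.0, 0.5):
    print(f"{distance} m: {required_pitch_mm(distance):.3f} mm pitch")
```

At 1 meter this gives about 0.178 mm, and at half a meter about 0.089 mm, which is where the "under 100 µm" figure comes from.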

Those numbers sound about right.

I’m getting a bit older now and my eyes are not what they used to be, but even so, I can just about still see the effect of anti-aliasing on my 0.24mm pitch monitor. When I was young I had an opportunity to look at a 0.17mm pitch CRT, and “stepping” artifacts on text were barely visible, which means that if you go to around 100um, then you have “analog antialiasing” and also doing it in software would be superfluous. My phone already is in that region, but my 4k monitor is not and still “benefits” from software anti-aliasing.

Yes burn-in still exists on most phones. It’s only slightly less of a problem and you’ll still toast your screen by running at max brightness for months.

Also resin 3D printers can benefit quite a bit from smaller pixels. Although monochrome is perfectly fine there.

Going uberMicro makes little sense for directly visible displays because of the limited resolution of human vision. They may be useful in all sorts of AR applications, but they are going to need good optics. And good optics don’t grow on trees.

Such dense displays will also have limited brightness due to limited heat dissipation, a problem faced also by other 3D IC technologies.

The article just said at the end that OLED is hard to beat. Also OLED is becoming the norm and LCD is starting to decline slowly. All the phones I use have OLED screens and it has been like that for years. LCD is just so crap with black colours. Also you are starting to see cheaper phones getting OLED screens, so in the next few years most phones will be OLED because it uses less power and you can control refresh rates too to save more power. LCD will become the next CRT in time. Mind you CRT is still an old tech to beat. Also this article was about LED not LCD, but people are moaning in the comments that OLED is not the norm but LCD is. But LCD is not LED is it? Only the backlight to it.

OLED is crap with bright colors and viewing the display outdoors, and image burn-in and color shift due to differential fading.

The one good thing about LCD is that the colors shift uniformly over time, so you can keep calibrating your monitor to compensate. It doesn’t develop red and blue blotches depending on what you’ve been mostly viewing.

don’t mean to argue, just anecdata…

my moto x OLED sucked at bright / outdoors, but my oneplus nord n20 OLED works great outdoors. i just took my current phone out in full daylight (there was a hole in the clouds just for my experiment, wow!) and the display looked as perfect as ever, even in direct sunlight. subjectively, as good as my two-phones-ago nexus 5x that was a nice and bright LCD. so OLEDs have been improving at brightness, by quite a bit.

can’t comment on burn-in…i haven’t noticed it but i think i’m undiscerning on that front.

Assuming that each LED layer doesn’t filter light, green should be at the back, then red, and blue closest to the surface of the display. Our eyes are most sensitive to green light (59%), then red (30%), and finally blue (11%).
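
Those percentages look like the Rec. 601 luma weights (0.587, 0.299, 0.114), though that is an assumption, since the comment does not name a source. As a small sketch of what they imply for equally driven channels, with names made up for illustration:

```python
# Rec. 601 luma weights; assumed to be the source of the 59/30/11 figures
LUMA_WEIGHTS = {"red": 0.299, "green": 0.587, "blue": 0.114}


def relative_luma(r, g, b):
    """Weighted brightness of an RGB triple with components in the range 0..1."""
    return (LUMA_WEIGHTS["red"] * r
            + LUMA_WEIGHTS["green"] * g
            + LUMA_WEIGHTS["blue"] * b)


# Full-intensity primaries, ordered from brightest-looking to dimmest-looking
primaries = {"green": (0, 1, 0), "red": (1, 0, 0), "blue": (0, 0, 1)}
for name, rgb in primaries.items():
    print(f"{name}: {relative_luma(*rgb):.3f}")
```

This only ranks how bright each primary appears; it says nothing about how much light each layer absorbs, which is what decides the stacking order in practice.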

There is another tradeoff to be considered: heat dissipation. In the middle there should be the layer that most efficiently converts current to light.

The substrate at the back is what carries heat away – the air or glass in front of the panel is more of an insulator.

Then the least efficient layer needs to be closest to the back.

Comparing a 1 Watt Red LED and 1 Watt Blue LED. They will use equal power and output the same amount of heat, but the red LED will appear much brighter.

We are going to hit the relativistic limits of how fast we can pump bits into these displays at some point. We just aren’t going to have enough time to get different areas of the display to synchronise. Cue some sort of concurrent superimposed iterative entanglement based display. Who knows what we will see ten years from now, with the speed things are changing. Hopefully the progress will prove beneficial.

>”Then you could pack a 4K screen into a bit more than an inch and a half! VR headsets could easily be 4K if this were possible.”

One of the obstacles for VR specifically is refresh speed, since it generally has to be way above 30 or you will get motion sick. So, more pixels refreshing faster requires a better GPU and more bandwidth. Since 4k 120hz screens became available a while back, it may not be a problem, unless they decide to increase the resolution further.
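
To put a rough number on the bandwidth, here is a back-of-the-envelope sketch. It assumes 24 bits per pixel with no blanking intervals, no compression, and no link encoding overhead, so real display links will differ; the function name is made up for illustration.

```python
def raw_bandwidth_gbps(h_px, v_px, refresh_hz, bits_per_pixel=24):
    """Uncompressed pixel data rate in gigabits per second (assumes no overhead)."""
    return h_px * v_px * refresh_hz * bits_per_pixel / 1e9


for hz in (30, 120):
    print(f"4K at {hz} Hz: {raw_bandwidth_gbps(3840, 2160, hz):.1f} Gbit/s")
```

Under those assumptions 4K at 120 Hz is just under 24 Gbit/s of raw pixel data per display, and the figure scales linearly with both pixel count and refresh rate.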

Yeah, it vexes how we just accept that more resolution is always better, and even go out of our way to replace good displays with newer versions that eat up 4x the bandwidth, storage and processing power, just for the sake of a number. It’s especially silly with VR headsets, which are already power-starved, and are hobbled by fixed focal distance no matter how many pixels you throw at the problem.

The image resolution doesn’t need to be 4K – just that the pixels need to be small enough that your eye can’t resolve them, which avoids the shimmering edge artifacts from the pixel grid.

So this array of pixels is the display analog of a Foveon sensor, presumably stacked vertically in the same order. How much loss is there in the red spectrum vs a normal display?

Awesome! I can’t wait to see higher resolution ads wherever I go. I love billboards!

So we could get rid of ClearType, and either disable antialiasing or have high enough resolution for it not to look ugly and blurry. Bring it on!

To those who are stuck on what displays are already out here now at this moment.

It doesn’t matter much what is now to the future.

This tech adaptation is an analog to layering pixels for color production. This should improve image quality in a very visually obvious way.

This tech is probably a next step in electronic visual display. It may be a potential improvement that aids developers of image and print editing software.

This tech concept could maybe be used to simulate print based tech color productions. We have 6 color printing. Perhaps there are ways to layer liquid paper or maybe even OLED pixel screens to more properly represent print media.

Doesn’t this design – if the losses from stacking aren’t too bad – also increase the overall brightness?