Machine learning is supposed to help us do everything these days, so why not electron microscopy? A team from Ireland has done just that and published their results using machine learning to enhance STEM — scanning transmission electron microscopy. The result is important because it targets a very particular use case — low dose STEM.

The problem is that to get high resolutions, you typically need to use high electron doses. However, bombarding a delicate, often biological, subject with high-energy electrons may change what you are looking at and damage the sample. But using reduced electron dosages results in a poor image due to Poisson noise. The new technique learns how to compensate for the noise and produce a better-quality image even at low dosages.
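To see why a low dose hurts, here is a minimal sketch in plain Python that simulates electron counts at a single pixel. It is not the paper's method. The function names, the pixel count, and the dose values are all made up for illustration, and the sampler is a textbook Poisson generator (Knuth's method). The point is only that the signal-to-noise ratio of Poisson counts grows roughly as the square root of the mean count, so cutting the dose by 100 costs about a factor of 10 in SNR.

```python
import math
import random
import statistics


def poisson_sample(mean, rng):
    """Draw one Poisson-distributed count using Knuth's multiplication method."""
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def measured_snr(mean_electrons, n_pixels=5000, seed=0):
    """Estimate SNR (mean / standard deviation) for pixels with the same true dose.

    mean_electrons is a hypothetical average number of electrons per pixel.
    """
    rng = random.Random(seed)
    counts = [poisson_sample(mean_electrons, rng) for _ in range(n_pixels)]
    return statistics.fmean(counts) / statistics.pstdev(counts)


if __name__ == "__main__":
    for dose in (1, 10, 100):  # illustrative doses, not values from the paper
        print(f"dose={dose:>3}  measured SNR={measured_snr(dose):.2f}  "
              f"sqrt(dose)={math.sqrt(dose):.2f}")
```

The measured value should track the square root of the dose closely, which is why a denoiser that understands Poisson statistics is attractive at low dose.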

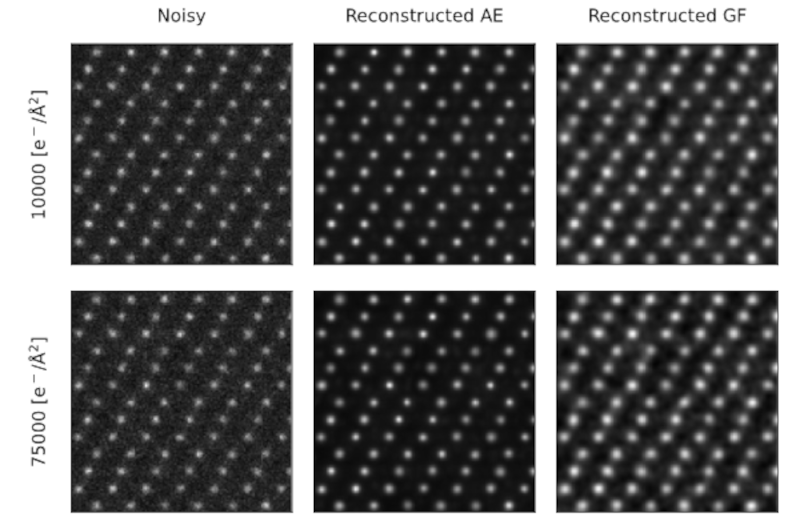

The processing doesn’t require human intervention and is fast enough to work in real time. It is hard for us to interpret the tiny features in the scans presented in the paper, but you can see that the standard Gaussian filter doesn’t work as well. The original dots appear “fat” after filtering. The new technique highlights the tiny dots and reduces the noise between them. This is one of those things that a human can do so easily, but traditional computer techniques don’t always provide great results.
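The "fat dots" effect is what you would expect from any Gaussian blur, and a one-dimensional toy example shows it. This is only a sketch under simple assumptions: a single one-pixel-wide dot, a hand-rolled Gaussian kernel with an arbitrary sigma of 2 pixels, and zero padding at the edges. None of these values come from the paper.

```python
import math


def gaussian_kernel(sigma):
    """Return a normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = int(3 * sigma)
    weights = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(signal, kernel):
    """Convolve signal with a symmetric kernel, treating out-of-range samples as zero."""
    radius = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, weight in enumerate(kernel):
            k = i + j - radius
            if 0 <= k < len(signal):
                acc += weight * signal[k]
        out.append(acc)
    return out


def width_at_half_max(signal):
    """Count samples at or above half of the peak value."""
    half = max(signal) / 2
    return sum(1 for value in signal if value >= half)


if __name__ == "__main__":
    dot = [0.0] * 41
    dot[20] = 1.0  # a single sharp "atom" in the middle of the line
    blurred = smooth(dot, gaussian_kernel(sigma=2.0))
    print("width before:", width_at_half_max(dot))
    print("width after: ", width_at_half_max(blurred))
    print("peak before/after:", max(dot), round(max(blurred), 3))
```

The blur trades noise suppression for resolution: the dot gets wider and its peak gets lower, which matches what the filtered images in the paper appear to show.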

You have to wonder what other signal processing could improve with machine learning. Of course, you want to be sure you aren’t making up data that isn’t there. It wouldn’t do to teach a CAT scan computer that everyone needs an expensive surgical procedure. That would never happen, right?

In electronic work, we usually use SEM, which detects secondary electron emissions, because it is hard to shoot an electron through an electronic component. But STEM is a cool technology that can even show the shadows of atoms. We keep hoping someone will come up with a homebrew design that would be easy to replicate, but, so far, it is still a pretty big journey to get an electron microscope in your home lab.

I wouldn’t call that an image enhancement as much as an image interpretation or analysis; it is the “opinion” of the neural network.

yeah! this is the same problem all neural network image enhancement or compression faces.

in fact, you can experience the same problem yourself from a low-resolution image of a face. say, a distant face in a crowd that is represented by only 20×30 pixels or so. when viewed in the original context with tiny pixels, it is amazing how good a job the mind does of reproducing the original human face. it looks sharp, and you imagine you can see the person’s face. but you zoom in until those pixels start to get large, and you realize you can’t actually see the face at all. and then if you actually look at other photos of the person, you can see that often they don’t look at all like you thought they did when you saw them in context.

your associative network — or the computer’s associative network — reproduces a high quality image from a low quality image by revealing its biases and nothing more.

it’s definitely amazing and wonderful and useful. but it’s almost useless for scientific instruments. the useful output of a classification network is a classification, not a reproduction-from-imagination of the original image.

And yet I know from personal experience (formally trained illustrator) that it is possible to teach many people to see just the pattern of light, in order to be able to draw or paint it. I wonder how that “true sight” works and if we can engineer a similar behavior in neural networks.

RNN can be trained for this, but it takes a lot of legwork, especially for accuracy critical tasks. Current implementations thus use these tools for augmented notification rather than altering data, and couple that with (relatively) unbiased noise reduction.

Poisson noise is also known as shot noise, a term that is probably more familiar if you are an electrical engineer. Wikipedia has a pretty good page on shot noise.[1] In particular, it emphasizes how shot noise predominates during very short sample times and at very low current levels, conditions present during low-dose STEM observations.

Reference:

1. Shot noise, Wikipedia: https://en.wikipedia.org/wiki/Shot_noise
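For the electrical engineers, the scaling mentioned in the comment above can be sketched with the standard Schottky shot-noise formula, where the RMS noise current is sqrt(2 q I Δf). The beam currents and bandwidths below are made-up illustrative numbers, not values from the paper or from any particular microscope.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs (exact SI value)


def shot_noise_rms(current_a, bandwidth_hz):
    """RMS shot-noise current in amperes: sqrt(2 * q * I * bandwidth)."""
    return math.sqrt(2 * ELEMENTARY_CHARGE * current_a * bandwidth_hz)


def relative_noise(current_a, bandwidth_hz):
    """Shot noise as a fraction of the signal current."""
    return shot_noise_rms(current_a, bandwidth_hz) / current_a


if __name__ == "__main__":
    # Hypothetical currents (A) and bandwidths (Hz), chosen only to show the trend.
    for current in (1e-9, 1e-12):
        for bandwidth in (1e3, 1e6):
            print(f"I={current:.0e} A  bandwidth={bandwidth:.0e} Hz  "
                  f"noise/signal={relative_noise(current, bandwidth):.3g}")
```

The noise-to-signal ratio rises as the current falls and as the bandwidth rises (shorter sample times), which is the low-dose STEM situation.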

They should use Samsung’s enhancements. Then we’d see the craters on each of those moons…

:-D

Amazing work!!!