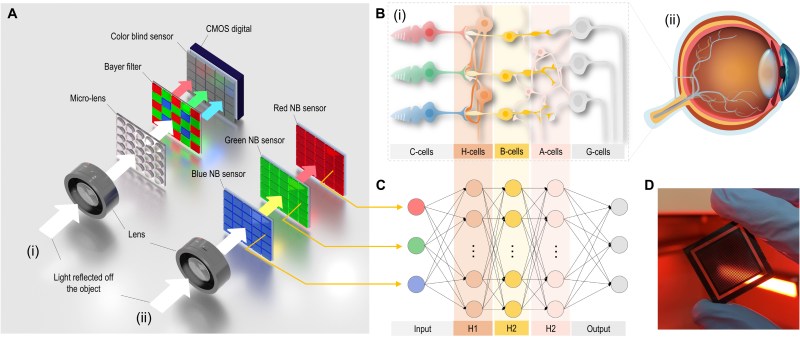

The mammalian retina is a complex system consisting of cones (for color) and rods (for peripheral monochrome vision) that provide the raw image data, which is then processed by successive layers of neurons before this preprocessed data is sent via the optic nerve to the brain’s visual cortex. In order to emulate this system as closely as possible, researchers at Penn State University have created a system that uses perovskite (methylammonium lead halide, MAPbX3) RGB photodetectors and a neuromorphic processing algorithm that performs processing similar to that of the biological retina.

Panchromatic imaging is defined as being ‘sensitive to light of all colors in the visible spectrum’, which in imaging means enhancing the monochromatic (e.g. RGB) channels using panchromatic (intensity, not frequency) data. For the retina this means that the incoming light is used not merely to determine the separate colors, but also the intensity, which is what underlies the wide dynamic range of the Mark I eyeball. In this experiment, layers of these MAPbX3 perovskites (X being Cl, Br, I or a combination thereof) formed stacked RGB sensors.

The output of these sensor layers was then processed in a pretrained convolutional neural network to generate the final panchromatic image, which could then be used for a wide range of purposes. Some applications noted by the researchers include new types of digital cameras, as well as artificial retinas, limited mostly by how well the perovskite layers scale in resolution, and their longevity, which is a long-standing issue with perovskites. Another possibility raised is that of powering at least part of the system using the energy collected by the perovskite layers, akin to proposed perovskite-based solar panels.

(Heading: Overall design of retina-inspired NB perovskite PD for panchromatic imaging. (Credit: Yuchen Hou et al., 2023) )

Two for one. Power generation and image sensor.

Sigma can give up on their Foveon sensor now

Not so fast ;)

https://hackaday.io/project/2042-the-optical-inch/log/219957-perovskites

> and their longevity, which is a long-standing issue with perovskites

And their toxicity. Methylammonium lead would never be approved on the market because of RoHS.

Mind, methylammonium lead is exactly the kind of organic lead compound that is easily soluble and leaches out of waste disposal with rain, and bio-accumulates in plants and animals.

Plain metallic lead or lead oxides aren’t, so in comparison discarded electronics and solar panels containing MALHS would have a dramatically greater pollution potential than, say, a car battery thrown into the woods.

Methylammonium lead halides are organic in name only. They behave like inorganic salts. The lead would go into the soil and probably become lead sulfate almost immediately. Perovskites are harmless. The real issue is that they’re water-soluble so none of this would ever be scalable, and researchers are just piggybacking on this doomed tech because it gets high efficiency numbers for a few days and secures their grant money.

I wonder if there are advantages of using neural networks instead of the classic bias/flat frames. The neural network is doing only calibration because the input (intensity of each color) is already usable.
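For reference, the classic bias/flat-frame calibration mentioned here can be sketched roughly as below. This is only an illustration of the conventional approach, not the method used in the paper; the function name `flat_field_correct` and the tiny 2×2 sensor values are made up for the example.

```python
def flat_field_correct(raw, bias, flat):
    """Classic calibration: subtract the bias frame, then divide by the
    bias-subtracted flat frame normalized to a mean of 1."""
    # Per-pixel response from the flat frame, with the bias offset removed.
    gain = [[f - b for f, b in zip(f_row, b_row)]
            for f_row, b_row in zip(flat, bias)]
    n_pixels = sum(len(row) for row in gain)
    mean_gain = sum(sum(row) for row in gain) / n_pixels

    corrected = []
    for raw_row, bias_row, gain_row in zip(raw, bias, gain):
        corrected.append([
            # Pixels with no usable flat response are set to 0.
            (r - b) * mean_gain / g if g > 0 else 0.0
            for r, b, g in zip(raw_row, bias_row, gain_row)
        ])
    return corrected


# Hypothetical 2x2 sensor: constant bias of 10, uneven per-pixel gains.
bias = [[10.0, 10.0], [10.0, 10.0]]
gains = [[0.5, 1.0], [1.5, 1.0]]
# Flat frame: uniform illumination of 200 units seen through those gains.
flat = [[10.0 + 200.0 * g for g in row] for row in gains]
# Raw frame: a uniform scene of 100 units seen through the same gains.
raw = [[10.0 + 100.0 * g for g in row] for row in gains]

result = flat_field_correct(raw, bias, flat)
```

With these made-up numbers every pixel in `result` comes out at the same value, since the scene was uniform and the per-pixel gain differences cancel. A neural network would have to offer something beyond this per-pixel correction to justify itself.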

Also, I think neuromorphic cameras normally do not have frames; I imagine they process the image more continuously, like the human eye, but it’s not clear from the article.

In the end I don’t know what the novel parts are: the stacked sensors instead of the traditional mosaic filter, or using a neural network to process the image… It is a cool hack, but it being a paper makes you wonder if they are just playing or actually trying to make an alternative to traditional cameras.

There are many more color filter array types than just RGB Bayer patterns*. Unfortunately, this is lost in the way the experimental work in their paper is executed. 4, 5 or 6 different bands could have been implemented and processed back into an RGB image, showcasing both the better color reproduction in scenes with sources featuring a multitude of color temperatures, and a more inspired neuromorphic computing approach to RGB output reconstruction.

I can recommend having a look at their supplemental document to see just how much fuss it was to get the materials, characterization and optical properties worked out, so I can understand why at face value they just made a crappier 32×32 pixel camera with lots of buzzwords :)

* http://www.quadibloc.com/other/cfaint.htm

Good example of why the term “color blind” shouldn’t be used for describing differences in how humans see colors. As can be seen in this graph, people, even scientists, assume it means not being sensitive to colors, which is not what is meant when people are called color blind. This misnomer should really be removed from medical practice/topics.

Color-challenged.

Differently-colored.

Wait, no! Can’t say that one either!

Why oh why did you not come up with even a single alternative then? Come on.

consisting of…

out of…

Both work to convey the same idea.

But combining them is gibberish.

consisting out of…