The Kinect is a depth-sensing camera peripheral originally designed as an accessory for the Xbox gaming console, and it quickly found its way into hobbyist and research projects. After a second version, Microsoft abandoned the idea of using it as a motion sensor for gaming and it was discontinued. The technology did, however, keep evolving as a sensor and eventually became the Azure Kinect DK (spelling out ‘developer kit’ presumably made the name too long). Sadly, it too has now been discontinued.

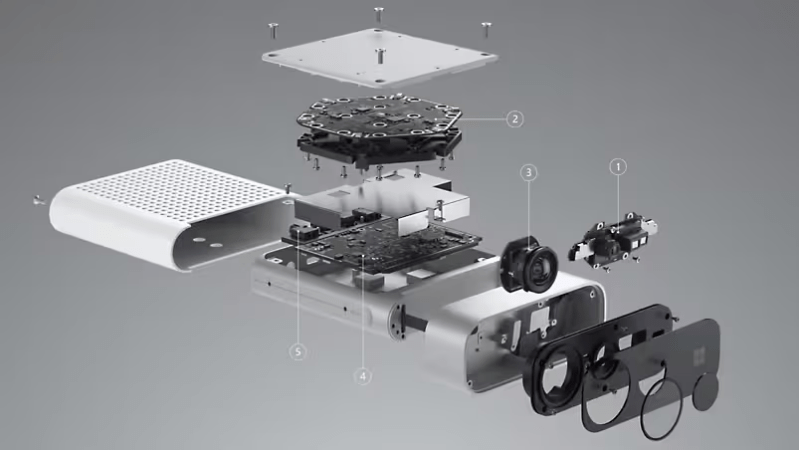

The original Kinect was a pretty neat piece of hardware for the price, and a few years ago we noted that the newest version was considerably smaller and more capable. It had a depth sensor with selectable field of view for different applications, a high-resolution RGB video camera that integrated with the depth stream, integrated IMU and microphone array, and it worked to leverage machine learning for better processing and easy integration with Azure. It even provided a simple way to sync multiple units together for unified processing of a scene.

In many ways the Kinect gave us all a glimpse of the future because at the time, a depth-sensing camera with a synchronized video stream was just not a normal thing to get one’s hands on. It was also one of the first consumer hardware items to contain a microphone array, which allowed it to better record voices, localize them, and isolate them from other noise sources in a room. It led to many, many projects and we hope there are still more to come, because Microsoft might not be making them anymore, but they are licensing out the technology to companies who want to build similar devices.

Could it be that the core patents are expiring soon and the global market will soon be saturated with a glut of cheap clones?

I’m wrong. The core patent (US7433024, “Range mapping using speckle decorrelation”), at least in my mind, since there may be others that are more important, will not expire until 2027-05-18.

Soooo, [Truth] wasn’t!

B^)

Thanks for the correction.

Four years isn’t that far off if you’re thinking of introducing a new product.

I’m sure a legally required next-generation replacement for Freon is being developed for when the current-generation patents expire.

And here I was thinking it was an Israeli company called PrimeSense which created the technology and licensed it to Microsoft and others (e.g. Asus). PrimeSense was purchased by Apple after it was published that Google was working with PrimeSense on a compact 3D camera to fit onto Android tablets. It was over 10 years before anything using the technology showed up in any Apple product. Or maybe Microsoft did invent the technology and that was all a dream. In that dream, Adafruit even had a key role in the Kinect product becoming popular outside of gaming, and Microsoft tried to stop them.

IIRC that was the first Kinect.

Always thought that Johnny Chung Lee was the team lead on the Kinect.

RIP Kinect

RIP Google Tango

AFAIR, MS spent >$1 billion buying everything that moved and claimed to do depth/ToF. The plan was to go with a time-of-flight camera, but R&D didn’t go as fast as planned, and the PrimeSense license was a last-minute effort to salvage the project. The second Kinect was finally the result of those early acquisitions.

Apple owns the Kinect technology, not Microsoft.

If you look up the references in the “US7433024” patent, Microsoft may not own the current technology, but they sure owned a lot of patent landmines in and around that whole area.

No, Apple acquired PrimeSense, the maker of the structured light sensor in Kinect v1, after Kinect v1 was released. Kinect v2 and Azure Kinect used a different technology (time of flight) and different sensors.

You can get it from third parties now.

https://www.orbbec.com/microsoft-collaboration/

I’m really sad this never really took off – not for gaming (I love gaming, but the only thing it was really good for was ‘Lets Dance!’ type games), but for smart home applications.

I really wanted to put a whole bunch of Kinect type cameras around my house, which could then not just act as presence sensors, but actually uniquely identify the person present, and operate as smart speakers/mics for a system where different household members could have different reactions and routines set up based on the id’ed individual (for example, kids wouldn’t have certain options, it would greet each person by name when they come home, etc), have it measure heart rate without having to wear a sensor, fall detection for older individuals, etc.

I know current smart speakers/displays can do some personalization based on voice recognition, but combining it with a depth sensing camera and IR camera and person recognition, and processing all the visual data locally (which even the Xbox 360 could do) would have done a ton for making an actual smart home, vs a home with smart devices like we have now.

I really want a fully integrated smart house where everything is processed by a central server – home assistant is the closest we have, but having this type of tech integrated with it would just be really cool.

I know I could hack together some kind of version of it, but the biggest problem is that the Kinect sensors all require a direct USB connection to a computer to work. I had a Kinect hooked up to an old NUC and aimed at my entryway at my old apartment, and it would greet me and my friends by name whenever we came in, but it was really more of a gimmick, with very little of the capabilities actually used.

But just the fact that it wasn’t forcibly integrated into one of the billions of smart device app ecosystems, and had an actual SDK, made it fairly unique just by itself.

Maybe I should go buy up a couple dozen Kinect 2s or the Azure Kinect DKs, just in case I can figure it out later. At worst, they still make for pretty good machine vision for robots.

Sorry, my previous comment was meant to be a reply to the article, not your comment, must have hit the wrong thing.

Damn, those 3rd party cameras are expensive, though, especially considering they removed the microphone array – you could easily get 5-6 Kinect 2’s for the price of one of their lowest end version, and for the price of the one with truly useful upgrades (PoE powered, onboard processing), you could probably get around 10!

Sadly, without the mic array, none of them are that attractive for my purposes ☹️

There is still the Intel RealSense product line. When I worked with AR stuff I got the impression those were really used more in the industry. (Where the Kinect products were more for building hacks.)

As an example, I believe Intel has ARM and Linux SDKs, so you can run it on a device that isn’t connected to the internet or isn’t running Windows.

https://www.intelrealsense.com/

Those are nice, especially the onboard processing, but the high resolution (1080p) ones all have a field of view of only 69° × 42°, same as the Kinect 2. The 3rd party Kinect cameras go up to 120° x 120° at 4k RGB, which is a massive difference, and the $700 ones have onboard processing as well.

The RealSense cameras do have a big advantage in slightly higher depth sensing resolution and a much higher frame rate (up to 90fps, vs up to 30fps for the Kinect cameras).

Different application targets, I guess. Wide FoV is great for smart home and such, where the low depth FPS isn’t much of an issue, and narrow FoV with high depth FPS is great for monitoring industrial processes and such, where the extra FoV would just take in extraneous data, and you really don’t want to miss even the tiniest event.
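To put rough numbers on the FoV difference mentioned above, here is a minimal sketch that estimates how much of a flat wall each camera would see. It assumes a simple pinhole model with no lens distortion, and the 3 m distance and the `coverage_at_distance` helper are illustrative choices, not anything taken from a vendor SDK or datasheet.

```python
import math

def coverage_at_distance(h_fov_deg, v_fov_deg, distance_m):
    """Return (width, height) in metres of the flat area visible at distance_m.

    Assumes an ideal pinhole camera looking straight at a flat wall.
    """
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg) / 2)
    height = 2 * distance_m * math.tan(math.radians(v_fov_deg) / 2)
    return width, height

# FoV figures quoted in the comment above; 3 m is an assumed room distance.
narrow = coverage_at_distance(69, 42, 3.0)    # 69 x 42 degree camera
wide = coverage_at_distance(120, 120, 3.0)    # 120 x 120 degree camera

print(f"69x42 deg:   {narrow[0]:.1f} m x {narrow[1]:.1f} m")
print(f"120x120 deg: {wide[0]:.1f} m x {wide[1]:.1f} m")
```

Under those assumptions the narrow camera covers roughly 4.1 m by 2.3 m at 3 m, while the wide one covers roughly 10.4 m by 10.4 m, which is why a single wide-FoV unit is so much more attractive for watching a whole room.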