It used to be that developing for microcontrollers was relatively relaxing. These days, even a cheap micro like the Raspberry Pi Pico has multiple cores, networking (for the W, at least), and file systems. Just like desktop computers. Sort of. I found out about the “sort of” part a few weeks ago when I decided to embark on a little historical project. I wanted a file system with a large file that emulates a disk drive. The Pico supports LittleFS, and I figured that would be the easy thing to do. Turns out the Little in LittleFS might be more literal than you think. On the plus side, I did manage to get things working, but it took a… well — dare I say hack? — to make it all work.

History

I’m an unabashed fan of the RCA 1802 CPU, which is, of course, distinctly retro. The problem is, I keep losing my old computers to moves, natural disasters, and whatnot. I’ve had several machines over the years, but they seem to be a favorite target of Murphy’s law for me. I do currently have a small piece of hardware called an Elf Membership Card (by [Lee Hart]), but it lacks fancy features like disk drives, and while it could be expanded, there’s something charming about its current small size. So that led me to repurpose a 6502 emulator for the KIM-1 to act like an 1802 instead. This is even less capable than the membership card, so it was sort of a toy. But I always thought I should upgrade the Arduino inside the emulator to a processor with more memory, and that’s what I did.

I started out with a Blackpill STM32F board and called the project 1802Black. The code is a little messy, since it started out as [Oscar’s] KimUNO code and then my changes piled up on top of it. Also, for now, I shut off the hardware parts so it won’t use the KimUNO hardware. You only need a Blackpill (or a Pico, see below) and nothing else, although I may re-enable the hardware integration later.

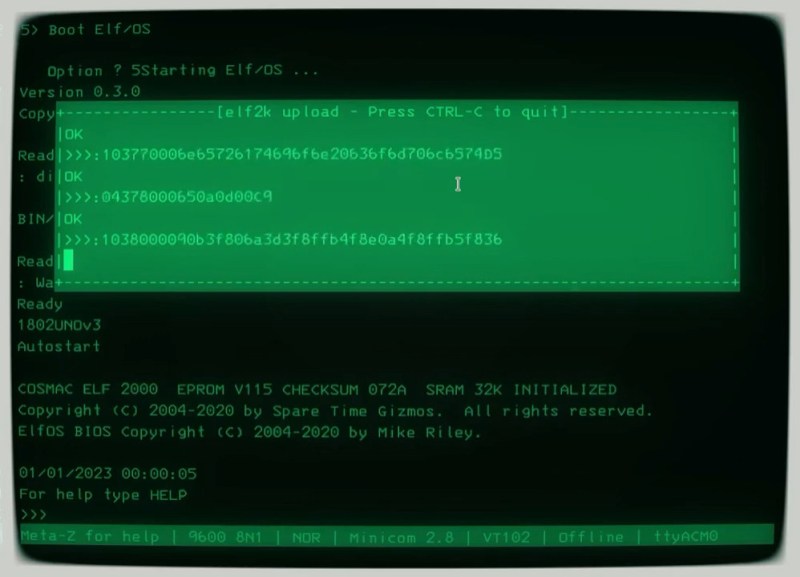

It wasn’t that hard to get it running with just more memory. Still, I wanted to run [Mike Riley’s] Elf/OS operating system, and I also had a pair of Raspberry Pi Picos mocking me for not using them in a project yet. The chip has excellent Arduino board support. But what sealed the deal was noticing that you can partition the Pico’s flash memory, using some of it for your program and the rest for a file system. You can get other RP2040 dev boards with 16 MB of flash, which would let me have a nearly 15 MB “hard drive,” which would have been huge in the 1802’s day. Sounds simple. If it were, though, we wouldn’t be talking.

Emulated BIOS

Elf/OS and the programs that run under it expect a certain BIOS, and the 1802 emulation already hooked many of these calls, since other programs are written to use the BIOS, too. A BIOS call that might, say, output a character gets intercepted, and the Arduino code just does the work.
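To make the interception idea concrete, here is a minimal sketch of how an emulator might hook BIOS entry points. Before fetching each instruction, it checks whether the program counter sits on a known BIOS address. If it does, it runs native code instead of emulating the 1802 routine.

Everything here is hypothetical:

* `Cpu`, `BiosHooks`, and the address `0xFF03` are placeholders, not the real Elf/OS BIOS layout.
* A real 1802 has no single PC register; the P register selects one of sixteen.
* The sketch leaves out the step where the emulator mimics the return to the caller.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <utility>

// Simplified CPU state: a real 1802 selects its program counter from R0..R15
// via the P register; one field stands in for it here.
struct Cpu {
  uint16_t pc = 0;  // current program counter value
  uint8_t d = 0;    // D accumulator, used here to pass a character
};

using BiosHandler = std::function<void(Cpu&)>;

class BiosHooks {
 public:
  // Register native code for a BIOS entry address (addresses are placeholders).
  void add(uint16_t entry, BiosHandler handler) {
    assert(handler);  // an empty std::function would throw when called
    hooks_[entry] = std::move(handler);
  }

  // Call before each instruction fetch. Returns true if the PC was on a
  // hooked entry point and the native handler ran; the emulator must then
  // mimic the return to the caller (not shown).
  bool tryIntercept(Cpu& cpu) {
    auto it = hooks_.find(cpu.pc);
    if (it == hooks_.end()) return false;  // ordinary code: emulate normally
    it->second(cpu);                       // do the work natively
    return true;
  }

 private:
  std::map<uint16_t, BiosHandler> hooks_;
};
```

A character-output hook, for instance, would just forward `cpu.d` to `Serial`.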

However, with the new file system in place, I needed to intercept and flesh out the BIOS’s basic disk API. There’s nothing exotic here. Given a disk block address, you just need to read or write a 512-byte sector. I planned to create a single file the size of the virtual disk drive. Then, each read or write would just seek to the correct position and do the read or write.
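Here is a minimal sketch of that single-image-file plan. It uses standard C stdio as a stand-in for the LittleFS `File` API, and the function names `blockOffset`, `readBlock`, and `writeBlock` are hypothetical. A block number maps straight to a byte offset, so every access is one seek plus one 512-byte transfer.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>

constexpr long kSectorSize = 512;

// Byte offset of a logical block inside one big disk-image file.
inline long blockOffset(uint32_t lba) {
  return static_cast<long>(lba) * kSectorSize;
}

// Read one 512-byte sector; returns true on success.
// Seeking first also satisfies stdio's rule about switching between
// writing and reading on an update stream.
bool readBlock(FILE* img, uint32_t lba, uint8_t* buf) {
  if (std::fseek(img, blockOffset(lba), SEEK_SET) != 0) return false;
  return std::fread(buf, 1, kSectorSize, img) == static_cast<size_t>(kSectorSize);
}

// Overwrite one 512-byte sector in place; returns true on success.
bool writeBlock(FILE* img, uint32_t lba, const uint8_t* buf) {
  if (std::fseek(img, blockOffset(lba), SEEK_SET) != 0) return false;
  return std::fwrite(buf, 1, kSectorSize, img) == static_cast<size_t>(kSectorSize);
}
```

On the Pico, the same pattern would call `seek()`, `read()`, and `write()` on a LittleFS `File`. Nothing in this logic is wrong. The trouble turned out to be what the file system does underneath an in-place write.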

As you might expect, that works. But there’s a problem. It works slowly. I know you might think, “What did you expect? This is a little computer that costs less than $10.” But I mean, like, super slowly. Disk formatting was painful. Even just writing a few sectors would take minutes.

Investigation

I was sure something was wrong, so I did what anyone does today when faced with a technical challenge: I searched the Web. It turns out I wasn’t the only person to notice the slow performance of LittleFS, although not everyone runs into it. LittleFS is fine for a couple of things: reading files and appending to the end of files. Writing to the middle of a file, however, is slow. Very slow. I didn’t look at the code, but it appears that if you write to the middle of a file, the file system has to rewrite everything from the point of the write to the end of the file. If the file is small, that’s not a huge problem. But with a 10 MB file, it takes forever.
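A rough cost model helps show why file size matters. The sketch below simplifies the design described in the LittleFS DESIGN.md notes. It assumes a file is a chain of fixed-size blocks in which each block points back to earlier ones, so changing any byte forces that block and every later block to be rewritten.

The model ignores metadata, the pointer overhead inside each block, and caching. `blocksRewritten` is a hypothetical helper. The 4 KB block size is an assumption that matches the RP2040’s flash erase sector.

```cpp
#include <cassert>
#include <cstdint>

// Simplified model, not LittleFS code: number of blocks rewritten when a
// byte at `offset` changes in a file of `fileSize` bytes stored in
// `blockSize`-byte blocks (the modified block plus every block after it).
uint64_t blocksRewritten(uint64_t fileSize, uint64_t offset, uint64_t blockSize) {
  assert(blockSize > 0 && offset < fileSize);
  uint64_t totalBlocks = (fileSize + blockSize - 1) / blockSize;  // round up
  uint64_t firstDirty = offset / blockSize;                        // block holding offset
  return totalBlocks - firstDirty;
}
```

Under this model, overwriting the first sector of a 10 MB image rewrites 2,560 blocks. The same write in a 32 KB file rewrites at most 8.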

I’d sort of understand it if I were inserting data into the file, but in my case, I was just overwriting data in place. It seems like you should be able to isolate the flash block that changes and reflash only that block. So my choices were: 1) fork LittleFS and try to fix whatever makes it perform poorly; 2) use something else; or 3) work around the problem.

Forking LittleFS would be a big project, and then it is hard to benefit from upgrades. I didn’t really see anything attractive for option 2. So that left me with option 3.

The Final Option

I thought about caching more disk sectors, but since the problem was with writes, a cache would only postpone the slow writes and risk losing any data that hadn’t been flushed yet. If we can’t stop LittleFS from rewriting files, the answer seemed to be to make each rewrite take less time. That means smaller files, and, in fact, that solution worked well.

Each disk call supplies a head, cylinder, and sector. However, the software treats them as a linear block address, so really, it is just a 24-bit number made of the 16-bit cylinder and the 8-bit sector. My first attempt was to store each cylinder in its own file and remember which file was open. When an access lands on a new cylinder, the code opens a file like IDENN.DSK, where NN is the cylinder number in hex. 256 cylinders (256 cylinders × 256 sectors × 512 bytes, or about 32 MB) seemed like plenty, although it would be easy to handle more if you have enough flash. Then, the code uses the sector number to seek to a particular spot in that file. If the file is already open, you just do the seek.
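The arithmetic behind the first attempt fits in a small sketch. The helper names are hypothetical, and the uppercase `/IDENN.DSK` pattern follows the text, with NN as two hex digits so that 256 cylinders fit. Cylinder and sector combine into a 24-bit block address, the cylinder picks the file, and the sector picks a 512-byte slot inside it.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

constexpr uint32_t kSectorSize = 512;
constexpr uint32_t kSectorsPerCyl = 256;  // the sector number is 8 bits

// The 24-bit linear block address is cylinder (high 16 bits) : sector (low 8).
inline uint32_t toLba(uint16_t cyl, uint8_t sec) {
  return (static_cast<uint32_t>(cyl) << 8) | sec;
}

// Image file for a cylinder, e.g. cylinder 0x1F -> "/IDE1F.DSK".
std::string cylinderFile(uint16_t cyl) {
  assert(cyl < 256);  // two hex digits cover 256 cylinders
  char name[16];
  std::snprintf(name, sizeof name, "/IDE%02X.DSK", static_cast<unsigned>(cyl));
  return std::string(name);
}

// Byte offset of a sector inside its cylinder file (0 .. 130560).
inline uint32_t sectorOffset(uint8_t sec) {
  return static_cast<uint32_t>(sec) * kSectorSize;
}
```

Each cylinder file holds 256 × 512 = 131,072 bytes (128 KB), and 256 of them give the 32 MB total. Under the rewrite cost of LittleFS, though, 128 KB is still a lot to copy for a single-sector write near the start of a file.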

This immediately worked better, but it still felt a little slow. A little trial and error showed that it was better to break each track into quarters (64 sectors each). The files now have a letter suffix, like ide00A.dsk, followed by ide00B.dsk, and so on. After ide00D.dsk, the numbering moves on to ide01A.dsk. The read and write calls use the same logic, so I wrapped it up in ideseek:

```cpp
#include <LittleFS.h>

// Globals used below are defined elsewhere in the sketch:
// fide (File), fname (char buffer), sector (512-byte buffer),
// currentcy, currsector, cpos, MAXCYL, DISKACT, dis_diskled.

// Position the image file for head h, cylinder c, sector s (head must be 0).
// Each cylinder is split into four 64-sector files: ideNNA.dsk .. ideNND.dsk.
int ideseek(uint8_t h, uint16_t c, uint8_t s)
{
  unsigned newpos;

  if (!dis_diskled) digitalWrite(DISKACT, 1);  // light stays on if error!

  if (h != 0)
  {
    Serial.println("Bad head");
    return -1;
  }

  if (c > MAXCYL)  // too big?
    return -1;

  newpos = (s & 0x3F) * sizeof(sector);  // compute position inside the 32 KB file

  // See if we already have the right file open (same cylinder, same quarter)
  if (c != currentcy || ((s & 0xC0) != (currsector & 0xC0)))
  {
    int subtrack = (s & 0xC0) >> 6;  // no, so build the name
    char subname[] = "ABCD";
    sprintf(fname, "/ide%02x%c.dsk", (unsigned)c, subname[subtrack]);

    if (currentcy != 0xFFFF)  // if a file is already open, then close it
    {
      fide.close();
      currentcy = 0xFFFF;  // nothing open now, so a failed open below can't leave a stale match
    }

    fide = LittleFS.open(fname, "r+");  // open for read/write (assumes file exists)
    if (!fide)
    {
      fide = LittleFS.open(fname, "w+");  // oops, create file
    }
    if (!fide)  // couldn't open or create file!
    {
      return -1;
    }

    currentcy = c;
    currsector = s;
    if (!fide.seek(newpos, SeekSet))  // go to the right place
      return -1;
    cpos = newpos + sizeof(sector);  // for next time
  }
  else  // file already open
  {
    if (cpos != newpos)  // see if we are already there
    {
      if (!fide.seek(newpos, SeekSet))  // no, so do a seek
        return -1;
    }
    cpos = newpos + sizeof(sector);  // this is where the head will be next time
    currsector = s;                  // remember where we are
  }

  if (!dis_diskled) digitalWrite(DISKACT, 0);  // DISK led off
  return 0;
}
```
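To check the naming and offset arithmetic off the Pico, the same bit manipulation can be pulled into a pure function. This is a hypothetical host-side helper: `ImageSlot` and `locateSector` are not part of the project. It uses the same masks and format string as `ideseek`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

struct ImageSlot {
  std::string file;  // which 32 KB image file holds the sector
  uint32_t offset;   // byte offset of the sector inside that file
};

ImageSlot locateSector(uint16_t cyl, uint8_t sec) {
  static const char kSuffix[] = "ABCD";
  const unsigned quarter = (sec & 0xC0) >> 6;                    // top two bits pick A..D
  const uint32_t offset = static_cast<uint32_t>(sec & 0x3F) * 512;  // low six bits: 0..63
  char name[24];
  std::snprintf(name, sizeof name, "/ide%02x%c.dsk",
                static_cast<unsigned>(cyl), kSuffix[quarter]);
  return {name, offset};
}
```

For example, cylinder 1, sector 0x45 lands in `/ide01B.dsk` at offset 2,560, which is sector 5 of that quarter. `%02x` produces lowercase hex, so cylinder 0x1A maps to names like `/ide1aC.dsk`.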

Now, when LittleFS needs to rewrite a file, that file is never larger than 32 KB (64 sectors of 512 bytes), which seems to work well enough. Seeking also seems to be expensive, so the code assumes each BIOS call reads or writes exactly one sector and advances its idea of the current position accordingly. If you ever called ideseek for any other reason, this would be dangerous, since each call primes the next one to believe the current position is one sector past the last one.
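The position bookkeeping can be modeled on its own. The sketch below uses a hypothetical `SeekTracker` class, not code from the project, that mirrors the `cpos` logic. It counts a seek only when a request is not the sector immediately after the previous one.

It also assumes exactly one 512-byte transfer follows every call. That is the same assumption that makes calling `ideseek` for other purposes risky.

```cpp
#include <cassert>
#include <cstdint>

class SeekTracker {
 public:
  // Returns true if a real seek would be issued for this byte offset.
  bool access(uint32_t newpos) {
    bool needSeek = (newpos != cpos_);
    if (needSeek) ++seeks_;
    cpos_ = newpos + kSectorSize;  // assumes one 512-byte read/write follows
    return needSeek;
  }

  // Forget the position, e.g. after opening a different image file.
  void reset() { cpos_ = kNoPosition; }

  unsigned seeks() const { return seeks_; }

 private:
  static constexpr uint32_t kSectorSize = 512;
  static constexpr uint32_t kNoPosition = 0xFFFFFFFF;
  uint32_t cpos_ = kNoPosition;
  unsigned seeks_ = 0;
};
```

Reading all 64 sectors of one quarter-track file in order costs a single seek, and jumping back to sector 0 costs one more.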

In Practice

If you want to see the OS running on this file system, I have a few quick and dirty demo videos below. Or grab a Raspberry Pi Pico and learn something about the 1802. By the way, my intent here is not to knock LittleFS. It does great for storing small configuration files and logging data that tends to stream to the end of a file. Those are the overwhelming use cases. I could have bitten the bullet and used the flash interface directly, which probably would have been a lot smarter and faster. If you wonder why anyone wants to run a disk operating system on old hardware, real or emulated, we’ll refer you to our thoughts on retrocomputing.

Hahahahahahaha, try it on an ESP8266-01S; you’re working with a lot of room!!! We deploy a lot of these in production, and wrapping your head back around the good old days when so much amazing tech is being thrown at you can be rough. ;)

Why use a file system at all for this? Direct flash access would solve it (?). ‘Little’ is not the problem; ‘FS’ is.

I considered that. However, I was too lazy to figure out how to map 512-byte file blocks onto 4K flash sectors efficiently. I _think_ LittleFS does that fairly efficiently, except, of course, as noted above.

I don’t think littlefs’s write mapping is efficient at all, and the read mapping is trivial. Just rewriting the whole 4K sector for each 512-byte write shouldn’t be particularly slow, and caching consecutive writes is quite straightforward, too.

One option would be to use an FTL (Flash Translation Layer) like Dhara (https://github.com/dlbeer/dhara) instead of a file system. Okay, it is meant for NAND chips, but there are probably similar projects for NOR flash.

You don’t even need a full FTL unless you are worried about bad sectors and wear leveling. For a hobby/toy system that isn’t going to get a ton of writes over its lifetime, all you need is a cache.

When you start writing to a block and the write is smaller than the block size, read the whole block from flash into the cache if it isn’t already there. Only set the dirty flag for the block if the data has actually changed. When you run out of free cache slots, flush the oldest one out to flash and repeat the process. Write the last byte in a block? Mark it for immediate flushing. At a reasonable interval, flush any dirty blocks that haven’t been written recently.

Wrap it up with some thread safety so you don’t flush or free a block in the middle of an operation if you are using interrupts. If you are using purely cooperative scheduling (i.e., calling the cache flush/free function from your main loop), you can skip that.
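Here is a minimal sketch of the cache described in this comment. Eight 512-byte disk blocks share each 4 KB sector, and a sector is rewritten only when its data actually changed.

Several assumptions are worth calling out:

* Flash is simulated in RAM, and `SimFlash` and `SectorCache` are hypothetical names. On a Pico, the rewrite would go through the SDK’s `flash_range_erase()` and `flash_range_program()`, which carry alignment and execute-from-flash constraints not handled here.
* Eviction picks the least recently used slot, as one reading of "the oldest."
* The periodic flush is reduced to a `flushAll()` you would call from the main loop.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

constexpr size_t kBlock = 512, kSector = 4096, kPerSector = kSector / kBlock;

// RAM stand-in for NOR flash: a sector rewrite is one erase plus program.
struct SimFlash {
  std::vector<uint8_t> mem;
  unsigned erases = 0;
  explicit SimFlash(size_t sectors) : mem(sectors * kSector, 0xFF) {}
  void eraseAndProgram(size_t sector, const uint8_t* data) {
    ++erases;
    std::memcpy(&mem[sector * kSector], data, kSector);
  }
};

class SectorCache {
 public:
  SectorCache(SimFlash& f, size_t slots) : flash_(f), slots_(slots) { assert(slots > 0); }

  void write(size_t lba, const uint8_t* src) {
    Slot& s = load(lba / kPerSector);  // read-modify-write: whole sector cached
    uint8_t* dst = &s.data[(lba % kPerSector) * kBlock];
    if (std::memcmp(dst, src, kBlock) != 0) {  // only dirty on a real change
      std::memcpy(dst, src, kBlock);
      s.dirty = true;
    }
    if (lba % kPerSector == kPerSector - 1) flush(s);  // last block: flush now
  }

  void read(size_t lba, uint8_t* dst) {
    const Slot& s = load(lba / kPerSector);
    std::memcpy(dst, &s.data[(lba % kPerSector) * kBlock], kBlock);
  }

  void flushAll() { for (Slot& s : slots_) flush(s); }  // call periodically

 private:
  struct Slot {
    bool valid = false, dirty = false;
    size_t sector = 0;
    unsigned long lastUse = 0;
    std::array<uint8_t, kSector> data{};
  };

  void flush(Slot& s) {
    if (s.valid && s.dirty) {
      flash_.eraseAndProgram(s.sector, s.data.data());
      s.dirty = false;
    }
  }

  // Return the slot holding `sector`, loading it into a free or the
  // least recently used slot (flushing that slot first) if needed.
  Slot& load(size_t sector) {
    ++clock_;
    for (Slot& s : slots_)
      if (s.valid && s.sector == sector) { s.lastUse = clock_; return s; }
    Slot& victim = *std::min_element(slots_.begin(), slots_.end(),
        [](const Slot& a, const Slot& b) {
          if (a.valid != b.valid) return !a.valid;  // free slots first
          return a.lastUse < b.lastUse;
        });
    flush(victim);
    std::memcpy(victim.data.data(), &flash_.mem[sector * kSector], kSector);
    victim.valid = true;
    victim.dirty = false;
    victim.sector = sector;
    victim.lastUse = clock_;
    return victim;
  }

  SimFlash& flash_;
  std::vector<Slot> slots_;
  unsigned long clock_ = 0;
};
```

With this scheme, eight sequential sector writes into one 4 KB region cost a single erase/program cycle instead of eight.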

If only there was some way to find out information about a project on a single page… one might call it a project page… but to find that you might need some way to search… an engine of sorts… a search engine. Oh if only we had these things!

https://github.com/littlefs-project/littlefs

* Power-loss resilience
* Dynamic wear leveling
* Bounded RAM/ROM

Also, CNX-software ran a nice article with an animation showing how wear levelling benefits LittleFS vs FAT: https://www.cnx-software.com/2018/01/02/littlefs-is-an-open-source-low-footprint-resilient-file-system-designed-for-tiny-devices/

I probably have one or two 1802s around here somewhere if you want them. Maybe even a 1602.

I still have my Quest 1802 system. I never could figure out assembly language programming for that CPU; it is a weird beast. I just couldn’t wrap my head around it. But the CPU has a few dedicated I/O pins (the Q output and four EF flag inputs), which made it easy to connect an SPO256 and gave me great joy.

I had a Quest system back in the day. Lost it to a hurricane. My first articles were in Quest data in the late ’70s.

LittleFS uses copy-on-write for power-loss resilience and has a small writable block size. That will slow you down.

This is worth checking out:

https://github.com/littlefs-project/littlefs/blob/master/DESIGN.md

That rationale document is awesome! Thanks.

Possibly the best explanation of the design trade-off I’ve seen! And about halfway through, you’ll see exactly why writing to blocks in the middle of a file is so slow (it has to copy every block after that one!).

Has anyone found a good way to transfer/manage files on LittleFS on the RPi Pico without Arduino 1.8?