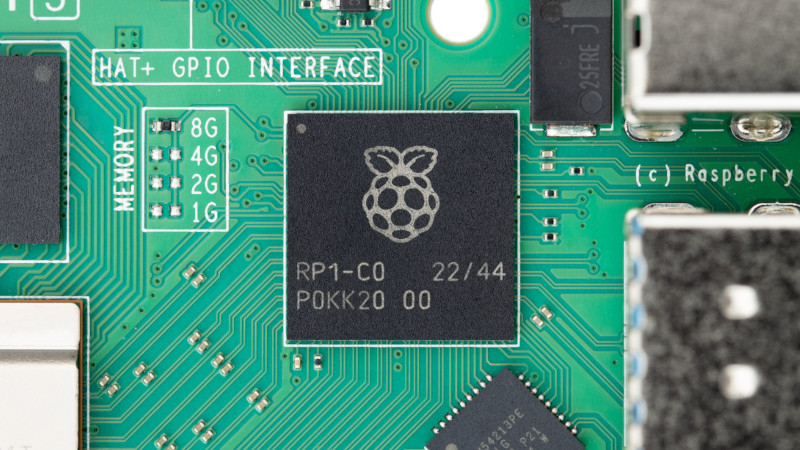

We’ve had about a week to digest the pending arrival of the Raspberry Pi 5, and it’s safe to say that the new board from Cambridge has generated quite some excitement with its enhanced specifications and a few capabilities not seen in its predecessors. When it goes on general sale we expect it to power a slew of impressive projects in these pages, we look forward with keen anticipation to its companion Compute Module 5, and we sincerely hope to eventually see a Raspberry Pi 500 all-in-one. It’s the latest in a line of incrementally upgraded single-board computers from the company, but we think it conceals something of much greater importance than the improvements that marked previous generations. Where do we think the secret sauce lies in the Pi 5? In the RP1 all-in-one PCIe peripheral chip, of course: the chip that provides most of the interfacing on the new board.

A Board Maker Becomes a Chip Designer

So far we’ve seen three pieces of custom silicon from Raspberry Pi: the RP1, the RP2040 microcontroller (which is effectively an RP2), and the RP3 system-in-package found in the Pi Zero 2 W. The numbering denotes their development timetable, and the RP1 project dates back to 2016.

The RP2040 uses a vanilla set of ARM parts joined by Pi-designed PIO (programmable I/O) peripherals, while the RP3 is the same Broadcom SoC found in the original Raspberry Pi 3, sharing a package with 512 MB of RAM. With each successive product they are evidently becoming more of a chip design house and less of a board maker, and their capabilities grow with each iteration.

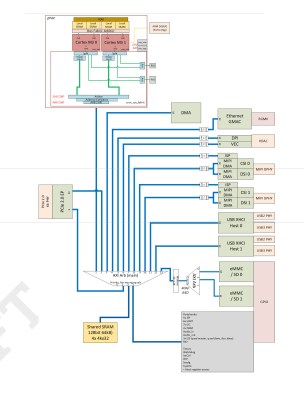

The RP1 is a chip that puts nearly all of the Pi’s external interfaces onto a PCIe peripheral, but we think it’s more than that. What they have done is take everything that makes a Raspberry Pi a Raspberry Pi and move it off the SoC into a chip of its own. From a hardware perspective this makes some of the interfaces faster and adds a few new capabilities, but we think the true value of this chip to the Raspberry Pi folks is strategic.

All of their previous boards used the built-in peripherals of the Broadcom SoC, with the addition of third-party Ethernet and USB hub chips, but an RP1-based Pi frees them from relying on the SoC for its interfaces. Because the RP1 is now a Pi-in-a-chip that needs only an application processor, memory, and a GPU alongside it, they can build a Pi around any suitable processor and GPU, and it will work just like the previous models provided they maintain their rock-solid operating system support record.

A Pi Beholden To No Other

In theory, then, with the RP1 in hand they could make a Pi with any CPU that’s powerful enough and has a PCIe interface, but this is unlikely to mean a radical departure in terms of architecture. We don’t expect a RISC-V Pi or an x86 Pi, but it does mean that an ARM Pi no longer has to be built around a chip from Broadcom. They can now make a Pi with another manufacturer’s silicon at its heart, but if we had to place a bet, we’d guess that their roadmap has Raspberry Pi silicon front and centre. Our crystal ball is a little cloudy, but if there’s an RP4 or an RP5 in the works, it wouldn’t stretch the imagination too far for them to put application cores and a GPU in it as the driving force behind a future Raspberry Pi 5 or 6.

Whatever happens in the future, the RP1 is definitely more than just a peripheral and its true importance to the Raspberry Pi as a product will become apparent as their product cycle evolves. We’ve been treated to a limited data sheet which has revealed a few new capabilities such as an analog-to-digital converter, and there are hints of a few more revelations to come. If we had a wishlist it would be for an RP1 with some of those RP2040-style PIOs. Meanwhile the mere thought of a future Pi with a Raspberry Pi application processor is intriguing, not least because they might be more amenable to releasing their blob source than Broadcom have been.

We’re reminded of the famous Rolling Stones song then, in that we can’t always get what we want, but if even a few of the above predictions for the RP1 come to pass, we just might find we get what we need.

Hear me out. AMD gives them an RDNA3 license for free/cheap to integrate into their own design. This will be a super cheap ROCm test board that even NVIDIA fanboi students have a reason to own. With 16GB shared memory, it can run inference on pretty big models (slowly). This will start a revolution of in-house offline AI assistants and AMD will get a big foot into the door of machine learning.

AMD architecture is too heavy vs. ARM. Nice idea, but it ain’t gonna happen.

Mike mentioned using RDNA3, you say to replace it with ARM.

What?

Atchison Institute LLC is designing a 21 mm x 21 mm die that you can mount a CPLD on, then flip-chip the stack onto a RasPi-Z-W-2 or any SOM of interest. The main die has a 3×3 grid of 7 mm x 7 mm dies that consist of (Mu0/VID-DMA/Nu0) functions X (TTL/FET/DIF) architectures.

The object is to make a universal test pattern that allows the process development engineer to make almost any process change and still make a product.

The aside is that when you run standard matched Si/metal-gate CMOS and bipolar architectures on the same wafer, you get something that an LLM can MUX up into an “anything you want” circuit.

This is the direction all Ph.D. dissertations will be going. Hooked up with a good 3D printer and a 100T NVIDIA “will-power” processor, this “Super FPG” will be on every spaceship.

Interesting. Can you share any more details? I couldn’t find any in your profile link.

The idea is to use older gate array “process” evaluation techniques and cutting-edge “chiplet” assembly techniques to create a self-testing die that is actually several different dies. Each die has a simple function (e.g. a memory device) that is implemented in several different design methodologies. This provides enough information for a new product introduction product engineer, or an LLM alone, to figure out what is not working as expected and why.

I submitted an NSF grant proposal and it was rejected because the reviewers thought it was too similar to a multi-project wafer. I went ahead and finished the preliminary phase one work on my own. Some really interesting new things emerged out of this work. In addition, some really interesting understandings of the foundational “Product Sensitivity Analysis” also spontaneously emerged.

The reviewer said Synopsys could provide this capability as a service. I cannot reach anyone at Synopsys to find out.

I think a lot of people who need this kind of performance but prefer the RPi platform would just use the Coral TPU. It’s a good idea, but I don’t think there would be enough demand for it.

There is always demand for a better Raspberry Pi. There is always demand for open source drivers. If you can run some AI on your Raspberry Pi with okayish performance, there would be people developing for it. And I would be one who’d use it.

If you have to buy a dedicated accelerator / board to even try running your models on them, most people won’t bother. But if the hardware you use anyways can run your stuff, there is no entry barrier. A Raspberry Pi is useful for a lot of tasks besides (in this wishful thinking experiment) just AI.

The appeal of SBCs has always been price.

Compute power is a worthless metric when the platform costs $140.

The RPi was popular because it had a useful amount of power at a $30 price.

It was inexpensive enough to buy a few if you were worried about breaking one.

It was inexpensive enough to use in schools. Kids could actually keep them.

It was inexpensive enough to build into projects.

It was inexpensive enough to let people try it out without having to invest in an ecosystem.

It hasn’t been inexpensive enough to use in a long time though…

Wouldn’t it be great to have a PCIe Card with the RP1 that you can plug in your x86 machine? You could have a Threadripper machine with GPIOs..

Such cards already exist. And you can use LPT and RS-232 ports as GPIO. Actually, these older ports might be much easier for the programmer to use. With some custom interface you can obtain as many IO lines as you need, and you could expand these further by adding more LPT port cards.

LPT and RS232 cards are totally obsolete and have been for a long time

Tell that to FTDI

Kind of proves the point, as that’s TTL over USB with a UART chip in the USB-A housing. The old RS-232 AIBs (and, for a period, the ports built into mobos) used 9-pin D-sub connectors and required ±12 (or 13) volts. Not only do modern systems not even include D-sub, but ±12 V rails are a rarity. And since converters fit into USB cables, there’s little incentive to do it any differently on consumer computers.

25-pin D-Sub Line Printer Ports are even more obsolete, and have been since we stopped calling PCs “IBM-compatible”. The whole sub-thread is silly as Steve M. seems to be asking for PCIe speed capable GPIO to the processor, not just a way to program, debug, and monitor over serial/parallel ports.

There are still a lot of mainboards which come with one COM port and an LPT port on board.

Just as example: https://www.gigabyte.com/de/Motherboard/GA-IMB1900TN-rev-10#ov (look in the picture bottom left)

Usually the ones with an LPT port are these mini-ITX boards. A COM port is usually on every mainboard, even the full-size gaming mainboards.

No they are not. LPT is just GPIO that used to be needed for a printer. GPIO is GPIO.

RS232 is a uart with line drivers. Uart is Uart.

It is no less obsolete than arduino.

You don’t need PCI to do IO either. This chip is nothing special nor does it give any real capability other than to provide the Pi people with a path away from other people’s IP… which can help their supply chain management.

USB is a fantastic way to add inexpensive IO to any machine. Uarts, SPI/I2C, GPIO (FTDI), ethernet (Microchip), etc.

Pis are too power-hungry and overpowered for most of what my company does, and have been for a while. There are processors from Microchip and NXP that make adding Linux-based computing to a product super easy, and Yocto makes getting a customized kernel running relatively straightforward. Their inability to deal with the supply chain issues was the final straw… they still don’t have reasonable quantities of compute modules available. It shut down our product line for nearly 2 years.

We are done with Pi. I am sure hobbyists will still love it but I expect a lot of OEMs are seriously reconsidering any commitment they have to the platform.

In a couple of Raspberry Pi videos with Eben Upton they’ve mentioned they had RP1 chips on x86 PCI-E boards during development – but the lack of Device Tree support in Intel Linux made them trickier to use than on ARM.

“You could have a Threadripper machine with GPIOs..”

They actually already have them. High-end CPUs aren’t actually much different than microcontrollers in that they usually have an array of low-speed GPIOs which can be muxed with low-speed peripherals. It’s just that the motherboard design typically uses almost all of them, and even if they don’t, they don’t expose them to users anyway (partially because it’s risky, as it’s a pin directly connected to one of the most expensive components).

But as another person mentioned, PCIe cards with GPIOs aren’t hard to find.

Any sufficiently powerful FPGA board with PCIe (e.g., ECP5-based ones) can do far more than that.

Sure, but it doesn’t come with upstream kernel drivers and the Raspberry Pi community with examples.

There is indeed an upstream kernel driver for Xilinx PCIe IPs, it was very easy to use.

Cost is the other reason, if they can produce an RP1 PCIe card with pricing on the RPI Pico end of the spectrum, it would be a game changer for CNC use along with anything else which needs high speed peripherals.

IMO, an ideal board might have a PCIe 2.0 x8 interface with two RP1 chips, each taking 4 lanes. They’d then include an RP2040 for each RP1 (connected via SPI and UART) to provide PIO capabilities. That would be a lot of GPIO, hardware serial peripherals and PIO, with a load of MIPI as a bonus.
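For a sense of scale behind this proposal, here is a minimal sketch of the theoretical per-direction bandwidth of PCIe 2.0 links. It assumes the standard PCIe 2.0 rate of 5 GT/s per lane with 8b/10b line coding, and that each RP1 sits on its own x4 link as it does on the Pi 5; the function name is illustrative, and real throughput will be lower once packet overhead is counted.

```python
# Theoretical PCIe 2.0 bandwidth per direction: 5 GT/s per lane with 8b/10b
# line coding, so only 8 of every 10 bits on the wire carry data.
PCIE2_TRANSFERS_PER_S = 5_000_000_000  # per lane
DATA_BITS, LINE_BITS = 8, 10           # 8b/10b encoding


def pcie2_bytes_per_s(lanes: int) -> int:
    """Per-direction bandwidth in bytes/s after 8b/10b coding,
    before TLP/DLLP packet overhead (illustrative helper)."""
    data_bits_per_s = PCIE2_TRANSFERS_PER_S * lanes * DATA_BITS // LINE_BITS
    return data_bits_per_s // 8


# One lane, one RP1 on four lanes, and the proposed x8 card shared by two RP1s.
for lanes in (1, 4, 8):
    print(f"x{lanes}: {pcie2_bytes_per_s(lanes) // 1_000_000} MB/s per direction")
```

On these assumptions, splitting an x8 link between two RP1s gives each chip the same 2 GB/s per direction that a single RP1 gets on its x4 link.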

I’m hoping the RP4 chip takes the concept of PIO and runs with it, as primarily a massive external PIO chip.

Wouldn’t it defeat the purpose? SPI will add a lot of latency.

Most of the machine output switches are slow-as-molasses relays, for coolant flow or dust sucker or driver-enable or other peripherals. The realtime control of movements is also fairly slow compared to SPI that can clock at dozens of MHz; that can bitbang even the step-dir for the steppers.
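To put rough numbers on the SPI argument, the sketch below estimates how long one SPI update takes and how many fit into one stepper step period. The clock rate, update size and step rate are hypothetical values chosen for illustration, not figures from the thread, and the estimate counts only the time to clock bits on the wire; chip-select setup, driver and OS scheduling latency are ignored and can dominate in practice.

```python
# Back-of-envelope: how many SPI updates fit inside one stepper step period?
# All parameter values below are illustrative assumptions, not measurements.


def spi_transfer_time_s(n_bytes: int, sclk_hz: float) -> float:
    """Time to clock n_bytes over SPI (8 bits per byte), ignoring
    chip-select setup, driver overhead and host-side latency."""
    return n_bytes * 8 / sclk_hz


def updates_per_step(step_rate_hz: float, n_bytes: int, sclk_hz: float) -> float:
    """Number of back-to-back SPI transfers that fit in one step period."""
    step_period_s = 1.0 / step_rate_hz
    return step_period_s / spi_transfer_time_s(n_bytes, sclk_hz)


SCLK_HZ = 20e6         # assumed SPI clock ("dozens of MHz")
BYTES_PER_UPDATE = 2   # assumed: e.g. one byte of step bits, one of direction bits
STEP_RATE_HZ = 50e3    # assumed peak step pulse rate

t = spi_transfer_time_s(BYTES_PER_UPDATE, SCLK_HZ)
n = updates_per_step(STEP_RATE_HZ, BYTES_PER_UPDATE, SCLK_HZ)
print(f"{t * 1e6:.1f} us per update, about {n:.0f} updates per step period")
```

With these assumed numbers each 2-byte update takes 0.8 µs on the wire, so roughly 25 updates fit into a 20 µs step period.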

@Thomas Shaddack for this slow I/O you don’t need a PCIe based device in the first place. Just use an SPI GPIO multiplexer.

There is a ton of applications where GPIO switching latency really matters – e.g., BLDC control.

For purposes of CNC control, the proposed RP2040 would suffice for the latency-critical bits, and a slow serial link to the RP2040 would be enough for the rest. Which brings us back to the realization that PCIe would be total overkill.

Now put the RP1 on a PCIe card and maybe an M.2 card so we can have all the GPIO we need on our PCs.

Once we get a datasheet of the same quality as for the RP2040, it will be a wonder indeed.

The possibility of mating it to any chip with a PCIe interface certainly opens doors. It will be interesting to see how that develops.

I don’t get it. Nothing’s been said about any availability of “RP1” to 3rd parties, especially common folks like random Joe Hobbyist?

Will we ever be able to just order 10pcs from Mouser or Digikey?

“random Joe Hobbyist” will just buy a RPI 5 and be done with it ;) .

I don’t expect to see RP1 chips sold without RPi-5 attached to them.

They’ve said it won’t be available independently.

Which makes sense, from the company’s strategic PoV. So that makes me wonder why we’d really need a quality datasheet like the one for the RP2040?

I get that a nice good datasheet could still be *somewhat* useful, even in the context limited to standard RPi boards.

Maybe some cool Hacks & tweaks can be made even within the original board HW.

But the “mating it to any chip with a PCIe lane” part sounds like a hypothetical pipe-dream, if the ICs can’t be sourced anyway. Unless that proposal involves desoldering the ICs from official RPi’s, reballing them and use in some custom HW design.

While that’s probably perfectly possible, and would indeed be interesting to see, it would certainly not be a very practical or common occurrence :)

As something to program against: if it’s part of the CM5 or other hardware they produce, being able to program and support it independently is extremely useful. It also essentially has a higher-end RP2 built in, with dual Cortex-M3 cores (over the M0+ in the RP2), so people will need to be able to do similar things as with the RP2, using it as a coprocessor to the main Arm Cortex-A cores.

In short: no. It’s a chip that allows its owner to make a Pi with almost any application processor. They don’t want their direct competitors to have it, so they won’t be selling it.

This. Even though it sounds financially compelling, and a lot of people may want to buy it, it’s not gonna happen. On the flip side, if this were ever sold on the open market, imagine it becoming a higher-grossing product than the Pi models themselves.

It also has a pair of Cortex-M cores on it like the RP2 chip, although this time M3 rather than M0.

The difficulty of “put application cores and a GPU in it” is that, while application cores come in free, open and licensable varieties pretty readily, GPUs are complex beasts and open GPU cores with open drivers are very rare things.

The fact is that anyone can design an ASIC and get it into production, if they can afford it. You can buy a PCI-E card with powerful FPGA chip for hardware development, and then send your finished design to be manufactured. The real cost is in development of component parts of that ASIC. That’s why most GPUs are developed as closed-source projects by major corporations, and they won’t open them. And the basic stuff, like 2D and 3D rendering is not that hard to do – we developed the theory and algorithms before we had hardware powerful enough to perform the calculations. But while hardware was catching up to those basic algorithms, we developed much more advanced and complex ones. Even Intel, a major player in computer hardware, has problems catching up to nVidia and AMD on gaming GPU market. Open Source hardware will be 5 to 8 years behind those major players, and that’s quite a lot.

Then there are patents.

Not only that, at least if closed source drivers are acceptable there are many GPUs one can license. But a modern application processor will need to be made in at least 16nm tech or so. Compared to 40nm this is very expensive, and things like 5V tolerant IO will need special process characterization.

Get a cheap FPGA over PCIe (with a full open source toolchain), that would make a worthy product.

Intel was supposed to be bringing us CPUs with integrated FPGAs but if it ever happened it only happened at the extreme top end.

I am a super newbie hobbyist but have always been fascinated by FPGAs. I played around with a cheap FPGA and a super cheap CPLD. I managed to get a soft CPU core installed on the FPGA (not that I got it to do much) and wrote some basic code for the CPLD to have it control some addressable LEDs. The CPLD was vastly quicker than a similarly priced microcontroller but not exactly easy to tinker with.

Xilinx (AMD) have several ARM+FPGA SoCs: Zynq. Altera (Intel) have similar ones.

maybe i’m being unduly negative. i admit, i was slow to see the genius of the RP2040. but to me this looks like a southbridge that they’re intent on only pairing with awful closed broadcom chips, a continuation of their legacy.

i can tell Jenny List and i are living in different worlds from this phrase “rock-solid operating system support”. what i have always looked for in a linux board is documented interfaces so that the open source linux kernel can operate in full openness. but what we’ve got instead is a bunch of thin (and ugly, and undocumented, and changing) open source wrappers around closed source firmware. it’s very useful but i’m not going to call it rock solid. my personal perspective is that the moment you start poking at it, the ground crumbles under your feet and you realize you don’t actually control *or even understand* anything that’s going on.

it’s a fine compromise in some ways. i live with the same compromise with a lot of closed hardware on my laptop. but there’s another difference: it’s not just smartphone chips, even the newer mobile celerons from intel show that it’s possible to have near-zero idle draw paired with an enormously powerful CPU. but the raspberry pi 5 moves even further away from that ideal than the boards before it! (unless i have missed something??)

a pi-in-the-sky dream that they may someday move beyond these awful broadcom chips is all i’m seeing. but until i see that reality, it will look like just another companion to an awful closed source broadcom chip. there surely are users and use cases who will appreciate the greater performance of raspi 5 but there’s just such an enormous demand for a raspberry pi that is embeddable, that cares even a little bit about idle power draw, and that has some openness to these core functions…and i’m just not seeing any steps in that direction yet. the RP1 just seems to be a companion to their same old established harmful trajectory.

I guess I don’t see it as harmful. They sell more than they can make :) . What I do see is that with the RP1 chip, the Raspberry Pi people now have all their options open. Any ARM CPU (or other, RISC V?) can be on the table for any future products utilizing the RP1 chip. I see the RPI-5 as just a stepping stone to the future. Could one say a ‘proof of concept?’ Could be wrong, but that is how I see it.

As for idle power of the RPI-5, we’ll see where that heads. I know one of the talks said something about a sleep mode. Also, the RPI-5 CPU can be clocked ‘down’ to 1.5GHz, I believe I read. So that ‘should’ help the idle and max power used, I would think. It all depends on one’s intended use of the board.

Oh, and a lot of projects I bet can simply be handled with a Pico W or any RP2040 board. Only need the RPI-3,4,5 or CM modules for your most complex, CPU intensive projects where power ‘usually’ isn’t a concern. I know I’ve swapped out some RPI Zeros for Pico Ws for example. Better fit.

eh, i am ever more convinced they don’t *want* to leave broadcom behind. i’ll believe it when i see it, or probably (given my biases) some years after that :)

but i’m responding to “I don’t see it as harmful” because i super duper see it as harmful but it took me a moment to put my finger on it.

a lot of use cases, the pi really is perfect. but for the cases where it isn’t, it perpetrates this harm on the people who use it. they fight with the pi, they invest time learning the insane limits of the pi. if you go looking for it, you can find a lot of incredulous posts that clearly represent someone who has sunk dozens or hundreds of hours into it, and simply cannot believe the limitation they ran into. as one of those people myself, this harm really offends me. but that’s superficial compared to the deeper harm.

i’m not the regular user, so the problems i ran into are not representative of the problems ‘regular users’ face. and the dozens or hundreds of users who are situated similarly to me, who are outraged at the closed and proprietary problems they run into, are a tiny minority too. but we are a very specific minority. we are the open source community. we are the people who contribute back patches, who release source code to our one-off hacks that can serve as a model to others, and who generally move this whole open source shindig forward.

and attracting us to pi is a harm. pi is a honey trap to us — we come to it but all we get is sticky with no sweetness. there’s a real opportunity cost here. if broadcom hadn’t come up with this devastating way to sell their extremely closed ecosystem to everyone, we wouldn’t be wasting our time with raspberry products. instead, we’d be *investing* our time into something better, probably something rockchip or so on.

the fact that none of the competitors have managed to build enough of an open source community to get over the initial hump and generate a well-supported ecosystem is exactly synonymous with the fact that raspberry has managed to become such a pervasive entry point to the arm sbc world. the enormous number of raspberry promotion articles on sites like this one lead directly to the hundreds of people who could build up those open source communities each wasting dozens or hundreds of hours banging their heads on this regressive closed environment. each of us could have contributed one useful nugget to another project in the time it took us simply to become outraged at raspberry.

that’s harmful. they’re a parasite on the open source community itself.

Just stop using them if they’re so bad???? Who is forcing the pi down your throat exactly? The intended market loves the pi. If you’re an outlier stop trying to force yourself to use something you don’t have a use for.

i really tried to answer your specific questions in my above comment, and i will try again.

i got raspberry pi because i relied on misinformation from places like this website that indicated it was unusually open. in fact, it’s unusually closed, it’s about as closed as a modern PC. the only thing it’s got going for it is that the vendor has already struggled with collecting the glue for the closed source resources into a tested bundle. that’s valuable but it’s not unusually open.

i then invested about 30 hours into the hack that i wanted. it took more effort than it should have, because of the proprietary barriers i ran into. i had to work around bugs hidden behind the proprietary barrier. if it had been open, i would have *fixed* those bugs and everyone would benefit from the fix. but instead all i got is a work-around so i could complete my own project.

then raspberry pi changed the interface in the very next OS version, so now my one-off hack itself is useless to everyone else and a ticking timebomb to myself.

there are at least a hundred people in a similar situation to me, who have each invested these hours without generating anything of value to the community. in order for a more open board to really succeed, it needs the labor of these people. it needs this open community. it needs a decade of tiny incremental contributions. but it can’t have us, because we wasted our time on raspberry pi instead.

this misinformation harmed the other boards. that’s why we can’t use them. because instead of accumulating the strength of hundreds of people fighting these battles, our efforts were squandered fighting with a closed ecosystem. we could not fix the bugs we found and our workarounds were broken by the upstream vendor before we could even finish them.

the solution has to be community-wide, people need to become aware that raspberry pi is opposed to the values of open source software and squanders the work of open source developers. once that happens, other projects will stand a chance. that’s why i speak the way i do. because the misinformation that pi is open is the harm itself.

the availability of a well-supported closed board is not harmful. only misinforming that it is open is harmful.

Pi have, for almost its entire history, been moving away from closed source firmware to open source ARM side drivers. Mesa instead of firmware OpenGL, libcamera instead of ISP in firmware, DRM instead of the firmware HVS/HDMI/display, V4L2 instead of firmware based OpenMAX. There are limits to how fast it can be done, but it is being done. Pi5 firmware is now a very small bit of code indeed, all the real work is done on the ARMs in open source code.

i’ve looked into some of this and it’s been my impression that they’ve been moving away from bad proprietary interfaces to their closed firmware towards standardized interfaces to closed firmware. with the firmware still closed.

the irony is that the transition itself causes pain. already, the closed interface i had to bend over backwards to tolerate has been replaced by a standardized interface and the only software i use on my raspi4 is now tied to a 3-years-ago version of the OS and will break if i ever upgrade.

i waited until raspi4 to join the club, so their pace is very slow indeed. the fact that the underlying firmware is entirely closed is the ultimate culprit here. they cannot leverage a community that would have solved this problem nearly a decade ago because the community is forbidden from the resources needed to solve it. so instead we are left begging for their largesse and being asked to appreciate that their glacial pace is at least progress.

there’s many things to say about it — some quite positive — but it falls so severely short of the ideals or promise of an open source development community.

So instead of complaining the Pi isn’t perfect go find something similar that is, and when you find it actually tell us what we should be looking at instead!!!

Which I doubt is actually possible, as something more completely open than a Pi that is actually a computer, not just a micro, really isn’t common. Maybe you can find something that is more open in the one precise area where you keep bashing your head into the wall with a Pi, whatever that is, but I’m damn near certain you’ll find some other wall to bash your head against, probably way more than just one other wall too.

Plus, moving everything to this RP2040-like chip means that, as it is their own IP, they can open up much more of the hardware you actually need to deal with.

one of the reasons that’s not possible is the harms produced by raspberry pi. i would swear i already typed this.

You can moan at the Pi all you like, but saying it harms anything by existing smells most malodorous… The product you want doesn’t exist, at least as far as I can tell, or apparently as far as you can tell, since you care enough to hate the Pi for having something locked down somewhere you wanted it open, and so surely went looking. Probably that’s because it is nearly impossible to actually make it exist, and about the only folks trying to make something close happen are the Pi foundation, the Pine folks, MNT Reform and maybe you can count Framework (plus no doubt a few I’ve missed).

A community can and will form around anything remotely worthwhile: if somebody actually produces a good (RISC-V?) entirely open hardware board that is capable enough to be useful, actually for sale somewhere (and somewhat reliably available), and not priced so absurdly high that it makes no sense at all, then the open source crowd will flock to it. Just look at the swarms of folks helping each other de-Google and keep their old phones running, or turn crap routers into functional ones with OpenWrt firmware, with a way, way, way more locked-down, obfuscated crap baseline to start from than a Pi, yet it’s happening…

Foldi – what is harmful is the mythology that it’s open. it’s fine to have closed stuff. just call it closed.

There is no mythology there, though; it is very largely open and has been trending ever more that way as the Pi ecosystem matures. Nobody is claiming a Pi is fully open source hardware compliant, but for a real computer it is one of the only contenders you can call even remotely open, and lots of interesting projects have been possible because so much of it has been opened, so celebrating all the bits that are open is well worth it, and not at all misleading.

You do not know enough about this, clearly your “looking in to it” was insufficient. The Pi5 firmware has been reduced greatly, now has nothing to do with codecs, 2D, 3D, cameras etc. It only handles clocks and I think, the related power management. Please get your facts right.

do you have any idea how much work it was the first time to dig and dig and dig and get to the bottom of it and find out that bottom was an opaque wall with a closed firmware on the other side that has a bad interface and lots of bugs and no documentation?

at the time, i had heard from people like you, again and again, that raspi is open. they said the same thing you’re saying today. and i invested that effort to find out that was misinformation. i was astonished!

i’m sorry that, given the same assurances that were false before, i haven’t got enough faith in me to waste the time again.

and for what end! all my work before was useless before it was complete, because they just change things behind the closed wall without any contribution from the community. will that happen again? according to you, it seems like it won’t, but wouldn’t you have said the same thing 3 years ago? or is this a perennial thing? for every now, do we say that pi used to be closed but isn’t anymore? people were saying *that* 3 years ago too.

this thing of throwing away time peeling back layers of wrappers to debug a closed bit of software is not at all unique to raspi. it is normal to all closed environments. so much less harm if we call the spade a spade.

If you look into the basic intent of the new tool development at NSF & DARPA, you will find birds of a feather.

Taking into account what LLM systems are doing, it will soon be moot. LLMs will know everything. As far as my work in the Newtonian engineering world is concerned, it already is that way. If you have a 40T computer ($500) you can essentially do anything. It is like the late ’70s and early ’80s, when the TI-59 and HP-41C changed the engineering world: the birth of HACKERISM, but jumping from 2.5MHz to 100T.

Where can I get one of these 40 THz computers for $500?

I think he means TFLOPS, a RTX 2060 gets 51.6 TFLOPS on tensor operations and newer ones even more.

We’re in the same world, believe me.

What they do with OS support is provide a distro that’s regularly updated with up-to-date kernels, and works properly with a huge support knowledge base. Compared to Chinese boards with a single hacked-on and buggy kernel and no support, that’s pretty good.

What they don’t do is provide any low level info, it’s all through the blob. That’s not good.

The part number “RP1” is an unfortunate name for it.

Because, as a casual Pi user of many years, I assumed that “RP1” was short for “Raspberry Pi 1”, as in the original 2012 Pi board (A or B) before there was a Pi 2, then a Pi 3… etc. I had to read the article a few times to realize it’s talking about a particular custom chip, not the 2012 board. (And an IO chip at that.)

It is only a product if they sell it

Indeed. It’s just one of many examples of bad product naming and numbering. Why can’t marketing people count?! Xbox is another example. The second version was numbered “360”, the third one was numbered “One”, and the fourth version of the series is named “Series”. No surprise coming from MS, which changes its OS version numbering system every couple of versions.

I wouldn’t call SIP-ing an SoC and some RAM together “chip design”

Gimping it with a micro-HDMI port, especially after the 400 has been so derided for doing so, doesn’t bode well.

What is wrong with a micro-HDMI port? Every time I need to plug in a screen, it just works…. Also takes up less room on board edge. Seems like a win-win for the occasional times you use the HDMI interface. Should say ‘I’ use :) . But again it shows the screen just fine, so I don’t see a problem here.

As cheap as USB-C has gotten they could just skip hdmi completely. Many small portable monitors are usb-c now and you can buy an adapter to go from usb-c to hdmi (something you most likely do for the micro hdmi port too, but at least the usb-c adapter is something you’d likely use in other places anyway).

Thank you for the update! Never thought about that! My portable monitor looks like it has HDMI 1, HDMI 2, and a 5V-USB C port (didn’t know it could be used for display). BTW, I do like the small portable monitor as it fits well on the bench rather than pulling out a full size monitor for the RPI occasional work.

My cheap portable monitor (Arzopa) can be powered and driven over a single USB-C cable, which is quite neat. It would have been nice to see that on the Pi, but micro-HDMI is the standard there these days.

I don’t use it much either, but I don’t have *anything* with a micro-hdmi port, so basically the adapter dongle would have to follow the Pi around in case I needed it. As such I’m against it for similar reasons as I’m against the idea of a laptop without a usb-A port – if I always need the adapter, you didn’t actually save me any space or money, just moved the space outside your device.

The Pi sucks as a desktop with a single monitor; why do we even need two?!

Bring back full size HDMI please

I vote to replace one or both of the HDMIs with DisplayPort. DisplayPort converts to HDMI with a cheap adapter, but the reverse is a lot more expensive.

3d/stereo displays for virtual/augmented reality can be done on a monitor pair.

I thought the Pi project started because Eben worked for Broadcom, and they encouraged him to use their chips?

Has Eben stopped working for Broadcom, or is the Pi project fully disassociated from them?

haha thanks for this! trying not to be conspiratorial, i will still say: i’m astonished i didn’t know Upton started out as a senior Broadcom employee!

a lot of things make a lot more sense once i realize raspberry foundation exists to sell broadcom chips. i had assumed that they started out wanting to make a hobbyist board and broadcom had just offered them a good deal, and honestly that just never really made sense to me.

it also deepens my pessimism that RP1 will ever “liberate” them from broadcom — they don’t desire to be liberated! it all makes sense now.

There’s a story here for sure, even if it’s to confirm there’s no conspiracy :-)

I expect he is independent now. Or the Foundation is. And that depending on Broadcom is mainly a function of history.

I think the less tin-foil-hat version is that Eben worked for Broadcom and used his knowledge and a bit of inside leverage to spin up a little educational board to encourage kids to get into coding, and it got a bit too successful.

Getting good support for SoCs and parts at that level is _hard_ for small outfits, so sticking with Broadcom initially was probably their only real choice. Once you’ve sold a million or so, other companies may talk to you, but by that point it would be a massive shift and a ton of work to switch to a part that’s no better (or not usefully better). And if they’ve built up a good relationship with Broadcom and are getting good support & pricing, why would they change?

If they suddenly flipped and went to another manufacturer there would be as many people lining up to attack them for that too.

The only thing to add here is Eben Upton hasn’t worked for Brcm for some time.

“and if they’ve built up a good relationship with Broadcom and are getting good support & pricing, why would they change?”

i like easy questions. the answer is, because they aren’t getting good support. a product like raspberry pi really needs good documentation of the underlying chips to fulfill its promise to hackers. broadcom doesn’t seem to allow such documentation.

and then there’s power management.

Eben was still working in Broadcom by the time Pi3 was released. I worked in his team back then, on VC5 GPU. The main reason for using Broadcom SoCs for Pi is pretty much familiarity, as the same team that designed the SoC designed Raspberry Pi, and to this day they are mostly the same people.

Anyone else spot the memory select jumper? One track is connected via a resistor to GND. I wonder what the range is, and whether it might be possible to freak it out or ‘unlock’ more capability with different values (and obvs. different/extra memory chips).

Just saying “put … a GPU in it” [and achieve independence from Broadcom] seems a bit optimistic. I think it would be almost as hard for Raspberry Pi to move away from the Broadcom VideoCore series as to move away from Arm. The VideoCore IV, VI and now (presumably) VII must be amongst the best supported GPUs by open source software, and I’m under the impression that at least some of that work has been funded by Raspberry Pi. Which GPU family would you propose that they move to? Are any of the other vendors likely to open their interfaces up in the same way? Mind you, it seemed pretty out of character for Broadcom.

What I wonder is whether they’ll introduce a headless variant of the Raspberry Pi that has no GPU on the SoC and no display interfaces. It could retain the GPIO, networking, USB etc. (which are now all on RP1) and would be a lower-power alternative for the tiny home server / home automation use case, where graphical output is rarely useful. I’d imagine that would be good for a lot of projects, as you could develop and debug on a system with a display, but deploy on something without.

Said: “as you could develop and debug on a system with a display, but deploy on something without.”

Actually I do all my debugging either ssh’d in to the RPI or using the USB serial interface (like on the Pico). No need for a display at all for ‘most all’ (of my) use cases. I normally use my development computer for editing files and cross compiling, and then sftp to the RPI, or set up a shared folder via NFS. Lots of ways to ‘develop’ without a silly local GUI with keyboard and mouse taking up space on the bench. That said, I don’t mind an HDMI interface being available for those projects that have something to display. It also seems that with the new Pi OS it is almost a requirement, so you can set up an ‘initial’ user … grrrr…. No default ‘PI’ user.

Yes, while it makes tons of sense for the Pi to be a “full computer” for educational purposes, I’ve personally had very few situations where being able to use it as a desktop was an advantage. Even if I get locked out, it’s easier to reimage than log in. I’d imagine a lot of people are the same.

Of course there are cool projects like RGB2HDMI, and all of the cyberdeck and console emulation projects that make a virtue of the display capability. But no one is suggesting that all future Pis should be headless.

Broadcom is keeping its GPU team (all that was left of the larger Media division) primarily for the needs of Raspberry Pi and for its STBs (which they even forgot about themselves when they were closing Media down). They would have sold the IP long ago otherwise.

So, yes, there is a special relationship.

That’s interesting. I wonder who’d be likely to buy it if they sold it. If Raspberry Pi Trading are the biggest customer (are they?), they’d have an interest in seeing it go to a good home. But I don’t suppose they would be interested in buying it?! It would be a big step from integrating existing IP, and building things like PIO (cool as it is). The ongoing costs of something like that must be enormous!

Amazon switched to other SoCs for their Fire TV Stick, so yes, there are only two customers of the VideoCore GPU: Broadcom itself, for its STB products, and Raspberry Pi.

Given that a lot of people at the Raspberry Pi Foundation are the same people who designed VC in the first place, and built those SoCs, it would probably make sense for them to acquire the IP if Broadcom decides to let it go. That would probably be the best possible outcome.

The RP1 is not for sale.

Like I mentioned in the forums years ago, switch from A50-cores to A70 cores, and you’ll get the performance bump you’ve been wanting…