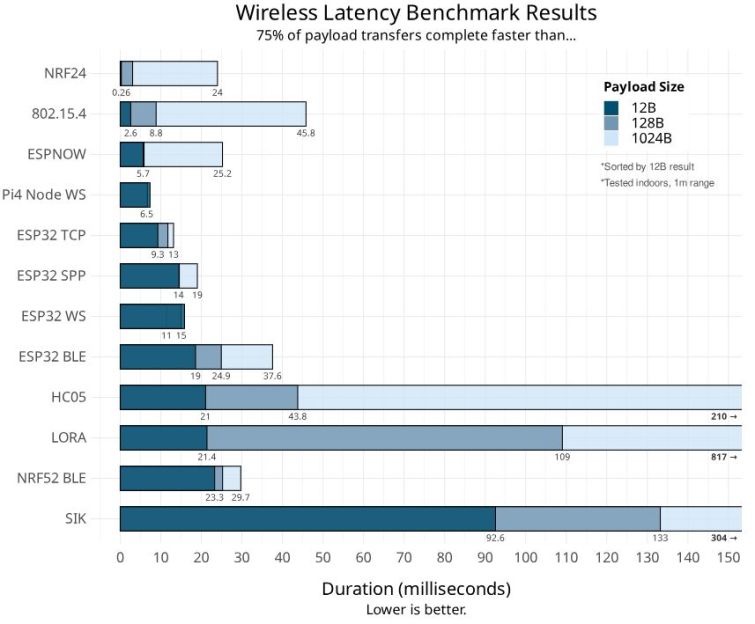

Although bandwidth, power usage, and range in (kilo)meters are important considerations with wireless communication for microcontrollers, latency is another factor that deserves attention. This is especially true for projects like controllers, where round-trip latency and instant response to an input are essential. But where do you find the latency number in datasheets? This is where [Michael Orenstein] and [Scott] over at Electric UI found a lack of data, especially when taking software stacks into account. In other words, it was time to do some serious benchmarking.

The question to be answered here was specifically how fast a one-way wireless user interaction can be across three levels of payload sizes (12, 128, and 1024 bytes). The effective latency is measured from the moment the input is provided on the transmitter until the receiver has processed it and triggered the relevant output pin. The internal latency was also measured by having a range of framework implementations respond to an external interrupt and drive a GPIO pin high. Even this test on an STM32F429 MCU already showed that, for example, the STM32 low-level (LL) framework is much faster than the stm32duino one.
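The effective-latency definition above boils down to subtracting two edge timestamps per trial and summarizing the results. A minimal sketch of that post-processing step, assuming the edges were captured in microseconds by something like a logic analyzer; the function and array names are hypothetical and not taken from the Electric UI scripts:

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* qsort comparator for unsigned 32-bit latencies. */
static int cmp_u32(const void *a, const void *b)
{
    uint32_t x = *(const uint32_t *)a;
    uint32_t y = *(const uint32_t *)b;
    return (x > y) - (x < y);
}

/* Median of (rx_edge_us[i] - tx_edge_us[i]) over n trials.
 * tx_edge_us: time the input edge was seen on the transmitter (assumed unit: us)
 * rx_edge_us: time the output pin toggled on the receiver
 * scratch:    caller-provided work buffer with room for n values
 * Returns 0 when n is 0; for even n the upper median is returned. */
static uint32_t median_latency_us(const uint32_t *tx_edge_us,
                                  const uint32_t *rx_edge_us,
                                  uint32_t *scratch, size_t n)
{
    if (n == 0) {
        return 0;
    }
    for (size_t i = 0; i < n; i++) {
        scratch[i] = rx_edge_us[i] - tx_edge_us[i];
    }
    qsort(scratch, n, sizeof scratch[0], cmp_u32);
    return scratch[n / 2];
}
```

A median is used here rather than a mean so that a single retransmission or scheduler hiccup does not dominate the summary; whether the original analysis made the same choice is not stated in this write-up.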

After a bit more checking for overhead within the MCU itself with various approaches, the actual tests were performed. The tests involved modules for SiK, LoRa, nRF24, Bluetooth SPP (ESP32, HC-5), Bluetooth LE (Bluedroid & NimBLE on ESP32, as well as the nRF52), ESP-NOW (ESP32), 802.15.4 (used by Zigbee, Matter, Thread, etc.) and of course WiFi. The software stack had a big impact on some of these, BLE for example, and WiFi on the ESP32 differed a lot depending on whether plain TCP was used or WebSockets were layered on top (about 10x slower).

What’s clear is that getting low latency with wireless communication takes more than picking the right technology or module: you also need to understand and work with the entire software stack, tweaking and optimizing it for the best performance. For those interested in the raw data, firmware, and post-processing scripts, these can be found on GitHub.

We’ve looked at low-latency hacks before. Testing which protocol is best is a staple for RC enthusiasts.

Very useful data! Thx!!

Not 16, 128 and 1024? That would have made more sense.

Why? When something is non-round like that, you should really assume that there’s some subtlety that you don’t know the details of. In this case, there’s usually a bit of overhead in a packet, so by picking 12 bytes they’re actually ensuring the radio doesn’t end up splitting the packet into two 16-byte chunks and massively skewing the results.

The overhead has a negligible impact on the larger packet sizes, so they don’t bother to compensate for it.
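The chunking argument above can be made concrete with a little arithmetic. This is only a sketch: the 4 bytes of per-packet overhead and the 16-byte chunk size used in the usage note are illustrative assumptions, not figures from the benchmark, and real radios differ.

```c
#include <assert.h>
#include <stddef.h>

/* Number of fixed-size radio chunks needed to carry one payload.
 * overhead and chunk_size are hypothetical parameters for illustration. */
static size_t chunks_needed(size_t payload, size_t overhead, size_t chunk_size)
{
    assert(chunk_size > 0);
    size_t total = payload + overhead;
    return (total + chunk_size - 1) / chunk_size; /* ceiling division */
}
```

With those assumed numbers, `chunks_needed(12, 4, 16)` is 1 while `chunks_needed(16, 4, 16)` is 2, so a 16-byte payload would double the airtime. At 1024 bytes the same overhead only moves the count from 64 to 65 chunks, which is why it can be ignored for the larger sizes.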

There are much faster ways things could have been done in the code. Take gpio_set_level( ); there are much faster ways to set I/O pins than this. recv_cb->data = malloc( len ); takes time; allocating the buffer beforehand would save time too. There are other ways as well, like placing functions in IRAM.

So if you want to speed things up there are more ways to do this. But this gives you a general idea, I guess. The reason they don’t provide this info is because it’s so heavily dependent on the user code.
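The preallocation idea mentioned above can be sketched in plain C. Everything here is an assumption for illustration: `rx_record_t`, `rx_store`, and the 1024-byte limit are hypothetical names and values, not the benchmark firmware's actual receive path.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RX_BUF_SIZE 1024 /* assumed maximum payload, matching the largest test size */

/* Hypothetical receive record; the real firmware's struct may look different. */
typedef struct {
    uint8_t data[RX_BUF_SIZE]; /* storage reserved up front instead of malloc(len) */
    size_t  len;
} rx_record_t;

static rx_record_t rx_record; /* allocated once, at link time */

/* Copy an incoming packet into the preallocated buffer.
 * Returns 0 on success, -1 if the pointer is NULL or the packet does not fit. */
static int rx_store(const uint8_t *src, size_t len)
{
    if (src == NULL || len > RX_BUF_SIZE) {
        return -1;
    }
    memcpy(rx_record.data, src, len);
    rx_record.len = len;
    return 0;
}
```

The trade-off is that a single static buffer is overwritten by the next packet, so the consumer has to finish with it first (or a small ring of such records is needed). Whether this saves measurable time depends on where the allocation sits relative to the timed path.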

I think the malloc will happen before actually waiting for receive, so it shouldn’t affect the measurement. The difference between gpio_set_level() and direct register write is less than a microsecond, in a measurement that is several milliseconds for most protocols.

The transfer latency between external RF chip and the microcontroller is a significant factor, but even that was measured as only a couple of microseconds.

So the more software there is, the longer it takes. Shocker.

I’m intrigued by the ESP-NOW results, as I can transfer 127 bytes under one ms. https://hackaday.io/project/161896-linux-espnow

BLE is not for low latency, use FSK/OOK/light.

I’m not sure how accurate this is. From this list I have only tested ESP-NOW, but I got < 1ms round-trip time for about 95% of the packets, with most deviations being about 2-3ms, and a very spurious delay of 6ms at most.

nRF24 is the basic raw protocol for nRF24XXXX µCs. You can’t compare it with BLE or WiFi or Zigbee since they are all written on top of this one. It’s no surprise this is the lowest latency version.

That’s literally the point of the exercise – you can indeed compare them.

I think the spirit of “you can’t compare them” is something like the results that came out: WiFi is so dominant for large amounts of data, and the raw protocols for small bits, that the comparison is almost “unfair”. But of course you can compare them.

Man, that startup time on BLE is a killer, though. ~20 ms just to fire up. By then, nRF24 and ESPnow are mostly through the first kilobyte, and you have a whole cat picture over web sockets. And in keeping with the spirit of the tests, ~20 ms just starts to be noticeably laggy for people doing fast-response things like gaming or flying race quads.

And for small bits of data, temperature sensor or simple joystick controls or whatever, it really looks like nRF24 shines. I mean, as sweethack suggests, it’s got no protocol layer overhead. But isn’t that the point?! Quantifying the time cost of the protocol layer?

If you’re just sending a few bytes, nRF24 gets it out 10x faster than Zigbee and 20x faster than ESPnow. 40x faster than WiFi and 100x faster (!) than BLE.

Again, the reason is obvious, but it’s great to see them all quantitatively compared.

Well, unsurprisingly, old nRF24 has beaten everybody else. It’s a great balance of price and performance in between strong aged AM and modern IoT modules.