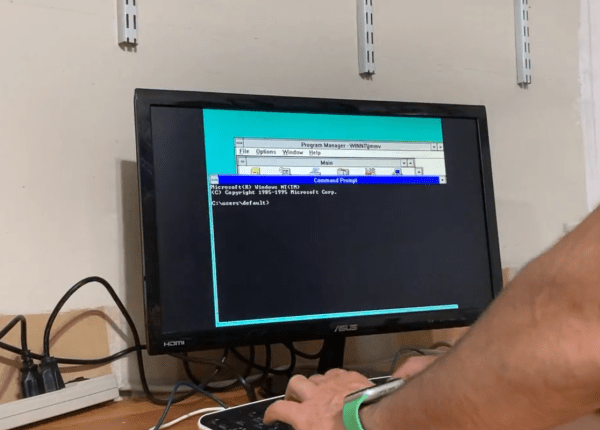

If your guitar needs more distortion, lower audio fidelity, or another musical effect, you can always shell out some money to get a dedicated piece of hardware. For a less conventional route, though, you could follow [Brek Martin]’s example and reprogram a handheld game console as a digital effects processor.

[Brek] started with a Sony PSP 3000 handheld. He already had programming experience on it, having previously written a GPS maps program and an audio recorder for the console. The PSP has a microphone input as part of the connector for a headset and remote, though [Brek] found that a Sony remote's PCB had to be plugged in before the PSP would recognize the microphone. To make things a bit easier to work with, he made a circuit board that connected the remote's hardware to a microphone jack and an output plug.

[Brek] implemented three effects: a flanger, a bitcrusher, and crossover distortion. Crossover distortion distorts the signal as it crosses zero, the bitcrusher reduces the sample rate to make the signal choppier (bitcrushers often lower the bit depth as well), and the flanger mixes the current signal with a variably delayed copy of itself. [Brek] would have liked to implement more effects, but the program's lag would have made them impractical. He notes that the lag could be reduced if there were a way to shrink the sample chunk size below 1024 samples, but if there is a way to do so, he has yet to find it.
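For readers curious how such effects work, here is a minimal C sketch of all three, each operating in place on a block of signed 16-bit samples. This is not [Brek]'s code. The dead-zone model of crossover distortion, the sample-and-hold plus bit-depth bitcrusher, the triangle-LFO flanger, and every name and parameter (`BLOCK_SAMPLES`, `FLANGE_MAX_DELAY`, the thresholds, the 44.1 kHz rate passed to the latency helper) are assumptions chosen for illustration. The latency helper shows why the chunk size matters: assuming a 44.1 kHz sample rate, a 1024-sample chunk holds about 23 ms of audio that must be captured before any processing can start.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical block size, matching the 1024-sample chunks described above. */
#define BLOCK_SAMPLES 1024
/* Hypothetical maximum flanger delay, in samples. */
#define FLANGE_MAX_DELAY 256

/* Time (in microseconds) needed to fill one block at the given sample rate. */
unsigned long block_latency_us(size_t samples, unsigned long rate_hz)
{
    return (unsigned long)((samples * 1000000UL) / rate_hz);
}

/* Crossover distortion modeled as a dead zone around zero, like a class-B
   output stage where neither transistor conducts near the zero crossing.
   Assumes threshold >= 0. */
void crossover(int16_t *buf, size_t n, int16_t threshold)
{
    for (size_t i = 0; i < n; i++) {
        int32_t x = buf[i];
        if (x > threshold)       x -= threshold;
        else if (x < -threshold) x += threshold;
        else                     x = 0;
        buf[i] = (int16_t)x;
    }
}

/* Bitcrusher: hold each kept sample for `hold` samples (sample-rate
   reduction) and floor it to a multiple of 2^drop_bits (bit-depth
   reduction). drop_bits must be < 16. The hold phase restarts each block. */
void bitcrush(int16_t *buf, size_t n, unsigned hold, unsigned drop_bits)
{
    const int32_t step = (int32_t)1 << drop_bits;
    int16_t held = 0;
    if (hold == 0) hold = 1;
    for (size_t i = 0; i < n; i++) {
        if (i % hold == 0) {
            int32_t x = buf[i];
            /* Floor to a multiple of step, correct for negative x too. */
            held = (int16_t)(x - ((x % step) + step) % step);
        }
        buf[i] = held;
    }
}

/* Flanger state persists across blocks so the sweep stays continuous. */
typedef struct {
    int16_t  line[FLANGE_MAX_DELAY]; /* circular history of dry input */
    size_t   pos;                    /* next write index into line */
    uint32_t phase;                  /* LFO position, 0 .. period-1 */
    uint32_t period;                 /* LFO period in samples */
} flanger_t;

void flanger_init(flanger_t *f, uint32_t period)
{
    memset(f, 0, sizeof *f);
    f->period = period ? period : 1;
}

/* Mixes each sample 50/50 with a copy delayed by 1 .. FLANGE_MAX_DELAY-1
   samples, the delay swept by a triangle LFO (no feedback path). */
void flanger(flanger_t *f, int16_t *buf, size_t n)
{
    uint32_t half = f->period / 2 ? f->period / 2 : 1;
    for (size_t i = 0; i < n; i++) {
        uint32_t tri = f->phase < half ? f->phase : f->period - f->phase;
        if (tri > half) tri = half;
        size_t delay = 1 + (size_t)((uint64_t)tri * (FLANGE_MAX_DELAY - 2) / half);
        size_t rd = (f->pos + FLANGE_MAX_DELAY - delay) % FLANGE_MAX_DELAY;
        int32_t x = buf[i];
        int32_t delayed = f->line[rd];
        f->line[f->pos] = (int16_t)x;
        f->pos = (f->pos + 1) % FLANGE_MAX_DELAY;
        f->phase = (f->phase + 1) % f->period;
        buf[i] = (int16_t)((x + delayed) / 2);
    }
}
```

In a real program each captured chunk would pass through whichever effects are enabled before being sent to the output. The flanger keeps its delay line and LFO phase in a struct so the sweep continues smoothly from one chunk to the next. The bitcrusher's hold phase, by contrast, simply restarts with each chunk, which is a simplification.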

If you’d like a more dedicated digital audio processor, you can also build one, perhaps using some techniques to reduce lag.
