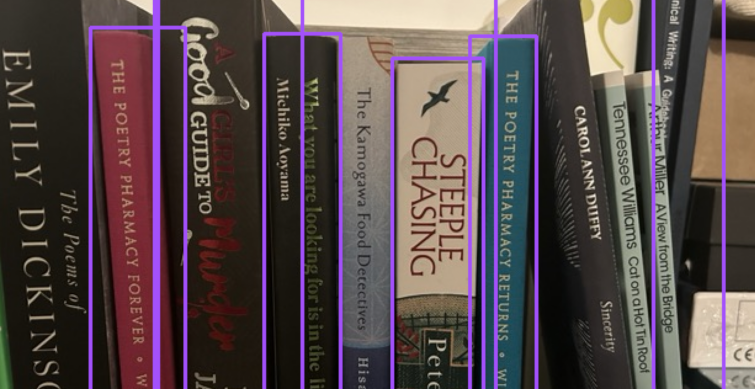

We’ll confess that we have a fondness for real books and plenty of them. So does [James], and he decided he needed a way to take a picture of his bookshelves and make each book clickable to find more information. This is one of those things that sounds fairly simple until you decide to do it. You can try an example of the results and then go back and read about the journey it took to get there.

There are several subtasks involved. First, you want to identify each book’s envelope. It wouldn’t do to click on the Joy of Cooking and get information about Remembrance of Things Past.

The next challenge is reading the title of the book. This can be tricky. Fonts differ. The book could be upside down. Some titles go cross the spine, but most go vertically. The remainder of the task is fairly easy. If you know the region and the title, you can easily find a link (for Google Books, in this case) and build an SVG overlay that maps the areas for each book to the right link.

The optical character recognition is done with GPT-4. The prompt used is straightforward:

Read the text on the book spine. Only say the book cover title and author if you can find them. Say the book that is most promiment. Return the format [title] [author], with no punctuation.

With that information, a Google API will look up the book for you, and the rest is straightforward. You can grab the code on GitHub. We wonder how this method of OCR for difficult text would compare to more conventional methods. After all, OCR isn’t a hard problem. The complex problem is making it work well.

“After all OCR isn’t a hard problem”

Oh sweet summer child…

OCR isn’t hard *now* because we have incredible amounts of computing power and hi-res image capture but by god it was difficult when PCs still had RAM measured in MB and on 72 or even 30 pin modules.

What was *incredibly* impressive was the OCR and handwriting recognition technology employed by an organisation I occasionally visited back in the early 2000s

(I am of course well aware that Al knows already and is as old, if not older than I am)

The man dated carbon. As for the article that’s why I keep a bar code scanner around.

ABBY Finereader, expensive but capable.

Tesseract, free but workable.

im really impressed by how you can take microwave scans of ancient scrolls while still rolled up (because unrolling them would destroy them), and still manage to scan in every character in ancient disused dialects and dead languages and turn it into actual text, and then translate it into english.

in all of my tech life i never had to manually copy a single page of text. though fixing scan errors is a different matter. im old enough to remember that.

I’ve spent many hours over my career and personal life correcting OCR documents, it’s often a blessing but, like the output from the various “AI”, you really need to proof read it and understand what it’s saying.

Depends on the circumstances.

If you have context, it is much easier than random text.

That’s why snailmail in the late 60’s could be processed with OCR pretty well and extremely fast, even with limited computational resources.

James P. Hogan predicted the scanning of books you couldn’t open in his 1977 Sci-Fi novel Inherit the Stars.

https://www.baen.com/inherit-the-stars.html

Scanning of books you couldn’t open? How is it that you can’t open a book?

There was a ‘hack chat’ about some books that couldn’t be opened just a few days ago.

https://hackaday.com/2024/01/22/x-ray-investigations-hack-chat/

TL;DR: some scrolls were entombed by the 79AD eruption of Vesuvius, they were recovered 250 years ago and have been waiting for the technology that might read them.

In the story it is for books that are old and would be damaged of opened. The scifi machine suggests an MRI-like device with scanner and ocr, similar to how archeologists are doing it today, 37 years later.

Quote: The image on the Trimagniscope tube was an enlarged view of one of the pocket-size books found on the body, which Dancheckker had shown them on their first day in Houston three weeks before. The book itself was enclosed in the scanner module of the machine, on the far side of the room. The scope was adjusted to generate a view that followed the change in density along the boundary layer of the selected page, producing an image of the lower section of the book only; it was as if the upper part had been removed, like a cut deck of cards. Because of the age and condition of the book, however, the characters on the page thus exposed tended to be of poor quality and in some cases were incomplete. The next step would be to scan the image optically with TV cameras and feed the encoded pictures in the Navcomms computer complex. The raw input would then be processed by pattern recognition techniques and statistical techniques to produce a second, enhanced copy with many of the missing character fragments restored.

Brewing texts from the early 1800’s would crumble when opened (acid paper). In the 90’s I was scanning OCR/PDF to do research – only way to study the content.

next match this up with a projector and have it highlight the physical book when you click on it.

after that, have it tell you where to move it on the shelf to get the books sorted by genre and alphabetized by author name, using the projector to highlight where you need to move the book to.

Neat. I will have to look deeper into this.

I recently had a similar idea. But more to find specific books at second hand markets which sometimes are wildely unsorted. So with a few modifications, from what i have seen, it should work. have a list of books you want to buy. take a picture of an unsorted bookshelf. highlight positions of identified books :D

I wil I can take a picture of the DVD movie shelf at Goodwill store and find what I need.

Let’s say I make a list of DVD’s what I am looking for and snapping the picture of the shelf will notify me about the DVD on the shelf to reduce time browsing all titles.

Or maybe a particular screw in my junk drawer.

There should not be a need to pre-make a list of titles you’re looking for.

AI should be able to sort through all the available DVDs on the shelf, categorize them and then match them to your genre/actor/director known likes and probable likes (and of course then highlight where they are on the shelf).

Take a photo of a bunch of jigsaw puzzle pieces. Let the AI number them, and show which pieces fit together.

You might want to limit the number of pieces per photo, to make it easier for the AI and yourself.

There used to be an app for this. But they got bought and shuttered by Rakuten.

What was the name of this App?

This is something I’ve wanted for at 15 years, but it was a lot harder to solve that problem back then. Hurrah!!!!