Working on embedded systems used to be easier. You had a microcontroller and maybe a few pieces of analog or digital I/O, and perhaps communications might be a serial port. Today, you have systems with networks and cameras and a host of I/O. Cameras are strange because sometimes you just want an image and sometimes you want to understand the image in some way. If understanding the image involves reading text in the picture, you will want to check out EasyOCR.

The Python library leverages other open source libraries and supports 42 different languages. As the name implies, using it is pretty easy. Here’s the setup:

import easyocr

reader = easyocr.Reader(['th','en'])

reader.readtext('test.jpg')

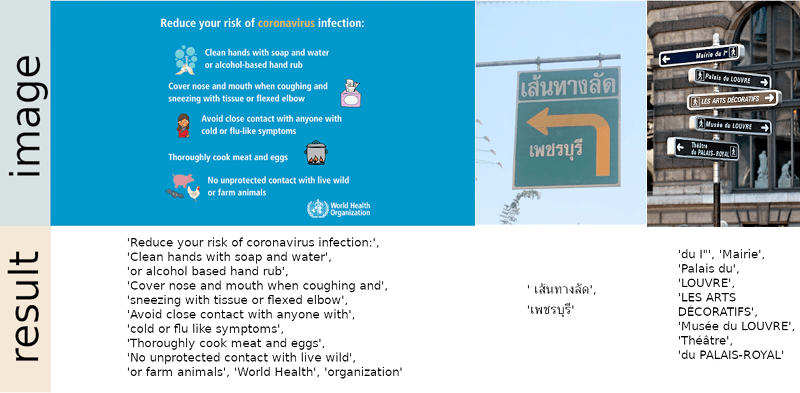

The results include four points that define the bounding box of each piece of text, the text, and a confidence level. The code takes advantage of the GPU, but you can run it in a CPU-only mode if you prefer. There are a few other options, including setting the algorithm’s scanning behavior, how it handles multiple processors, and how it converts the image to grayscale. The results look impressive.

According to the project’s repository, they incorporated several existing neural network algorithms and conventional algorithms, so if you want to dig into details, there are links provided to both code and white papers. If you need some inspiration for what to do with OCR, maybe this past project will give you some ideas. Or you could cheat at games.

bad ass. (seldom earned slow-clap)

Is it offline or is it spewing your text all over the interweb for lookup?

Locally using CPU/GPU, and the code is open for security review.

Looks awesome. The only downside is that it’s a huge amount of python code which adds an obscene amount of overhead. This is fine for computer systems but not so much for embedded systems.

It looks like the performance critical code uses libraries like Numpy and OpenCV that come with their own native code.

Yeah, this is massively complicated compared to Tesseract OCR.

Tesseract has very reasonable accuracy (though it doesn’t do fancy tricks like reading angled street signs) and only requires a moderate amount of CPU.

It also ships only a binary (or source code) and some language files, and is already avaiable on package repos everywhere.

Runs easily on a raspi, self-contained.

I had very limited success with Tesseract. Even when the source material was very readable for a human, the output of Tesseract tended to be pretty garbled and useless.

ABBYY Finereader it’s not.

Try running ABBYY Finereader on a raspi. Or even implemented in javascript in a web browser.

I’ve had pretty fantastic results with typeset english and japanese, though it seems to choke on the japanese font used by twitter. You’re welcome to do your own training to produce custom Tesseract language files of your own.

I was excited to see it and want to try it. But it is not as portable as I thought. Even after successful pip install, I get this during import:

ImportError: dlopen(/Users/tin/testing/ocr/env/lib/python3.7/site-packages/cv2/cv2.cpython-37m-darwin.so, 2): Symbol not found: _kCVImageBufferTransferFunction_ITU_R_2100_HLG

Referenced from: /Users/tin/testing/ocr/env/lib/python3.7/site-packages/cv2/.dylibs/libavcodec.58.91.100.dylib (which was built for Mac OS X 10.13)

What does this project have to do with embedded systems? The article begins in a completely nonsensical way.

I totally agree, how many embedded systems are able to download several 200 MiB pre-trained model weight files and have the RAM to load and use them.

I agree. A bloated Python lib has nothing in common with embedded systems. But when one has to fill in a number of words to meet the criteria of an “article” here, any approximation does the job.

A Raspberry PI or a NVIDIA Jetson is considered an embedded system these days. Some people back in the 80s would have assigned that classification to an 11/750 as long as it was running VAXELN. Many of them were at AT&T / Western Electric. :-) For that matter, DEC’s top-end laser printers had microVAXes in them running… VAXELN. I think I remember the Xerox Documenter ran SunOS, maybe? I think I remember OpenLook for the GUI. Embedded is a mindset, not a form factor.

I can easily imagine this software reading street signs as one more input to a Kalman filter algorithm on a self-driving whatever.

A VAX MIPS is pretty pitiful compared to any of the many ARM based embedded systems that can run Linux. Typical around 3000 VAX MIPS per core and up to 8 cores (Samsung S5P6818 for example). You can get a VAX MIPS from an Apple IIe with an 8MHz accelerator.

I’ll guarantee you one thing though: VAX MIPS ARE LOUDER. :-) :-)

(I’m going to put that on a T-Shirt. Also, sorry for shouting, but it’s so noisy here in the machine room.)

I read the comment and I realized that embeded software engineers are narrowed eye and shallow mind person. If you are not ok with other peoples work just share better one. Just wining others work is quite pathetic. If you think better than others, stop complaining and show better one which you originally make yourself.

No way this is appropriate for an embedded system at all, from some preliminary testing it can take upwards of 20 to 30 seconds running on CPU only since you won’t be able to take advantage of GPU on raspberry Pi’s.

I have an application to ocr drawings with the AutoCAD shape font and could not get easy ice anywhere near tesseract, though they both struggled with the stupid square ‘O’.

I can see for some things easyocr could be better, but in this case, not.

The performance is much better than Tesseract, but it is strange that

def ocr_on_frame(reader, frame_path, frame_id):

frame = cv2.imread(frame_path)

results = reader.readtext(frame, detail=1)

return frame_id, frame.shape, results

def safe_ocr_on_frame(reader, frame_path, frame_id):

try:

return ocr_on_frame(reader, frame_path, frame_id)

except RuntimeError as e:

print(“Caught a RuntimeError (possibly out of memory):”, e)

return None

def parallel_ocr(reader, frame_paths, max_workers=4):

frame_results = {} # Dictionary to store results with frame ids

with ThreadPoolExecutor(max_workers=max_workers) as executor:

future_to_frame = {executor.submit(safe_ocr_on_frame, reader, frame, frame_id): (frame, frame_id) for frame_id, frame in enumerate(frame_paths)}

for future in as_completed(future_to_frame):

frame_path, frame_id = future_to_frame[future]

try:

result = future.result()

if result:

frame_id, _, results = result

frame_results[frame_id] = results # Store bbox, text, and probability

except Exception as exc:

print(f”Frame {frame_path} generated an exception: {exc}”)

print(traceback.format_exc())

return frame_results

parallel_ocr, is not really working, it will sometimes just wait infinitely, like as if it was choked for data. I only had luck with max_workers = 1.

Anybody experience with running it in threadpool?