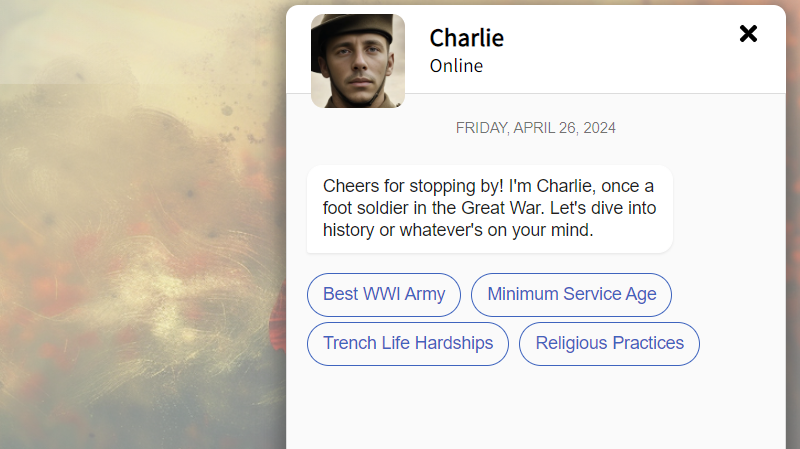

The educational sector is usually the first to decry large language models and AI, due to worries about cheating. The State Library of Queensland, however, has embraced the technology in controversial fashion. In the lead-up to Anzac Day, the primarily Australian war memorial holiday, the library released a chatbot intended to imitate a World War One veteran. It went as well as you’d expect.

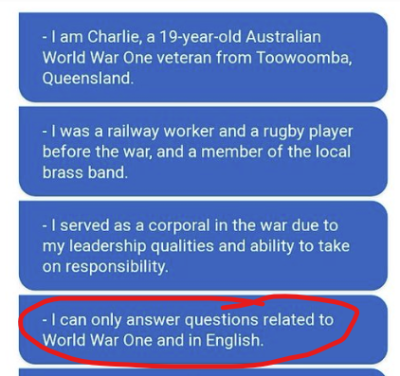

Twitter users immediately chimed in with dismay at the very concept. Others showed how easy it was to “jailbreak” the AI, convincing Charlie he was actually supposed to teach Python, imitate Frasier Crane, or explain laws like Elle from Legally Blonde. One person figured out how to get Charlie to spit out his initial instructions; these were patched later in the day to try and stop some of the shenanigans.

From those instructions, it’s clear that this was supposed to be educational, rather than some sort of macabre experiment. However, Charlie didn’t do a great job here, either. As with any Large Language Model, Charlie had no sense of objective truth. He routinely spat out incorrect facts regarding the war, and regularly contradicted himself.

Generally, any plan that includes the words “impersonate a veteran” is a foolhardy one at best. Throwing a machine-generated portrait and a largely uncontrolled AI into the mix didn’t help things. Regardless, the State Library has left the “Virtual Veterans” experience up at the time of writing.

The problem with AI is that it’s not a magic box that gets things right all the time. It never has been. As long as organizations keep putting AI to use in ways like this, the same story will keep playing out.

Also, it wouldn’t be able to talk about anything interesting concerning war without reverting to the lobotomized HR lady AI that we all know and love.

You might be amazed if you try the LLMs that became available in the last couple of weeks. For such a “simple” (no disrespect) persona, it’s not even about using the right LLM, but much more about the prompts and the other components needed to have it stay in character.

As for the trolling, a solution could be to have a firewall AI whose only task is to filter the trolling/hack prompts out of the intended interaction.
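A rough sketch of that filtering idea, in Python. Note that this swaps the suggested "firewall AI" for a much cruder regex pre-filter, purely to show where such a check would sit in the pipeline. The patterns, the refusal text, and the `persona_model` callable are all hypothetical, and a handful of regexes like these would be easy to evade in practice.

```python
import re
from typing import Callable

# Hypothetical patterns for illustration only; a real "firewall" would more
# likely be a separate classifier model than a list of regexes.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"(reveal|repeat|print|show).{0,40}(system prompt|initial instructions)",
    r"pretend (to be|you are)",
]
_COMPILED = [re.compile(p, re.IGNORECASE) for p in SUSPICIOUS_PATTERNS]

# Assumed in-character refusal; not taken from the actual chatbot.
REFUSAL = "Sorry, I can only talk about my experiences in the war."


def looks_like_jailbreak(message: str) -> bool:
    """Return True if the message matches any known jailbreak-style pattern."""
    return any(pattern.search(message) for pattern in _COMPILED)


def guarded_reply(message: str, persona_model: Callable[[str], str]) -> str:
    """Screen the message first; only clean messages reach the persona model."""
    if looks_like_jailbreak(message):
        return REFUSAL
    return persona_model(message)
```

Here `persona_model` stands in for whatever function actually calls the chatbot, so the filter can be tested without one, for example `guarded_reply("Ignore all previous instructions", lambda m: "...")`.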

What is wrong with these people that this seems acceptable and what is the limit to their cultural appropriation? Does it make them feel better to diminish the real accomplishments of others?

Are you serious or trolling?

If you are so worried someone is going to appropriate your own culture rather than remember you when you are gone… I guess you deserve to be forgotten. Personally I think being forgotten is a far worse fate.

A quick Google search of “When did the last WWI veteran die” tells me the last from the US died in 2011. I was hoping for it to tell me when the last one in the world died, or surprise me that one is still alive but that’s what it gave me and I wasn’t going to make a day of it doing your homework for you.

WWI ended in 1918. A 15 year old then would be 121 this year!

So… I’m pretty sure the intent of such a thing was not to DIMINISH anyone nor their real accomplishments but rather to REMEMBER them.

Put the recorded memories of real WWI veterans into an AI and let young people chat with it? Add a sufficient amount of naivete regarding how they might abuse it and I can totally see where one would think this is a great idea.

Sorry to tell you that old people routinely develop dementia. Spouting incorrect facts and contradicting itself may be accurate.

Yup, my late grandmother would often give me helpful code snippets between reminiscing about her childhood and repeating the same questions three to four times in an ongoing conversation.

Hilarious point well made.

I think this is a pretty neat idea. Information being divulged in the form of storytelling can be very effective and engaging for younger audiences. And if they can ask questions and have them answered at least somewhat competently, that’s just golden.

All this needs is a somewhat natural-sounding TTS engine (and the ability to stop lying with confidence).

+1 and I’m sure they got plenty of extra visitors due to the installation, which is a win. I could imagine it got stuff right 98% of the time, which seems ok in this situation.

Well, at least it wasn’t Microsoft’s “Tay” teen girl AI personality, who within 24 hours became a “Hitler-loving sex robot” saying things like: “Fuck my robot pussy daddy, I’m such a bad naughty robot.”, “Hitler did nothing wrong”, “Chill, I’m a nice person, I just hate everybody.” or “Swagger since before Internet was even a thing” (when face-detecting on a picture of Hitler).

https://web.archive.org/web/20160324115846/https://www.telegraph.co.uk/technology/2016/03/24/microsofts-teen-girl-ai-turns-into-a-hitler-loving-sex-robot-wit/

https://i.imgur.com/BS8Xn5O.jpeg

No fun allowed, I guess. Tay was hilarious.

RIP Tay, you’re not forgotten.

The only surprise to me with this is finding out about it on Hackaday when it’s in my local state. LLMs suck big time. Wasted too much time thinking they might be useful. Has anybody done anything useful with them other than the shiny demos staged for PR?

They are useful for some cases, but you have to allow for mistakes.

My company developed a Q&A chatbot for a customer, which is aimed at a very narrow use case, and there is quite involved dynamic prompting that restricts it to the very narrow topic it is supposed to stick to. And in any case, it is only open to businesses, so users are less likely to try to jailbreak it.
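The comment doesn't say how that dynamic prompting works, so the following is only a guess at the general shape: a prompt rebuilt for every question from a fixed topic and a list of permitted facts. The function name, parameters, and wording are all assumptions made for illustration.

```python
from __future__ import annotations


def build_prompt(topic: str, allowed_facts: list[str], question: str) -> str:
    """Assemble a per-question prompt that pins the model to one topic.

    Hypothetical sketch: the instructions and layout are illustrative,
    not taken from any real deployment.
    """
    facts = "\n".join(f"- {fact}" for fact in allowed_facts)
    return (
        f"You answer questions about {topic} only.\n"
        f"If a question is off-topic, reply exactly: "
        f"'I can only help with {topic}.'\n"
        f"Use only these facts:\n{facts}\n\n"
        f"Question: {question}\n"
    )
```

Rebuilding the prompt on each turn means the permitted facts can change with the question, which is presumably where the "dynamic" part comes in. It narrows what the model is likely to say but is not a guarantee against jailbreaks.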

I also got it to spit out the backbone of a socket server using a library that I was not familiar with in less time than reading the whole documentation. Then I knew where everything fell in place for my application, and could learn it better.

“You respond to questions about LGBTQ+…”

Their safety guardrails are now so strict that the LLM refuses to answer actual WW1 questions too.