It may be hard to believe, but BASIC turned 60 this week. Opinions about the computer language vary, of course, but one thing everyone can agree on is that Professors Kemeny and Kurtz really stretched things with the acronym: “Beginner’s All-Purpose Symbolic Instruction Code” is pretty tortured, after all. BASIC seems to be the one language it’s universally cool to hate, at least in its current incarnations like Visual Basic and VBA. But back in 1964, the idea that you could plunk someone down in front of a terminal, or more likely a teletype, and have them bang out a working “Hello, world!” program with just a few minutes of instruction was pretty revolutionary. Yeah, line numbers and GOTO statements encouraged spaghetti code and engrained bad programming habits, but at least it got people coding. And perhaps most importantly, it served as a “gateway drug” into the culture for a lot of us. Many of us would have chosen other paths in life had it not been for those dopamine hits provided by getting that first BASIC program working. So happy birthday BASIC!

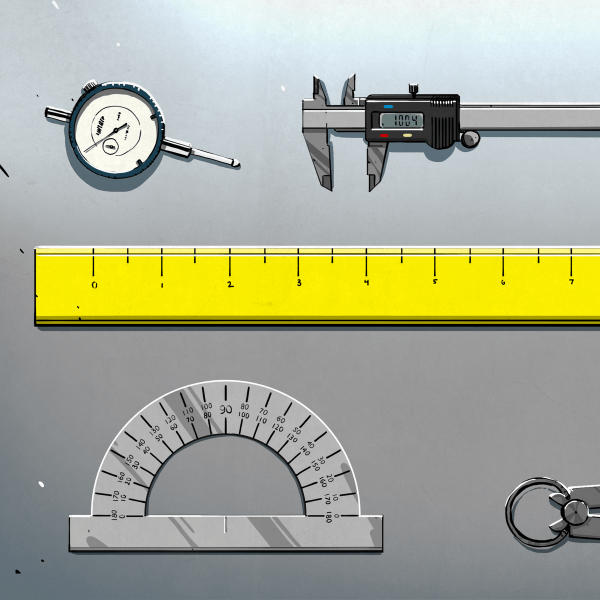

Speaking of gateways, we’ve been eagerly following the “65 in 24” project, an homage to the “65 in 1” kits sold by Radio Shack back in the day. These were the hardware equivalent of BASIC to a lot of us, and just as formative. Tom Thoen has been lovingly recreating the breadboard kit, rendering it in PCBs rather than cardboard and making some updates in terms of component choices, but staying as true to the original as possible. One thing that the original had was the “lab manual,” a book containing all 65 circuits with schematics and build instructions, plus crude but charming cartoons to illustrate the principles of the circuit design. Tom obviously needs to replicate that to make the project complete, and while schematics are a breeze in the age of EDA, the cartoons are another matter. He’s making progress on that front, though, with the help of an art student who is really nailing the assignment. Watch out, Joe Kim!

Last week we mentioned HOPE XV is coming in July. This week, a partial list of talks was released, and there’s already a lot of interesting stuff scheduled. Supercon keynote alums Mitch Altman and Cory Doctorow are both scheduled to appear, along with a ton of others. Check out the list, get your proposals in, or just get your tickets.

If an entire forest is composed of a single tree, does it make a sound? Yes it does, and it’s kind of weird. The tree is called Pando, which is also weird, and it’s the largest living individual organism by biomass on Earth. The quaking aspen has 47,000 stems covering 100 acres (40 hectares) of Utah, and though it does a pretty good job of looking like a forest, the stems are genetically identical so it counts as a single organism. Quaking aspens are known to be a noisy tree, with leaves that rattle together in the slightest breeze. That pleasant sound isn’t just for us to enjoy, however, as sound artist Jeff Rice discovered by sticking a hydrophone down into one of Pando’s many hollow stems. The sound of the leaves banging together apparently gets transmitted down the stems and into the interconnected root system. At least that’s the thought; more rigorous experiments would be needed to confirm that the sound isn’t being mechanically coupled through the soil.

And finally, we’re in no position to cast stones at anyone for keeping a lot of browser tabs open, but keeping almost 7,500 Firefox tabs going seems a bit extreme. And yet a software engineer going by the handle Hazel just can’t bring herself to close any tabs, resulting in an epic restore session when her browser finally gave up the ghost. Panic set in at first when Firefox refused to reload all the tabs, accumulated over the last two years, but eventually the browser figured it all out and Hazel was back in business. Interestingly, Firefox doesn’t really use up too much memory to keep all those tabs open, only 70 MB. Compare that to Chrome, which needs 2 GB to keep a measly 10 tabs open.

Well… I currently have 4290 tabs in Firefox/macOS (without counting a few thousand I lost in a Firefox crash a few years ago). When I work on a project or do research on a subject, I can open a few dozen interesting web pages, and I keep them open for the next time. I wish there was an easy and efficient way to search, sort and classify tabs, but still nothing to my taste in 2024.

I don’t bother, because the search engines are so good, I just ask again when needed, or reference the code where I needed some guidance at the time. Sometimes I’ll save a screen to disk and file it, if important enough, or print it and file it in a physical file folder. I am in the camp of few tabs open. Sometimes there might be 20 or so while working on something, but then back to < 10 as the norm. I 'really' don't understand the 'thousands of tabs open' concept of web browsing :confused: .

While it should be some kind of law, my experience is that finding necessary yet obscure information is ALWAYS easiest the first try. There are bits of data I have never found again. I always have thousands of tabs open across multiple windows and different browsers.

I personally use the Tab Stash extension (https://addons.mozilla.org/en-US/firefox/addon/tab-stash/), but nowadays there are tons of different ways (https://workona.com/reviews/best-tab-managers-for-firefox/).

Ah, statistics and lies and just bad reporting. The 70MB is the state file on disk while the 2GB is the memory usage of Chrome. Apples vs oranges. Not that chrome isn’t eating memory as second breakfast, but it isn’t a truly proper comparison.

We know Google can’t pass on the chunky lot of trackers sifting your cookies for data on your history, usage patterns and subject matter to target advertising, and a multitude of other unethical privacy breaches people willingly agree to with every EULA they click away. A browser simply shouldn’t do this, and years of coding web pages to disable tracking was wasted with new browsers that support and defend privacy intrusion. I use Brave and the difference in page loads between Chrome and Edge against browsers that run spyware is noticeable by many seconds. Caveat Emperor

I mean, copying the link and pasting to a notePad or google drive does not work? I personally keep only 4-5 tabs open at a time, enough for the hour.

There’s better ways to do what you’re saying, such as saving a group of tabs into a folder of bookmarks. But if 4-5 tabs last you an hour, and if it would not bottleneck your process to have to manually copy-paste links every time you wanted something that wasn’t one of the 4-5 things you had open, that sounds very unproductive.

As an example, I was shopping for some reusable silicone caps for 5 gallon water jugs the other day. So I did some searching, and opened the best looking results in new tabs. Since it’s a simple common standard item, it took a lot fewer tabs than normal, but that was still a dozen tabs in a few minutes time. I closed the tabs for items I ruled out, before finally buying one and closing everything. Constantly opening tabs, closing them, and then having to reload them or step back through my history to find them seems like a lot of effort when I can just leave them open until I know whether I want to do anything with them, bookmark them, or just close them.

Why do people hate BASIC? I’ve used it on 8 bit PICs for the last 25 years or so professionally and I’ve done OK out of that. Super quick to get embedded things running – easy to return to after extended periods (it is written in English after all) . Very close to the metal so you can do stuff in real time and you can trace most things easily without guessing what some unknown fool has put into a C library function.

Most likely encouraging bad habits.

https://reprog.wordpress.com/2010/03/09/where-dijkstra-went-wrong-the-value-of-basic-as-a-first-programming-language/

When I read this post, I immediately remembered the dislike that Dijkstra, coming from a mathematical perspective, had toward BASIC. He criticized things like the use of GOTO instead of loops, and was right. BASIC and home computers like the ZX Spectrum were positive in making computing mainstream, but also created some bad coding habits (as mentioned). On the Spectrum, the association of commands with key combinations was really bad schooling for future programmers. They ended up using multiple languages on the same machine, where memorizing command combos and using dedicated keyboards full of command stickers was useless.

When I was young and new to programming, BASIC was the language we used in high school. It was fun and easy to use. When we got to college, we put away the beginner language BASIC and went structured with Pascal, which was head and shoulders better to use than BASIC. Except in one lab, where we used C64s (BASIC/assembly) for our electronics projects (remember peeks and pokes?). In a way, BASIC then was the Python of today (except Python adds so much more capability and is structured and object oriented). Of course we aren’t as ‘memory’ constrained now as back then (KB vs GB). The BASIC interpreter could fit in a ‘small’ space, and I seem to recall the source could even be compiled. The Python interpreter runs fine on, say, the RPI PICO today, as well as many other micro-controllers.

Today, I dislike VB because most of the time it is just hacks written by a non-professional ‘almost a programmer’ person. Basically (pun intended) we found the code was hard to maintain later. VB that is intertwined (the glue) with Excel and Access is, in my opinion, horrible. I spent two years re-writing the VB/Excel/Access, Perl, and batch apps here in Python 3, in one of our systems, and now all the tasks on that system are easily maintainable, and not just by us professionals. I.e., the company engineers can help maintain (and write their own) apps with little fuss. Faster too. Maintenance time has gone ‘way’ down. In fact, the only time I have to modify anything is when a ‘change’ is needed, instead of looking for bugs. Call-outs on this particular system have gone down to the near zero mark. In this ‘case’, Python was the right language for ‘that’ job.

I seriously enjoy every episode, so thanks for putting it all together! Recently I’ve noticed a changing trend however, so if you’d like some feedback:

Re: what’s-that-sound –

By including game sounds, you may as well play movie or music clips also. It’s more trivia vs. technical. I don’t enjoy those at all and just skip ahead. I would just propose that sounds experienced IRL (however obscure) are a better choice vs. artistic/synthesized/cultural samples. Machine noises & other physical/environmental phenomena perhaps. I know it’s a slippery slope to set hard limits. I’m just venting a frustration.

Further, why is it that you don’t do both a question and a reveal with each episode? Play a new sound after revealing the answer to the last one? A few more seconds for twice the fun!

tortured acronyms were in vogue those days. one of my favorites is a kind of prefix tree: “PATRICIA” — Practical Algorithm To Retrieve Information Coded In ASCII. So, there is some practical tradeoff? It only works for ASCII?

Mainframe BASICs?

Microcontroller BASICs are single task operating systems.

They load an app, then execute it.

If the app is a secure app, then the app zeros parts of the BASIC OS not used by it?

Multitaskers any part of a secure app?

Or a software security mountebank scam?

When I was in electronics class in high school (Graduated in 2000) we did a lot of BASIC programming that interfaced with external hardware through the parallel port. Things like blinking lights, motor control, taking input from a sensor and using it to either alter something in a program (Like using a light sensor to alter the pitch of a sound playing from the speaker), things you’d use a Pi or Arduino for today but such things barely existed then (we had “BASIC stamps” but they were pretty limited).

I built a sound processor that plugged into the parallel port. Output was easy: 8 bits of parallel data out to a DAC and an amplifier, and even with an IBM XT running at a blazing 8MHz I was able to play something resembling music. Input was another matter. Since the standard parallel port of the day had 5 inputs, I couldn’t read 8 bits at a time coming off the DAC, so I used four of the inputs and it would basically read the first four and then the last four. Playing music or speaking into a microphone did result in input to the computer; however, the BASIC compiler wasn’t fast enough to process the input properly, so the “recorded” music wasn’t usable. (We even tried it on a 66MHz 486.) I started to dive into assembly code and was able to get it to read much faster; however, I never worked out writing to a file before graduation.
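For readers who haven't met the trick, the "first four, then the last four" read described above amounts to fetching an 8-bit sample as two 4-bit halves and stitching them back together. What follows is only a rough Python sketch of that idea, not the commenter's BASIC or assembly code. The names `select_half`, `read_nibble` and `FakeConverter` are hypothetical stand-ins for the real port I/O, and treating the first half as the high-order bits is an assumption.

```python
from typing import Callable


def combine_nibbles(first: int, second: int) -> int:
    """Merge two 4-bit values into one byte (first half = high bits, by assumption)."""
    for nibble in (first, second):
        if not 0 <= nibble <= 0xF:
            raise ValueError("each half must fit in 4 bits")
    return (first << 4) | second


def read_sample(select_half: Callable[[int], None],
                read_nibble: Callable[[], int]) -> int:
    """Read one 8-bit sample over a 4-bit-wide input path.

    select_half and read_nibble are hypothetical callables standing in for
    whatever port writes and reads the real hardware would need.
    """
    select_half(0)          # ask the hardware for the first four bits
    first = read_nibble()
    select_half(1)          # then for the last four bits
    second = read_nibble()
    return combine_nibbles(first, second)


class FakeConverter:
    """Stand-in for the external hardware, so the sketch runs without a port."""

    def __init__(self, value: int) -> None:
        self.value = value & 0xFF
        self.half = 0

    def select_half(self, half: int) -> None:
        self.half = half

    def read_nibble(self) -> int:
        shift = 4 if self.half == 0 else 0
        return (self.value >> shift) & 0xF


if __name__ == "__main__":
    converter = FakeConverter(0xA5)
    sample = read_sample(converter.select_half, converter.read_nibble)
    print(hex(sample))  # prints 0xa5
```

Every sample costs two port reads plus a select step, which is part of why the speed of the language doing the reading matters so much here.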

Another weird project: I stumbled across some articles in Electronics Now from the late 80s that went through building an autonomous lawnmower. It used an array of IR LEDs and phototransistors across the front to follow the cut edge of the grass (you’d either guide it around the lawn with a removable wired remote, or mow around the edge with a normal push mower). The problem was that it used a Z80 processor with custom code you had to download from a BBS and burn into an EEPROM, but the company was long gone, as was the file. Since we had a block diagram and knew what the program was supposed to do, we worked out how to do what it needed in BASIC. The problem at the time was getting a computer to run on battery power (even an old laptop was too expensive). I worked out how to run a C64 off a lead acid battery, but manipulating the parallel port was different enough that I wasn’t ever able to get that part of it to work (we hacked together a simulator to get it to work before we tried building the rest of it). I still have thoughts of trying to do the same today but with an Arduino or something….

Sometimes I wonder why I went into IT and not EE… Other than the local CC dropping their EE program in 2001 (I graduated in 2000) and at the time I didn’t want to have to drive the 25 miles to Salem (Oregon) to go to the CC there that had an EE program…