Until the early 2000s, the processors available on the market were essentially all single-core chips. There were some niche designs that put multiple processors on the same board for better parallel performance, but it wasn't until IBM's POWER4 in 2001, and later chips such as the dual-core AMD Opteron and Intel Pentium D, that we got multi-core processors. If things had gone just slightly differently with this experimental platform, though, we might have had multi-processor systems available for general use as early as the 80s instead of two decades later.

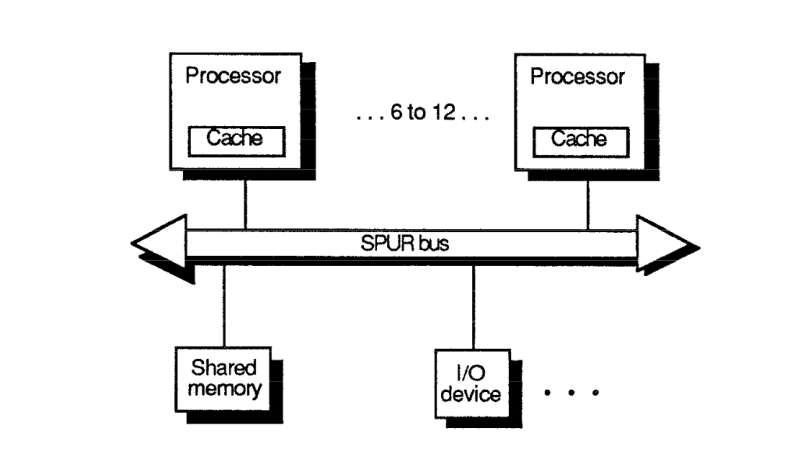

The team behind this chip was from the University of California, Berkeley, a place known for such other innovations as RAID, BSD, SPICE, and some of the first RISC processors. This processor architecture would also be based on RISC, and would be known as Symbolic Processing Using RISC (SPUR). It was designed with the Lisp programming language in mind, but its major feature was a set of processors sharing a common bus, which allowed parallel operations to run much faster than on comparable systems of the time. Using RISC also made it feasible for a smaller team to develop something like this: although a RISC design has to execute more instructions, each one can often be executed faster than on other architectures.

The linked article from [Babbage] goes into much more detail about the architecture of the system, as well as the conditions at UC Berkeley that made projects like this possible in the first place. It's a fantastic deep-dive into a somewhat obscure piece of computing history that, had it been more commercially viable, could have changed the course of computing. Berkeley RISC did go on to have a major impact elsewhere in computing, and was a significant influence on the SPARC architecture as well.

Unless you count Plan 9, which IMHO you should. It was an early distributed OS, and IIRC the original Cisco PIX used a modified version of Plan 9. Granted, it isn't the same thing as multiple processors in one CPU, but it shouldn't be ignored as a trailblazer either, IMHO.

Digital Equipment's PDP-10 pioneered symmetric multiprocessing (SMP) with multiple CPUs. Here is an interesting story about the implementation: https://groups.google.com/g/alt.sys.pdp10/c/te34JW4svFU/m/NFwEoU8zYhYJ

DEC also pioneered networked CPU clustering with its TOPS-20 operating system.

The IBM 360/65, released in March 1965, could be configured as a dual-processor system supported by the MVT MP65 operating system. That configuration was not fully symmetric with respect to I/O, but the later 370/158 and 370/168 models were.

The late 1960s and early 1970s saw the introduction of the IBM 9020, an S/360 multiprocessor system. Its maximum configuration was four modified Model 65s, based on the standard Model 65 but 4-way rather than 2-way multiprocessor. Incidentally, the never-delivered Model 62 was also a 4-way multiprocessor.

Or hell, on the subject of DEC, I'm pretty sure you could buy a VAX-11/780 with up to four CPUs. You definitely could with later VAXen.

The VAX-11/782 was a dual CPU machine released in 1982 and the VAX-11/784 was a quad CPU model (but it was quite rare).

Inmos did something similar with the Transputer in the 80s…

Multiprocessor parallel systems with a serial bus.

This looks like a development of that, with RISC and a better memory model.

Yes.

And Atari wanted to bring it to market, and showed prototypes at the SYSTEMS trade show.

And then something happened in the UK, and everything vanished.

No one seriously used Transputers because they turned out to be bad, just like the Motorola 88000, NS32000, and Z80000. Plenty of bad, late products on the market.

No one you know of. Someone I knew made a very nice career for themselves developing military systems using Transputers.

There were plenty of very expensive, niche, and bespoke systems using them; quite a few are listed on Wikipedia, and there were more. So I suspect you're using 'seriously' to mean it wasn't a mass-market success, rather than that there were no serious uses of it?

They definitely weren’t ‘bad’ chips, but they weren’t able to compete with the pricing and ease of use of other (less advanced?) chips on the market.

That is not true. Transputers were the go-to solution for problems requiring massively parallel processing, in both civilian and military applications. They were particularly successful in image processing.

I saw Transputers used on speech recognition boards in the 80s. For myself, I developed software on the ICL MiniDAP which had 1024 1-bit processors. Very niche.

I have a French Transputer based “Supercomputer” from 1992. An Archipel Volvox with over 40 Transputers. It is controlled by an SGI Indigo2 running Unix.

If I ever find the missing software I will control the World! http://www.regnirps.com/VolvoxStuff/Volvox.html

Thanks for the Transputer shout out. Hard to talk about 1980s parallel processing without giving a nod to Transputers.

Oh, the processors that never were…

Epiphany IV, I cry for thee.

It's too bad no one picked up the reins when DARPA scooped up Olofsson.

“Califorina”?

oklahoma

Where I worked in the 1990s, we had a couple of Symbolics (Lisp) machines; they were attached to Pixar machines.

Lasseter, is that you?

??? OS/2 and the BeBox were SMP, and OS/2 also supported asymmetric multiprocessing. I owned a Compaq server that had a 386 and a 486 processor, and it preferred OS/2.

The Be machines supported two processors from the beginning.

The first paragraph is very incoherent. We've had multi-processor systems for "general use" since the eighties. The AS/400 supported dual processors by 1991. SMP DEC Alpha, SPARC, x86, RISC, and PA-RISC systems were a common sight in the 1990s, with even the odd BeBox in between. Linux got SMP support in 1995. Even the SPARCstation 10 on my desk had a dual-CPU module, and most NetWare servers I had seen were dual-processor machines.

And we can't be talking about "for general use" in the sense of "for consumer use" here, because neither POWER4 nor Opteron nor SPUR was aimed at consumers.

HaD writers not doing research again. There were lots of multi-CPU VAXen in the 80s, and even in the mid-to-late 70s.

Mmmmm, the summary makes it sound a lot like multiple processors and multiple cores on one die are interchangeable concepts.

Didn't all our software get slower a few years back because of the performance cost of patching security holes that were found to be enabled by multi-core chips? Security holes that don't exist in multi-CPU machines where there's just one core per die, not sharing any cache or the other internals that multiple cores share?

Clearly I don’t know all the technical details but.. I’m still going to say that multi-core isn’t really the same as multi-cpu.

I did have a two-processor computer back sometime around 2000 or 2001. That thing ran great for the time! It sure was expensive to upgrade the RAM though as it used ECC. And the electricity it used… and the heat it generated… did I mention those were AMD CPUs? Like computing on an electric stove!

You might be thinking of side-channel attacks.

Multi-processor VAXen were available in the 80s already.

Sorry, but the summary of multiprocessor systems doesn't correspond to actual history, even vaguely.

I worked on SGI Challenge XL systems with 36 R4400 (64-bit) processors in 1994. I managed an 18-processor i386 Sequent Symmetry system in 1991.