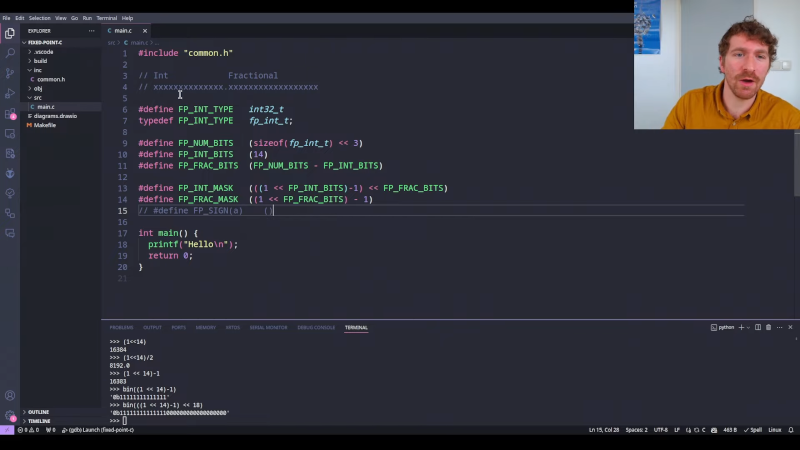

If you are used to writing software for modern machines, you probably don’t think much about computing something like one divided by three. Modern computers handle floating point quite well. However, in constrained systems, there is a trap you should be aware of. While modern compilers are happy to let you use and abuse floating point numbers, the hardware is often woefully slow. It also tends to eat up lots of resources. So what do you do? Well, as [Low Byte Productions] explains, you can opt for fixed-point math.

In theory, the idea is simple. Just put an arbitrary decimal point in your integers. So, for example, if we have two numbers, say 123 and 456, we could remember that we really mean 1.23 and 4.56. Adding, then, becomes trivial since 123+456=579, which is, of course, 5.79.

But, of course, nothing is simple. Multiplyting those two numbers gives you 56088 but that’s 5.6088 and not 560.88. So keeping track of the decimal point is a little more complicated than the addition case would make you think.

How much more complicated is it? Well, the video covers a lot but it takes an hour and half to do it. There’s plenty of code and explanations so if you haven’t dealt with fixed point math or you want a refresher, this video’s worth the time to watch.

Want to do 3D rendering on an ATMega? Fixed point is your friend. We’ve done our own deep dive on the topic way back in 2016.

> Multiplyting those two numbers gives you 56088 but that’s 5.6088 and not 560.88. So keeping track of the decimal point is a little more complicated than the addition case would make you think.

How is that unclear to anyone with a high school diploma? If a = n/100 and b = m/100, c=a*b=(n*m)/(100*100), how is that complicated??

Don’t get me wrong I appreciate people like the guy in the video explaining this stuff and HaD staff relying this out of interest, but I would feel EXTREMELY wary if I had to work on constrained systems with someone lacking such a basic understanding of math.

No need for a high school diploma, rational numbers and decimal notation are primary and lower secondary education stuff in most parts of the civilized world.

The problem here is your condescending and arrogant attitude. And I really, genuinely don’t mean that to be an insult. People with an aptitude for mathematics just don’t understand how other people can’t “get” it. It’s so obvious,right?

Only for an awful lot of people, it isn’t obvious. What you present there just doesn’t naturally sit in some people’s heads in a manner which allows it to be processed. I’d suggest it was like a sort of mathematical dyslexia, only it’s way more common than can be explained as a learning difficulty.

Mathematics is a higher aptitude discipline than many people think, including, clearly, yourself. You should try to remember that as you judge people.

Don’t blame [mista4a] for failed educational systems in certain parts of this globe. If an adolescent or adult doesn’t grasp such simple maths (which is one of the basic skills needed to survive in our money-driven society), then something must have gone horribly wrong.

In most western countries math education has become learning what you should be doing – that is, “understanding” the math – instead of learning to actually do it by routine. The idea is that people wouldn’t need to learn “useless” information – all they should learn is the references to the information, so they can look it up later when they actually need it.

You’re supposed to remember things like, “Ah, here I need the quotient rule… let’s see how it was again…” but, without any rel context, such abstract knowledge is quickly forgotten. When given the opportunity, people pass the school system by cramming and retaining the correct answers just long enough to pass the tests. The end result is knowing that an answer is one quick Google search away, but you don’t remember which search terms to use.

That’s why certain basics should simply be drilled in by repetition. It’s no use trying to “explain” math to the vast majority of kids, because they can’t see the point of it – they’ll only understand later when they realize they should have studied harder.

Maybe it’s in the presentation.

https://www.popularmechanics.com/science/a32131826/ancient-multiplication-method/

In the USA math is a group cooperative activity by current pedagogy. Children are expected to discover, by the magic of working together, the last 4000 years of mathematics. If 5 of them are in a self directed group, they are sure to discover integration and how to prove if a sum converges.

If some direction is needed, there is the “circular curriculum” where they get a little bit of everything twice a year and remember all of it so that their knowledge builds into a glorious whole! No, of course it doesn’t. You would have to be a dedicated Marxist critical theorist to think that. You would have to believe that a man can become a woman by saying so and other magical thinking. That would never get into the schools, would it?

“…simple math..” You want to see the failure of mathematics in the US? Next time your total at the register is, say, $7.23, hand the cashier a ten, two ones,two dimes, and three pennies. S/He will try to hand you everything back except the ten, telling you that you gave him/her too much. S/He won’t be able to grasp the idea that instead of getting two dollar bills and some odd change back – which, added to all the other bills and change in your pocket, gets bulky – you’d rather just get back a single five dollar bill. I’ve seen people lock up when I do that.

Yes… I know nobody (other than me) uses cash anymore, but you get the point.

I use cash too, and make a point of politely walking out of the shop if they won’t accept my cash.

Yes, we get it, things have gone wrong, our education system is awful, etc. That doesn’t change anything about the need to explain these things, though. It just explains why it’s necessary.

eh, i don’t think that’s really relevant to the struggles of a computer programmer trying to come up with representations for numbers. it’s truly a weird spot to try to write usefully about, since there is a very narrow range between ‘no duh’ and ‘i don’t care’ that defines the possible audience.

imo, mista4a is correct. the rules for multiplication are not going to stymie anyone who stands any chance of writing numerical software. it’s a relative of amdhal’s law — if that’s the stumbling block they ran into, then fixing it won’t matter because there are much larger stumbles both ahead and behind them!

So called ‘mathematical dyslexia’ does actually exist – it’s more properly called “dyscalculia”.

Haven’t watched the video yet, but I thought through how I’d do it for binary fixed point. I’d guess that most of the complications have to do with overflow and rounding. It’s not the world’s hardest thing to figure out, but not as trivial as a division.

To elaborate: You are byte constrained, so you can’t just naïvely multiply and shift. That will get you more overflow than you really want. E.g. you might view an 16 bit sequence as encoding b2+b1*2^-8, where b1 and b2 are the lower/upper bytes as int8s. Then naïve multiplication + shift as int16s will give you overflow even for small integers. Instead, you need to expand (a2+a1*2^-8)(b2+b1*2^-8) as a2*b2+(a1*b2+a2*b1)*2^-8+a1*b1*2^-16. Then you need to determine if the last term is going to carry and round up or down and if the middle term is going to carry.

There is a world of difference between those who used slide rules at one extreme, and mostly calculators at the other. As to how obvious decimal point tracking would be. Similarly those who worked with logarithims a great deal and how obvious floating point is.

It’s more about “obviousness” than the ability to understand mind you.

I’ve been around for a while. A long while. Since before standardization of floating point. One of the first distributed libraries I wrote was to do 8087 compatible arithmetic on a Z80 or 8085. This included routines for maximum precision translation to/from several other formats, including DEC and IBM360 floating point forms.

This was actually not much more difficult than building a robust, correct fixed point library, if for no reason other than good groundwork by a number of top analysts (William Kahan, for one). There are a lot of pitfalls to trap the arrogant and unwary in fixed point, especially when dealing with changing radix and getting consistent, appropriate rounding.

if you really want speed you never do it in decimal, always binary. bit shifts are cheap. you just need to make sure you use a larger type on some operations, because miltiplies/divides can result in bigger numbers that need to be shifted back into the correct format and then truncated. if you dont have enough room for that operation you can lose precision. addition and subtraction can be done entirely in format, i think multiplies need to be twice as big and divides like four times. you might take a hit in the form of register operations there.

The fundamental rules themselves are straightforward, but especially in a constrained system every single extra point of complexity is out to get you. The worst bugs come from the requirements that the programmer, in their hubris, underestimated.

When I was taking the assembly language course in college, the instructor showed us how to do arithmetic using one digit per word (obv, you could compress and use packed BCD as well), which gave you number lengths on the scale of the computer’s memory size (minus room for OS and code). The task was to calculate as many digits of Pi as possible on the machine (a CDC CYBER-74) Instructor was an apps engineer for CDC, and taught us all sorts of interesting tricks…

I think there’s a book out there with all these “tricks”.

“Numerical Recipies in C” is the one I have (now). It’s pretty good, but I have found errors in the edition I have. There’s one for FORTRAN, too, I believe. Of course, we didn’t have it back then…

There are errata notes on the website for NR. Best supported text I ever have used

https://numerical.recipes/

You can read it for free! (Which makes used hardcopies on eBay super cheap.)

It has some rather odd errors. The derivation of 4th Order Runge-Kutta integration is wrong. The code is right. Tracing back, one finds this same error in a couple generations of numerical methods books. Six decades or more of cut and paste. I found this while preparing a class. One wonders how many similar errors there are.

Perhaps you mean Hacker’s Delight?

https://en.wikipedia.org/wiki/Hacker's_Delight

or

https://www.amazon.com/Hackers-Delight-2nd-Henry-Warren/dp/0321842685

In a similar vein there is also xchg rax,rax

https://www.amazon.com/xchg-rax-xorpd/dp/1502958082/

Does a MCU count re “Modern computers handle floating point quite well” ?? I can make calculations on GPS coordinates with 9 dec places with 64 bit ‘double’ but not 32 bit ‘float’. Using float gives the wrong answer !!

Really, nobody should be allowed near floats until they understand fixed point. The problem with 32-bit floats is that they only have a 23 bit mantissa. 8 bits are the exponent, which basically tells where the decimal (okay binary) point is, and one is the sign. That means for GPS if you do everything perfectly (which is itself hard) you can resolve the circumference of the Earth to one part in 2^23, or about 1 part in 8 million, or 15 feet. Which is kind of crap. 32-bit integers do fine, but you have to use fixed point scaling.

Sure, I get the 23 bit mantissa, and I would have thought my 9 digits behind the decimal place would have been ok with this set up without the hassle of fixed point scaling …. but obviously not! BTW, the calculation itself is working out the heading from vectors, where mostly the last 2 or 3 digits change.

Are there really any situations where you can’t use integer math but “assume” a larger multiplier for the value?

For example, when you need a number, say 12.345, couldn’t you instead represent the number as 12345 integer for all the math operations? Of course you’d need to “know” beforehand that the multiplier x1000 is applied but besides that it would be pretty easy to work with.

Sorry I’m not really familiar with the details, hence the question.

You can do everything with integer math, Decimal notation is just a convenient way to write down a sum of fractions of integers.

You aren’t a really hardcore fixed-pointer until you’ve also used ten’s complement negative numbers.

I always use ten’s complement when doing subtractions on bills. Much easier to subtract the number from 9999.99, add 1, and then add it to the other number and discard the spurious 1 at the beginning. :-)

FORTH has */ to multiply two words together and divide by a third to give a word result, but using a double-word intermediate value (16-bit words with a 32-bit intermediate, or 32-bit words with a 64-bit intermediate).

This allows integers to take the place of floating-point contants. For example pi is 355/113 with an error of 8.5×10^-8 so using */ n*pi is n*355/113. (With 32-bit words it is 1068966896 / 340262731 for an error of 3.0×10^-18). Other constants like e, sqr 2, sqr 3 can similarly be approximated with an error of less than 10^-8. Full table and other fixed point info in Starting Forth by Leo Broadie https://www.forth.com/starting-forth/5-fixed-point-arithmetic/. (No I haven’t watched the hour and a half video before commenting 😬).

Fixed-point arithmetic is not as easy as it seems.

This is a good video, but I’m not a big fan of the C API, it makes things less intuitive…

I’m writing a C++(20) library, that I used in embedded projects (STM32): https://github.com/RonnaTechnologies/flute

The library takes advantage of operator overloading and template meta programming to provide an intuitive API. For instance, you can do something like: const auto two_pi = 2 * ufixed{3.14}.

There are also two implementations of look-up tables that allow linear interpolation.

It’s still a work in progress, but already usable.

But if you don’t write it in C how are you supposed to get bragging rights on online forums for making your life pointlessly harder?

hahah guy online “takes advantage of operator overloading and template meta programming” and then we talk about “making your life pointlessly harder”

it’s all a matter of perspective

i like to sometimes use fixed point on embedded projects to speed things up. but float i think is stupid fast on modern processors and may even exceed the capabilities of the integer units.

a lesser known feature is that it gives you explicit control of your number format. you can put your decimal anywhere or use any size. floating point is limited to like 80 bit on x87, and i dont even know if that format is still supported. if you need more precision than that offers (and it offers a lot), then using a big int format can give you ranges far outside of what you can accomplish with float.

in C, there is __int128 that i think most modern compilers support fairly well. just a longer int type beyond the typical 64-bit ‘long long’. longer than that, you wind up looking at synthetic types. struct int256 { __int128 high,low; }; it’s not too hard to figure out the arithmetic for these, or to find a 256-bit int library, or to find an arbitrary-precision int library.

there is also ‘long double’ which is ideally a 128-bit double. but i have been rather astonished to find that gcc doesn’t support it on most platforms, using either 64-bit or the intel 80-bit.

but the neat thing is, it’s actually not that hard to invent a synthetic giant float format, and implement the arithmetic yourself. and it’s just as easy to find a library that provides 128-bit float, or that provides arbitrary-precision float. and one thing that has really surprised me is that the arithmetic for basic float operation (+ – * /) is really not outrageously expensive.

so overall i am just pretty happy that we have such an abundance of options now.

There is a 128 bit float (quad precision) library for use with gcc (for C or C++ or both, I forget). Not knowing that it existed, about 8 years ago I wrote my own 128 bit float library for gcc C++. It took more than a month and worked nicely, but I didn’t test it thoroughly. An educational experience, but in the long run a waste of my time. The function overloading capability of C++ is needed to make the use of the new format convenient. In doing the work on this, I found out that the Numerical Recipes books are optimized for doubles, and the formulae provided will be WRONG if an attempt is made to extend them to 128 bits. No warning in the books; shoddy writing in my opinion.

For a programmer, I expect them to be able to do this, its not that difficult. I simply wrote a basic set of fuctions: fpAdd, fpSub, fpMul, fpDiv – then I had a struct for the number { uint32 val; uint8_t dp; }

30 years of embedded software, and I’ve _never_ had the luxury of floating point!

Amen, brother! After many years of programming on fixed-point embedded DSPs, you just start to “think” in terms of integer math with lots of bit shifts. It also makes you think hard about the system as a whole so you don’t overflow a register and completely lose the value (of course, you want as much precision as possible for as long as possible, so you don’t want to leave any high-order bits being under-utilized). Bit shifts for as far as the eye can see!

Way to attack virtally every current programmer. I’m a modern programmer and I’ve made (not compiled an existing) my own OS kernel… While I am certainly an exception, I know a lot of people younger than me who know FAR more than how to spit out math input about programming. While it was probably meant as a joke, holy crap guys, watch it. That’s borderline, if not way passed the line, of unproffesional.

Anybody here ever hear of the Forth programming language?…with its ability to perform very-high-precision math using only fixed-point arithmetic…ONLY?

Anybody here ever heard of, or ever USE, a slide rule, where one has to keep track of “…the decimal point…” in one’s head?

“…But, of course, nothing is simple…”

But of course.

Particularly when one is told one has to endure a 1½-hour video presentation on a subject which we are led to believe occupies a position right up there with the invention of calculus.

I’ve got a bridge I can let you have REEEEL cheap…

If I need to scale something by a fixed constant and cannot use floating points I calculate a multiplication value and a shift value. Sometimes an offset is needed.

One that doesn’t lead to an overflow and has the precision I need. I can optionally add rounding by adding 1<<(shiftvalue-1) before shifting, and if an offset is needed it won't add any overhead.

If it is a compile time constant I can use a const or constexpr. If it is a runtime const (ROM value) I can calculate the values in an init function during boot.

I often scale final results by a decimal number, such as voltage in millivolt.

In this example of 3.3v I would save the result in an uint16_t for a value between 0 and 3300.

I could go to 33000 for extra precision, but that's probably not needed in this case as the stepsize is 0.8mv and there might be more noise/ripple.

with rounding:

uint16_t voltage_mv = ((uint32_t)adc_value*3300 + (1<> 12; // with rounding

without rounding but larger multiplier:

uint16_t voltage_mv = ((uint32_t)adc_value*845007) >> 20;

3.300v will read as 3300 mv

if you want more digits:

uint16_t voltage_mul_10000 = ((uint32_t)adc_value*528129) >> 16;

3.3000v will read as 3.3000v

It helps to play with the values.

Fun fact: if your calculation and storage precision is greater than your ADC resolution you can calculate your original ADC value back in post processing and redo the calculation with floating points.

hackaday ruinied my code, a tech site like hackaday should have an option to insert a code block in comments.

It’s a shame that definitions have changed over the years. 60 years ago “floating point” referred to a BCD format with 1 or 2 decimal digits per byte. Each digit could be 0 to 9 or decimal point or minus (or plus?). The decimal point did indeed float in the number. What we now call float was properly called scientific notation.

It’s not fundamentally different. IEEE754 supports decimal floating point, the only thing that’s different is how it is stored, you can store them in BCB (including sign and radix) or in what you call scientific notation or in a string. One explicitly stores the radix and the other stores the position of the radix. The advantage of scientific notation is that you can have a radix outside the digits, so a exponent greater than the precision. You cannot do that with your storage proposal. Also you can fit two BCD digits in a single byte.

It is a long video and I have not watched the whole thing. Did he talk about Rational Arithmetic? There is no error or roundoff with rational arithmetic. It has serious limits in that if you do very many operations the integers will exceed you memory. But for calculations that do not repeat and sum and that kind of thing you get exact results. The problem arises from repeated calculations of the least common divisor or the GCD of arguments to keep them in rational form. But any kind of machine control and steppers and digital detectors, using rational numbers is the way to avoid missed steps and all that.

Rational numbers plus CORDIC algorithm? Golden.

“There is no error or roundoff with rational arithmetic.”

If you have sufficient precision there is no rounding error when adding or multiplying floating point numbers and you can use arbitrary precision libraries to get very high precision. But once you exceed precision you get rounding errors. And the same applies to rational numbers. Once rational numbers exceed memory you can scale them down and lose precision.

It’s at division where rational numbers shine. They do not have rounding errors in that case as long as you have enough memory.

But some numbers are irrational and they cannot be stored in either format without rounding. In order to do that you need to rewrite your formula to avoid using irrational numbers and that is not always possible. Alternatively you can store your numbers in a symbolic way to avoid rounding at least until the end.

CORDIC is great, but not all angles can be represented as a rational vector. Like 60 degrees has a ratio of sqrt(3)