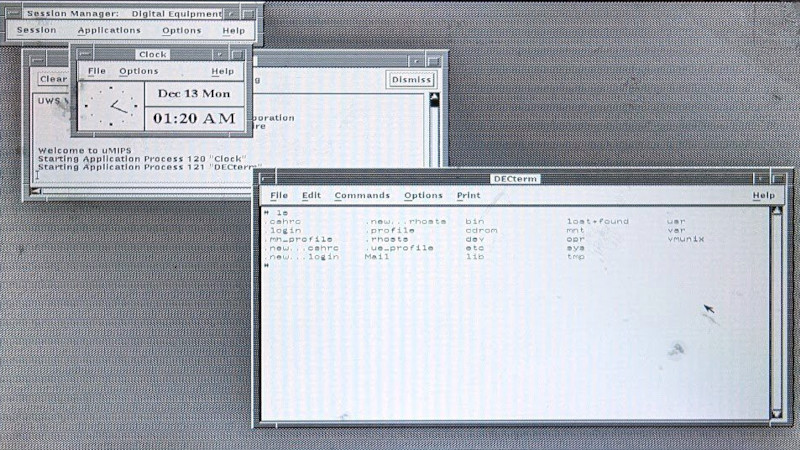

Years ago there was a sharp divide in desktop computing between the mundane PC-type machines, and the so-called workstations which were the UNIX powerhouses of the day. A lot of familiar names produced these high-end systems, including the king of the minicomputer world, DEC. The late-80s version of their DECstation line had a MIPS processor, and ran ULTRIX and DECWindows, their versions of UNIX and X respectively. When we used one back in the day it was a very high-end machine, but now as [rscott2049] shows us, it can be emulated on an RP2040 microcontroller.

On the business card sized board is an RP2040, 32 MB of PSRAM, an Ethernet interface, and a VGA socket. The keyboard and mouse are USB. It drives a monochrome screen at 1024 x 864 pixels, which would have been quite something over three decades ago.

It’s difficult to communicate how powerful a machine like this felt back in the very early 1990s, when by today’s standards it seems laughably low-spec. It’s worth remembering though that the software of the day was much less demanding and lacking in bloat. We’d be interested to see whether this could be used as an X server to display a more up-to-date application on another machine, for at least an illusion of a modern web browser loading Hackaday on DECWindows.

Full details of the project can be found in its GitHub repository.

Early 90s? The higher end DEC machines were there and above. (source: father worked at dec mosshill at the time)

Atari MegaSTs with the Viking Monochrome card and a big CRT could apparently do 1280×960 pixels.

http://tho-otto.de/hypview/hypview.cgi?url=%2Fhyp%2Fchips350.hyp&charset=UTF-8&index=159

In principle, Windows PCs from late 80s could do 1024×768 no problem, since most VGA cards had Super VGA capabilities.

Video RAM expansion was a problem, though. The basic 256KB only allowed for extended modes such as 640×400 256c or 800×600 16c.

Or 1024×768 in mono, but I’m not sure if pure video monochrome modes existed in these VGA BIOSes.
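The arithmetic behind those limits is easy to check. Below is a minimal sketch, assuming tightly packed pixels with no padding and a card with exactly 256KB (262,144 bytes) of video RAM. The function name and the mode list are made up for illustration; real VGA 16-colour modes are planar, but the total byte count comes out the same.

```python
def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Bytes needed for one full frame, assuming tightly packed pixels."""
    return width * height * bits_per_pixel // 8


VRAM_BYTES = 256 * 1024  # the basic 256KB card discussed above

# (description, width, height, bits per pixel)
modes = [
    ("640x400 256c", 640, 400, 8),
    ("800x600 16c", 800, 600, 4),
    ("1024x768 mono", 1024, 768, 1),
    ("1024x768 256c", 1024, 768, 8),
]

for name, w, h, bpp in modes:
    need = framebuffer_bytes(w, h, bpp)
    verdict = "fits" if need <= VRAM_BYTES else "does not fit"
    print(f"{name}: {need} bytes, {verdict} in 256KB")
```

By this count 1024×768 in mono would need only 98,304 bytes, so memory alone would not have ruled it out, while 1024×768 256c needs 786,432 bytes and does not fit.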

IBM 8514/A did feature 1024×768 256c at 56Hz in ca. 1987 already.

Videos of OS/2 1.1 running in 1024×768 256c do support this statement.

Some driver disks bundled with ET4000 VGA cards did ship with such an 8514/A software emulator for DOS, even.

Anyway, the problem wasn’t the computer but the monitor.

Back in 1990, only a few users had monitors that could go past the default VGA timings.

640×480 at 60Hz or 72Hz and 31.5 kHz was the limit.

Super VGA in 800×600 was possible, too, by using interlacing and low refresh (56Hz again).

In simple words, users had so-called “single-frequency monitors”, as opposed to “multisync” monitors.

The humble 12″, 14″, 15″ VGA monitors of the early 90s were designed with MCGA (320×400) and VGA (640×480) in mind. Super VGA in 800×600 was kinda possible, but more of an afterthought.

I forgot to mention, this was merely a comparison with bog-standard Intel PCs of the day.

Because that’s what most laymen compare things with.

Professional workstations were a thing on their own, of course.

SGI was famous, for example.

Still, a lot of PC-based professional software supported high resolutions early on, as well.

For Super EGA/VGA cards, there were ADI drivers for 1280×1024 pixels and up, for example.

For custom CAD/CAM graphics systems, too, of course.

So users of Autodesk products (AutoCAD, AutoSketch, 3D Studio etc) could do work in high-res; on a DOS system!

In the dark ages of Turbo XTs and ATs, before the iron curtain fell.

In the early 1990s, a 19 inch SGI (color) monitor cost ~$2500.

Intergraph had 27″ monitors in about 1990. And you could hook 2 of them up to their workstations. I don’t remember the resolution of these glass beasts, but I believe they referred to them as “2 megapixel” displays.

I bought a used Sun 3/160 from a guy who worked for Sun in East Bay in the early 1990s. The Sun had a 21 inch monitor with 1152 x 900 resolution which if I recall was 8 bits per color channel. Just the video was two huge VME boards. There were four memory boards of 4 meg each with 4116 RAM chips, 68020 CPU board and SCSI board. The whole thing weighed a few hundred pounds. The power supply was simply labeled “1.5 KW.” I had two 327 meg full height SCSI drives. SunOS was supplied on a set of two tape cartridges. Sun workstation keyboards and mice used Apple ADB, and Sun machines had a built in boot environment written in FORTH. Those were the days.

High resolution video wasn’t that unusual in the early 1990s and it wasn’t out of reach of the average hobbyist if one was willing to accept ten year old equipment which weighed a few hundred pounds and doubled as a space heater.

“XGA” monitors were present in 1990. Early Sun workstations used your choice of 1600×1100 or 1152×900 at 1bpp. (“bwtwo”)

Anyone running any *N*X would have found that familiar, not unusual.

Even over in ‘consumer toy’ land, the Amiga A1200 (and other AGA Amigas) could do colour 1024×768.

The question is if you also could afford a monitor for those resolutions with your brand new 1992 Amiga.

In 1989, when the DECstation 3100 was released, the Amiga 500 and 2000 had a little more than 640×400 as maximum resolution, and 320×200 was the normal resolution at the time for home use.

If I read Wikipedia correctly, 1024×864 in monochrome or 256 colours were the options at release.

640×512 pixels for the PAL models (OCS). That was a bit more than Standard VGA, even, which was 640×480 pels.

https://amiga.lychesis.net/articles/ScreenModes.html

OCS 640×512 is at 25Hz, it’s interlaced :|

Aww sweet, the VGA is PIO driven and the cursor is in hardware. Nice!

… 3-clause BSD huh? Stripping this project down to use for other RP2040 computer projects is tempting. Of course it’s awesome as-is, just even better when someone else can hack on it too!

correcting myself, by ‘in hardware’ I meant… that by implementing the VGA using PIO, and having it handle the cursor, it’s *like* being in hardware. It’s software, of course.

Hey, just as long as the cpu doesn’t have to do it, it’s all good. :D

I couldn’t get the RP2040 to do quite everything – there’s no (easy) way to do the logical operations between frame buffer data and cursor data, so that’s done via an ISR. But the ISR is pretty simple, as the framebuffer read for the combination from the PSRAM is done by the DMA/PIO engines, as well as inserting the results into the video stream.

I couldn’t get the RP2040 to do quite everything – there’s no (easy) way to do the logical operations between frame buffer data and cursor data, so that’s done via an ISR triggered at vsync time. But the ISR is pretty simple, as the read to fetch the frame buffer data from the PSRAM is done by the DMA/PIO engines, as well as inserting the results into the video stream. So the ISR just does a shift to align cursor data and the logical operation to combine framebuffer data with cursor data.
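To make the "shift to align, then combine" step concrete, here is a rough sketch in Python rather than the project's own firmware code. Everything specific in it is an assumption: 32-bit framebuffer words, a 16-pixel cursor row, the leftmost pixel in the most significant bit, and a mask-plus-image combine. The comment above does not say which logical operation the real ISR uses, and the function name is invented.

```python
WORD_BITS = 32    # assumed width of one framebuffer word
CURSOR_BITS = 16  # assumed width of one cursor row
WORD_MASK = (1 << WORD_BITS) - 1


def overlay_cursor_row(fb_left, fb_right, cursor_mask, cursor_image, x_offset):
    """Combine one cursor row with two adjacent framebuffer words.

    x_offset is the cursor's pixel offset inside fb_left (0..31). The
    leftmost pixel is assumed to be the most significant bit of a word.
    Returns the two modified words as a tuple.
    """
    if not 0 <= x_offset < WORD_BITS:
        raise ValueError("x_offset must lie inside the first word")

    # Shift step: position the 16-bit cursor row inside a 64-bit window
    # that spans both framebuffer words.
    shift = 2 * WORD_BITS - CURSOR_BITS - x_offset
    mask64 = cursor_mask << shift
    image64 = cursor_image << shift

    # Logical step (assumed): keep the framebuffer where the mask is 0,
    # show the cursor image where the mask is 1.
    fb64 = (fb_left << WORD_BITS) | fb_right
    out64 = (fb64 & ~mask64) | (image64 & mask64)

    return (out64 >> WORD_BITS) & WORD_MASK, out64 & WORD_MASK
```

With a cursor that straddles a word boundary, for example `overlay_cursor_row(0xFFFFFFFF, 0xFFFFFFFF, 0xFFFF, 0x0000, 24)`, the low 8 bits of the first word and the high 8 bits of the second are cleared, which is why two words are handled at once.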

I love VMS on old DEC MicroVAXes. Very powerful scripting.

The workstations that I wanted (and had) in the 90’s (even the late 80’s) were Sun workstations. They blew the doors off of the comparable DECs. In 1993, we had a DEC Ultra that ‘appeared’ to be fast – but it turns out it was merely overclocked – the CPU drew 70 watts by itself and ran REALLY hot! Short-lived product, and DEC folded shop shortly after that.

hmm. a Next station on an rp2040 would be nice…

MMU, more RAM and maybe ROM (libc)

Lol, my next cube (mono) had 8 MB ram (8 sticks!) sure it had swap, but with 32, who needs swap?

I had 128MB in my NeXTStation Turbo Color :D

Why doesn’t the Raspberry RP2040 have a normal MMU?

I need normal Linux, not emulation.

Get an rpi0, maybe RPi Zero 2 W since the small price increase isn’t that meaningful for a hobby project. Then you can run Linux and have an MMU, and some more sophisticated peripherals.

But that’s the trade off when doing a microcontroller versus SoC.

If you want an application cpu, buy an application cpu.

You want to run ‘normal Linux’ on a 256KB chip? Good luck to you!

This is running emulated Linux on a virtual CPU, running a DECstation emulator on top. It’s a beautiful turtles-all-the-way-down tower of emulation.

“It’s worth remembering though that the software of the day was much less demanding and lacking in bloat. ”

One man’s bloat is another’s functionality.

Close, but more like “One man’s bloat is another’s cost and time to market.”

May you spend the rest of your working life debugging steaming piles of JavaScript.

And you can go back to hand assembling machine code! Everybody loses^H^H^H^H^Hwins!

Now back to the sandpit, boy! Hand-made assembly was actually readable! 😃

I wonder if it provides the full MicroVaxII Ultrix experience, where if you didn’t shut it down properly, it would spend half the morning FSCK’ing.

You could repeat that even on a PC.

We had a lab of 486DX66 PCs with Linux on them. The filesystem was ext2 (before journaling), and if a machine was shut down improperly, an FSCK run on a 170MB hard disk took about 30-40 minutes, up to an hour, I think?

The one person who found nothing smarter than powering the lab down by pressing ‘off’ button on breaker box suffered in the hands of the one who had to power up the lab next morning :-D

The workstation I wanted in the ’90s was a NeXTcube/NeXTstation! I still do.

BeBox for me. By the time I had enough money to get one, they were discontinued. Netwinder was another one I had my eye on back in the day, but I guess that’s more of a 2000’s workstation instead of a 90’s.

There is an emulator for NeXT called “Previous”, but it’s super old and not receiving regular updates. It could use a little love from some interested users (it’s GPL).

I used a standard VM and images from around the net. Needed to get color working.

I’ve played with ‘previous’. Not really impressed, kinda hard to use, definitely looking old, and, indeed, unmaintained. :-(

Previous is in continuous development and works really well nowadays. Unfortunately, there are some web pages online that provide outdated information and builds. It’s best to build previous from source, check out https://sourceforge.net/p/previous/code/HEAD/tree/branches/branch_softfloat/

Previous now also runs on the web thanks to emscripten, you can run a number of NeXTstep releases on https://infinitemac.org/ now. If you’re interested in previous development, please join the NeXT forums at http://nextcomputers.org/forums/

I have tried something from git in 2021 (not updated since): https://github.com/spillner/previous.git

And indeed subversion on sf, but using code/trunk.

Using svn in 2024… com’on. Only that makes it look so old/unmaintained: switch to mercurial and join the new century (avoid git, it’s cumbersome).

I did some quick testing. Current trunk doesn’t compile. Current branch “softfloat” doesn’t compile either, same errors it seems.

That being said, i previously managed to get it compiled, and i managed to boot (you need disk images, easy to google). The project kinda works.

NeXT OS is now called MacOS.

Apple failed (miserably) to write a decent OS on their own; in the end they just bought NeXT, after Be arrogantly priced themselves into bankruptcy.

I had a BeBox and several NeXTs. I wish I still had them. My BeBox was a dual 66MHz model so barely usable. My NeXTs I used daily till around 2010 lol

The readme spends an awful lot of time talking about the hardware but almost no time talking about what it emulates/compatibility, which is a huge red flag to me. And no Lance Ethernet support? Pass. I’ll stick with gxemul.

Aw, come on! It’s designed to run on a LinuxCard thing! It’s clear it’s meant as a silly, yet fun little project. 😃

Otherwise, it would have been based on a modular card design like these recent Z80 systems or 8088 XT boards.

You know, something expandable, in a form factor that can be taken seriously.

Something that’s not meant as a “gag” at a party, like one of these miniatures.

In ’94 I was a sysadmin for ~500 systems running Ultrix, SunOS, OSF/1, Irix, VMS, Solaris 2.2 and maybe HP-UX. There were a few X terminals from NCD, Visual. Lots of Decstation 2100, 3100 and 5000. We were switching to Sun Sparcstations (5 & 20s) running SunOS. We had a firewall on a 3100 (nothing was commercial yet) with proxies for FTP and HTTP and could run Mosaic.

I had a 3100 and upgraded to an HP X Terminal that would host on any of the 500+ systems. I was able to get a DEC 3000 (Alpha workstation) a little later. OSF/1 was nice. I definitely preferred it to Ultrix!

Admin was done w/ rsh (no ssh yet!) and we had something that could run 10 at a time in parallel. Writing shell scripts to run on 500 systems w/ various OSen was challenging! Bash wasn’t on every system (or stable yet), /bin/sh didn’t always have functions (Ultrix!) but we did have /bin/ksh and /bin/csh on all systems.
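The "10 at a time in parallel" idea can be sketched with a bounded worker pool. This is modern Python, not the tooling described above, and the function names are invented for illustration. It assumes an `rsh` binary is on the PATH; the runner is passed in as a parameter so the fan-out logic can be exercised without one.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def rsh_command(host, command):
    """Run `command` on `host` via rsh; return (rsh exit status, stdout)."""
    result = subprocess.run(
        ["rsh", host, command], capture_output=True, text=True, timeout=60
    )
    return result.returncode, result.stdout


def run_everywhere(hosts, command, runner=rsh_command, max_parallel=10):
    """Run `command` on every host, at most `max_parallel` at a time."""
    hosts = list(hosts)  # allow any iterable and keep a stable order
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # map() yields results in the same order as `hosts`
        results = pool.map(lambda host: runner(host, command), hosts)
        return dict(zip(hosts, results))
```

Something like `run_everywhere(hostnames, "uname -s")` would then return a dictionary keyed by host name, which is a convenient starting point for dealing with per-OS differences.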

RAID wasn’t a thing. We had lots of disks w/ many partitions for user /home across many systems. /home was symbolic links to NFS servers that might be your workstation or a server. You could login to any of the 500 systems with NIS . /usr/local was NFS mounted w/ support for OS/CPU in subdirectories. Packages were not a thing. My .profile would use uname -s and uname -m to set my PATH to have /usr/local/vax, /usr/local/mips, /usr/local/alpha, /usr/local/sun, /usr/local/solaris.
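The `uname`-driven PATH selection might look roughly like this. It is a sketch in Python rather than the original shell `.profile`. The subdirectory names come from the comment above, but the `(system, machine)` strings in the table are unverified placeholders, and the function names are made up for illustration.

```python
import os
import platform

LOCAL_ROOT = "/usr/local"

# Illustrative table only: the directory names are from the comment above,
# the (system, machine) keys are placeholders, not verified uname output.
PLATFORM_DIRS = {
    ("ULTRIX", "VAX"): "vax",
    ("ULTRIX", "RISC"): "mips",
    ("OSF1", "alpha"): "alpha",
}


def local_dir(system, machine, table=PLATFORM_DIRS):
    """Return the per-platform /usr/local directory, or None if unknown."""
    subdir = table.get((system, machine))
    return None if subdir is None else f"{LOCAL_ROOT}/{subdir}"


def extend_path(path, system, machine):
    """Prepend the platform directory to a PATH string if one is known."""
    extra = local_dir(system, machine)
    if extra is None or extra in path.split(":"):
        return path
    return f"{extra}:{path}"


if __name__ == "__main__":
    info = platform.uname()  # .system and .machine mirror uname -s / uname -m
    print(extend_path(os.environ.get("PATH", ""), info.system, info.machine))
```

Unknown platforms fall through with PATH unchanged, so one shared login file can be used on every machine without breaking on a new OS.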

Part of my job was compiling GNU and other source into /usr/local for all the users. Perl 4 and modules were also active.

We needed to track all those disks and partitions on all the systems so we could move users around. Each OS had a different way to get that info. We manually got the info, put it into a word processor (Interleaf) and printed it out for a physical bulletin board. When I was shown how to do it, it took 30 minutes per disk! I wrote a Perl script that we could rsh to every system. It would get its partitions into a standard format, then create an Xfig file of the label. Then it would arrange multiple labels onto pages & print them.

With that script I could gather the info on all *500* systems in < 30 minutes and print the labels (9 per page) in under an hour. That saved *lots* of time and was very helpful.

In the 80s I used to look at Tektronix graphics terminal ads like a playboy.

Just an unattainable dream.

I’ve thrown away better screens.

i’m way off topic but the screenshot at the top reminds me…up through 1997 i “only” had 4MB of RAM (and not that much more HDD!) so i avoided X11 and for a while i played with MGR. its “display server” was basically a windowed terminal emulator, not too different from screen. but it supported some “extended ANSI escape sequence” sort of language for vector graphics primitives and registering for mouse events.

not sure why but that still deeply appeals to me on some sort of aesthetic level even though i’ve never wanted to go back and actually use it :)

I too like MOTIF/TWM/CDE window managers!

They remind me of Windows 3! :D

When I first got into Linux, I *bought* a copy of Motif mwm. Must still have the tape somewhere…

Good times.

Probably because they were “clean”. I was managing many windows on my XP with the W95 theme or Slackware with the Redmond theme. Today I can’t recognize which window is where on my W11.

yeah i think cleanliness is at the heart of it…and to me it’s the cleanliness of the code, the interface, just very simple and self-contained, not a lot of features or room for shadows to lurk. but it’s the same as the cleanliness of the UI. if all you have is a text pipe for sending vector drawing commands and there’s no shared memory or GL stack or big graphics toolkit like GTK, then all your interfaces are gonna look like line-drawings. the simple technology is going to lead to a simple look. no mysteries. no depth. very satisfying :)

Love this project.

I hope that I can buy one with all the SMD components soldered.

In the mid 80s I set up sun sparc with multiple 19 inch mono graphics terminals as a front end to a vax cluster so my team could open multiple terminal window sessions to the vax. Team loved it.

Sun SPARC in the 1980s?

Considering SPARC shipped in 1987… Yeah?

In 1990 I had an NCD Xterm on my desk. 1024×1024 monochrome. Same size and resolution as a page from the laser printer. I came back from vacation to find it had been replaced by a SPARCstation 1+. I got my NCD back. Part of my job was building X and Motif from source for systems which did not yet offer them from the OEM, e.g. Sun & Alliant. I used twm and had a stack of terminal windows open on all the systems I worked on with a menu bar down the RHS which made it easy to select the system I needed to work on. $HOME was NFS mounted everywhere and I had a .login file that detected the system and set things so they all behaved the same way. Eventually that covered Sun, IBM, SGI, DEC, HP & Intergraph at another company. I had the admins “fold” the auto mount so /tool/bin pointed to the correct binaries for the Gnu utilities I built in my spare time for all the systems.

I miss the days when xclock took 80 kB instead of 80 MB.

Back in the day, after losing a Portfolio, I sought out and had a Dauphin DTR-1 that served great as a terminal I could take into closets and on planes. Used my bigger PC to build a kernel for it, got it pretty tight, as was the fashion of the day.

Before that, alongside the Portfolio, we had a Model 100 in the ops rack, which was sufficient. I wrote a lot of poetry and code on that thing and all the comm ports I could access with it, physically and by way of modem.

These days I am content with a foldable netbook with multitouch, some sort of GPD clone, but definitely pocketable, functionally complete, and enough ports with which to gain terms with much bigger metal….

In my attic I still have a gigantic SGI crt monitor with 5 coaxial video cables. I don’t know how to repair the cable (it is cut) but it would be cool.

https://ghostbsd-arm64.blogspot.com I enjoyed reading all of your thoughts about UNIX systems. Yes the RPI 2040 is nice. Look at the RaspberryPi 4B with 8GB , or 400 keyboard model and install https://freebsd.org/where 14.1 RPI image written into a USB flash drive stick (etcher.io or use dd).

https://download.freebsd.org/releases/arm64/aarch64/ISO-IMAGES/14.1/ or, to have working HDMI audio, use the GhostBSD-Arm64 image from the Telegram Desktop Arm Open-Source group files area.

https://ghostbsd-arm64.blogspot.com/2024/04/create-ghostbsd-usb-ssd-500gb-or-larger.html

Nice to plug in a USB keyboard (or use a Logitech K400 wireless media keyboard and touchpad) on a 28″ or 43″ HDMI television. Nice environment to use from your recliner chair and watch YouTube videos with great sound from the TV speakers.