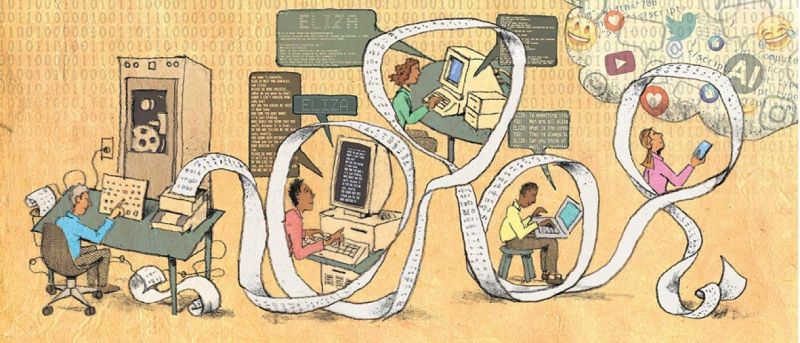

It would seem fair to say that the period from the second half of the last century to the present day has been firmly shaped by our relationship with technology, and with computers in particular. From the hulking behemoths at universities, to microcomputers at home, to today’s smartphones, smart homes and the ever-looming compute cloud, we all have a relationship with computers in some form. One increasingly underappreciated aspect of computers, however, is that the less we see them as physical objects, the more inclined we seem to accept them as humans. This is the point that [Harry R. Lewis] makes in a recent article in Harvard Magazine.

Born in 1947, [Harry R. Lewis] found himself at the forefront of what would become computer science and related disciplines, with some of his students being well-known to the average Hackaday reader, such as [Bill Gates] and [Mark Zuckerberg]. Suffice it to say, he has seen every attempt to ‘humanize’ computers, from ELIZA to today’s ChatGPT. During this time the line between humans and computers has become blurred, with computer systems becoming increasingly competent at imitating human interactions even as they vanished into the background of daily life.

These counterfeit ‘humans’ are not capable of learning, feeling and experiencing the way that humans can. At most they are a facsimile of a human in every respect except the one that actually makes us human, often referred to as ‘the human experience’. More and more of us communicate these days via smartphone and computer screens with little idea of, or regard for, whether we are talking to a real person. Ironically, it seems that by anthropomorphizing these counterfeit humans we risk becoming less human in the process, while also opening the floodgates for blaming AI when the blame lies squarely with the humans behind it, as in the recent Air Canada chatbot case. Equally ridiculous, [Lewis] argues, is the notion that we could create a ‘superintelligence’ by training an ‘AI’ on nothing but data scraped off the internet, as there are many things in life that cannot be understood simply by reading about them.

Ultimately, the argument is made that humanistic learning should be the focus of artificial intelligence, as only in this way could we create AIs that might truly be seen as our equals, and that are beneficial for the future of all.

“I have found that the reason a lot of people are interested in artificial intelligence is for the same reason that a lot of people are interested in artificial limbs: they are missing one.” and…

“Artificial intelligence has the same relation to intelligence as artificial flowers have to flowers.”

—David L. Parnas

The discussion about artificial intelligence is interesting because it poses the questions “what is intelligence?” and “what makes us different from a computer program with the same mental capabilities?”. I do understand this is scary, and it’s a perfectly normal reaction to try to demean this “AI” in order to feel safe about our own intelligence. In the end AI is just a tool, and we can do amazing things with it; yes, also bad things. It just depends on how we use these tools. NLP is one of the great gains: just tell a program what you want it to do, without having to adapt to the very limited and non-standardized input syntax of that specific program. Because NLP replies in a style that mimics human interaction, anthropomorphism will happen, but that’s a choice. The Star Trek computer did NLP, yet it sounded like a computer and was even called “Computer”. I do find it incredibly interesting how AI/LLM capabilities will evolve, and calling a program stupid or dumb is just anthropomorphism in itself ;)

People say that a lot – “it’s just a tool and it can be used for good or bad”. But it seems that thinking about how we’ll prevent the bad uses and encourage the good uses always comes later, if at all, after the horse has left the barn, the worms are everywhere, the evils have been released.

>“what makes us different from a computer program with the same mental capabilities”.

That’s begging the question that a computer program could have the same mental capabilities. First you have to answer, is intelligence computational in the first place?

That’s where the crowd splits in two, and the argument starts about whether anything can be non-computational (clockwork universe vs. nondeterministic physics). Even after you establish that, OK, the universe is not fully deterministic, the argument shifts to claiming that such effects are too weak to have any effect in a brain. It’s hard to accept that a mind is anything but a computer program if you’re coming from such a “Newtonian” point of view.

But if such a non-computational mechanism exists, then computer programs may always be just facsimiles and clever mockups of intelligence. A computer fundamentally cannot do some things, like throw a truly random number, so it cannot replicate some of the processes that are possible – we just don’t know whether those processes are responsible for “intelligence”.
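To illustrate the randomness point: the “random” numbers a program normally uses come from a pseudo-random number generator, which is a deterministic function of its starting seed. Below is a minimal Python sketch, assuming the standard-library `random` module (a Mersenne Twister generator); `draw_sequence` is a hypothetical helper written only for illustration.

```python
import random


def draw_sequence(seed: int, n: int = 5) -> list[int]:
    """Draw n 'dice throws' from a PRNG initialised with the given seed.

    Hypothetical helper for illustration only.
    """
    # random.Random uses the Mersenne Twister: the same seed always
    # yields the same sequence, because the algorithm is deterministic.
    rng = random.Random(seed)
    return [rng.randint(1, 6) for _ in range(n)]


# Two generators started from the same seed produce identical "throws".
first = draw_sequence(seed=42)
second = draw_sequence(seed=42)
print(first)
print(first == second)  # True
```

Real systems can work around this by mixing in physical entropy. One example is `os.urandom`, which draws on the operating system's randomness source, seeded from hardware noise. In that case the unpredictability comes from the physical world rather than from the computation itself.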

A couple of the best observations on AI I have read in a long time.

Sorry, was there some kind of meaningful or interesting content there?

Just the usual human exceptionalism. Monkey brains are magic and just because something imitates one in all possible ways, it’ll never actually be one because reasons. Boring.

You mean other than referencing an excellent article on the history of AI and the false anthropomorphizing that has been occurring for decades?

Makes me think of the effect of sudden, widespread access to books. Suddenly, we had access to the thoughts of people far away in space and time. Books are not the same as being able to talk directly with Plato or Hawking, but they at least give us a boost in insight. Perhaps AI is a misnomer, and should be seen as giving another boost to understanding.

No ‘perhaps’ about it. AI is a misnomer. Just a buzzword to sell it to the masses… Using the technical name(s) of the algorithms wouldn’t have the same pizzazz…

Altman said that, when he was just starting with OpenAI, people told him his approach would not lead to an AI.

I think they might have been right, but I’m not knowledgeable enough to know for sure, and it’s near impossible to get reliable analysis at this point.

Those chat interactions are cute, and OpenAI’s model does seem capable of genuinely helping to write code, but it’s of course not AGI, and I don’t expect it ever to become so with mere LLMs.

Hossenfelder said that she thinks real AI will come from first creating robots since you need interactions with reality to get to real AI. It’s an interesting view that makes you wonder.

I also wonder whether, if we were to reach real AI, we would get there via a well-thought-out road or through just one of many stabs in the dark with surprising results.

I also think that when people talk about AI at the moment, everybody ONLY talks about the OpenAI approach, and in many cases they are not even aware that there are surely various people working on different approaches to get there, albeit with a much, much lower budget, I expect.

“Hossenfelder said that she thinks real AI will come from first creating robots since you need interactions with reality to get to real AI. It’s an interesting view that makes you wonder.”

To me, that makes sense. We have all seen AI attempts at drawing physical objects based only on the LLM. For instance, without knowledge (?) of what a cylinder “looks” like, the corresponding output is gibberish.