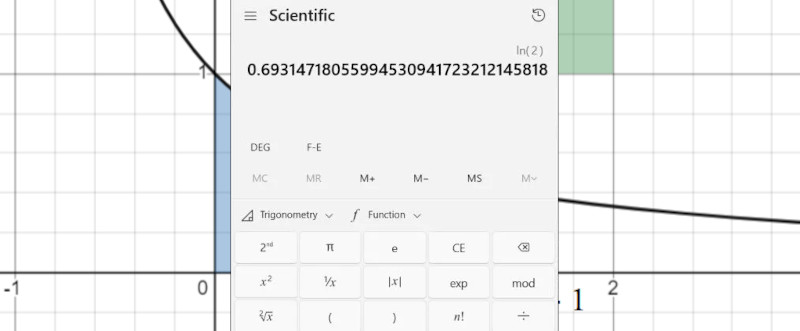

Logarithms are everywhere in mathematics and derived fields, but we rarely think about how trigonometric functions, exponentials, square roots and others are calculated after we punch the numbers into a calculator of some description and hit ‘calculate’. How do we even know that the answer which it returns is remotely correct? This was the basic question that [Zachary Chartrand] set out to answer for [3Blue1Brown]’s Summer of Math Exposition 3 (SoME-3). Inspired by learning to script Python, he dug into how such calculations are implemented by the scripting language, which naturally led to the standard C library. Here he found an interesting implementation for the natural algorithm and the way geometric series convergence is sped up.

The short answer is that fundamental properties of these series are used to decrease the number of terms and thus calculations required to get a result. One example provided in the article reduces the naïve approach from 36 terms down to 12 with some optimization, while the versions used in the standard C library are even more optimized. This not only reduces the time needed, but also the memory required, both of which makes many types of calculations more feasible on less powerful systems.

Even if most of us are probably more than happy to just keep mashing that ‘calculate’ button and (rightfully) assume that the answer is correct, such a glimpse at the internals of the calculations involved definitely provides a measure of confidence and understanding, if not the utmost appreciation for those who did the hard work to make all of this possible.

My high school math teacher taught how to calculate logs and trig functions using various series. I don’t remember anything about optimization though.

In the article we’re talking about computational optimizations, which I doubt any highschool math teacher would ever teach.

If you’re talking about optimization of an equation/function, again I wouldn’t expect a highschool teacher teaching that, that’s univ level stuff.

I’ve said it before and I’ll say it again, curve fitting, optimization, and basic statistics are very important and relevant in an engineering setting but few schools teach them.

Depends on the high school.

The “gifted” program some high schools have (had) do indeed explore such topics.

“had” in past tense because they were *forced* to end the programs in the name of

“equity” (was considered ‘racist’, since it ‘discriminated’ against people-of-color).

I don’t think we needed this.

No mention of the people who used to manually compute these functions to 8 figures and publish huge books of them:

(and their heroic typesetters…)

https://en.wikipedia.org/wiki/Jurij_Vega#/media/File:Houghton_Math_837.97_-_Logarithmisch-trigonometrische,_tabula.jpg

This was one of the ways FDR kept people employed during the Great Depression. The DoD needed log and trig tables (for pretty obvious reasons — think parabolas).Also, IIRC, those folks were actually referred to as “computers”.

Writing the code in Python makes it very hard to understand what;s going on. C would be much clearer, and writing the formula in a standard mathematical format would be even better.

Looking at a standard C math library can be confusing, as it has to handle all extremes and error conditions properly. If I recall correctly, it also sometimes has to carry out calculations to many more bits than the final result to assure the rounding is perfect.

A good introduction is The Standard C Library by P. J. Plauger. The Numerical Recipes books are OK for hacking but are sloppy in ways the authors hide. There are books consisting of nothing but expansions of math functions, etc.

I could one-up you and say if you really want to know what’s going on, do it in hardware. It’s totally cheating to just do “a=b/c;”.

The subject of numerical methods and algorithms in software is a minefield. All you need is one algorithm with some peculiar behavior under certain odd conditions and a whole system can unexpectedly blow up in your face! For ages, to make sure bullet-proof numerical methods and algorithms are being used you would try to employ something like the highly reputable and trusted “International Mathematics and Statistics Library” (IMSL).[1] The first IMSL Library for the Fortran language was released in 1970, followed by a C-language version originally called C/Base in 1991, a Java-language version in 2002 and the C#-language version in 2004. Today IMSL works with C, Java, C#/F#/.NET, and (of course) Fortran. There are Python wrappers to IMSL C Library functions (PyIMSL wrappers), and there is also something called PyIMSL Studio. Employing IMSL in a project may make it insurable, or at least reduce the cost of insurance premiums. Since 2019 IMSL has been owned by the Minneapolis, Minnesota – based application software developer Perforce.[2][3]

* References:

1. IMSL Numerical Libraries

https://en.wikipedia.org/wiki/IMSL_Numerical_Libraries

2. Perforce Software, Inc.

https://en.wikipedia.org/wiki/Perforce

3. Perforce’s IMSL Celebrate 50+ Years as the Trusted Source for Advanced Mathematical and Statistical Algorithms

https://www.perforce.com/press-releases/perforce-imsl-celebrate-50-years

I recommend this talk by Raymond Hettinger about the insides of the math modules in python:

https://www.youtube.com/watch?v=wiGkV37Kbxk

how change exp() to logarithm?

for example in neural network is sigmoid function, how creating sigmoid on logarithm and sigmoid’ dx

Base 2 logs are the easiest (but not quickest) to compute manually with just a 4 function calculator. First, just keep dividing your number by 2 until you get a result between 1 and 2, the number of divisions = the whole number part of the log (for numbers 5, 2.5, 1.25 so it’s 2.something. 1.25²=1.5625 (10.0…₂) squared=>2.44.. (result is 10.01..₂ & ÷2) 1.22..²=1.49.. (10.010..₂), 1.49..²=2.22..₂ (10.0101..₂ & ÷2), 1.11..²=1.235.. (10.01010..₂) 1.235..²=1.519.. (10.010100..₂) and the next one will be 1, because 1.519²>2. So, 10.0101001₂. Let’s check by converting to decimal (2.3203125), and calculating 2^ that = 4.9944, so pretty close.

With √ on our calculator we can work out Exp₂(x). We start with 1 and double for the number of times in the integer part; then for each binary-point place we start with √2 and [multiply the current result by the current √ if the corresponding digit=1, then √ the current √ and repeat] for each new digit. Thus 10.0101001 => 2*2 (10.) = 4 x √√2 x √√√√2 x √√√√√√√2, because only the second, fourth and 7th binary places are set = 4.9944.. as before.

Convert the number to binary, shift right until there’s only a single 1, incrementing in the loop. (Sometimes called a floor shift approximation)

Then take the bits you shifted away, and use them to get the fractional parts. For a poor man’s approximation, just tack them on: that’s piecewise linear. For more accuracy, do a polynomial fit (equivalent to what’s done in the article if you do a bit more trickery!).

For instance, 15 is 1111. 3 shifts to the last 1, so integer part is 3. Bits shifted out are 111, and 0.111 is 0.875, so 3.875. Actual answer is 3.907… etc.

The one funny thing in the article is square roots are hard but dividing is easy: dividing isn’t fundamental! It’s a pain in the neck! It was just about as hard as a square root in hardware.

My recollection is that both square root and division can be/are computed with Newton’s method, and in some (early?) processors they (re)use the same hardware as a result.

Newton’s method for a square root uses division internally, I think (so it’s technically more complicated) but there are other (slow) reciprocal algorithms that are basically identical to square root – and technically, since division is reciprocal + multiply, the square root ends up being cheaper.

Old processors (like the 8086) actually do division the dumbest way possible (repeated subtraction).

It always bugs me though when people treat division like it’s fundamental. It’s not: add, subtract, multiply are all maps from integers to integers. Division isn’t. There’s a reason why ARM avoided a division instruction so much.

If you are interested in how to calculate these sort of functions with as little overhead as possible, there are a couple of books I would recommend:

Math Toolkit for real time programming by Jack Crenshaw:

https://www.routledge.com/Math-Toolkit-for-Real-Time-Programming/Crenshaw/p/book/9781929629091

and Hacker’s Delight by Henry S Warren:

https://dl.acm.org/doi/10.5555/2462741

Both are a great read

I am so comfortable and delighted to be here in Nerdville. My wife has a picture of me on our honeymoon while I’m sitting up in bed reading ‘A first course in Numerical Analysis ‘ by Ralston and Rabinowitz. I always felt the numerical analysis was a kind of wonderful visualization tool that helped us see things that were otherwise invisible.

Computer arithmeticians used bit-by-bit computations for calculation of elementary functions since about the start of electronic calculators. Newton’s method not used to compute a square root.

Rather bits are shifted left to right to see if answer to is too big.

University of Illinois ILLIAC-II project leader’s student Bruce deLugish phd thesis addresses this technology.

i am happy to leave the computation of log and sin as an obscure tedious art i thankfully don’t have anything to do with. but this article reminds me of the last time i saw someone calculate a logarithm by hand, which happened to be insanely awesome. Feynmann lectures vol 1 chapter 22 “algebra”:

https://www.feynmanlectures.caltech.edu/I_22.html

in one chapter, he covers the entirety of algebra from beginning to end, and in the middle he incidentally calculates logarithms just as a way to convince the reader of one of his lemmas. it’s absolutely fantastic stuff, and the punchline will surprise anyone who isn’t already familiar with the euler formula. can’t recommend it enough. the single best math essay i’ve ever read.

That was an absolutely great read. I especially love how close it was to describing the basics of CORDIC in action.