You often hear that Bill Gates once proclaimed, “640 kB is enough for anyone,” but, apparently, that’s a myth — he never said it. On the other hand, early PCs did have that limit, and, at first, that limit was mostly theoretical.

After all, earlier computers often topped out at 64 kB or less, or maybe 128 kB if you had some fancy bank switching. It was hard to justify the cost of that much memory. Before long, though, 640 kB became a real limit, and the industry found workarounds. Mercifully, the need for these eventually evaporated, but for a number of years, they were a part of configuring and using a PC.

Why 640 kB?

The original IBM PC sported an Intel 8088 processor. This was essentially an 8086 16-bit processor with an 8-bit external data bus. This allowed for cheaper computers, but both chips had a strange memory addressing scheme and could access up to 1 MB of memory.

In fact, the 8088 instructions could only address 64 kB, very much like the old 8080 and Z80 computers. What made things different is that they included a number of 16-bit segment registers. This was almost like bank switching. The 1 MB space could be used 64 kB at a time on 16-byte boundaries.

So a full address was a 16-bit segment and a 16-bit offset. Segment 0x600D, offset 0xF00D would be written as 600D:F00D. Because each segment started 16 bytes after the previous one, 0000:0020, 0001:0010, and 0002:0000 were all the same memory location. Confused? Yeah, you aren’t the only one.
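If the arithmetic helps, here is a minimal Python sketch of the translation. The function name `linear_address` is made up for illustration; the only thing it assumes is the shift-by-four-and-add rule described above, plus the wrap at 1 MB that comes from the 8088 having 20 address lines.

```python
def linear_address(segment: int, offset: int) -> int:
    """Return the 20-bit physical address for a segment:offset pair."""
    # The segment is multiplied by 16 (shifted left 4 bits) and added to the
    # offset. The mask models the 8088's 20 address lines (wrap at 1 MB).
    return ((segment << 4) + offset) & 0xFFFFF


# The three aliases from the text all land on the same byte.
for seg, off in [(0x0000, 0x0020), (0x0001, 0x0010), (0x0002, 0x0000)]:
    print(f"{seg:04X}:{off:04X} -> {linear_address(seg, off):05X}")

# The 600D:F00D example from the text.
print(f"600D:F00D -> {linear_address(0x600D, 0xF00D):05X}")
```

All three aliases print `00020`, and 600D:F00D works out to `6F0DD`.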

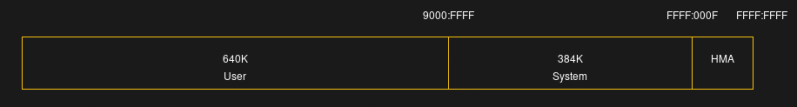

What happened to the other 384 kB? Well, even if Gates didn’t say that 640 kB was enough, someone at IBM must have. The PC used addresses above 640 kB for things like the video adapter, the BIOS ROM, and even just empty areas for future hardware. MSDOS was set up with this in mind, too.

For example, your video adapter used memory above 640 kB (exactly where depended on the video card type, which was a pain). A network card might have some ROM up there — the BIOS would scan the upper memory looking for ROMs on system boot up. So while the user couldn’t get at that memory, there was a lot going on there.

What Were People Doing?

Speaking of MSDOS, you can only run one program at a time in MSDOS, right? So what were people doing that required more than 640 kB? You weren’t playing video. Or high-quality audio.

There were a few specialized systems that could run multiple DOS programs in text-based windows, DesqView and TopView, to name two. But those were relatively rare. GEM was an early Windows-like GUI, too, but again, not that common on early PCs.

However, remember that MSDOS didn’t do a lot right out of the box. Suppose you had a new-fangled network card and a laser printer. (You must have been rich back then.) Those devices probably had little programs to load that would act like device drivers — there weren’t any in MSDOS by default.

The “driver” would be a regular program that would move part of itself to the top of memory, patch MSDOS to tell it the top of memory was now less than it was before, and exit. So a 40 kB network driver would eat up from 600 kB to 640 kB, and MSDOS would suddenly think it was on a machine with 600 kB of RAM instead of 640. If you had a few of these things, it quickly added up.

TSRs

Then came Sidekick and similar programs. The drivers were really a special case of a “terminate and stay resident” or TSR program. People figured out that you could load little utility programs the same way. You simply had to hook something like a timer interrupt or keyboard interrupt so that your program could run periodically or when the user hit some keys.

Sidekick might not have been the first example of this, but it was certainly the first one to become massively successful, and it helped put Borland on the map. Borland was, or soon would be, best known for Turbo Pascal and Turbo C.

Of course, these programs were like interrupt handlers. They had to save everything, do their work, and then put everything back or else they’d crash the computer. Sidekick would watch for an odd key stroke, like Ctrl+Alt or both shift keys, and then pop up a menu offering a calculator, a notepad, a calendar, an ASCII table, and a phone dialer for your modem.

Sidekick caught on and spawned many similar programs. You might want a half dozen or more resident programs in your daily MSDOS session. But if you loaded up a few TSRs and a few drivers, you were quickly running out of memory. Something had to be done!

EMS

EMS boards provided “expanded memory.” There actually were a few flavors, not all of which caught on. However, a standard developed by Intel, Microsoft, and Lotus did become popular.

In a nutshell, EMS reserved — at least at first — a 64 kB block of memory above the 640 kB line and then contained a lot of memory that you could switch in and out of that 64 kB block. In fact, you generally switched 16 kB at a time, so you could access four different EMS 16 kB pages at any one time.
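To make the page-frame idea concrete, here is a toy Python model. It is only a sketch of the scheme as described above (16 kB pages, four slots in a 64 kB frame). The class and method names are invented for illustration and do not correspond to the real EMS driver interface.

```python
PAGE_SIZE = 16 * 1024   # EMS pages are 16 kB
FRAME_SLOTS = 4         # four slots make up the 64 kB page frame


class ExpandedMemory:
    """Toy model of an EMS board: many 16 kB pages, four visible at once."""

    def __init__(self, total_pages: int):
        self.pages = [bytearray(PAGE_SIZE) for _ in range(total_pages)]
        # mapping[slot] is the logical page currently visible in that slot.
        self.mapping = [None] * FRAME_SLOTS

    def map_page(self, slot: int, logical_page: int) -> None:
        """Switch a logical page into one of the four frame slots."""
        if not 0 <= slot < FRAME_SLOTS:
            raise IndexError("slot outside the page frame")
        if not 0 <= logical_page < len(self.pages):
            raise IndexError("no such logical page")
        self.mapping[slot] = logical_page

    def _locate(self, frame_offset: int):
        """Translate an offset within the 64 kB frame to (page, offset)."""
        if not 0 <= frame_offset < PAGE_SIZE * FRAME_SLOTS:
            raise IndexError("offset outside the page frame")
        slot, offset = divmod(frame_offset, PAGE_SIZE)
        page = self.mapping[slot]
        if page is None:
            raise RuntimeError("nothing mapped in that slot")
        return self.pages[page], offset

    def read(self, frame_offset: int) -> int:
        page, offset = self._locate(frame_offset)
        return page[offset]

    def write(self, frame_offset: int, value: int) -> None:
        page, offset = self._locate(frame_offset)
        page[offset] = value


ems = ExpandedMemory(total_pages=64)   # 64 x 16 kB = 1 MB on the board
ems.map_page(0, 10)
ems.write(0, 0x42)                     # lands in logical page 10
ems.map_page(0, 11)                    # same address, different memory
print(ems.read(0))                     # page 11 is still zeroed
ems.map_page(0, 10)
print(hex(ems.read(0)))                # the old data is back
```

The point of the sketch is that the program only ever sees 64 kB of addresses; everything else is bookkeeping about which page sits behind them.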

This was complex and slow. The boards usually had some way to move the block address, so you had to take that into account. Later boards would offer even more than 64 kB available in upper memory or even allow for dynamic mapping. Some later boards even had sets of banking registers so you could context switch if your software was smart enough to do so.

EMS was important because even an 8088-based PC could use it with the right board. But, of course, newer computers like the IBM AT used 80286 processors and, later, even newer processors were common. While they could use EMS, they also had more capabilities.

Next Time

If you had a newer computer with an 80286 or better, you could directly access more memory. Did you notice the high memory area (HMA) in the memory map? That’s only for newer computers. But, either way, it was not fully supported by MSDOS.

Many boards for the newer computers could provide both EMS and just regular memory. The real issue was how to use the “regular memory” above the 1 MB line. I’ll tell you more about that next time, including a trick independently discovered by a number of hackers at about the same time.

I had a 386 with options in the BIOS to assign some of the memory to hardware EMS. Probably a C&T NEAT (CS8281?) but I don’t remember.

I had 6 of those 386sx33 motherboards with 4MB – 16MB of RAM in each of them. But I ended up letting go of them in the early 2000’s because I didn’t have space for a retro computer collection. Big mistake, those boards would have been valuable to anyone putting together a retro game system. The turbo button was sufficient for getting Wing Commander to run correctly. And it could be assigned to a hotkey in BIOS.

What were people doing? RAM disks. If you wanted your Turbo Pascal to compile quickly, you configured some of your memory to act as a floppy disk, but a really, really fast one. Even if you had a hard disk, the RAM disks were quite a bit faster. Compiles took seconds rather than multiple minutes. Of course, the data on the RAM disk would vanish when the computer crashed, so you had to save to a more permanent medium, but for some applications it was magic.

When CAD became possible on PCs, extended/expanded memory on DOS was a necessity. But this is getting ahead of the story, as you couldn’t do CAD until 286 at least.

One of the funny bits of trivia about early AutoCAD versions is that they were ‘parasite’ operating systems that did all sorts of bizarre and interesting things to make MS-DOS ‘sit down, shut up, and get off my lawn!’ DOOM did similar tricks, but not to the same extent.

Yeah, I didn’t start with AutoCAD until r11. I was on VAXes with PCs for Tektronix graphic terminal emulation before that.

Hello! To my knowledge, AutoCAD 2.x released in mid-80s runs on an original IBM PC with 8088 and no 8087, at the very least.

Though a 286 was being highly recommended for serious work at the time, so I partially do agree. :)

A math co-processor makes a big difference, too, no matter if 8088/8086 or NEC.

A humble PC/XT with x87 did outperform an AT or slow 386, even.

So in the later years, when Turbo XTs became more common and the 8087 had dropped in price, CAD was once again making sense on PCs and PC/XTs.

Later AutoCAD versions did require a math co-processor or an emulator.

I think versions R9 and R10 were those.

By contrast, AutoSketch 2 (and 3) shipped with two disks in the box, plain 8086 version and 8086+8087 version.

The DOS versions of R11/R12/R13 and some versions of R10 were now 32-Bit (AutoCAD 386), I think.

The Windows 3.x versions used Watcom Win386 Extender and had required a 386 or higher, too.

It may depend on what kind of CAD you’re talking about. Where I was working from 1985-92, I started out doing PCB layout by hand. When we decided to go for CAD, we shopped around, got lots of demo discs, and lacking experience in CAD, all we could finally conclude was the OrCAD seemed to have been out longer than the others, so it probably had things together better. Wrong! Wow, it had more bugs than an ant hill; and each update to fix a bug seemed to introduce two more. When I joined a small start-up company after that, I had no temptation to bootleg a copy of OrCAD. Instead, I shopped around again, now having some experience behind me. Our budget was very small. My first choice was MaxiPC, at about $2K, and a close second was EazyPC Pro, from Number One Systems in England, at only $375. These were DOS-based, and I think they both worked with an 8088. They also had a non-Pro version that was $99 IIRC, but although it was written in assembly language and did screen re-draws nearly instantly on a low-end PC, it simply did not have enough capability for what we needed. The company got me the Pro version. On that, with a ‘286 with 1MB of RAM (640KB for the user’s DOS applications), I did PC boards up to 12 layers and 500 parts, without running out of memory. I still use it sometimes, under DOSbox-X, on my Linux machine. (It produces the older Gerber and Excellon file types, but I have a quick way to convert them to the newer standard, pulling a few tricks in the process, to accomplish things this CAD wasn’t originally intended to be able to do.) This CAD had bugs too, but I and one other intensive user in the US kept reporting them, and the company was very responsive in fixing them, without introducing new ones in the process.

I’m surprised to hear that OrCAD let you down. I used it intensively from 1989, through their 386+ releases, all the way until they abandoned it for the catastrophically bad Windows offering in the mid ’90s.

I have very fond memories of the DOS OrCAD system. In fact, coincidentally, I found a box of floppies only last weekend and got it running again under Windows 11 (to be fair, an XP VM was in the mix!) and loaded up some old designs, just to see how much I’ve improved my skills since the early days. Sadly, I don’t think I have 😂 It was nice to see my old friend running again. The muscle memory was still intact.

Hi. A printer spooler was also a memory-hungry application.

Especially in a network environment.

Btw, EMS also worked with a swap file.

Here, LIMulators supported EMS 3.2 for plain data storage.

“Above Disc” was such an example.

Btw. there also was a freeware program named “EMM286” in the early 90s (not EMM386).

It simulated EMS (LIM4) on a 286 through XMS/Himem.sys.

It was slow because it used copying rather than mapping.

But to users without chipset EMS it was useful.

(Windows didn’t work with it as far as I know, but some graphics programs, CAD software and databases could use it.)

The Amiga had a “RAD disk” which was RAM that you could partition so it would survive a power cycle. IIRC you could install the whole OS plus all your software (assuming plenty of RAM) and it would be super fast, at least until you lost power.

Typo? Should be 640 kB line, right?

Aggh…fixed

While we’re being pedantic, it’s SIMMs not SIMs. Single in-line memory modules.

Reminds me of when you had to low level format an MFM drive through Debug using G=C800:5

Oh, gawd… LOL. Thanks for causing that memory to resurface from the cobweb infested corners of my brain!

Hi, this often worked with 8-Bit MFM/RLL controller cards, because they had their own HDD BIOS (as an option ROM).

The 16-Bit models usually relied on PC/AT BIOS.

Nowadays, we’re using XT-IDE Universal BIOS instead.

The 8-Bit XT-IDE cards are popular, too.

They all use a CompactFlash card or an IDE drive.

Back in the day I wrote a TSR that would read the user’s position in a text graphic based online game, and would calculate the angle and distance so it could drop a bomb on that specific location. (You’d move your tank to the location, hit a key and bomb yourself. Cost a tank but won you the game.)

A friend of mine wrote a TSR that would just scan the entire block of memory for the map and automatically drop a bomb in the enemy base. Needless to say, he won every round he ran the TSR.

Zoid would later work in the gaming industry.

Heh. I wrote a TSR that would dump the MCGA frame buffer to a file when PrScr was pressed. IIRC it would dump the palette too.

640KB is an arbitrary limit. XT-based computers could have up to 736KB of conventional memory if you had the right motherboard. EGA/VGA adapters put their buffer at A000, which limited conventional memory to 640KB. On an XT with MDA the memory is at B000, so you could have 704KB, but with CGA the frame buffer was at B800 and thus you could get another 32KB of RAM on many boards. Some XT boards were equipped with 3 SIMM slots (768KB) and would enumerate that much.
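For anyone who wants to check those numbers, this is a small Python sketch. The table and function names are made up for illustration; the segment values are the ones quoted in the comment above, and the only assumption is that contiguous RAM can run right up to the start of the lowest video buffer.

```python
# Frame buffer start segments as given in the comment above.
VIDEO_BUFFER_SEGMENT = {
    "EGA/VGA": 0xA000,
    "MDA": 0xB000,
    "CGA": 0xB800,
}


def max_conventional_kb(adapter: str) -> int:
    """Contiguous RAM below the adapter's frame buffer, in kB."""
    # A segment value times 16 gives the physical address of the buffer.
    return (VIDEO_BUFFER_SEGMENT[adapter] * 16) // 1024


for adapter in VIDEO_BUFFER_SEGMENT:
    print(f"{adapter}: {max_conventional_kb(adapter)} kB")
```

That gives 640 kB for EGA/VGA, 704 kB for MDA, and 736 kB for CGA.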

+1

OS/2 Warp had offered 736KB for DOS boxes, if the user was okay with being limited to CGA graphics.

There’s an option in the settings for DOS and Win-OS/2..

Many MS-DOS compatibles had 704KB of RAM as standard or supported it.

Such as the BBC Master 512 or Sharp PC 7100.

The PC-Ditto emulator for Atari ST featured 703KB of RAM.

With a CGA+MDA (or Hercules) dual-monitor configuration, 704KB are possible without issues.

VGA cards can also be used to increase memory to 704KB or 736KB of RAM.

With the limitation that merely text-mode and CGA graphics mode can be used.

Products such as QRAM or QEMM had included utilities to make EGA/VGA framebuffer in A segment usable as conventional memory.

This “hack” was useful to get compilers with large projects going that otherwise ran out of memory.

Speaking of hacks, users in the 80s did hit the memory limit really soon.

Power users in ~1987 already tinkered with UMB cards and tried to upload drivers into UMA.

This was when PC-DOS 3.30 was current. It’s not a new phenomenon.

They used hardware such as the HiCard and loadhigh software.

Way back in 1985 public domain utilities existed that updated the BIOS to see 704KB of RAM (if physically available).

After a soft reset, 704KB was reported to DOS.

This was years before DR DOS 5 had introduced UMB/HMA support circa 1990.

I’m not sure that the “640K” Gates comment is a myth. I can’t cite any sources. But I can certainly see Gates talking to the IBM engineers about the architecture and making that “640K” comment as the engineers decided to reserve the upper 384K for ROMs and expansion cards.

Did Bill Gates or Microsoft influence the first IBM PC’s hardware design? I would think it far more likely that IBM worked on the hardware first and then told Microsoft how to interface to it. Besides, you could also get CP/M-86 for the first PC.

It’s just roughly a 2/3 split between user/system. The 1 MB total comes from the processor itself, so the only thing you’re left with is deciding that split.

Systems still do this today, you just don’t notice because 1. the address space is so huge and 2. paging allows reserved chunks of memory to be wherever you want anyway. /proc/iomem looks hilarious nowadays.

What’s funny is the “[EMS swapping memory in and out] was complex and slow” comment considering paging is basically the same thing on steroids.

The EMS boards with backfilling option were almost like MMUs:

The PC RAM, except for a minimum required for booting, was being moved from mainboard over to the EMS board.

Under DESQview it then was possible to swap in/out almost all conventional memory along with big applications.

If you swapped 512KB of RAM, 2 MB of EMS RAM was a practical minimum, I assume.

I think you might have misunderstood my comment. The 640kB RAM, 384kB IO split is a hardware design decision, because IO can be placed as low as 0xA000:0x0000. One of the comments here says you can squeeze out a bit more if you use an MDA video card, but the principle still holds.

I’m pretty sure that IBM made that decision prior to Microsoft’s involvement and besides, why would IBM listen to MS’s hardware design ideas when MS were just a small software company and PC-DOS was just one option? Also, it’s fairly well-known that IBM thought of the 5150 as their proper entry into micro computing, not as the architecture that would dominate personal computers for decades. Also, computer churn was very high in the late 1970s and early 1980s – what basis did IBM have for thinking their PC would last longer than a couple of years? So, IBM had little need to anticipate more than 640kB and little need to consult Microsoft about it.

Assume that typical memory requirements grew at about 0.5 address bits per year and that 64kB was normal for a business machine in 1980. Then 512kB is 6 years’ worth of lifetime.
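Spelling that estimate out as a quick Python sketch: the growth rate and the 64kB starting point are the assumptions stated in the comment, and the function name is made up for illustration.

```python
import math

BITS_PER_YEAR = 0.5   # assumed growth rate from the comment above
BASELINE_KB = 64      # assumed typical business machine in 1980


def years_until(target_kb: float) -> float:
    """Years for typical memory needs to grow from the baseline to target_kb."""
    # Each doubling is one more address bit, so count the doublings first.
    extra_bits = math.log2(target_kb / BASELINE_KB)
    return extra_bits / BITS_PER_YEAR


print(years_until(512))            # 3 extra bits at 0.5 bits/year
print(round(years_until(640), 1))  # the full 640kB buys a little longer
```

Going from 64kB to 512kB is three doublings, hence six years. The full 640kB stretches that to roughly six and a half.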

Onto EMS swapping. Yes, it’ll be slower than using segmentation. There’s two main reasons for that. Firstly, 8086 segmentation allows a program to directly access any amount of code, far call and far ret are as efficient as they need to be. But you can’t do that if you try to run code in expanded memory, because calls and returns need to be to a different window and saving and restoring the bank for a given window needs at least 4 more instructions:

    in ax,[DstWindow]
    push ax
    mov ax,TargetBank
    out [DstWindow],ax
    far call DstWindow+FuncOffset ;now IP:CS:OldDstBank are on the stack.

Return is the reverse.

Data access has similar problems. You need 32-bit pointers again, but they’re split up in a different way, on 16kB boundaries. You’re also limited to accessing 16kB at a time, so arrays and data structures are most likely constrained by that, whereas with segmentation they can be a full 64kB each and start on any 16-byte boundary. Switching banks takes 2 instructions (load the new bank value, out it to the window) compared with 1 with segmentation (mov es,).
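A rough Python sketch of the two ways of splitting the same 32-bit linear position may help. The function names are hypothetical, and the "EMS pointer" here is just a page number plus an offset, not any real driver structure.

```python
EMS_PAGE = 16 * 1024  # EMS bank size


def ems_pointer(linear: int) -> tuple[int, int]:
    """Split a linear position into (16 kB page number, offset in page)."""
    return divmod(linear, EMS_PAGE)


def segment_pointer(linear: int) -> tuple[int, int]:
    """Split a linear address into a normalized segment:offset pair."""
    # Normalized means the offset is kept below 16, so the segment carries
    # as much of the address as possible.
    return linear >> 4, linear & 0xF


page, page_off = ems_pointer(0x12345)
seg, seg_off = segment_pointer(0x12345)
print(f"EMS: page {page}, offset {page_off:04X}")
print(f"8086: {seg:04X}:{seg_off:04X}")

# A 20,000-byte array starting at that position crosses a page boundary,
# so the bank has to be switched part-way through walking it.
first_page, _ = ems_pointer(0x12345)
last_page, _ = ems_pointer(0x12345 + 20_000 - 1)
print(first_page != last_page)
```

With segmentation the same array fits behind a single segment value, because any 16-byte boundary can start a fresh 64kB window.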

As challenging as segmentation is, EMS is far more challenging.

When the first IBM PC was designed, it was normal that newer models made were incompatible with previous ones from the same manufacturer. Tandy and Commodore had incompatible models sold at the same time. The IBM PC was designed in a rush, and the idea was clearly to evolve the system to a better and more powerful one.

What happened was that a lot of software was made for the IBM PC and required hardware compatibility.

Apple had the same problem with the Apple II when it made the Apple III, but with the Macintosh it made a totally incompatible system and continued to make the Apple II and a compatible IIGS. With the Macintosh LC models it was possible to add an Apple II emulation card to run older software, so Apple was able to transition the user base to the new platform.

With IBM there were a lot of clones that made compatibles. When IBM tried to upgrade the architecture with the PS/2 line, it failed. The idea for IBM was to have systems that could run in compatibility mode (with the CBIOS and PC DOS) but also use a new operating system and a different BIOS running in protected mode (ABIOS and OS/2).

That plan failed for a lot of reasons, so the IBM PC architecture became quite complex.

Not sure you understood my response: I’m saying that it’s not really even an IBM decision. It’s Intel. You’re starting out with a 1 MB address limit. You want memory-mapped devices in there, so you have to decide a user/system split, and a 2/3 user 1/3 system split was a pretty common split.

As the other poster mentioned it was pretty normal that each time you got a new or different model, things were incompatible. No one expected the PC to blow up with clones and such. Once it did and it expanded into the future to more advanced processors, the 640 kB limit became an obvious limit. But at the time it had more to do with the processor than what people would “need.”

I mean, if IBM had made it 75/25, you’d just end up with “768k should be enough for anyone.” I mean, we bumped into memory limits below 4 GB with 32-bit processors due to memory mapping, too. The only difference now is the doubling to 64-bits makes the memory space absurd.

I remember the time I stripped a pallet full of government-surplus TRS-80 Model 3s of their 41256 and 414256 chips to fully populate an EMS board (as well as update the mainboard and VGA card) for my first 8086 computer. Cost me all of about $5 for the pallet at a time where the chips were fairly expensive retail.

Anyway… this worked fine until one day his wordperfect (word processor) ended up using enough memory to exceed 320k…. So…. back in those days it was possible for a wordprocessor to use more than half of 640k. Bit bigger document and you’d be able to hit 640 just fine.

It seems the “quote” didn’t work as I intended. Sorry.

Back in the day I wrote a memory test to pick this specific error up. Oh those far simpler days.

Why did this article get a photo of a display controller?

It’s a fun article. In the mid-80s, before I’d ever used an IBM PC (we were still using 8-bit computers, perhaps with some bank-switching), I couldn’t really comprehend how the 8088 used segments to address memory.

Then someone at the local computer club (the Nottingham Microcomputer Club, UK), explained that the 8088 simply takes the value of a 16-bit segment register, multiplies it by 16 and adds a 16-bit offset.

EA=SegmentReg*16+Offset

And then I understood how awful it was. On top of that there isn’t a segment register for each address register, but just 4 global segment registers which the programmer can mix and match with 4 address registers; 4 combinations of a pair of address registers and/or offsets. Complex, and weird.

I think segmented memory is somewhat different to bank-switched because it does at least expand the address space. Bankswitching limits access of code and data to 64kiB at any one time. However, an 8086 can usefully address pretty much any amount of code and at least 128kB of data, before Large model complexities really kick in. In that sense it’s mimicking the split code/data address space of the pdp-11.

Expanded memory of course is simply bank-switched in a 64kiB address space and classic bank-switching tricks were needed to run code from it.

Hi there! EMS 3.2 was limited to 64KB, but EEMS/LIM4 wasn’t anymore.

Given LIM4 hardware, an EMS manager could provide, say, a 256KB page frame.

EMM386 did this for Windows 3.x if you had run MemMaker and had chosen to support Windows..

(Windows 3.x knows Small-Frame EMS and Large-Frame EMS.)

But anyway, we’re in the 90s now..

In the 80s, EMS support in 286 chipsets was rather basic (but still NEAT).

EMS 3.2 hardware with LIM4 memory manager, in principle.

By contrast, dedicated EMS boards from late 80s were often LIM4 capable.

Such as AST Rampage series (AST made EEMS).

Speaking under correction here.

About the x86 segment thing.

It was useful for machine translating of 8080 code, I think.

Many early PC-DOS programs were ported over from CP/M-80 platform.

That’s why A20 Gate was needed, too, to support CALL5 interface.

That being said, in practice, DOS was doing all the hard work most of time.

It had an executable loader that tried to take care of the worst problems and patch the known trouble makers (applying loadfix etc).

That’s why the later DOS 5/6 versions can be useful on PC/XTs and old ATs, even.

It’s not just about the HMA feature or FAT16 all the time.

For a long time, most users (hobbyists) wrote little COM programs and never had to leave their segment.

Turbo Pascal 4 (DOS) was first to support EXE files, Turbo Pascal 3 used COM files and supported overlay files.

QuickBasic 4.5 uses one segment, too, but can be forced to switch it.

PDS 7.x supports EMS and has better memory management.

Re: EMS 4. So, if EMS 3.2 is 4x 16kB windows (making 64kB), then EMS 4 is basically 4x 64kB windows (making 256kB). But it’s still going to be more clumsy than the 8086/8088’s native segmentation, because you still have to select a segment for the EMS area and also write to the bank registers on the EMS card (or NEAT chipset).

About the “x86 segment thing”. People make up all sorts of excuses for the segmentation design. It’s possible that segments made porting 8080 code easier, but 256b segment boundaries would have been just as good for that and better for large model code (16MB addressing, no need to shift by 4, a shift by 8 is easier). The major reason though for segments was that Intel were a fan of the operating system MULTICS and had already made the iAPX432 segment-oriented.
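As a quick sanity check on the 1MB versus 16MB figures, here is a small Python sketch. The function name is made up, and it only counts how much address space 65,536 segment values can cover at a given spacing.

```python
def segment_span(shift: int) -> int:
    """Bytes covered by 2**16 segment values spaced 2**shift bytes apart."""
    return (1 << 16) << shift


print(segment_span(4) // 2**20, "MB")   # 16-byte spacing, as on the 8086
print(segment_span(8) // 2**20, "MB")   # 256-byte spacing, as proposed above
```

Shifting by 4 covers 1MB, and shifting by 8 covers 16MB, which is where the "16MB addressing" claim comes from.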

That’s also why the 80286 made their protected mode segment oriented, when they could have just overlaid paging. They could have put segments on 256 byte boundaries (that would have provided 16MB of virtual and physical addressing), then translate the upper 12 bits through a far simpler page table, backed by a small TLB.

If Intel had used paging, then the 80286 would have been 100% upward compatible with the 8086 and it would have been a simpler design. So, it wasn’t so much that Intel were so focused on compatibility, but that they had a Segmentation ideology.

I think A20 gate only appeared with the 80286. There was no A20 to gate on the 8086 (memory addresses would just wrap), but on the 286 the hardware didn’t wrap addresses even if you were in real mode. OK, so I’ve just looked up the CALL 5 issue in Wikipedia.

So, on an 8-bit CP/M system, addresses 0x5 to 0x7 contained a JMP instruction whose target address doubled as the CP/M segment size (or rather, TPA size I guess). On CP/M that would max out at 0xFEF0 (or maybe 0xFF00). But the BDOS entry point on CP/M-80 corresponded to INT 30h on a PC at address 0xC0. So, they needed 3 bytes that could invoke INT 30h, and provide a TPA of 0xFEF0. That meant executing a CALL FEF0h and that could only work if it wrapped around the top of the address space, back to 0xC0.
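Here is a Python sketch of why that wrap matters. One assumption to flag loudly: the comment only gives the offset FEF0h, and the segment value F01Dh used below is the one I recall being cited for this trick, so treat it as illustrative rather than authoritative. The function name is also made up.

```python
INT_30H_VECTOR = 0x30 * 4   # each interrupt vector is 4 bytes, so 0xC0


def physical(segment: int, offset: int, address_lines: int) -> int:
    """Physical address as seen on a bus with the given number of lines."""
    return ((segment << 4) + offset) & ((1 << address_lines) - 1)


# ASSUMPTION: F01D is the commonly cited segment, not taken from the comment.
seg, off = 0xF01D, 0xFEF0

print(hex(physical(seg, off, address_lines=20)))   # 8086/8088: wraps around
print(hex(physical(seg, off, address_lines=24)))   # 286 with A20 enabled
print(physical(seg, off, 20) == INT_30H_VECTOR)
```

With 20 address lines the call lands on 0xC0, but with A20 live the same call ends up just above the 1MB mark instead, which is the compatibility problem the A20 gate was there to paper over.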

What a mess.

It’s quite a sad indictment that despite having access to 1MB of address space, hobbyist programmers had to treat their PCs largely like an old 8-bitter. I think I would have home-brewed a version of Forth that could do far calls (i.e. by using 16-bit tokens to point to 32-bit addresses):

    ;IP in DS:SI, SS used for data stack, return stack and tokens, BP^return stack, SP^Data Stack
    Enter: ;is a far call to here.
    ADD BP,4
    MOV [BP],SI
    MOV [BP-2],DS ;save old DS:SI (IP)
    MOV SI,BX
    ADD SI,2 ;Point to threaded code.
    MOV BX,ES
    MOV DS,BX
    Next:
    MOV BX,[SI]
    ADD SI,2
    LES BX,SS:[BX]
    JMP ES:[BX]
    Exit: ;reverse operation.
    MOV DS,[BP-2]
    MOV SI,[BP]
    SUB BP,4
    JMP Next

Thank you for your reply!

I must admit that your expertise is much greater than mine.

In defense to the 80286 I can only say that its development had slightly pre-dated the IBM PC and that it was made with other applications in mind.

Things like PBX systems, databases, industrial control systems etc.

OSes such as XENIX 286, Concurrent DOS 286 or OS/2 v1.3 were positive examples, I think.

These systems ran quite quick and stable, also in comparison to 386-based systems.

Its protected mode used segments to implement pointers, which as such weren’t too shabby.

The memory protection through segmentation was nice, too. In addition to the ring scheme.

Code blocks could be marked as program/executable and data/non-executable.

Buffer overflows couldn’t cause a security breach so easily.

On PCs of today, it needed DEP and NX bit to bring back that feature for systems that use beloved “flat memory model”.

Btw, when 80286 was freshly designed, MS-DOS and IBM PC were still irrelevant and niche.

That’s why it needed the 80386 to fix the shortcomings.

Today, we often overlook this, I think.

We assume that IBM PC was important all time,

but don’t know that the IBM PC originally had 64KB, ROM BASIC, a cassette port and no floppy drive.

It was a home computer at first, with the option to be expanded to an office computer.

Which it totally became just two years later. With 256KB RAM, 360KB floppy drive etc.

But in 1981/1982, it wasn’t yet.

Here in Europe the Victor 9000/Sirius-1 was the first MS-DOS PC that was available.

The IBM PC 5150 was being officially sold by IBM from 1983 onwards, I think.

So here in Europe, the Sirius-1 almost became the IBM PC equivalent over here.

Similarly, years later, the PC1512/PC1640 sorta became our equivalent to the Tandy 1000.

Of course, looking back, programming the 68000 was more elegant.

But it also wasn’t quite as powerful as an 80286.

Personally, I liked the 68010 which had a tiny buffer and virtual memory support.

It’s perhaps the closest in terms of features to 80286, besides 68020.

In the 80’s, a clone of MS-DOS appeared from Digital Research. As one might guess: it was named DR-DOS. This DOS version had the ability to access the empty areas above 640k (provided you installed the memory in that area). And suddenly you had 768kb to play with… Maybe more depending on your video and networking setup.

My first PC was an 80386SX with either 2MB or 4MB RAM, and it was difficult to do something useful with that extra memory. I did install some RAM disk program once that was able to use it, and that was useful. For school assignment I had a Z80 cross assembler and emulator, and I used the RAM disk for the assembled output. This cut the assemble & test loop from around 15 seconds to 2 seconds or less.

And that segmented memory was probably one of the stupidest decisions in the whole history of PCs. Or maybe the switch from CPM to DOS. After (I think) Xerox invented the windowed GUI with mouse, it was only a matter of time before something similar ran on any (desktop) PC.

“My first PC was an 80386SX with either 2MB or 4MB RAM, and it was difficult to do something useful with that extra memory.”

In the 90s, when DOS 6 was current, many DOS programs had used Himem.sys (XMS) or EMS.

The Sierra game compilations used extra memory, for example.

Picture viewers such as CompuShow 2000 supported extra memory, AutoSketch 3 used EMS. Or MOD players, such as MOD-Master.

Windows 3.x ran well with 4 MB and up, as well.

Especially on a 286 in Windows Standard-Mode, which had no virtual memory support.

GEM applications such as Ventura Publisher used EMS, too.

The usefulness of XMS memory is something that’s not very apparent until you don’t have XMS, I think.

On a Turbo XT with V20 processor, many modern DOS programs fail to run because EMS and/or XMS aren’t available.

That’s why I bought a Lo-Tech EMS card and an UMB card. It helps to run many programs properly.

“Or maybe the switch from CPM to DOS.

After (I think) Xerox invented the windowed GUI with mouse,

it was only a matter of time before something similar ran on any (desktop) PC”

DOS had quickly introduced a better filesystem (FAT12) and supported directories, I think.

That’s why it was so popular. MS-DOS 2.11 was much more developed than CP/M in most ways.

It even tried to adopt certain Unix specific things.

Like a Unix-style separator.

Or support for devices (AUX, CLOCK, PRN).

Btw, a fascinating little DOS was DOS Plus 1.2, I think.

It was CP/M-86 with a DOS compatibility layer named PC-MODE.

A bit like WINE on Linux, if you will.

DOS Plus could run both CP/M-86 programs and DOS programs.

CP/M-86 programs could run with limited multitasking, even.

Directories were simulated to CP/M-86.

The BBC Master 512 and the Amstrad PC1512 shipped with DOS Plus (and GEM).

The Amstrad version runs on IBM PC compatibles, too.

It’s useful because it can handle both CP/M and DOS filesystems.

6845 brings back memories…

And nightmares. ;)

Sooo…. I co-wrote a complete Voicemail solution in A86 assembler as a TSR. The main ‘line’ code ran in 64kb, and used XMS memory (swapping out chunks into the 64kb .com app as needed). There were other TSRs for different functions, but everything ran in <256kb of real memory. The whole solution ran under DOS6.2 and would use Rhetorex ISA voice cards and happily run 3000 users across 16 analog lines on a 486DX100.

This was in 1993, and the last one stopped being used in 2023!

The key to it all was that we created a tiny helper TSR that generated INT2F (with AX set to CC02) whenever DOS was ‘safe’ (in TSRs you have to be really careful about when to write to the disk etc.) – This meant that all the TSRs could hook into this and function.

The coolest part of the code was that each time the tick occurred, we’d look at the voice cards to see if there was an ‘event’, process that event, and then call a function called “callback”. This basically popped the return address off the stack, stored it and the registers in XMS memory, and then executed IRET to exit the interrupt. When the next safe ‘tick’ occurred, we’d pull the return address (and register values) back from XMS and continue exactly where we left off.
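For readers who have not met this pattern before, the save-and-resume trick described above is close in spirit to what a coroutine does. Here is a rough Python sketch of the idea, not the original assembler: the names `line_task` and `run_ticks` are made up for illustration, and a generator's `yield` stands in for the “callback” call that parked the return address and registers in XMS until the next safe tick.

```python
def line_task(log):
    """Hypothetical per-line task. Each `yield` plays the role of "callback":
    execution stops here and picks up at the same spot on the next tick."""
    log.append("answer call")
    yield  # hand control back until the next safe tick
    log.append("play greeting")
    yield
    log.append("record message")


def run_ticks(tasks, ticks):
    """Resume every unfinished task once per simulated safe tick."""
    for _ in range(ticks):
        for task in list(tasks):  # iterate over a copy so removal is safe
            try:
                next(task)
            except StopIteration:
                tasks.remove(task)  # task ran to completion


log = []
tasks = [line_task(log)]
run_ticks(tasks, 3)
print(log)
```

Python keeps the suspended state inside the generator object, where the TSR had to save it by hand. The control flow is the same: do a little work, exit, and continue exactly where you left off on the next tick.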

The documentation is sparse, but if there is an interest, I may well be persuaded to open-source this if someone has a DOS project/forum that might be interested.

I wrote a bunch of TSRs back in the day, from communication managers that handled medical lab instruments to pop-up background text editors that we leveraged for user-definable online help.

The best TSR I wrote though was the smallest too…see we had computers sharing a server printer (way before Ethernet) and folks printing hundreds of pages of medical lab reports to pinfeed dot matrix printers. Since the pages needed to be aligned to the paper feed (so you don’t print across the perforation) it was important to keep the page top aligned.

The wrinkle in the plan was the habit of the lab techs to do a quick screen print of some results for a quick report…when they did that (via Shift-PrtSc) it would route to the BIOS via INT05 and dump the screen buffer 80 characters by 24 lines to the printer. This isn’t a problem on a local printer, because you just hit the Form Feed button to scroll the paper to the top of the next page…but on a shared printer (probably in the other room) the problem was that those 24 lines weren’t a 66 line page, so the page alignment went wonky and if someone started a huge report run it would all be misaligned.

So the TSR I wrote hooked the INT05 (screen print) and called the original handler (dumping 24 lines) and when that BIOS code finished, emitted a single FORM FEED character to advance the printer to the top of the next page. Problem solved!

The TSR occupied 16 bytes when resident.
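The alignment problem in this story comes down to simple arithmetic, which a small Python model can show. This is only a sketch using the numbers given above (a 66-line page and a 24-line screen dump); the `Printer` class and `screen_dump` function are hypothetical names for illustration, not anything from the original TSR.

```python
PAGE_LINES = 66    # lines per pin-feed page, as stated in the comment
SCREEN_LINES = 24  # lines in one screen dump, as stated in the comment


class Printer:
    """Hypothetical printer model that only tracks its line position."""

    def __init__(self):
        self.line = 0  # 0 means the head sits at the top of a page

    def print_lines(self, count):
        self.line = (self.line + count) % PAGE_LINES

    def form_feed(self):
        # A form feed advances the paper to the top of the next page.
        self.line = 0


def screen_dump(printer, add_form_feed):
    """Print one screen, optionally followed by a form feed as the TSR did."""
    printer.print_lines(SCREEN_LINES)
    if add_form_feed:
        printer.form_feed()


plain = Printer()
screen_dump(plain, add_form_feed=False)
print(plain.line)   # 24: the next report starts partway down the page

hooked = Printer()
screen_dump(hooked, add_form_feed=True)
print(hooked.line)  # 0: the next report starts at the top of a page
```

Without the form feed, every following 66-line page starts 24 lines low and so crosses a perforation, which is the misalignment described above.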

Nice! The ‘safe timer-tick’ TSR I mentioned above was about 8k I recall, which was still pretty good for the time :-) – It had to hook into 05h, 10h, 20h, 21h, 2fh and maybe a few others and had to use a lot of unofficial/undocumented calls to check the OS state before allowing stuff to be done. It’s funny how I’ve not touched that code for maybe 20 years, but still remember the interrupts and how everything worked! :-)

I wrote a TSR with Turbo Pascal and Assembler in the 90s that turned the screen upside down. It flipped the character set in the graphics card memory and then the screen every timer tick.
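As a rough illustration of the two steps mentioned here (flip the character glyphs, then flip the screen), the following Python sketch works on plain lists instead of video memory. It assumes a simple top-to-bottom mirror; the comment does not say whether the original also reversed each line left to right, and the glyph data and function names are invented for the example.

```python
def flip_glyph(glyph_rows):
    """Flip one glyph vertically; each item is one row of pixel bits."""
    return glyph_rows[::-1]


def flip_screen(text_rows):
    """Reverse the row order so the top line ends up at the bottom."""
    return text_rows[::-1]


# Hypothetical 8-row glyph shaped roughly like the letter "L".
glyph_l = [0x40, 0x40, 0x40, 0x40, 0x40, 0x40, 0x7E, 0x00]
print([hex(row) for row in flip_glyph(glyph_l)])

screen = ["first line", "second line", "third line"]
print(flip_screen(screen))
```

A full 180-degree rotation would additionally reverse the bits within each glyph row and the characters within each text line.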

It was hell. I remember buying (yes, a legit commercial copy) Windows 3.0 on who knows how many 5¼-inch floppies, something like 12, just to make use of the 2 MB on my 386SX. It didn’t work, as I remember; it was no use for running stuff that needed a lot of base memory. We had to wait for Win32 for that, but we had Pentiums by then. Crazy times anyway.

As I recall, the original IBM PC also allocated 128k to a rudimentary BASIC in the BIOS. This was basically wasted, as there wasn’t enough there to do much with it. My TI Professional computer didn’t waste the 128k and used it for lower memory, so the TIPC had 768k available.