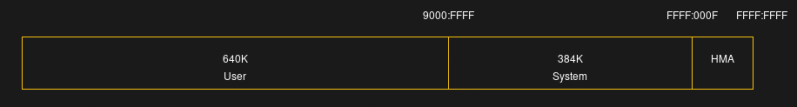

Last time we talked about how the original PC has a limit of 640 kB for your programs and 1 MB in total. But of course those restrictions chafed. People demanded more memory, and there were workarounds to provide it.

However, the workarounds were made to primarily work with the old 8088 CPU. Expanded memory (EMS) swapped pages of memory into page frames that lived above the 640 kB line (but below 1 MB). The system would work with newer CPUs, but those newer CPUs could already address more memory. That led to new standards, workarounds, and even a classic hack.
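To make the page-swapping idea concrete, here is a toy model of it. The 16 kB page size and the four-page, 64 kB frame follow the usual EMS layout; the frame address `0xD0000`, the `ExpandedMemory` class, and its method names are assumptions made up for illustration, not any real driver’s API.

```python
PAGE_SIZE = 16 * 1024      # EMS logical pages are 16 kB each
FRAME_SLOTS = 4            # the 64 kB page frame holds four of them
FRAME_BASE = 0xD0000       # assumed frame address; it varied by system


class ExpandedMemory:
    """Toy model of an EMS board: many pages, four visible at a time."""

    def __init__(self, total_pages):
        self.pages = [bytearray(PAGE_SIZE) for _ in range(total_pages)]
        self.mapping = [None] * FRAME_SLOTS   # logical page in each slot

    def map_page(self, slot, logical_page):
        """Make one logical page visible in one slot of the page frame."""
        if not 0 <= slot < FRAME_SLOTS:
            raise ValueError("no such slot in the page frame")
        if not 0 <= logical_page < len(self.pages):
            raise ValueError("no such logical page")
        self.mapping[slot] = logical_page

    def _locate(self, address):
        # Turn a CPU address inside the frame into (page buffer, offset).
        offset = address - FRAME_BASE
        if not 0 <= offset < PAGE_SIZE * FRAME_SLOTS:
            raise ValueError("address is outside the page frame")
        slot, within = divmod(offset, PAGE_SIZE)
        if self.mapping[slot] is None:
            raise ValueError("nothing is mapped into that slot")
        return self.pages[self.mapping[slot]], within

    def read(self, address):
        page, within = self._locate(address)
        return page[within]

    def write(self, address, value):
        page, within = self._locate(address)
        page[within] = value & 0xFF


ems = ExpandedMemory(total_pages=128)   # 128 pages of 16 kB is 2 MB
ems.map_page(0, 100)
ems.write(FRAME_BASE, 0x42)             # lands in logical page 100
ems.map_page(0, 5)                      # same address, different page
hidden = ems.read(FRAME_BASE)           # page 5 is still all zeros
ems.map_page(0, 100)
restored = ems.read(FRAME_BASE)         # the byte written earlier
```

The CPU only ever sees addresses inside the frame, which is why the scheme worked even on an 8088. The cost is that the program has to remember which page is mapped where.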

XMS

If you had an 80286 or above, you might be better off using extended memory, managed through the Extended Memory Specification (XMS). This took advantage of the fact that the CPU could address more memory. You didn’t need a special board to load 4 MB of RAM into an 80286-based PC. You just couldn’t get to it with MS-DOS. In particular, the memory above 1 MB was, in theory, inaccessible to real-mode programs like MS-DOS.

Well, that’s not strictly true in two cases. One, you’ll see in a minute. The other case is because of the overlapping memory segments on an 8088, or in real mode on later processors. Address FFFF:000F was the top of the 1 MB range.

PCs with more than 20 bits of address space ran into problems since some programs “knew” that memory access above that would wrap around. That is, FFFF:0010 on an 8088 is the same as 0000:0000. To stay compatible, these PCs would block A20, the 21st address bit, by default. However, you could turn that block off in software, although exactly how that worked varied by the type of motherboard, which was yet another complication.

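XMS allowed MS-DOS programs to allocate and free blocks of memory above the 1 MB line and to copy data between those blocks and conventional memory. The same driver, HIMEM.SYS, also managed that special area starting at FFFF:0010, the so-called high memory area (HMA), which real-mode code can address directly once A20 is enabled.

Because everything had to be copied in and out, XMS wasn’t very useful for code, but it was a way to store data. The HMA was different: if nothing else was using it, you could load part of DOS or some TSRs there to avoid taking memory from MS-DOS programs.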
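The wraparound and the size of the HMA both fall out of the real-mode address arithmetic. The sketch below is only that arithmetic; the function name `linear_address` and the `a20_enabled` flag are made up for illustration.

```python
def linear_address(segment, offset, a20_enabled=True):
    """Real-mode address: segment times 16, plus the offset."""
    address = ((segment & 0xFFFF) << 4) + (offset & 0xFFFF)
    if not a20_enabled:
        # With A20 blocked (or on an 8088) only 20 address bits exist,
        # so anything past 1 MB wraps around to the bottom of memory.
        address &= 0xFFFFF
    return address


top_of_1mb = linear_address(0xFFFF, 0x000F)                  # 0xFFFFF
wrapped = linear_address(0xFFFF, 0x0010, a20_enabled=False)  # 0x00000
hma_start = linear_address(0xFFFF, 0x0010)                   # 0x100000
hma_end = linear_address(0xFFFF, 0xFFFF)                     # 0x10FFEF
hma_size = hma_end - hma_start + 1                           # 65520 bytes
```

So the HMA is 16 bytes short of a full 64 kB: it is simply whatever segment FFFF can reach beyond the 1 MB mark.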

Protected Mode Hacks

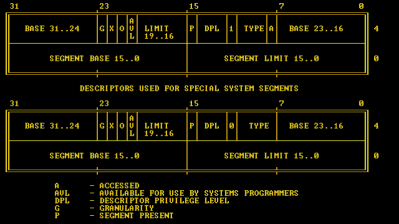

There is another way to access memory above the 1 MB line: protected mode. In protected mode, you still have a segment and an offset, but the segment is just an index into a table that tells you where the segment is and how big it is. The offset is just an offset into the segment. So by setting up the segment table, you can access any memory you like. You can even set up a segment that starts at zero and is as big as all the memory you can have.
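As a rough sketch of that lookup, the model below treats the segment table as a list of base and limit pairs. It keeps only the parts described above: the index comes from the upper 13 bits of the selector, the limit is the highest valid offset, and everything else a real CPU checks (access rights, privilege levels, the null selector, local tables) is left out. The names `Descriptor`, `SegmentFault`, and `translate` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Descriptor:
    base: int    # where the segment starts in memory
    limit: int   # highest valid offset inside the segment


class SegmentFault(Exception):
    """Raised when an offset falls outside its segment."""


def translate(table, selector, offset):
    # The low three bits of a selector hold the table indicator and
    # the requested privilege level; the rest is the table index.
    index = selector >> 3
    descriptor = table[index]
    if offset > descriptor.limit:
        raise SegmentFault(f"offset {offset:#x} exceeds limit")
    return descriptor.base + offset


table = [
    Descriptor(0, 0),                   # entry 0 is unused in this sketch
    Descriptor(0x000000, 0xFFFF),       # a 64 kB segment at the bottom
    Descriptor(0x200000, 0xFFFFF),      # a 1 MB segment starting at 2 MB
    Descriptor(0x000000, 0xFFFFFFFF),   # flat: starts at zero, spans 4 GB
]

above_1mb = translate(table, 2 << 3, 0x1234)       # 0x201234
flat = translate(table, 3 << 3, 0x12345678)        # 0x12345678
```

The last entry is the “segment that starts at zero” case: with it, the offset and the memory address are the same number.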

You can use segments like that in a lot of different ways, but many modern operating systems do set them up very simply. All segments start at address 0 and then go up to the top of user memory. Modern processors, 80386s and up, have a page table mechanism that lets you do many things that segments were meant to do in a more efficient way.

However, MS-DOS can’t deal with any of that directly. There were many schemes that would switch to protected mode to deal with upper memory using EMS or XMS and then switch back to real mode.

Unfortunately, switching back to real mode was expensive on an 80286, which had no way to leave protected mode short of a reset. Typically, you had to set a flag in non-volatile (CMOS) memory and reset the CPU! On the way back up, the BIOS would notice that you weren’t really rebooting and put you back where you were in real mode. Quite a kludge! The 80386 and later could switch back without the reset.

There was a better way to run MS-DOS under protected mode on the 80386 and later, called virtual 8086 mode. However, that was complex to manage and required a protected-mode monitor to trap and emulate many instructions, which wasn’t great for performance. It did, however, avoid the real mode switch penalty as you tried to access other memory.

Unreal Mode

In true hacker fashion, several of us figured out something that later became known as Unreal Mode. In the CPU documentation, they caution you that before switching back to real mode, you need to load the segment registers with descriptors that look like real-mode segments, including the 64 kB limit. Obviously, you have to think, “What if I don’t?”

Well, if you don’t, then your segments can be as big as you like. Turns out, apparently, some people knew about this even though it was undocumented and perhaps under a non-disclosure agreement. [Michal Necasek] has a great history about the people who independently discovered it, or at least, the ones who talked about it publicly.
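The trick relies on the CPU keeping a hidden copy of each segment’s base and limit, and on a real-mode segment load replacing only the base. The toy model below shows that behavior on a 32-bit CPU; the class `SegmentRegister` and its methods are invented for illustration and ignore everything else the hardware tracks.

```python
class LimitViolation(Exception):
    """Raised when an offset is beyond the cached segment limit."""


class SegmentRegister:
    def __init__(self):
        self.base = 0
        self.limit = 0xFFFF          # the normal real-mode limit, 64 kB

    def load_protected(self, base, limit):
        # In protected mode a load copies base and limit from a descriptor.
        self.base = base
        self.limit = limit

    def load_real(self, segment):
        # In real mode a load only recomputes the base. The cached
        # limit is left exactly as it was.
        self.base = (segment & 0xFFFF) << 4

    def address(self, offset):
        if offset > self.limit:
            raise LimitViolation(f"offset {offset:#x} exceeds limit")
        return self.base + offset


ds = SegmentRegister()
ds.load_real(0x1000)
try:
    ds.address(0x200000)             # past 64 kB: not allowed
    blocked = False
except LimitViolation:
    blocked = True

ds.load_protected(0, 0xFFFFFFFF)     # visit protected mode, take a 4 GB limit
ds.load_real(0x1000)                 # back in real mode, limit not restored
unreal = ds.address(0x200000)        # 0x10000 + 0x200000 = 0x210000
```

Skipping the “restore the 64 kB limit” step is the whole hack: real-mode code keeps running as usual, but 32-bit offsets now reach memory above 1 MB.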

The method was doomed, though, because of Windows. Windows ran in protected mode and did its own messing with the segment registers. If you wanted to play with that, you needed a different scheme, but that’s another story.

Modern Times

These days, we don’t even use video cards with a paltry 1 MB or even 100 MB of memory! Your PC can adroitly handle tremendous amounts of memory. I’m writing this on a machine with 64 GB of physical memory. Even my smallest laptop has 8 GB and at least one of the bigger ones has more.

Then there’s virtual memory, and if you have solid state disk drives, that’s probably faster than the old PC’s memory, even though today it is considered slow.

Modern memory systems almost don’t resemble these old systems even though we abstract them to pretend they do. Your processor really executes out of cache memory. The memory system probably manages several levels of cache. It fills the cache from the actual RAM and fills that from the paging device. Each program can have a totally different view of memory, with its own idea of what lives at any given address. It is a lot to keep track of.

Times change. EMS, XMS, and Unreal mode seemed perfectly normal in their day. It makes you wonder what things we take for granted today will be considered backward and antiquated in the coming decades.

We used the 8088 and 8086 a lot for embedded systems we designed. The IAR compiler had a banked memory model that was easy to turn on. Each memory area (RAM and ROM) then had a section that was always present and another section that was controlled by a bank number. A register held the bank number. The linker put all the code together and added a bank-switching call to any module not linked into the current bank. You could design almost any size of permanent and banked memory. For example:

RAM 2k words permanent and 2K banked. ROM 8K permanent and 16K banked.

What was the advantage of banking, when all RAM and ROM banks would have been able to fit into one segment in 8086/8088?

For the 8080/8085 I could imagine such a concept.

OK, I misunderstood: I thought there was only one banked 2K slice of RAM and one 16K slice of ROM. Of course, with many slices, banking the required slice into the 2K/16K space makes sense.

IAR C was great. We used Aztec C-86 (very good) and Microtech MCC85 (usable).

No MIX Power C ?

Probably a good compiler to generate PC/MSDOS software. Back then we did not have resources to evaluate all C compilers for embedded design – Aztec was our choice then.

Back then PC memory was a big mess. My first PC was an 80386SX with either 2 or 4 MB (I forget) and MS-DOS 4.01, and I was not really able to do much with all that memory. I’m not sure if Windows 3.1 was able to use that memory normally. I think it took until Windows 95 (ten years after the release of the 80386!) before there was an OS from Microsoft that could handle the memory without workarounds.

Very similar things happened with both HDDs and uSD cards. They’ve bumped into the maximum addressing space, and then they invent a new standard that has a whopping extra 4 bits or so of addressing space. This led to lots of problems, such as a new memory card not working in your camera, or your PC not being able to use your whole HDD. “They” probably did this on purpose, with each “new standard” released an opportunity to make more money.

But luckily, this is now all finally mostly a ghost of the past. I’m also just chugging along on my Linux box, which “just works” and I’ve lost interest in keeping up with what sort of progress is made in the PC world. Every 10 to 15 years my PC gets a bit slow, and then it’s time to buy a new one.

“But luckily, this is now all finally mostly a ghost of the past.”

DOS ain’t dead. It lives on forever, just like C64 and Amiga! :D

Windows ’95 was indeed arguably the first mainstream OS to support all 32 bit windows applications running in a 32 bit address space.

Windows 3.1 was a lot more sophisticated than people remember, though, I think. Its “386 enhanced mode” was entirely capable of addressing 4 MB of RAM, or even much more (despite using 32-bit addressing, my understanding is that 256 MB, not 4 GB, was the functional limit, likely because 256 MB already seemed absurd).

However, Windows programs themselves were still 16 bit*, so they couldn’t natively take advantage of all that memory.

(*unless they were compatible with the Windows 32-bit subset, “Win32s”, after that was introduced.. but I’m still not sure you can count that as “native”)

Hi! Windows 3.x really was interesting, despite its flaws.

Win32s was an early testbed for Win32 development, too.

It was available to most developers before Windows NT was.

Applications which have relocation tables and use no threading can run on Win32s.

Win32s 1.25 and 1.30 even surpassed NT 3.1 in terms of application compatibility.

They also ran on OS/2 Warp’s Win-OS/2, I think.

What’s also interesting: Watcom’s Win386 extender for Windows 3.0.

It provided a 32-Bit version of the Win16 API on top of the real Win16 API.

Applications written for it are true 32-Bit applications, like with Win32s, but still have a Win16 header.

That means that Windows 3.1/9x/NT will treat them as 16-Bit applications.

That’s why they have same restrictions as ordinary Windows 3.x applications,

with the exception of 32-Bit memory management within the applications.

Here, the later Win32s applications might be less limited in comparison.

On Windows NT they might be run via NTVDM/WoW like ordinary Win16 applications are being run.

Which makes me wonder if they ever ran on Windows NT 4 on RISC machines.

Because, the RISC versions of NT 4 had an i486 emulator for DOS/Windows 3.1 support.

That’s something that x86 Win32 applications couldn’t do without help (FX!32 etc). They had to be native executables:

The RISC versions of Windows NT merely provided 80286 (initially) or i486 emulation for legacy applications, but not for Win32 applications.

What about NT on MIPS64? I know it was officially dropped for NT 4, but I feel like we had a workstation running it anyway. I largely remember it being impossible to run anything on, and seeming to have hardly any legacy application support.

The machine was running an Immersadesk, which made the incompatibility a real problem, and eventually the machine was replaced with an SGI 320 with Win2K, I think. … Which was arguably as bad, maybe worse? IRIX was much easier to deal with than either of these hybrids.

Hi there! You seem to know more than me here, I’m afraid! 😅

I could imagine that the 64-Bit platform was used as a testbed for Itanium, too, but it’s just a guess.

Windows 3.1 also featured the WinMem32 API,

which was a third route to 32-Bit applications (besides Win32s and Win386).

But there’s not much information about it, I’m afraid..

I used applications that required them, but cannot remember what they were at this point.

Hi again, this article about internals of Windows 3.1 is very well done, I think.

https://www.xtof.info/inside-windows3.html

“However, MS-DOS can’t deal with any of that directly.

There were many schemes that would switch to protected mode to deal with upper memory

using EMS or XMS and then switch back to real mode.

Unfortunately, switching back to real mode was expensive because, typically,

you had to set a bit in non-volatile memory and reboot the computer! On boot,

the BIOS would notice that you weren’t really rebooting and put you back where you were in real mode. Quite a kludge!”

That’s true. But there’s more.

The 80286 was a problem in the 80s, but no longer in the late 80s and 90s.

Himem.sys v2.06 (ca. 1989) added a new code path and avoided the CPU reset.

Himem.sys included in MS-DOS 5/6 is smart and used LOADALL instruction to access memory past 1MB.

So the 80286 never has to leave real-mode, strictly speaking, if a modern himem.sys is used.

Himem.sys has multiple code paths and different “handlers” for A20 Gate.

On a 386 and up, it uses the 386 version of LOADALL or switches gracefully between real-mode and protected-mode.

It tries to auto-detect from over 20 different PC types, but can also be forced to use a specific handler.

Other DOSes, such as PC-DOS, DR-DOS and Novell DOS 7, are similarly smart here.

Except PTS-DOS or Paragon DOS, I think.

It tries to keep a small memory footprint and its himem.sys is quite tiny.

It’s unlikely that it is as smart or has as many machine handlers.

More info:

https://www.os2museum.com/wp/himem-sys-unreal-mode-and-loadall/

Speaking under correction.

Unreal mode was very handy for DOS programs that needed to access PCI devices (obviously later when PCI entered the market).

Very handy for markets that still used DOS (US Military) and wanted to use PCI I/O cards.

When working with BIOS in later years (2005) it was definitely surprising to still see source code marked 198x. This of course makes sense because this was what was necessary for compatibility all the way back to the beginning.

+1

Though there’s one thing I’d like to add.

The BIOSes in the 90s and 2000s were highly advanced and modular.

BIOS vendors such as Award or AMI sold BIOS kits to OEMs, which could be customized.

Also, the BIOS didn’t remain stuck in 1981 as the media might say.

The PC/AT BIOS added a lot more interrupt service calls, such as INT15h.

The PS/2 BIOS did, as well, but the PS/2 line wasn’t adopted by industry.

In the 90s, the BIOSes used 32-Bit instructions even in Real-Mode.

Some also used compression to fit in the first megabyte or used the Cloaking technology by Helix.

That meant the actual BIOS code was running past 1MB, but a stub (akin to a remote control) remained below 1MB.

To be visible by DOS in Real-Mode.

Then there were additions like Protected-Mode BIOS, APM support, network boot (PXE) and so on..

The development of the BIOS over the past decades was very fascinating.

Too bad Intel removed CSM (BIOS) from UEFI specs..

It was such a great hack! :)

https://en.wikipedia.org/wiki/Helix_Netroom

Quite a lot of technical inaccuracies here.

1) “However, you could turn that block off in software, although exactly how that worked varied by the type of motherboard” – not quite true. All motherboards of the era supported access via the keyboard controller (which was just a GPIO chip really that had a spare line). Later some other methods were devised. See e.g. https://wiki.osdev.org/A20_Line.

2) “XMS allowed MSDOS programs to allocate and free blocks of memory that were above the 1 MB line and map them into that special area above FFFF:0010, the so-called high memory area (HMA).” – Nope, the HMA is always available (once the A20 line is enabled). XMS allowed copying memory from/to above 1MiB to a buffer below it. Both XMS and enabling the HMA are part of HIMEM.SYS, perhaps that’s where the confusion lies.

3) “Because of its transient nature, XMS wasn’t very useful for code, but it was a way to store data. If you weren’t using it, you could load some TSRs into the HMA to prevent taking memory from MSDOS.” – True, but these lines are presented as if talking about the same thing. XMS was useful for data only (well, you could still use “overlays”, a technique that loaded code on demand), while the HMA was normal reachable memory, so you could in theory put TSRs there (though you’d let DOS handle that or get into trouble).

4) “There is another way to access memory above the 1 MB line: protected mode.” – XMS (at least on a 286) also uses protected mode to access the >1MiB memory, so not exactly “another” way.

5) “Modern processors, 80386s and up” – yup, the modern 386 :D. Note that x86-64 CPUs don’t have segmentation at all anymore, just paging.

6) “There were many schemes that would switch to protected mode to deal with upper memory using EMS or XMS and then switch back to real mode.” – No, EMS never “switched to protected mode (…) and then switch back”, that was just XMS on a 286. EMS used virtual 86 mode (and hence the need for VCPI/DPMI when having EMM386 loaded and starting a protected mode executable).

7) “Unfortunately, switching back to real mode was expensive because, typically, you had to set a bit in non-volatile memory and reboot the computer!” – on a 286 only. 386es can gracefully transition between protected mode and real mode.

8) “Well, if you don’t, then your segments can be as big as you like.” – to the maximum of 4 GiB of course (for 32-bit CPUs).

9) “The method was doomed, though, because of Windows.” – Sure, but by that time, you either dual booted or ran Windows games. Unreal mode was widely used in the early and mid 90s (Doom being the prime example.)

Hi thanks for your write up. 😃👍

I think we should be forgiving to the author, though.

It’s not easy to simplify the matter so that ordinary people can understand it.

For example, Extended Memory and XMS aren’t exactly the same thing.

The AT BIOS featured an INT15 routine to access Extended Memory years before himem.sys/XMS were around.

The ancient RAM Drive driver in PC-DOS 3 (VDISK.SYS) used it, I think.

XMS is just a software specification, similar to EMS.

Also, “flat mode” on i386 and up works by “disabling” segmentation.

But the segmentation unit in the MMU doesn’t stop working, it just no longer has work to do.

The “trick” was to change segmentation size from 64KB to 4GB, the whole physical address space of the i386.

So segmentation is still there, but has no effect.

Last but not least, I once got Windows 3.0 to run in Standard-Mode on an AMD Athlon 64 X2 (early x86_64).

Windows 3.0, at least, which uses krnl286 and 16-bit Protected-Mode, which uses segmentation.

I have no idea how the situation is with newer processors.

Speaking under correction.

Btw, here’s an interesting link.

https://www.os2museum.com/wp/himem-sys-unreal-mode-and-loadall/

Thanks for the additions, I was aware of these but didn’t want to complicate it even more :).

As for “flat real mode”, the trick was to switch to protected mode, extend the segment registers to 4GiB, and switch back to real mode. The segment size was preserved, making it possible to address 4GiB in real mode. For protected mode it basically works the same (it’s what DOS4/GW used), set up all segments to 4GiB, but no switching back to real mode.

You’re welcome! ^^

Doom did not use unreal mode, it used DOS4GW. There were not many games that used unreal mode, Ultima 7 being one of the notable exceptions. It was more common with productivity software and demoscene.

640KB is all you need

Now in 2025 64GB is all you need

Find a motherboard that’s not a server board that supports 128GB

If you really want to brag get 64GB of Sram Instead of ddr, basically having low level cache as ram no memory refresh and can run at the full 3-4 ghz cpu clock, runs bfv and gta v much faster

When is the 128 bit cpu going to drop

64 or 72 bit address space

128bit data space

Lotta cpu pins unless it’s multiplexed, that has a timing/speed and performance hit….

Just take two existing 64 bit alu

One for the upper 64 bit and other for lower

Do half the math for each set, then simply add the two into one 128 bit word, so internally sorted as two 64 bit words

By that point you’d be grabbing for a GPU or something similar

But with a GPU, things run in parallel and the instructions aren’t as typically complex as a cpu, but you can access a lot more memory at once, but you can do cpu style computing on a GPU, like risc the code might take up some more disk space….

Good questions.

The x87 floating point unit has 80-Bit accuracy since the 80s.

Modern SIMDs such as SSE and AVX have more bitness than the ALU, too.

There’s AVX512, an extension to AVX with 512-Bit, for example.

The AltiVec unit for certain PowerPC processors has 128-Bit, for example.

In the 90s, MMX was 64-Bit already.

It was being followed soon by 3DNow! and SSE.

https://en.wikipedia.org/wiki/X87

https://en.wikipedia.org/wiki/Single_instruction,_multiple_data#Hardware

https://en.wikipedia.org/wiki/MMX_(instruction_set)

https://en.wikipedia.org/wiki/AltiVec

Back in those days I worked for an engineering software company. We had a simulation program whose binary image was about 6MB. Everybody wanted a PC version – marketing, sales, customers, the boss, etc. We spent a summer trying to create an overlay map that would fit the whole thing into 640K. Remember overlays? Probably not. You analyzed the call tree of the program and built a map that instructs the linker to put the subroutine binaries into overlapping segments. When a subroutine is called, the overlay code automatically swaps in the segments between the calling routine and the called routine. Lots of disk I/O if you don’t create an efficient overlay map!

When we got stuck, we would trim out a lower priority feature of the program to squeeze the program into less memory. By the time we were done it was below the minimum viable functionality so the project was abandoned.

The 80386 changed the game. A company called Phar Lap Software had created a DOS Extender product and a suite of compilers to produce 32 bit protected mode code. Customers just had to buy a 386 with at least 8MB of RAM, admittedly a huge amount in those days, but it got your engineers off your mainframe.

It worked great, even though the FORTRAN compiler was a bit buggy. For each build I had to compile one routine to assembly instead of directly to object code. Then I manually fixed the bug (a near jump that needed to be a far jump) and assembled the affected routine. I suppose Phar Lap eventually fixed the compiler but I remember having to do the manual fix for a long time.

Within a few years almost all the customers were using the PC version instead of a mainframe or minicomputer version. Great! But then they wanted a Windows version! I’ll leave that story for another time.