When home computers first appeared, disk drives were an expensive rarity. Consumers weren’t likely to be interested in punch cards or paper tape, but most people did have consumer-grade audio cassette recorders. There were a few attempts at storing data on tapes, which, in theory, is simple enough. But, practically, cheap audio recorders are far from perfect, which can complicate the situation.

A conference in Kansas City settled on a standard design, and the “Kansas City standard” tape format appeared. In a recent video, [Igor Brichkov] attempts to work with the format using 555s and op amps — the same way computers back in the day might have done it. Check out the video below to learn more.

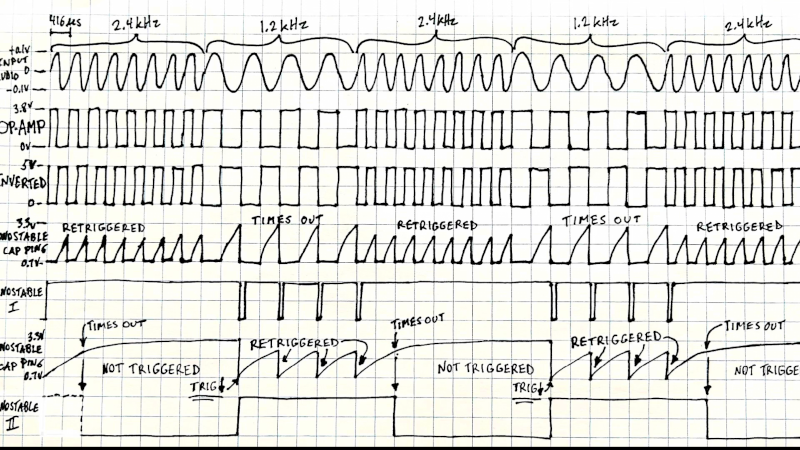

These days, it would be dead simple to digitize audio and process it to recover data. The 1970s were a different time. The KC standard used frequency-shift keying, with 2.4 kHz tones standing in for ones and 1.2 kHz tones for zeros. Every bit lasted the same time (at 300 baud), so a one was 8 cycles and a zero was 4 cycles. There were other mundane details, like a start bit, two stop bits, and the fact that the least significant bit went first.
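
A minimal Python sketch of the encoding side. It assumes the framing described above (one start bit, eight data bits LSB first, two stop bits) and a 48 kHz output sample rate, which is chosen only for illustration. At that rate a 300-baud bit is exactly 160 samples. That is a whole number of cycles of either tone, so the waveform stays phase-continuous from bit to bit.

```python
import math

SAMPLE_RATE = 48_000   # assumed output sample rate (Hz), for illustration only
BAUD = 300
MARK_HZ = 2400         # a 1 bit: 8 cycles of 2.4 kHz
SPACE_HZ = 1200        # a 0 bit: 4 cycles of 1.2 kHz
SAMPLES_PER_BIT = SAMPLE_RATE // BAUD   # 160: whole cycles of both tones

def frame_byte(value):
    """Start bit (0), eight data bits LSB first, two stop bits (1)."""
    data = [(value >> i) & 1 for i in range(8)]
    return [0] + data + [1, 1]

def bit_to_samples(bit):
    """One bit period of the mark or space tone as a list of float samples."""
    freq = MARK_HZ if bit else SPACE_HZ
    return [math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            for n in range(SAMPLES_PER_BIT)]

def kcs_encode(data: bytes):
    """Frame every byte and concatenate the tone bursts for each bit."""
    samples = []
    for byte in data:
        for bit in frame_byte(byte):
            samples.extend(bit_to_samples(bit))
    return samples

tone = kcs_encode(b"HI")   # 2 bytes x 11 bits x 160 samples = 3520 samples
```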

The real world makes these things iffy. Stretched tape, varying motor speeds, and tape dropouts can all distort the signal. The format makes it possible to detect the tones and then feed the output to a UART, the same kind of chip you might use for a serial port.

There were many schemes. The one in the video uses an op amp to square up the signal into a digital output. The digital pulses feed a pair of 555s configured to retrigger during fast input trains but not during slower ones. If that doesn’t make sense, watch the video!
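
A rough behavioral model of the retriggerable one-shot idea. It is not the circuit from the video. Each rising edge restarts a timer of length `HOLD_S`, which is a hypothetical value picked between the 2.4 kHz period (about 0.42 ms) and the 1.2 kHz period (about 0.83 ms). Fast edge trains keep the timer from running out, while slow ones let it time out.

```python
HOLD_S = 0.6e-3   # hypothetical one-shot period, between 1/2400 s and 1/1200 s

def tone_edges(bits, speed=1.0):
    """Rising-edge times for a KCS bit stream; speed < 1 models a slow tape."""
    t, edges = 0.0, []
    for bit in bits:
        freq, cycles = (2400, 8) if bit else (1200, 4)
        for _ in range(cycles):
            edges.append(t)
            t += 1 / (freq * speed)
    edges.append(t)
    return edges

def one_shot_high(edges, hold=HOLD_S):
    """True where a retriggerable one-shot is still timing when the next edge
    arrives (fast 2.4 kHz train), False where it has already timed out (1.2 kHz)."""
    return [(b - a) < hold for a, b in zip(edges, edges[1:])]

print(one_shot_high(tone_edges([1, 0])))
```

Because the decision point sits between the two periods, this model gives the same classification when the tape runs 20% slow or 30% fast. A second stage, such as the other 555, would still be needed to turn the per-cycle result into a steady level for each bit. That part is not modeled here.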

The KC standard shows up all over the place. We’ve even used it to hide secret messages in our podcast.

“Consumers weren’t likely to be interested in punch cards or paper tape…”

Well, a stack of shrink-wrapped punch cards could keep the coffee table level.

Ever try to load a large C64 game via punch card? A punch card holds around 80 bytes, and some of the games needed something around 40-50KB. A large game that fits on a single cassette would take roughly 500-650 punch cards to load. Imagine if the power went out after you’d spent an hour just loading a game from cards.

Really large games that span multiple disks (such as Ultima 5) would mean over 17,000 punch cards. You’d need to load a batch now and then, and have new punch cards made every time you saved the game.
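
The card counts come from simple division. Here is a quick check, assuming 80 bytes per card and 1 KB = 1024 bytes:

```python
import math

CARD_BYTES = 80   # one 80-column card, counted as 80 bytes as in the comment

def cards_needed(size_bytes):
    """Whole cards needed to hold size_bytes of data."""
    return math.ceil(size_bytes / CARD_BYTES)

for kb in (40, 50):
    print(f"{kb} KB -> {cards_needed(kb * 1024)} cards")
print(f"17,000 cards -> {17_000 * CARD_BYTES / 1024:.0f} KB")
```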

Easy to see why the punch card didn’t take off for home computers.

Thanks for the explanation, Mr Brichkov! The KC standard is still easy to understand for me. I hope there might be some future videos on the C64 datasette standard and the Sony Bitcorder. Cheers!

Where did Tarbell encoding fit into the scheme of things?

Cap

KCS (1975). Tarbell (1976). The latter was mostly used in CP/M machines.

Why not just write raw binary like we do nowadays with .tar.gz files? Not enough CPU power for decompression on the fly? Or were programmers back then just incompetent?

Limitations of the media.

Remember that this was targeted at cheap portable cassette decks, not a high-end audiophile sort of thing. So frequency response was limited, plus of course there were tape dropouts and such. Hence a simple scheme of recording tones known to be within the passband.

You can of course use raw data in NRZ or perhaps another digital format like biphase. But clock and data recovery becomes much more complicated. Plus, with NRZ there is a limit on runs of zeros or ones that the bit sync can tolerate before losing lock.

Which is why biphase is sometimes used: it is self-clocking, at the cost of requiring twice the frequency response.
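
A minimal Manchester (biphase-L) sketch in Python, assuming the IEEE 802.3 polarity convention. Every bit becomes two half-bit symbols with a transition in the middle. That mid-bit transition is what makes the code self-clocking, and it is also why it needs twice the bandwidth of NRZ.

```python
def manchester_encode(bits):
    """Biphase-L, IEEE 802.3 polarity: 0 -> high then low, 1 -> low then high."""
    halves = []
    for b in bits:
        halves.extend((0, 1) if b else (1, 0))
    return halves

def manchester_decode(halves):
    """Every bit must contain a mid-bit transition. A missing one means a
    dropout or lost alignment, which the decoder can at least detect."""
    bits = []
    for first, second in zip(halves[0::2], halves[1::2]):
        if first == second:
            raise ValueError("no mid-bit transition")
        bits.append(1 if second > first else 0)
    return bits

data = [1, 1, 1, 1, 0, 0, 0, 0]   # long runs that would be flat in NRZ
line = manchester_encode(data)    # yet the line level now changes every bit
print(line)
```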

+1

Datasette was one of the worst media ever.

You had wow and flutter, warble, dropout etc.

It was barely usable for 8-second SSTV (better known as “Robot-8”).

And that was merely analog and in grayscale.

Yet the good old (especially old!) music cassette barely cut it.

I took it to mean why record the source code rather than the already-tokenized code which would probably be shorter. The choice would not be affected by frequency response, dropouts, flutter, or anything else; because regardless, the entire cycle would remain the same: the processor sends digital data to a UART, whose output goes to the modem, whose output goes to the tape recorder, whose output goes to the modem, whose output goes to the UART, which is read by the processor.

We simply didn’t know about Huffman encoding/decoding. You might think we would, but algorithms propagated slowly without the Internet. The kind of compression algorithm one might use on a late 1970s/early 1980s microcomputer might be limited to text. It might assign the 12 most common letters to nybbles and the remaining 96-12=84 symbols to a 9-bit code (a 4-bit escape nybble + a 5-bit extension). This would achieve somewhat less than 2x compression, but it would be easy.
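
A sketch of the nybble scheme described above. Several details are assumptions:
- The 12 “common” symbols (`COMMON`) are a hypothetical pick of space plus ETAOINSHRDL.
- The 96-symbol set is taken to be ASCII codes 32 to 127.
- Nybble values 12 to 15 act as escapes. Each escape is followed by 5 bits, which leaves room for 128 rare symbols.

```python
COMMON = " ETAOINSHRDL"   # hypothetical: the 12 symbols given 4-bit codes 0..11
RARE = [chr(c) for c in range(32, 128) if chr(c) not in COMMON]   # the other 84

def nybble_encode(text):
    """Common symbols -> one nybble; everything else -> escape nybble + 5 bits."""
    bits = []
    for ch in text:
        if ch in COMMON:
            bits.append(format(COMMON.index(ch), "04b"))        # 4-bit code
        else:
            i = RARE.index(ch)
            escape = 12 + i // 32                               # nybbles 12..15 escape
            bits.append(format(escape, "04b") + format(i % 32, "05b"))  # 9 bits
    return "".join(bits)

def nybble_decode(bits):
    """Read a nybble; if it is an escape, read 5 more bits for the rare symbol."""
    out, pos = [], 0
    while pos < len(bits):
        nyb = int(bits[pos:pos + 4], 2)
        pos += 4
        if nyb < 12:
            out.append(COMMON[nyb])
        else:
            out.append(RARE[(nyb - 12) * 32 + int(bits[pos:pos + 5], 2)])
            pos += 5
    return "".join(out)

msg = "HELLO, WORLD"
print(len(msg) * 8, "bits as 8-bit ASCII vs", len(nybble_encode(msg)), "bits packed")
```

Lowercase letters fall into the 9-bit group here, so the sketch assumes mostly uppercase text. A different mix of text would call for a different `COMMON` table.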

Sure we had online services and chatrooms.

Global X.25 networks had been around since the 70s.

That’s what the original Tron movie makes a reference to.

In the States, the largest BBS of the time, CompuServe, offered a CB Simulator for chatting and an international e-mail service.

Here in Europe, we had Minitel and similar services.

People into technology, the geeks and nerds, did have platforms to discuss such things.

Then there was ham radio and international chatting via RTTY.

7-bit ASCII was available as an alternative to Baudot, too, for sending and receiving computer files via shortwave or ham satellites.

The technology was there, but the users lacked the necessary awareness, maybe.

Pure binary is (just) OK if you’re writing it to a digital medium, but cassette tape is analogue, so the data needs to be encoded. For the reasons outlined in the article, it’s also an imperfect medium, so you needed to add error correction, framing, etc. to make it reasonably robust. Plus, the compute power and RAM available were limited, so compression was hard, if it was possible at all.

The problem was the datasette as a medium.

Using a VHS tape would have solved the issue.

Either by using the hi-fi stereo head (cheap solution) or by using the video head (elegant solution, gigabytes of storage).

Considering that VHS was synonymous with the 80s, it’s a shame it didn’t make it as a computer medium on a wider scale.

The higher price would have dropped, eventually, too.

S-VHS would have really improved it, although the high-end decks cost almost as much as the computer.

Hi, black/white (monochrome) resolution of ordinary VHS was good enough, I think.

You need to bear in mind that the Kansas City standard predated VHS by a couple of years, and VHS recorders were rare in homes as well as incredibly expensive compared to cassette decks. The hardware required to generate a video signal would also have added significant cost, the hardware to demodulate it would have been even more expensive, and, frankly, the capacity was overkill.

So, the logical step from cassette tape was floppy disk drives.

VHS streamer tape storage did exist, however. For backup purposes.

Example (using video head): https://www.youtube.com/watch?v=TUS0Zv2APjU

Using VHS as an audio medium was still more mass market compatible than using 8-track or reel tapes.

Production of VHS cassettes and recorders was on the rise in the 80s.

In short, it was a common medium in Western society, next to the audio cassette.

But without the issues of unstable tape transport.

The audio quality of VHS hi-fi was close to CD quality, too.

VHS as backup was 1990s, I think. And in the 1980s, you’d be wasting bandwidth on a VHS tape.

But there were definitely backup solutions: https://en.wikipedia.org/wiki/ArVid

Talking about VHS when, back then, most children couldn’t even afford a gravel bicycle is pure copium. Fact is, programmers were too stupid to come up with proper data encoding, so they bodged something that didn’t even work 85% of the time.

But children using or owning home computers was most normal thing on other hand, right? 🤨

My point was that music cassette (aka mc, compact cassette) was very low-tech and unreliable. Period.

And the only other blank medium available in an average small town, next to some Agfa or Kodak film, Polaroid packs, music cassettes, etc., was the VHS cassette.

Or Video 2000 or Betamax, if you will. Or VHS-C. It’s the same basic tech.

I don’t think a supermarket had reel tape on sale, which was a proper audio medium in terms of reliability.

The music cassette was barely even good enough to hold 128×128-pixel monochrome still images (SSTV).

Storing data on a music cassette was the same as trying to get data over shortwave radio! That’s how bad it was! Chrrrrk! Tssss! Woooo!

Another technology was the Quick Disk (QD).

It was a hybrid of a diskette, a record and a music cassette.

The Sharp QD drive used one long spiral track to record data.

Unfortunately, it didn’t catch on as a generic floppy alternative.

VHS was more mass-market compatible, in theory, still, I think.

QDs weren’t cheap, I think, even if the drive was.

https://oldmachinery.blogspot.com/2014/06/sharp-mz-800-quickdisk-and-monitor.html?m=1

https://en.wikipedia.org/wiki/History_of_the_floppy_disk#Quick

I don’t understand the connection between a physical storage scheme and a “raw binary” “.tar.gz” file.

Are you asking why they didn’t just represent a 1 on the tape as a magnetized section and a 0 as a non-magnetized section? (Instead of 4x 1.2 kHz and 8x 2.4 kHz.)

Because the hardware on the market was designed for storing sound waves.

And the naive way of storing binary on magnetic medium has trouble differentiating 100 zeros from 101 zeros. They both look like a non-magnetized chunk; the second is just 1% longer. Consumer tape deck motor speeds might not be consistent enough to tell the difference.

Representing a 0 as 4 slow waves gives you a more reliable way to count a long sequence of 0s even if the tape speed is unreliable.
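
An idealized illustration of that point. It compares a receiver that times NRZ runs against its own fixed clock with one that counts tone cycles. It also ignores the fact that real tone boundaries aren’t locked to bit boundaries, so treat it as a toy model.

```python
def nrz_run(n_bits, speed):
    """Receiver times a flat NRZ run against its own clock. At `speed` x nominal
    tape speed the run lasts n_bits / speed bit periods, rounded to whole bits."""
    return round(n_bits / speed)

def cycle_count_run(n_bits, speed, cycles_per_zero=4):
    """Receiver counts 1.2 kHz cycles instead. Speed shifts pitch and duration,
    but each 0 still contributes four cycles, so `speed` drops out entirely."""
    cycles = n_bits * cycles_per_zero
    return cycles // cycles_per_zero

for speed in (1.00, 0.99, 1.02):
    print(speed, nrz_run(100, speed), cycle_count_run(100, speed))
```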

I suppose Manchester encoding could have been used in that manner.

True, the Sym-1 had a one-pulse-per-bit, high-speed tape data transfer. However, it was late on the scene and people had moved on to the next great hope, floppy disks, so it went largely unacknowledged. It had great resistance to wow and flutter.

The instant of the change in frequency did not have to be synchronized with the change of logic state, though, so you couldn’t just count off the number of cycles. Also, the frequency was not crystal-controlled like the associated UART was.

Listen to yourself…

The incompetent move is writing ‘raw binary’ to physical media in any form.

Thank dog the file system and drives are saving you from yourself.

Live closer to the metal, it will save you embarrassment.

Abstraction layers are great, until you forget they’re there.

Lol. That comment is incompetent on so many levels :)

The many hours of my life wasted typing “LOAD” only to see “ERROR” half an hour later, lol.

Most of the problem was the playback signal levels and the comparator circuit to square them up.

TRS-80, ZX81, TI-99/4A: some were very finicky. A VU meter helps.

I may have copied Altair BASIC, the first Microsoft product. This was the beginning of software piracy. Bill Gates complained about it and hence his drive to monopoly.

I hear you brother. At least you were getting an error at the end. With my Altair, it would hang if a save didn’t complete. The good news was, with only 4k of memory, it didn’t take long to type in the program again.

One of the last things I did before I moved on to a PDP-11 with floppy disks was write my own cassette interface, but I don’t remember at all how I did it.

Commercial software had multiple copies to help ensure a good read. BYTE magazine came out with an improved standard. CUTS format (UK).

Later came improvements like the Exatron Stringy Floppy and Tandberg digital cassette drives.

“I may have copied Altair BASIC, the first Microsoft product. This was the beginning of software piracy. Bill Gates complained about it and hence his drive to monopoly.”

Small correction here: Software piracy didn’t exist until shortly after, because there had been no market yet when computer hobbyists built their first computers.

Previously, students and professors at universities were used to exchanging information freely, including source code and formulas.

If you don’t believe me, have a look at the copyright situation in the US in the early 70s.

There was a change just shortly before B.G. sold his BASIC.

“The Computer Software Copyright Act of 1980” protected apps I believe.

“In 1976, Gates wrote an open letter to hobbyists in which he stated that less than 10 percent of Altair owners ever paid for their copy of BASIC. Gates complained that his royalties on Altair BASIC made the time they spent developing it worth less than $2 an hour. In this letter, he said that he “…would appreciate letters from anyone who wants to pay up… Nothing would please me more than being able to hire ten programmers and deluge the hobby market with good software.”

“Small correction here: Software piracy didn’t exist until shortly after”

Small correction here: Software piracy began the first time someone pirated software. It’s conceivable that instance described above was actually the first.

In the beginning of the DIY computer scene there was no market for computers, though!

Software wasn’t available on sale yet, in short! That’s the point!

Microsoft basically took advantage of the copyright change and accused ordinary users of piracy who hadn’t noticed the change of law yet.

The software was way overpriced, greater than the H/W! 9/1975:

Altair Extended BASIC $750, Altair 8K BASIC $500, Altair 4K BASIC $350

A complete assembled Altair 8800 $621 or $439 kit.

If a few hundred people bought MS BASIC, I think the programmers’ wages would have been paid for. Microsoft only ported over an open BASIC from somewhere else, which is a huge irony.

But the greedy pricing fueled piracy IMHO, with the first low cost personal computers.

https://archive.org/details/radioelectronics46unse_6/mode/2up

Some of the problems were due to the company’s naive firmware coding: what worked in the lab with good test equipment failed in the real world with all kinds of consumer tape machines & tapes. For the TRS-80 Model 1’s ROM, the timing loop after the clock pulse was set too low: it checked for the start of the data pulse. So any noise coming in would be treated as data. After lots of complaints and various hardware “smoothing” circuit fixes, they finally updated the ROM with more reliable code–right at the end of the Model 1’s life.

The Sym-1 did it.

That’s why the receive side of the modem should amplify it to clipping, so it becomes a square wave before any timing detections, regardless of level. I made such a tape modem, and the playback level simply didn’t matter.

300 bits per second, with 11 bits per byte, means about 27 bytes per second, and just under 2.5kB for a 90 minute tape. That seems very inefficient, I’d think the bandwidth would be much larger if a better encoding scheme would be used.

Even though better encoding was technically possible, in 1975:

It would have required more CPU cycles, more RAM, and more precision from hardware.

A “standard” had to be simple enough that any microcomputer could implement it with minimal parts and code.

The KCS was designed for universality, not optimality.

And yes, there were better schemes later.

I’m coming up with a rather different figure using UNITS(1): 300|11 byte/second * 90 minute = 143.8 kibibyte.

Times 60: 27 × 60 × 90 = 145,800 bytes per 90 minutes.

27 bytes per sec at 60 sec per min is about 1.6 KiB per minute. 90 minutes would be about 142 KiB. Most machines loading from tape would barely be able to handle a 16 KiB program.

27 bytes per second, times 60 seconds per minute, times 45 minutes per side of a C-90 cassette, equals about 73KB, not 2.5KB. (That’s unless you really meant a tape that’s 90 seconds on a side.)
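
For anyone checking the arithmetic in this thread, here is a small calculator. It assumes KCS framing of 11 bits per byte (1 start, 8 data, 2 stop):

```python
def throughput(baud=300, bits_per_byte=11):
    """Bytes per second for an asynchronous framed link (KCS: 1 + 8 + 2 bits)."""
    return baud / bits_per_byte

def capacity(minutes, baud=300, bits_per_byte=11):
    """Bytes that fit in `minutes` of continuous recording."""
    return throughput(baud, bits_per_byte) * minutes * 60

print(f"{throughput():.2f} B/s")
print(f"one side of a C-90 (45 min): {capacity(45):,.0f} bytes")
print(f"whole C-90 (90 min): {capacity(90) / 1024:.1f} KiB")
```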

Although an article on tape storage is interesting, it’s actually quite depressing to see yet another KC or CUTS tape standard article when much better and simpler tape interfaces were developed in other countries. The UK’s ZX Spectrum, for example, supported 1500 baud (and even faster with custom tape loading routines) using a zero-crossing technique: a 0 or 1 is determined by the time between transitions rather than by counting a number of oscillations at a given frequency.

The ZX Spectrum, for example, just needed a 1-bit audio input and a Schmitt trigger as hardware support – everything else was in software.
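
A minimal sketch of that pulse-length approach, in three steps:
1. A software Schmitt trigger turns sampled audio into clean levels.
2. The time between transitions is measured in Z80 T-states.
3. Each pair of equal pulses becomes one bit.

The 855 and 1710 T-state pulse lengths are the commonly documented Spectrum ROM timings. The hysteresis thresholds and the synthetic test signal are assumptions for illustration. The pilot tone and sync pulses that a real loader uses to find the start of data are left out.

```python
T_STATE_HZ = 3_500_000   # ZX Spectrum Z80 clock
ZERO_PULSE = 855         # ROM pulse lengths in T-states, two pulses per bit
ONE_PULSE = 1710
THRESHOLD = (ZERO_PULSE + ONE_PULSE) // 2

def schmitt(samples, low=-0.2, high=0.2):
    """Hysteresis comparator: the output only flips after crossing the far
    threshold, so small noise around zero is ignored."""
    state, out = 0, []
    for s in samples:
        if state == 0 and s > high:
            state = 1
        elif state == 1 and s < low:
            state = 0
        out.append(state)
    return out

def edge_intervals(levels, sample_rate):
    """Time between successive transitions, converted to T-states."""
    edges = [i for i in range(1, len(levels)) if levels[i] != levels[i - 1]]
    return [(b - a) * T_STATE_HZ / sample_rate for a, b in zip(edges, edges[1:])]

def pulses_to_bits(intervals):
    """Average each pair of pulses and compare with the midpoint threshold."""
    return [1 if (a + b) / 2 > THRESHOLD else 0
            for a, b in zip(intervals[0::2], intervals[1::2])]

def synthesize(bits, sample_rate, amplitude=0.8):
    """Synthetic test signal: a lead-in, two equal pulses per bit, and a tail
    so that the final pulse has a closing edge."""
    samples, level = [-amplitude] * 5, amplitude
    for b in bits:
        n = round((ONE_PULSE if b else ZERO_PULSE) * sample_rate / T_STATE_HZ)
        for _ in range(2):
            samples += [level] * n
            level = -level
    return samples + [level] * 5

audio = synthesize([0, 1, 1, 0], 44_100)
print(pulses_to_bits(edge_intervals(schmitt(audio), 44_100)))
```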

https://shred.zone/cilla/story/440/spectrum-loading.html

A form of software-defined data decoding, sans the Schmitt trigger.

It reminded me of the thousands of attempts to adjust the azimuth of the cassette player I used when loading games on my brand-new ZX Spectrum back in the ’80s. Just a small screwdriver and little turns right or left…

Apple 2 was also much better…IIRC 1200 baud on tape.

C-64 roughly same.

TRS-80 was 300 baud though…(No excuse given the date…Everything about Trash80s was kinda lame…Expensive interface w twisted wire mod and buffered ribbon cable!)

Not sure about the PET, but that’s also an earlier machine.

Check your dates.

This was the mid-70s; five years was a long time.

The Sharp MZ-80K was from 1978 and used 1200 baud, too.

Its datasette drive was similar to the VC-20/C64 one.

TRS-80 Model I was 500 bits/sec, and the Model III was 1500 bits/sec. Only Europeans cared about cassettes after 1980 anyhow.

And my EI worked perfectly fine without that crazy buffered cable. It certainly worked a lot better than a ZX-80 RAM dongle that was flaky without any cable at all.

“Only Europeans cared about cassettes after 1980 anyhow.”

European/German here. That’s sad, but true, I think.

I myself had a home computer with a datasette still (Sharp MZ).

But CP/M users did have floppies, because it was a requirement.

The worst example for datasette was the C64 maybe, the über home computer, sort of.

It was very popular and the main medium to many users was datasette.

By the late 80s, I think, the 5.25″ floppy slowly got more popular (except in East Germany).

This was at a time when the Atari ST, Amiga and Mac had already moved on to 3.5″ floppies, and PC users too, especially laptop owners.

Other home computer users, such as Amstrad CPC and PCW owners, at least had 3″ floppies.

This is all so sad, because the datasette had no real random access.

C64 software on datasette was also sometimes sold as an inferior version of the floppy release.

Storage consuming things like intro animation, music or graphics were missing in some datasette versions.

Datasette users also missed out on GEOS and GEOS applications, of course.

The C64 portable (SX-64) no longer had datasette routines or a datasette interface.

But because the European market was so dependent on the datasette, it probably had no chance over here.

Another negative was the ROM space wasted on datasette routines in the C64 KERNAL and in other home computers with a 64KB address space.

The positive side was that a tape interface could be used as an audio digitizer or as an RTTY interface.

Some of the datasette drives operating at 1200/2400 Hz could be used to detect/generate RTTY signals.

With clever RTTY software, detecting either MARK or SPACE was sufficient; you didn’t need both.

Other solutions used the motor sense signal etc to operate morse code or RTTY.

I know many here find that strange and odd, but in the 70s and 80s it was a hobby among nerds/geeks to decode RTTY news on shortwave.

Manchester encoding or some variant of it.

Speed didn’t matter at the time since the only alternative was literally re-entering everything in from scratch by hand. So of course the first standard prioritised reliability. The “better and simpler” interfaces came several years later, building off the experience gained from using the older implementations.

Didn’t hurt that the UK home computer market was much less wealthy and so everything was done to minimise the BOM cost as much as possible. No hardware is cheaper than “implemented in code”, and once your implementation is software defined you can tweak the performance as far as it’ll go.

Good reply. I might quibble with “Speed didn’t matter..”. I think the real tolerance was about 3 or 4 minutes, or 8kB at 300 baud. And many early computers had that amount. I think the minimum configuration for the first IBM PC was a miserable 16kB (and it had cassette support too).

When you get to 48kB home computers, we really did need about 1500 baud just to be able to keep loading times to 4 minutes! Loading a SCREEN$ and a full 40kB of code/data for a game would take nearly 4.5 minutes to do that.

Totally agree about everything else you’ve written.

Bootstrapping a loader for the earliest computers would be quite a challenge. I think I could squeeze a zero-crossing tape loader into 64 bytes on a 2MHz 8080. That assumes you start with an 8080 computer with a toggle panel for entering code (at a minimum, 8 toggles + load address + write data + go, so 11 toggles in total).

And it would be possible to build a diode ROM of that size (if, as is likely, you had no way to program or erase an EPROM). A 64-byte diode ROM would need 9 74LS138 chips to decode each address and about 64 x 4 = 256 diodes. At about 1p or 1c per diode, that’s just £2.56 or $2.56, plus maybe 20p per chip => £4.36. Then add some stripboard and a tri-state buffer governed by /OE. That’s going to be about the same price as a minimal EPROM of the time.
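
The parts estimate is easy to script. This sketch makes a few assumptions:
- There is one diode per 1 bit. Whether diodes mark 1s or 0s really depends on the pull-up/pull-down arrangement.
- There is one 74LS138 per 8 words, plus one more to select among them.
- Prices follow the comment: 1c per diode and 20c per chip.

```python
DECODER_OUTPUTS = 8   # a 74LS138 is a 3-to-8 line decoder

def diode_rom_estimate(rom: bytes, diode_cost=0.01, decoder_cost=0.20):
    """Rough parts count and cost for a diode-matrix ROM of up to 64 words.
    Assumes one diode per 1 bit, one 74LS138 per 8 words, and one more '138
    to pick which of those decoders is enabled."""
    if len(rom) > DECODER_OUTPUTS * DECODER_OUTPUTS:
        raise ValueError("two levels of 74LS138s only reach 64 words")
    diodes = sum(bin(b).count("1") for b in rom)
    banks = -(-len(rom) // DECODER_OUTPUTS)           # ceiling division
    decoders = banks + (1 if banks > 1 else 0)
    cost = diodes * diode_cost + decoders * decoder_cost
    return diodes, decoders, cost

# 64 bytes averaging four 1 bits each, matching the comment's 64 x 4 estimate
diodes, decoders, cost = diode_rom_estimate(bytes([0x0F] * 64))
print(diodes, decoders, round(cost, 2))
```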

With a 64-byte loader you can load a save routine and/or a better loader. At that stage you can probably write an EPROM programmer. However, my sequence would be:

Toggle in the Save routine and save it to tape. From then on, you can start by loading the Save routine.

Add a hex keypad or 40 key keyboard, so you can enter numerical data (and/or characters later).

Replace the toggling with a small monitor program that uses the keypad/keyboard, perhaps 256-512 bytes long. That would be quite a challenge if you have to toggle it in! I think a monitor with just load address and write data is all you need, because you can still use ‘go’ from the toggles.

Then you can code much quicker: write a longer monitor program, one that can help with jumps and labels and outputs to a teletype, starburst display, electric typewriter or super-posh: Don Lancaster’s TV typewriter type hardware!

Write an EPROM burner program and then use it to burn your monitor program + Load + Save to an EPROM. That can now replace your 64-byte loader.

Now you’re up to the level of an early 1970s home-brewed computer.

Listings were a pain in the 80s, even!

There were experiments to get away from them.

a) transmitting tape signals via FM radio (VHF broadcast)

b) shipping records made of thin plastic foil with computer magazines

c) printing of bar codes in magazines and using a DIY barcode reader at home

d) use of a blinking dot on CRT TV to get programs over the air

e) use of teletext-like signals hidden in the blanking lines of a TV signal

Here in Germany/W-Germany, Channel Videodat did this.

But that was at a time when PCs were starting to dominate.

Not just home computers, but also RTTY terminals.

Such as Tono Theta 7000E.

https://www.universal-radio.com/catalog/decoders/7000E.html

I once tried to build an 8-track tape data storage for my TRS-80 Model 1. I never got it to work with any reliability. I also tried to build a stereo tape data storage, with each channel going to its own decoder/UART and then loading into different segments of memory at the same time. It kind of worked sometimes… but then I got a 77-track floppy drive, was in Big Storage for sure, and gave up on the tapes.

Basically, suppose you didn’t encode 1 and 0 as frequencies, and instead used a positive voltage for 1 and a negative one for 0. This rectifier/comparator idea doesn’t work well then, since sustained 1s or 0s would be a DC offset, i.e. silence, which comes out as a brief jolt that falls back to silence due to the DAC.
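
A toy model of that effect. It uses a first-order high-pass filter as a stand-in for the AC coupling in a tape record/playback chain. The filter and its `alpha` are assumptions, not measurements of any real deck. A long NRZ run is just a DC step, so the output spikes and then decays back to zero, while a signal that keeps alternating passes through.

```python
def ac_couple(x, alpha=0.99):
    """First-order high-pass: y[n] = alpha * (y[n-1] + x[n] - x[n-1])."""
    y, prev_x, prev_y = [], 0.0, 0.0
    for s in x:
        prev_y = alpha * (prev_y + s - prev_x)
        prev_x = s
        y.append(prev_y)
    return y

long_run = ac_couple([1.0] * 1000)           # NRZ: a run of 1s is a DC level
alternating = ac_couple([1.0, -1.0] * 500)   # keeps changing, like a tone
print(f"run of 1s: starts at {long_run[0]:.2f}, ends at {long_run[-1]:.5f}")
print(f"alternating: still swinging by {max(map(abs, alternating[-10:])):.2f}")
```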

There are lots of ways to deal with this problem, and they’ve been used in missile telemetry for decades. See the Range Commanders Council standard (RCC 106) for more than you could ever want to know.

You could restrict the data. For example, take a 10-bit ADC and reduce its range to 1000 codes, eliminating the first 12 and last 12 values, so the all-zeros and all-ones words never occur.

Or randomized NRZ: run the data through a linear feedback shift register to randomize the output. The obvious tradeoff is in bit error rate. A variation on this is encryption.
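
A sketch of randomized NRZ using a self-synchronizing scrambler. The x^15 + x^14 + 1 feedback (taps 14 and 15) is an assumption chosen for illustration, not taken from RCC 106. The sketch also shows the bit-error tradeoff: a single flipped bit on tape comes out as three errors after descrambling.

```python
TAPS = (14, 15)          # assumed feedback: x^15 + x^14 + 1
SEED = [1] + [0] * 14    # any nonzero start state; both ends should agree on it

def scramble(bits, state=SEED):
    """Self-synchronizing scrambler: out[n] = in[n] ^ out[n-14] ^ out[n-15]."""
    reg = list(state)    # reg[0] holds the most recent output bit
    out = []
    for b in bits:
        o = b ^ reg[TAPS[0] - 1] ^ reg[TAPS[1] - 1]
        out.append(o)
        reg = [o] + reg[:-1]
    return out

def descramble(bits, state=SEED):
    """Inverse: in[n] = r[n] ^ r[n-14] ^ r[n-15], using the received bits r."""
    reg = list(state)
    out = []
    for r in bits:
        out.append(r ^ reg[TAPS[0] - 1] ^ reg[TAPS[1] - 1])
        reg = [r] + reg[:-1]
    return out

zeros = [0] * 100
line = scramble(zeros)   # a long run of 0s now has plenty of transitions
print("".join(map(str, line)))
```

Because the descrambler only looks at received bits, a receiver that starts in the wrong state recovers after 15 bits.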

Plus, there are other versions of NRZ than NRZ-L. NRZ-S changes level on a space (zero); NRZ-M changes level on a mark (one). Handy. I used that once with an SDLC bit stream that used stuff bits. It looked odd and totally confused the range telemetry guys, but it worked.
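
A short sketch of the line codes named above, plus SDLC-style bit stuffing, which inserts a 0 after every five consecutive 1s. With NRZ-S, every 0 causes a transition. Stuffing guarantees a 0 at least every six bits, so the line level can never sit still for long.

```python
def bit_stuff(bits):
    """SDLC/HDLC-style stuffing: insert a 0 after every five consecutive 1s."""
    out, ones = [], 0
    for b in bits:
        out.append(b)
        ones = ones + 1 if b else 0
        if ones == 5:
            out.append(0)
            ones = 0
    return out

def nrz_s(bits, level=0):
    """NRZ-S: toggle the line level on every 0 (space); hold it on a 1."""
    out = []
    for b in bits:
        if b == 0:
            level ^= 1
        out.append(level)
    return out

def nrz_m(bits, level=0):
    """NRZ-M: toggle the line level on every 1 (mark); hold it on a 0."""
    out = []
    for b in bits:
        if b == 1:
            level ^= 1
        out.append(level)
    return out

def longest_run(levels):
    """Longest stretch of identical line levels, i.e. time without a transition."""
    best = run = 1
    for a, b in zip(levels, levels[1:]):
        run = run + 1 if a == b else 1
        best = max(best, run)
    return best

ones = [1] * 40
print(longest_run(nrz_s(ones)), longest_run(nrz_s(bit_stuff(ones))))
```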

And of course there are group encodings: take a group of bits, three or four, and translate it to a larger group, with the translation carefully chosen to limit runs. With a big enough group you also get forward error correction.
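
One well-known group code is 4B/5B, as used in FDDI and 100BASE-TX. Every 4-bit nybble becomes a 5-bit code, chosen so that no more than three 0s ever appear in a row, even across code boundaries. A sketch in Python follows; sending the high nybble first is an arbitrary choice here.

```python
FOUR_B_FIVE_B = ["11110", "01001", "10100", "10101", "01010", "01011", "01110", "01111",
                 "10010", "10011", "10110", "10111", "11010", "11011", "11100", "11101"]
DECODE_5B = {code: nybble for nybble, code in enumerate(FOUR_B_FIVE_B)}

def encode_4b5b(data: bytes) -> str:
    """Each byte becomes two 5-bit codes, high nybble first (arbitrary choice)."""
    return "".join(FOUR_B_FIVE_B[b >> 4] + FOUR_B_FIVE_B[b & 0xF] for b in data)

def decode_4b5b(bits: str) -> bytes:
    """Look up each 5-bit group; an invalid group raises KeyError."""
    nybbles = [DECODE_5B[bits[i:i + 5]] for i in range(0, len(bits), 5)]
    return bytes((hi << 4) | lo for hi, lo in zip(nybbles[0::2], nybbles[1::2]))

def longest_zero_run(bits: str) -> int:
    return max(len(run) for run in bits.split("1"))

# Worst case over every pair of adjacent codes
print(max(longest_zero_run(a + b) for a in FOUR_B_FIVE_B for b in FOUR_B_FIVE_B))
```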

Lots of things you can do if you have the hardware.

Computers like the ZX Spectrum used transitions to encode 1 and 0 and the time between the transitions defined whether it was a 1 or 0.

So much easier than all this mess is VPW modulation, like J1850 uses. It’s very similar to the addressable LEDs everyone loves. A long time between sign changes is a 1, a short time is a 0. Set the zero to something like 100us between transitions (5kHz output) and the 1 to double that (200us between transitions, 2.5kHz for a run of ones). Then you get something like 7.5kbps encoding with a hardware interface that looks like a line driver and a Schmitt trigger with a 1kHz high-pass in front of it. You also run with a ton of margin for tape speed (you slice 0 from 1 at 120us) without fancy math on the software side. You also get something like 3.375MB raw per channel per hour of tape (so 13MB raw in a 60 minute tape). Have some fun with preambles at half frequency or something, plus chunked loading (64kB of RAM means you’d never need a whole tape at once), and you probably end up “wasting” half the tape to get something better than 90s floppies for total storage. … damn, this is how you get into retrocomputing, isn’t it.
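
A quick check of the margins in that proposal. It uses the comment’s numbers (100 µs for a 0, 200 µs for a 1, slicing at 120 µs), not the actual J1850 timings. In this model, a tape can run about 16% slow or well over 50% fast before bits flip. With an equal mix of 0s and 1s, the average is 150 µs per bit, or about 6.7 kbps. All 0s give 10 kbps, and all 1s give 5 kbps.

```python
ZERO_US = 100     # commenter's proposal: 100 us between transitions for a 0
ONE_US = 200      # and 200 us for a 1
SLICE_US = 120    # decision threshold from the comment

def vpw_encode(bits):
    """Each bit is the interval (in microseconds) until the next transition."""
    return [ONE_US if b else ZERO_US for b in bits]

def vpw_decode(intervals_us, slice_us=SLICE_US):
    return [1 if t > slice_us else 0 for t in intervals_us]

def play(intervals_us, speed):
    """Playback at `speed` x nominal: a slow tape (speed < 1) stretches intervals."""
    return [t / speed for t in intervals_us]

def average_bps(bits):
    return len(bits) / (sum(vpw_encode(bits)) * 1e-6)

bits = [0, 1] * 50
for speed in (0.85, 1.0, 1.5, 0.8):
    ok = vpw_decode(play(vpw_encode(bits), speed)) == bits
    print(f"speed {speed}: {'ok' if ok else 'bit errors'}")
```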

The Acorn Electron and BBC Micro in the UK used a similar system, but at 1200 baud.

This topic is closely related:

https://hackaday.com/2021/10/20/audio-tape-interface-revives-microcassettes-as-storage-medium

The chip I used the most in Uni was the Op-Amp (Ampop here). It could do so much it’s still amazing today.

By the way, ZX Spectrum (1982) cassette tapes transferred at 1200bps, though there were turbo loaders that got to 4800bps. The chance of bit errors was higher, so you’d aim for higher-quality tapes, of course.

It is great to see people still talking about how things work at the core of digital communication. I spent years studying and working at that core, from DOS device drivers for interfaces, then LAPB/LAPD, and later core optical transmission (SONET/SDH).

You mentioned the podcast. It’s Saturday and it is missing. Any updates on the matter? Thanks.

My dad was our city’s auditor and was ecstatic when they installed a computer at City Hall in the 1970s. He would occasionally bring home used data cassettes. At the time, he said they were better than consumer versions because of their robustness. I would think the tape stock was better, as well as the transport. I still have one, and it seems heavier than a standard cassette from that era. My high school’s 1976 spring concert was recorded on it and survives to this day.

If you limit your frequency range, well within the spec, to 65 Hz – 10 kHz, how many non-harmonic frequencies could you reliably put on a tape (taking care with typical wow and flutter)? Could you make a multiple bandpass filter (is that a comb filter?) that restores N signals? If you can get 9, you could have a clock and 8 bits all in parallel; 11 would add start and stop signals. I’m talking mono setups, since that would have been cheap back then, but stereo was obtainable. Also, some reel-to-reel decks could do 4 tracks.

Yeah, as soon as I learned about KC I thought about that.

I was more conservative: 4 tracks, to transfer nibbles, using 3 of them to carry a byte plus recovery data (as tape really was that bad).

But it seems that it boiled down to the cost. Simply the cost.

Designing analog filtering was expensive (more expensive than nothing, at least), and you didn’t have the power to do it in software back then.

Autocorrelation (a kind of poor man’s FFT at very specific frequencies) appeared later (in the 80s, I think).
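
A related technique that suits the parallel-tone idea is the Goertzel algorithm. It measures the energy at one chosen frequency with a two-term recurrence and is often described as computing a single DFT bin. This is a swapped-in technique, not the autocorrelation approach mentioned above. The sketch uses assumed values: an 8 kHz sample rate, 50 ms blocks, and a hypothetical tone plan placed on exact 20 Hz bin centers so the tones don’t leak into one another.

```python
import math

def goertzel_power(samples, freq, sample_rate):
    """Power at one frequency via the Goertzel recurrence
    s[n] = x[n] + 2cos(w) s[n-1] - s[n-2]."""
    coeff = 2 * math.cos(2 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2

def detect_tones(samples, freqs, sample_rate, threshold):
    """One True/False per channel: is that channel's tone present in this block?"""
    return [goertzel_power(samples, f, sample_rate) > threshold for f in freqs]

RATE, BLOCK = 8000, 400                 # assumed: 8 kHz sampling, 50 ms blocks
CHANNELS = [600, 900, 1300, 1700]       # hypothetical tone plan, all on 20 Hz bins
block = [math.sin(2 * math.pi * 600 * n / RATE) + math.sin(2 * math.pi * 1300 * n / RATE)
         for n in range(BLOCK)]
print(detect_tones(block, CHANNELS, RATE, threshold=1000.0))
```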

So yeah, hope this info helps = )

Oh, it was way worse than those diagrams show, at least if you wanted to use any old recorder you bought in any old shop.

First you found that 50 percent of machines inverted your signal and the rest didn’t.

Second the DC voltage superimposed on your signal was much bigger than the signal itself, and could be any function you like of the previous 50 cycles.

Third if you tried to write unfiltered square waves to an audio recorder you had no idea what it would do with the higher frequency elements.

It took me days and days designing both filters and code.