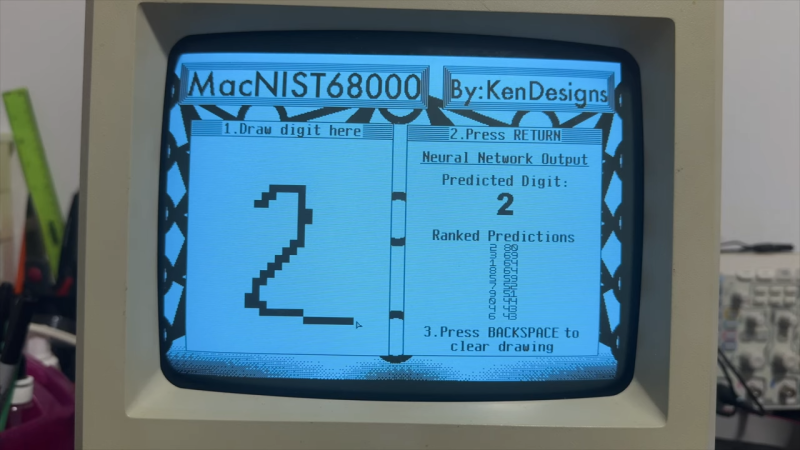

Modern retrocomputing tricks often push old hardware and systems further than any of the back-in-the-day developers could have ever dreamed. How about a neural network on an original Mac? [KenDesigns] does just this with a classic handwritten digit identification network running with an entire custom SDK!

Getting hardware of this vintage to run what is effectively several decades of machine learning progress is as hard as you might imagine. (The MNIST dataset used wasn’t even put together until the 90s.) The original Mac’s floating-point limitations cause a variety of issues when attempting to run machine learning models. One of the several hoops to jump through was quantizing the model, which also allows it to be squeezed into the Mac’s limited RAM.
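
For readers who haven’t met quantization before, here is a minimal Python sketch of the general idea: floats are mapped to small integers that share one scale factor, so the bulk of the math can be done without floating point. This is an illustration only, not [KenDesigns]’ code. The symmetric 8-bit scheme, the function names `quantize` and `int_dot`, and the sample numbers are all assumptions made for the example.

```python
def quantize(values, bits=8):
    """Map floats to signed integers that share one scale factor (symmetric scheme)."""
    qmax = 2 ** (bits - 1) - 1             # 127 for 8 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0   # fall back to 1.0 for all-zero input
    return [round(v / scale) for v in values], scale


def int_dot(q_weights, q_inputs):
    """Dot product using integer arithmetic only."""
    return sum(w * x for w, x in zip(q_weights, q_inputs))


# Hypothetical weights and inputs, chosen only to exercise the functions.
weights = [0.5, -1.0, 0.25]
inputs = [1.0, 0.5, -0.5]

q_w, w_scale = quantize(weights)
q_x, x_scale = quantize(inputs)

# A single float multiply at the end recovers an approximation of the float result.
approx = int_dot(q_w, q_x) * w_scale * x_scale
exact = sum(w * x for w, x in zip(weights, inputs))
print(q_w, q_x, approx, exact)
```

Each quantized value fits in a single byte instead of a multi-byte float, which is where the RAM savings come from, at the cost of a small rounding error in the result.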

Impressively, one of the most important features of [KenDesigns]’ setup is the custom SDK, which makes it possible to run without macOS. This allows for incredibly nitty-gritty adjustments, but also requires an entire custom installation. It’s not all for nothing, though: after some training manipulation, the model runs with clear proficiency.

If you want to see it go, check out the video embedded below. Or if you just want to run it on your ancient Mac, you’ll find a disk image here. It has even been tested on emulators for those without the original hardware. Newer hardware traditionally proves to be easier and more compact to use than these older toys; however, that doesn’t make it any less impressive to run a neural network on a calculator!

Let’s just wait a little longer and users will have unlearned how to use a mouse on a GUI-operated system.

Instead, they will be asking an LLM like ChatGPT to click an icon or make a selection. Let’s just wait...

I asked ChatGPT to analyze the products on a website and give me the highest-priced product in each category.

It took almost an hour. It browsed the site inefficiently at first, but eventually figured out how to do so in a reasonable manner and repeated the process across categories. It missed one category for some reason, but got the other five.

I didn’t have to tell it how to do any of this; I just told it what I wanted it to collect and give me.

So, anybody with a brain and hand-eye coordination could have done it in a fraction of the time, but it was still impressive that we can provide a goal and the system can figure out how to achieve it.

The technology is fascinating, no question.

However, it’s scary to think what it may do to us, or some of us, in the near future.

I know people who can’t do written calculations anymore, for example.

Today, we can be glad when people still know how to use a pocket calculator.

Humans should be the masters of technology and not the other way round.

Once they as a society have forgotten the fundamental workings of their technology, they’ll feel lost once said technology needs maintenance.

It wasn’t like this yet in the 1980s, when kids knew every PEEK/POKE of a C64 and had a book on the 6502 and the C64 Kernal.

The 90s were still mostly similar, when books on x86 assembly and MS-DOS interrupt tables were normal things to find on the shelf of any average computer user.

Then there’s another problem. Search engines are switching to LLMs, too.

In the near future, it’ll no longer be about finding valuable information on a given topic, but about search engines providing an answer based on ratings (what users seem to like most).

Like what started a couple of years ago with paid ads showing up first, but worse.

So if you’re searching for information about the healthiness of a certain drink, you won’t get links to scientific papers anymore, but may end up with a positive summary or a public relations website of C. Cola.

I generally agree.

However, some tech becomes obsolete or relegated to niche- or hobbyist-only use over time. Very few people besides hobbyists and collectors use a tube radio that they have to maintain. Ditto a butter churn or pre-19th-century-technology firearms.

Other tech becomes so low-maintenance that it’s easier to pay an expert on the rare occasions that it fails. Take cars. Even 60 years ago, wise drivers knew how to do things like add water to the battery, change the oil, add radiator fluid, change a tire (inner tube and tubeless), adjust and replace spark plugs, and more. Now, failures other than a punctured tire are rare, and cars warn you ahead of time so you can drive to your mechanic or, for “imminent catastrophe” issues, pull over and call for help.

Do you know how to make a 6502? Would you be able to repair the chip if it broke?

It’s called the technology trap, and we “fell into it” decades before computing. It’s hard to say that it was a bad idea, especially considering that modern medicine is also part of that system.

https://www.youtube.com/watch?v=lKELMR6wACw

Pretty soon, kids these days won’t even know how to charge they phone, eat hot chip and lie.

Very interesting reading material for everyone who really wants to go old-skool regarding handwriting recognition. With some very good examples, seriously!

https://jackschaedler.github.io/handwriting-recognition/

Thanks for sharing. I’m in the process of going over a lot of old course notes and I was searching for some HTR solution to digitize all of them. From what I’ve found, the best way could be to fine-tune a kraken HTR model to my own handwriting. Base models, however, work really kinda bad, with lots of nonsense because my cursive is inconsistent and often warped. I’m gonna give this a good read!

Suggested next challenge: Run this on an Apple Newton, which badly needed it.

Aw come on now, how about an Eliza front end lol?!

And how does “how about an Eliza front end lol?!” make you feel?

In the 90s, we were doing semantic processing with a neural net doing a lot of the heavy lifting. XFCN/XCMDs all in Lightspeed Pascal along with plain C, and a HyperCard front end.

In the 1980s, when software stores were a thing, you could buy neural net software for the PC-XT.

Yes, there was a time of such things.

In AI, there were two big groups at the time: neural nets and expert systems.

Commercial software such as Question and Answer (Q&A), which ran on DOS on the IBM PC, was popular, for example.

https://en.wikipedia.org/wiki/Q%26A_(Symantec)

They would combine Eliza with a database, essentially.

At the prompt, you could ask things such as, say, how many women in a state have a driver’s license, and then the database would show the matching results.

Real neural nets were being worked on in the 1980s, too.

Advanced computers such as the Macintosh, the Atari ST or some graphics workstations were especially usable for this research, I think.

There are some videos on YT, I think.