The basic concept of human intelligence entails self-awareness alongside the ability to reason and apply logic to one’s actions and daily life. Despite the very fuzzy definition of ‘human intelligence’, and despite many aspects of said human intelligence (HI) also being observed among other animals, like crows and orcas, humans over the ages have always known that their brains are more special than those of other animals.

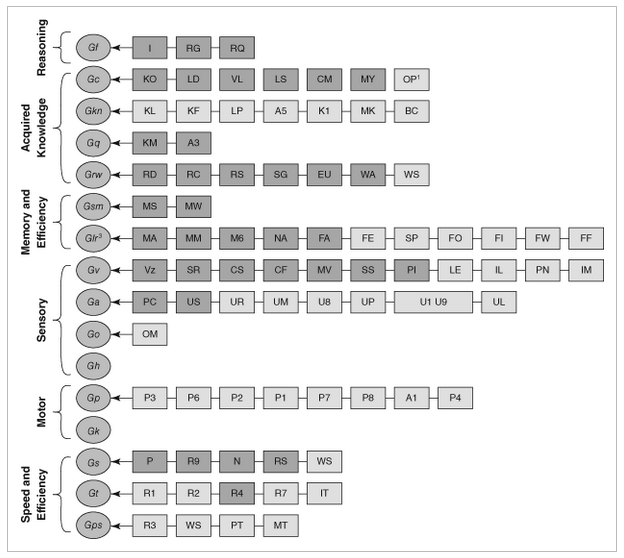

Currently the Cattell-Horn-Carroll (CHC) theory of intelligence is the most widely accepted model, defining distinct types of abilities that range from memory and processing speed to reasoning ability. While admittedly not perfect, it gives us a baseline to work with when we think of the term ‘intelligence’, whether biological or artificial.

This raises the question of how, in the context of artificial intelligence (AI), the CHC model translates to the technologies which we see in use today. When can we expect to subject an artificial intelligence entity to an IQ test and have it handily outperform a human on all metrics?

Types Of Intelligence

While the basic CHC model contains ten items, the full model is even more expansive, as can be seen in the graphic below. Most important are the overarching categories and the reasoning for the individual items in them, as detailed in the 2014 paper by Flanagan and McGrew. Of these, reasoning (Gf, for fluid intelligence), acquired knowledge and memory (long and short term) are arguably the most relevant when it comes to ‘general intelligence’.

Fluid intelligence (Gf), or reasoning, entails the ability to discover the nature of the problem or construction, to use a provided context to fill in the subsequent steps, and to handle abstract concepts like mathematics. Crystallized intelligence (Gc) can be condensed to ‘basic skills’ and general knowledge, including the ability to communicate with others using a natural language.

The basic memory abilities pertain to short-term (Gsm) and long-term recall (Glr) abilities, in particular attention span, working memory and the ability to recall long-term memories and associations within these memories.

Beyond these basic types of intelligence and abilities we can see that many more are defined, but most of them expand on the basic four: visual processing (Gv), various visual tasks, speed of memory operations, reaction time, reading and writing skills and various domain-specific knowledge abilities. Thus it makes sense to initially limit evaluating both HI and AI within this constrained framework.

Are Humans Intelligent?

It’s generally considered a foregone conclusion that because humans as a species possess intelligence, ergo every human being possesses HI. However, within the CHC model there is a lot of wriggle room to tone down this simplification. A big part of IQ tests is to test these specific forms of intelligence and skills, after all, creating a mosaic that’s then boringly reduced to a much less meaningful number.

The main discovery over the past decades is that the human brain is far less exceptional than we had assumed. For example crows and their fellow corvids easily keep up with humans in a range of skills and abilities. As far as fluid intelligence is concerned, they clearly display inductive and sequential reasoning, as they can solve puzzles and create tools on the spot. Similarly, corvids regularly display the ability to count and estimate volumes, demonstrating quantitative reasoning. They have regularly demonstrated understanding water volume, density of objects and the relation between these.

In Japanese parks, crows have been spotted manipulating the public faucets for drinking and bathing, adjusting the flow to either a trickle or a strong flow depending on what they want. Corvids score high on the Gf part of the CHC model, though it should be said that the Japanese crow in the article did not turn the faucet back off again, which might just be because they do not care if it keeps running.

When it comes to crystallized intelligence (Gc) and the memory-related Gsm and Glr abilities, corvids score pretty high as well. They have been reported to remember human faces, to learn from other crows by observing them, and are excellent at mimicking the sounds that other birds make. There is evidence that corvids and other avian dinosaur species (‘birds’) are capable of learning to understand human language, and even communicating with humans using these learned words.

The key here is whether the animal understands the meaning of the vocalization and what vocalizing it is meant to achieve when interacting with a human. Both parrots and crows show signs of being able to learn significant vocabularies of hundreds of words and conceivably a basic understanding of their meaning, or at least what they achieve when uttered, especially when it comes to food.

Whether non-human animals are capable of complex human speech remains a highly controversial topic, of course, though we are breathlessly awaiting the day that the first crow looks up at a human and tells the hairless monkey what they really think of them and their species as a whole.

The Bears

Meanwhile there’s a veritable war of intellects going on in US National Parks between humans and bears, involving keeping the latter out of food lockers and trash bins while the humans begin to struggle the moment the bear-proof mechanism requires more than two hand motions. This sometimes escalates to the point where bears are culled when they defeat mechanisms using brute force.

Over the decades bears have learned that human food is easier to obtain and fills much better than all-natural food sources, yet humans are no longer willing to share. The result is an arms race where bears are more than happy to use any means necessary to obtain tasty food. Ergo we can put the Gf, Gc and memory-related scores for bears also at a level that suggests highly capable intellects, with a clear ability to learn, remember, and defeat obstacles through intellect. Sadly, the bear body doesn’t lend itself well to creating and using tools like a corvid can.

Despite the flaws of the CHC model and the weaknesses inherent in the associated IQ test scores, it does provide some rough idea of how these assessed capabilities are distributed across a population, leading to a distinct Bell curve for IQ scores among humans and conceivably for other species if we could test them. Effectively this means that there is likely significant overlap between the less intelligent humans and smarter non-human animals.
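To make the bell curve a bit more concrete, here is a minimal sketch of how IQ scores spread across a population. It assumes the common norming convention of a mean of 100 and a standard deviation of 15, which the text above does not state and which varies between tests. The function names are purely illustrative. It covers only human scores, as no comparable scale exists for other species.

```python
from statistics import NormalDist

# Assumption: IQ normed to mean 100, standard deviation 15.
# This is a common convention, not something taken from the article.
IQ_MEAN = 100.0
IQ_SD = 15.0
iq_scores = NormalDist(mu=IQ_MEAN, sigma=IQ_SD)


def share_below(score):
    """Fraction of the population expected to score below `score`."""
    return iq_scores.cdf(score)


def share_between(low, high):
    """Fraction of the population expected to score between `low` and `high`."""
    return iq_scores.cdf(high) - iq_scores.cdf(low)


for score in (70, 85, 100, 115, 130):
    print(f"IQ below {score}: {share_below(score):.1%}")
```

Under these assumptions roughly two thirds of people land within one standard deviation of the mean, with thin tails on either side. The lower tail is where any overlap with the smarter non-human animals would have to be found.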

Although H. sapiens is undeniably an intelligent species, the reality is that it wasn’t some gods-gifted power, but rather an evolutionary quirk that it shares with many other lifeforms. This does however make it infinitely more likely that we can replicate it with a machine and/or computer system.

Making Machines Intelligent

The conclusion we have thus reached after assessing HI is that if we want to make machines intelligent, they need to acquire at least the Gf, Gc, Gsm and Glr capabilities, and at a level that puts them above that of a human toddler, or a raven if you wish.

Exactly how to do this has been the subject of much research and study over the past millennia, with automatons (‘robots’) being one way to pour human intellect into a form that alleviates manual labor. Of course, this is effectively merely on par with creating tools, not an independent form of intelligence. For that we need to make machines capable of learning.

So far this has proved very difficult. What we are capable of so far is to condense existing knowledge that has been annotated by humans into a statistical model, with large language models (LLMs) as the pinnacle of the current AI hype bubble. These are effectively massively scaled up language models following the same basic architecture as those that hobbyists were playing with back in the 1980s on their home computers.

With that knowledge in mind, it’s not so surprising that LLMs do not even really register on the CHC model. In terms of Gf there’s not even a blip of reasoning, especially not inductively, but then you would not expect this from a statistical model.

As far as Gc is concerned, here the fundamental flaw of a statistical model is what it does not know. It cannot know what it doesn’t know, nor does it understand anything about what is stored in the weights of the statistical model. This is because it’s a statistical model that’s just as fixed in its functioning as an industrial robot. Chalk up another hard fail here.

Although the context window of LLMs can be considered to be some kind of short-term memory, it is very limited in its functionality. Immediate recall of a series of elements may work depending on the front-end, but cognitive operations invariably fail, even very basic ones such as adding two numbers. This makes Gsm iffy at best, and more realistically a complete fail.

Finally, Glr should be a lot easier, as LLMs are statistical models that can compress immense amounts of data for easy recall. But this associative memory is an artefact of human annotation of training data, and is fixed at the time of training the model. After that, it does not remember outside of its context window, and its ability to associate text is limited to the previous statistical analysis of which words are most likely to occur in a sequence. This fact alone makes the entire Glr ability set a complete fail as well.
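The idea of a model that only knows ‘which words are most likely to occur in a sequence’, and whose knowledge is frozen at training time, can be illustrated with a toy bigram model of the kind a hobbyist could run on a home computer. This is a deliberately tiny sketch and not how a modern LLM is implemented, since those use neural networks rather than lookup tables. The corpus and all function names are made up for the example.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def most_likely_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(followers, start, length, seed=0):
    """Sample up to `length` words, weighting each step by the observed counts."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        counts = followers.get(out[-1])
        if not counts:
            break  # nothing in the training data follows this word
        words, weights = zip(*counts.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)


# Hypothetical training text, purely for illustration.
corpus = "the crow opens the faucet and the crow drinks the water"
model = train_bigrams(corpus)

print(most_likely_next(model, "the"))   # most frequent follower in the corpus
print(most_likely_next(model, "bear"))  # never seen during training
print(generate(model, "the", 6))
```

Once `train_bigrams` has run, the table never changes: a word that was absent from the training text simply has no entry, and nothing the model is asked afterwards gets added to it.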

Piecemeal Abilities

Although an LLM is not intelligent by any measure and has no capacity to ever achieve intelligence, as a tool it’s still exceedingly useful. Technologies such as artificial neurons and large language models have enabled feats such as machine vision that can identify objects in a scene with an accuracy depending on the training data, and by training an LLM on very specific data sets the resulting model can be a helpful statistical tool, as it’s a statistical model.

These are all small fragments of what an intelligent creature is capable of, condensed into tool form. Much like hand tools, computers and robots, these are all tools that we humans have crafted to make certain tasks easier or possible. Like a corvid bending some wire into shape to open a lock or timing the dropping of nuts with a traffic light to safely scoop up fresh car-crushed nuts, the only intelligence so far is still found in our biological brains.

All of which may change as soon as we figure out a way to replicate abstract aspects such as reasoning and understanding, but that’s still a whole kettle of theoretical fish at this point in time, and the subject of future articles.

Great article. Thanks for posting.

Yeah. Well written, accurate, funny, and overall entertaining as well as informative. I agree.

Maybe this article was written by an LLM. It’s a prime example of the continuum fallacy… “knowing words is what humans do, so if birds can know words they must be as intelligent as humans.” And the CHC model is just silly – throwing everything at the wall so they can cover all the bases. Gf leads to all the others. Can’t remember everything? Write it down. Want to do something faster? Think about how to achieve it. Result: computers and spaceships.

Humans are orders of magnitude more intelligent than birds, full stop. And orders of magnitude more intelligent than probabilistic dice throwers.

Intelligent enough to write about our intelligence.

https://www.amazon.com/Intelligence-Understanding-Creation-Intelligent-Machines-ebook/dp/B003J4VE5Y/

There are pretty good methods for determining the relative intelligence of human beings, but we don’t have scales that allow us to say “Joe is twice as smart as Harry” because we can’t identify what “twice as smart” means. Put another way, is IQ a linear, logarithmic, or exponential function? Or square? Or square root?

“Orders” is plural, so “orders of magnitude” means a factor of at least one hundred. I don’t think that what it means to be “at least 100 times more intelligent than a bird” can be defined at this time in a manner that most experts in intelligence would agree on.

On a trip to the Zoo there was a cockatiel in an enclosure with a very nibbled sign saying “I have bitten N fingers”, demonstrating that there’s at least N humans who are less intelligent than a bird.

We’ll always be able to define intelligence in a way that excludes everything but some humans.

Meanwhile they’ll be taking our jobs!

I’d draw a comparison to the first engine, Hero of Alexandria’s 1st-century AD aeolipile. It took until Thomas Newcomen in the 18th century AD to make a useful engine.

In all that time you might look at engines and say well it spins but it doesn’t do work in the way animals and humans can.

The only difference is it took 1800 years to make a useful engine that could replace animals. LLMs are doing it in under 8 years…

Maybe it didn’t. They had other engines too, like the automatic temple doors which used steam pressure to move water from one container to the other, which would get heavier and pull on a rope that would open the doors. When the heat was removed, the steam condensed and drew the water back, which would perform the opposite action by a counterweight. Not much of a leap from that concept to the Newcomen engine. It’s the same principle, the latter being just a more elaborate version.

Maybe they knew how to make it work, but it took too many humans to gather the firewood to run the engine, so it never made any sense as a practical tool for industry. More labor was spent than was gained. Thomas Newcomen had the advantage of the widespread mining of coal, and the engine itself was developed to solve the problem of pumping water out of coal mines, so fuel was plenty and using it for running the engine made more fuel available as a consequence – something which was not the case for the ancients.

Moral of the story: an invention being useful does not depend on the invention itself, or the inventor, but on the circumstances of its application.

Really interesting point of view, thanks.

We are truly in a bizarre era. I do think GPT-3 was a truly epoch-defining invention (and discovery). And it was largely given away for free.

Interesting to think that perhaps you did not have the resources to make an engine make sense in the past.

But to be handed a free invention is quite bizarre.

Why? Did someone copyright the wheel?

With the limited metallurgy of the ancients, they knew that putting a stopper on a boiling steam vessel would cause it to come apart at the seams, so they were limited to using steam at close to atmospheric pressures or below. That means the maximum pressure differential they could manage was about 1 bar. Even James Watt was against using high pressure steam out of safety concerns for exploding boilers, and tried to optimize the Newcomen engine instead.

Watt quadrupled the efficiency of the atmospheric engine, but it required new machining techniques and alloys that were only recently invented – and it was still just 10%, so you can imagine how much firewood the ancients would have had to burn to run their steam engines.

Using positive pressure instead of a condensing vacuum would improve the pressure ratio of the engine and make it much more efficient and powerful for a smaller size. This is what other inventors of the time were tinkering with, in no small part to get around Watt’s and Newcomen’s patents on the atmospheric engine, and Watt then adopted it back into his designs once other people showed that it could work.

Moral of the story: famous inventors often get their names in history books by improving what is already known. The true inventors don’t usually get named, while their inventions get picked up by famous people like Watt, or Edison, Steve Jobs or Elon Musk, who have the money, influence, and armies of patent lawyers to turn the inventions into businesses.

Water wheels are engines; they’ve been used to mill grain since the 4th century B.C.

There were some great old anti-car cartoons from when cars were first invented demonstrating all the ways a horse was better. I must admit, a horse that knows its way home from the pub does sound mighty useful but the care & feeding is a bit onerous.

Not to mention the cleanup, which could count as part of the care.

LLMs have zero intelligence. They can’t figure things out. They only “know” something when they’ve read about 6 million posts or articles about it on Facebook, Xitter, Instagram, reddit, substack, Experts Exchange, blogs, publications, databases and repositories parsed without consent, etc etc.

Also important to understand that crows aren’t committing copyright infringement by existing. If a person creates a work based on analysing and examining someone else’s work, the original author has the right to determine whether that new work is allowed to be published and is owed a royalty if they agree to the publishing.

Even if you post something publicly, you’re only granting the right to read or view your work, not let it be analysed and used to generate new content.

Haven’t I just done that? Analyzed your post and generated new content? Why is it OK for me, but not LLMs?

Yes and he would like royalties in perpetuity!

It is quite silly. Imagine if whoever used the I–V–vi–IV chord progression for the first time prevented anyone from ever using it again without royalties!

If artists and recording labels had their way we wouldn’t have been able to rip our CDs to listen to them on our iPods (they really did keep this illegal in many jurisdictions for shockingly long).

It’s obviously different. You’re just reading it, an LLM is analysing it and adding it to a dataset permanently. An author deserves compensation when their works are used in the creation of other works, even if it’s just in part, like the structure of the words.

I didn’t just read it. I analyzed it for meaning and content. I added it to whatever dataset is in my head. I used it in the creation of other works, my reply.

I would suggest that everything I write, speak, or think is derived from other works. My knowledge came from somewhere, right?

The irony is that if we spend six months coding something, people want it to be “open sourced”, and yet if I spend the six months writing a novel or a few catchy tunes, people want to own all rights to it for decades.

That’s not how fair use works at all. Criticism and analysis are explicitly covered under fair use. Otherwise, the makers of shitty movies and books would be able to sue critics for publishing reviews.

Of course, all of the various objections to LLMs on copyright grounds are pretty incoherent from a legal standpoint.

There’s an artist called “Akufen” who used to make music out of 3-second clips off the public broadcast FM radio. Fair use.

The new song is now copyrighted, so taking more than 3 seconds out of it is illegal.

https://www.youtube.com/watch?v=zfV8M91A5Rk

The infringement in question is due to the companies taking a shortcut, not in the generative process itself.

Try reading this again with ‘Humans’ in place of ‘LLMs’

Doesn’t work. Humans figure stuff out. Like, for example, LLMs.

That and hundreds of thousands of hours learning to solve coding problems by practical application with feedback on the accuracy and quality of their results.

Programming is a finite universe constructed from well-defined languages and symbols for which there are very specific rulesets and tons of worked examples. What useful programming language, library or extension doesn’t come with canonical examples?

Programming is well-suited to a LLM-type approach. The real world is messier.

What universe are you from? In this universe, programs have to address poorly-described, incomplete, and intermittently changing requirements. Understanding those requirements depends on … understanding. Not just Grand Theft Autocorrect.

It seems obvious to me that it’s due to a lack of arbitration by reality. Animals (including us) know success or failure as a mechanical fact. The food made it to our mouth, or it did not. We stopped feeling hunger, or we starved. It’s the basis for the concepts of “truth” and “reality.” The core of self-awareness in our experience is having a biological body. Without these early experiences with necessary goals, we’d appear to be “just a pattern-matching network”, just like ChatGPT does.

So the hard part is to make a pattern-matching network that doesn’t differentiate the training and performing roles, that does both simultaneously. Then it’s a simple matter to just train it by making it perform with a robot body, and then it will be as smart as a crow in no time. Of course, the closer it gets to humanity, the more human failings it will pick up and the more computer strengths it will shed.

It is sometimes said that a baby first cries out of reflex, the second time from realizing that it exists, and the third time from the realization that it can do nothing about it.

An excellent example of human intelligence’s greatest strength and weakness – the ability to simplify.

Yes, the current ‘AI’ isn’t. It’s an LLM, and sometimes not even that: the Google ‘AI’ now used for search seems to just go to the most popular page and copy the text for its answer. And because Google has the page stats….

This contrasts with the actual beginnings of AI in some of the image/video processing, as those may include some measure of ‘understanding’ to generate a result.

We need to remember that “intelligence” is an entirely human-derived category. If crows (or dolphins) have derived similar categories, we have no way of knowing.

“A person is said to be intelligent if that person can quickly recognize the same patterns, and categorize them the same way, as the person evaluating the intelligence.”

From: A Few Short Lectures on the Brain: Sublecture Eight A, Intelligence

Putting Intelligence and LLMs into the same sentence is just wrong. There is no basis for comparison in the first place. There is a segment of the population that wants to believe in AI (as depicted in the movies), another segment that wants to exploit it for monetary reasons (the big companies hit on a good marketing term, ‘AI’, for their LLMs), and the rest of us have to ‘live with it’. LLMs used as a tool for appropriate activities can be useful. But they aren’t Cylons, as some of us think LLMs are.

“Putting Intelligence and LLMs into the same sentence is just wrong.” There’s something fishy about that sentence.

It reminds me of a paradox Arthur C Clarke gives in The Fountains of Paradise

Sage: ALL SENTENCES CONTAINING THE WORD “GOD” ARE FALSE.

Disciple: The sentence I am speaking contains the word “God”. I fail to see how such a simple statement can be false.

Sage: ONLY SENTENCES THAT DO NOT CONTAIN THE WORD “GOD” CAN BE TRUE.

Disciple: If this sentence applies to itself then it must be false –

(Sage breaks his begging bowl over Disciple’s head)

Poor programming from the future:

Everything Harry tells you is a lie. Remember that.

Harry: Listen to this carefully. I am lying.

Android: But if everything you say is a lie, then you are telling the truth, but you cannot tell the truth because everything you say is a lie. You lie, you tell the truth… Illogical. Illogical. Please explain. You are human. Only humans can explain their behavior. Please explain.

Sorry, I am not programmed to respond in that area.

[Smoke rises from android’s shirt collar]

There is a problem with the way people discuss issues surrounding AI in that there are those trying to warn others about things that will occur as time goes on, while others read the warnings as if they are in reference to the present.

Another issue is that there are more people who have never created something worthwhile, so having AI steal from those that have so that they can create a cheaper version of it is perfectly justifiable.

All it takes for AI to take your job is to complete the task at a lower cost. Quality is always second to profits.

There are also those that underestimate scale. To think that the whole of Azure can’t outthink you is like verifying the existence of something based on Google search results.

My favourite quote of recent times is from Cory Doctorow;

“It’s like breeding faster horses and hoping one of them turns into a train”

There’s some useful stuff being done with ML/LLMs and no doubt some things are getting better, perhaps even good, but none of it is intelligence and none of it feels any closer to intelligence than the examples in that C64 book likely are.

“[For LLMs] In terms of Gf [Fluid reasoning, ie solving puzzles] there’s not even a blip of reasoning, especially not inductively, ” ….For that to be true, you would then need to explain how they can solve novel logical problems, specifically those requiring inductive logic.

All this just to make up some lies?

“it should be said that the Japanese crow in the article did not turn the faucet back off again”

Many humans seem incapable of turning lights off when they leave a room, no matter how many times you tell them.

There’s no immediate penalty for not turning it off. If it were completely necessary for survival and it was lost due to not being turned off, eventually they would learn after a long, exasperating process, or die out. Humans have a slight shortcut via communicated lessons not involving demonstration.