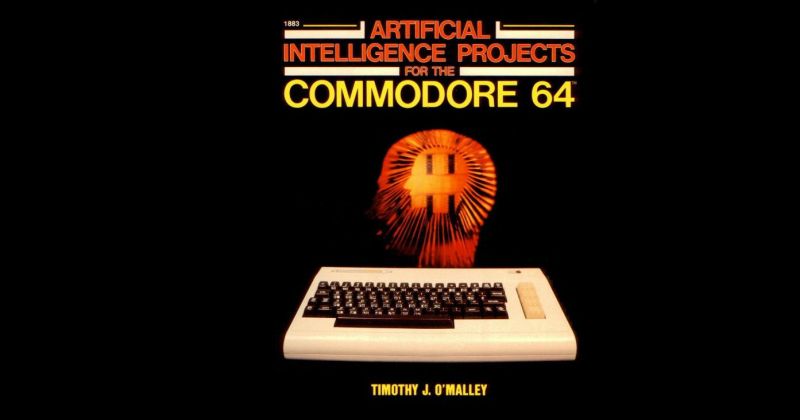

Artificial intelligence has been around for a long time, and [Timothy J. O’Malley]’s 1985 book on AI projects for the Commodore 64 is one example of this. With AI defined as the theory and development of systems that can perform tasks that normally require human intelligence (e.g. visual perception, speech recognition, decision-making), this book is a good introduction to the many ways that computer systems have for decades been able to learn, make decisions and in general become more human-like, even if there’s no electronic personality behind the actions.

In the book’s first chapter, [Timothy] isn’t afraid to toss in some opinions about the true nature of intelligence and thinking. Starting with the concept that intelligence is based around storing information and being able to derive meaning from connections between stored pieces of information, the idea of a basic AI as one would use in a game for the computer opponent arises. A number of ways of implementing such an AI are explored in the first and subsequent chapters, using Towers of Hanoi, chess, Nim and other games.
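The kind of computer opponent described above can be surprisingly small. As a rough illustration, here is a minimal Python sketch of a Nim player that uses the well-known nim-sum (XOR) strategy for normal play, where whoever takes the last object wins. It is not taken from the book, whose listings target the Commodore 64, and the function name `best_nim_move` is made up for this example.

```python
from functools import reduce
from operator import xor


def best_nim_move(heaps):
    """Return (heap_index, new_heap_size) for normal-play Nim.

    Hypothetical helper for illustration only: it applies the nim-sum
    (XOR) rule, assuming the player who takes the last object wins.
    """
    if not any(heaps):
        raise ValueError("no legal move: all heaps are empty")

    nim_sum = reduce(xor, heaps, 0)
    if nim_sum == 0:
        # Losing position against perfect play: stall by taking one
        # object from the largest heap.
        index = max(range(len(heaps)), key=lambda i: heaps[i])
        return index, heaps[index] - 1

    for index, size in enumerate(heaps):
        target = size ^ nim_sum
        if target < size:
            # Shrinking this heap to `target` leaves a nim-sum of zero.
            return index, target
    # Not reached: a non-zero nim-sum always has a reducing move.
```

With heaps of 3, 4 and 5 objects, `best_nim_move([3, 4, 5])` returns `(0, 1)`: reduce the first heap to a single object, which leaves the opponent in a position with a nim-sum of zero.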

After this we look at natural language processing – referencing ELIZA as an example – followed by heuristics, pattern recognition and AI for robotics. Although much of this may seem outdated in this modern age of LLMs and neural networks, it’s important to realize that much of what we consider ‘bleeding edge’ today has its roots in AI research performed in the 1950s and 1960s. As [Timothy] rightfully states in the final chapter, there is no real limit to how far you can push this type of AI as long as you have more hardware and storage to throw at the problem. This is how we ended up with datacenters full of GPU-equipped systems churning through vector space calculations for the sake of today’s LLM & diffusion model take on ‘AI’.

Using a Commodore 64 to demonstrate the (lack of) validity of claims is not a new idea either: recently a group of researchers used one of these breadbin marvels to run an Ising model with a tensor network, outperforming IBM’s quantum processor. As they say, just because it’s new and shiny doesn’t necessarily mean that it is actually better.

yawn…. there were earlier books on AI for the Amstrad CPC…from 1984…

“On the Road to Artificial Intelligence : Amstrad CPC 464”..

on writing AI programs in BASIC

anyone who reads these old books will realise the AI hype that is around today is just that ..hype.. there has been ZERO progress in the field in over 40 years!… LLMs ARE NOT INTELLIGENT in the slightest….

There had been Questions and Answers, Q&A, for IBM PC compatibles, way back when that C64 book came out.

It was a database system you could talk to in natural language.

In the form of “how many girls under age 18 have a driver’s license ?”

A German (!) version (F&A) and a French version did exist, too, I think. Maybe even more language versions.

https://en.wikipedia.org/wiki/Q%26A_(Symantec)

Btw, back then there were two basic types of AI: expert systems and neural nets.

The former were used in databases and were pre-programmed, highly specialized for a certain application.

Neural nets were about self-learning algorithms. In simple words, I mean.

Someone could write entire books about these things while barely scratching the surface.

To say there has been zero progress in half a century is stupid and completely wrong, and of course by “intelligent” you mean “sentient.” Which no, of course they aren’t. AGI is kind of a stupid goal, it’s just a thing from science fiction.

One of the big issues since forever ago is that the definition of AI is vague and shifts as time goes by and things get more advanced. In 1998, AI was a simple pathfinding algorithm that helped a bot in Quake III find its way around a 3d map. Anything that becomes “solved” in AI is thereafter simply called “software” because it has been disenchanted.
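For a sense of how small such a "solved" piece of AI can be, here is a minimal pathfinding sketch in Python: a breadth-first search over a 2D grid. It is only a stand-in for the idea, not how Quake III's bots actually navigate, and the grid format (strings where `#` marks a wall) and the name `shortest_path` are assumptions made for this example.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a grid of strings; '#' marks a wall.

    Returns the list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    queue = deque([start])
    came_from = {start: None}  # also serves as the visited set

    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]

        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col),
                    (row, col + 1), (row, col - 1)):
            r, c = nxt
            in_bounds = 0 <= r < len(grid) and 0 <= c < len(grid[r])
            if in_bounds and grid[r][c] != "#" and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None
```

Because breadth-first search expands cells in order of distance, the first time it reaches the goal it has found a shortest route, which is exactly the sort of fixed, fully understood procedure that stops being called "AI" once it works.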

LLMs will probably make the world more annoying and fake (like every piece of IT now) but it is still an advancement. No it is not a conscious ghost in the machine, that’s nonsense. We don’t even know why we are conscious. Honestly we don’t know what it is, so we don’t even know what questions to ask or where to start seriously searching. And we are metaphysically incapable of advancing from that spot at this moment in culture anyway

There has been progress in the amount of memory and compute power you can apply to the problem, and the kind of hardware architectures that are better suited for the workload, but the fundamental methods are still the same: statistical inference over massive amounts of data, or randomly evolving a cellular network until it passes some arbitrary test of competence. Then you manually write a bunch of IF-THEN rules to fix the problem edge cases of those methods and pretend it’s AI instead of a Mechanical Turk.

The kind of algorithms where someone figures out a clever way to solve a limited problem, like path finding through a maze, were never considered AI except in marketing buzzwords. The question about AI is more generally, “what is intelligent?” rather than how intelligent it is. The algorithm is a fixed program that cannot behave in any other way. Even if you program it to account for new information, or to re-program itself, how it does that is dependent on your initial programming. The whole AI research field is kinda stuck on that point. They can’t make machines “think” in any sense of the word because all the thinking is done by the people who program them, and the machine just repeats the motions in a blind and dumb fashion.

That’s why they’ve been trying to delegate the programming part to randomly evolving neural networks and statistical inference machines employing Markov chains, so they could claim that the machine is intelligent because it’s “doing it by itself” – but for the neural network parts they haven’t figured out the architecture or the model of “neurons” that would behave the same as real brains, they haven’t solved the catastrophic forgetting issue that prevents the network from learning new information without destroying the old, and for the Markov chains and equivalents it’s just hiding the programming behind the curtain of big data and throwing dice to mix things up a little and mimic the appearance of thinking. The end result either way is still a fixed dumb algorithm that has nothing more to do with intelligence than the bot player in Quake 2.

AI IS a marketing buzzword. What is your working definition of AI? Is it commonplace? Is a mechanical Turk not “real” AI? According to whom?

You seem to have a requirement that the AI acts like a real brain, which is a pretty extreme position

At the very least, intelligence has the ability to adapt, plan and learn. It doesn’t have to be “AGI” or act exactly like real brains, although presently it seems that only real brains have all those abilities. Some brains, not all of them.

For example, evolution itself has the ability to adapt and learn, but it doesn’t plan, so in my book it doesn’t count as intelligent. Computer algorithms may have the ability to plan and/or learn in some senses, but they cannot adapt because the algorithm is fixed. Even the training part works much like evolution (random mutation, elimination of the weakest), so there’s no planning involved – no intelligence.

The catastrophic forgetting issue makes it impossible to do on-line learning – for both the statistical inference and the neural network AIs, because incorporating new skills requires you to start the training again from zero. Adding new information after the fact makes them perform worse on the skills they already learned, so adaptation is not feasible.

You don’t forget how to walk when you learn how to chew bubble gum.
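The forgetting effect described above can be reproduced at toy scale. The following is a minimal Python sketch, not a claim about how any real network is trained: a two-weight linear model learns task A by gradient descent and is then trained on task B only. The two tasks, the learning rate and all names are assumptions made for this example. A single set of weights, (2, -2), would fit both tasks, so the model has the capacity for both; sequential training simply does not find it.

```python
def predict(weights, x):
    """Linear model: dot product of weights and inputs."""
    return sum(w * xi for w, xi in zip(weights, x))


def squared_error(weights, x, target):
    return (predict(weights, x) - target) ** 2


def train(weights, x, target, steps=200, lr=0.1):
    """Plain gradient descent on the squared error of one example."""
    weights = list(weights)
    for _ in range(steps):
        error = predict(weights, x) - target
        weights = [w - lr * 2 * error * xi for w, xi in zip(weights, x)]
    return weights


# Two made-up tasks that share the same two weights.
task_a = ((1.0, 0.5), 1.0)
task_b = ((0.5, 1.0), -1.0)

weights = train([0.0, 0.0], *task_a)
error_a_before = squared_error(weights, *task_a)  # essentially zero

weights = train(weights, *task_b)  # no task A data this time
error_a_after = squared_error(weights, *task_a)   # about 2.07
error_b_after = squared_error(weights, *task_b)   # essentially zero

print(error_a_before, error_a_after, error_b_after)
```

Training on both examples together would avoid the problem here, which mirrors the point made above: the usual fix is to retrain with the old data included rather than to add the new skill afterwards.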

A mechanical turk is not “real AI” because it’s a party trick. It’s real intelligence pretending to be machine intelligence. Pay no attention to the man behind the curtain.

Yay, you go the bot player in Quake 2 !!

Even LLMs are based on statistics and Markov chains – they’re technically equivalent to the parody generators we had all the way back in 1972.

If those were named today, they would be called “small language models”.

No, Large Language Models (LLMs) are not Markov chains. While both can generate text sequences, LLMs are based on neural network architectures (typically transformers) that can maintain long-range dependencies and broader context. Unlike Markov chains, which only consider the immediate previous state(s) to determine the next state, LLMs can reference and synthesize information from their entire context window and their trained parameters to generate responses.

Then why didn’t they name them back then? Did they have any capabilities at all back then? No I didn’t think so

They were limited by the amount of source material they could handle given the computers back then. You could have a book or two, a few megabytes at best.

They were literally parroting the source material by picking random but plausible bits of it and re-combining them in sentences. Hence why they were called parody generators. They did produce grammatically correct outputs that followed the source material in content and style, but at some point they always turned to illogical nonsense – just like LLMs today.
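To make the comparison concrete, here is a minimal Python sketch of a word-level Markov chain text generator of the kind described above. It is a generic illustration rather than a reconstruction of any particular 1970s program, and the function names `build_chain` and `generate` are invented for this example.

```python
import random
from collections import defaultdict


def build_chain(text, order=1):
    """Map each run of `order` words to the words that followed it."""
    words = text.split()
    chain = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        chain[state].append(words[i + order])
    return chain


def generate(chain, length=20, seed=None):
    """Start from a random state and repeatedly pick a random follower."""
    rng = random.Random(seed)
    state = rng.choice(list(chain))
    output = list(state)
    for _ in range(length):
        followers = chain.get(state)
        if not followers:
            break  # dead end: this state only appeared at the very end
        nxt = rng.choice(followers)
        output.append(nxt)
        # The next state depends only on the last `order` words.
        state = state[1:] + (nxt,)
    return " ".join(output)


source = "the cat sat on the mat and the dog sat on the cat"
print(generate(build_chain(source), length=12, seed=1))
```

Every adjacent pair of words in the output also occurs in the source, which is why the result looks locally plausible, while nothing beyond the last `order` words influences what comes next.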

LLMs are essentially more complex parody generators under a new name. Their training material is simply half the internet, and whenever you ask a question they simply select some subset of that information according to the question and start putting random bits of it in a plausible order.

Perhaps the main difference between a parody generator and an LLM is that the parody generator does not take a “prompt”, it simply starts generating random stuff from a random seed.

The LLM is more like a game of mad-libs where you have a word or a sentence that sets the initial direction and starts evolving the sentences forwards out of that. Simply setting the random ramble in some direction keeps it in line for longer before it starts veering off into nonsense.

There’s always the process of elimination:

– It’s not “spirits and ghosts” as per religion

– It’s not deterministic algorithms as per classical AI

– It’s not randomly throwing dice (classical probability)

– It’s not randomly evolving a network that you then run as a deterministic algorithm

What else is there? Well, you can still rescue classical determinism by claiming that brains are intelligent because they’re in continuous interaction with the world and continuously evolving (not fixed and bounded algorithms), or you can find intelligence in non-classical probability theory. Either way, this is something we presently cannot do with computers and programs, either because we don’t have the machines to run the algorithms or we don’t know how to program it.

The question is what is it that makes you conscious, not what makes you intelligent. Fire is in continuous interaction with the world and continuously evolving, and the argument could be made that it displays simple “intelligence” of a sort (this is an illusion) but is it conscious? Totally different question, and this confusion of terms is one of the basic problems I bring up

This is the metaphysical inability I was mentioning. The problem is not that we don’t have big enough computers. We can’t prove that another person we are talking to is conscious, we can only assume as much, even though they are running the same “hardware” and presumably “software” (bad metaphors, pointing solely to our current technology). Even if we had a computer good enough to emulate a brain (we don’t know how one works, so we can’t really know how much compute is required to emulate. Is it just synapse count, or is there sub-neuron computation happening? Order of magnitude difference)… We still wouldn’t be able to assume that emulated brain is conscious. We don’t know what it is, we cannot measure it, and materialism seems thus far insufficient to even ask the question.

Since we don’t know the question, yes I agree we don’t know how to program it.

We basically don’t have the confidence level yet to make this claim authoritatively, and while I personally don’t think it is either, this statement is something you’ve made not from evidence of any kind but simply from looking down on that kind of thinking.

We don’t know it isn’t any of these either. How are you running this process of elimination if you don’t even know how to measure for what you are trying to find?

No it isn’t. Consciousness is a question of self-reference. There’s no problem in being intelligent without being conscious, since you don’t necessarily need to count any information about yourself into it. You’re begging the question that intelligence implies sentience, by assuming that self-reference is a necessary part of intelligence.

Yes we do – from the point that no religion whatsoever has any solid proof, and we would have to accept a religion first before we should consider its claims about souls and spirits. It would be like saying “There’s a flea on the nose of the dog on the moon”, and then debating about the flea – but is there a dog on the moon?

We just eliminated those options by argument.

“… is kind of a stupid goal, it’s just a thing from science fiction.”

that is a sad way to constrain a brain. free yourself before it is too late.

Very pithy. I mean there are plenty of reasons to shoot for other practical applications for AI that don’t require full AGI/consciousness, and that it is very silly to aim for the holy grail at this stage of development. Why don’t you go “free yourself” buddy

Take a look at what Karl Friston and Verses AI are doing with active inference.

8-bit machines didn’t have 128GB of fast RAM to run a 405,000,000,000 parameter transformer LLM, so nobody made one.. Go figure..

Besides the hype mentioned, the bleeding edge prompts all get code and documented history and science wrong at an impractical rate.. Even doing Python, which they do best, the code is often broken on a syntax level..

In the very near future Facebook and OpenAI will hit a dead-end with transformer LLM and the general public will see for themselves.. ChatGPT 5 will not be that different from o1 and basically be the plateau..

Agreed, just more powerful machines performing more intricate tasks thrown at them by actually intelligent programmers.

Curious. Rang a bell, so I looked up on my bookshelf and saw my 1985 copy of “Exploring Artificial Intelligence on Your Apple II” by a Tim Hartnell (available at https://archive.org/details/exploringartific0000hart_n4d6 ), who also wrote “Exploring Artificial Intelligence on Your Commodore 64”.

I wonder what the begats are for these books that popped up simultaneously?

If my memory is correct, there is one for the Atari 8 bit as well. I think they were pretty much the same book, just tailored to the different platforms.

True to some extent, but there were different authors, different publishers, different countries. All popping up nearly simultaneously.

It is kind of funny thinking of what it would take to run an llm on a c64. Even on my i5 notebook with 16gigs it is like straining molasses through a coffee filter on a cold day. You could ask it what happens when we die and find out first hand long before you would get an answer.

The C64 always had been a toy computer, so it’s no wonder.

Computers like the Cray-2 and graphical Unix workstations did exist by the mid-to-late 80s, too.

The home computer world wasn’t by any means of importance in science.

Except for school labs, maybe. They had Apple IIs. In the 70s. ;)

In 1985, universities and research centers had computers more than 10 years ahead of what a private person had been used to.

The aforementioned Cray-2 was more powerful than a hundred Windows 95* PCs from the mid-90s.

https://en.wikipedia.org/wiki/Cray-2

(*Assuming a 486DX4/100 is rated at about 6 MFLOPS; the Cray-2 has 1900 MFLOPS)
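The footnote's arithmetic can be checked in a couple of lines. This is a minimal Python sketch that uses only the two MFLOPS figures quoted above; the variable names are just for illustration.

```python
# Figures as quoted in the comment above (both in MFLOPS).
cray2_mflops = 1900
pc_mflops = 6

# How many such PCs would it take to match one Cray-2 on paper?
equivalent_pcs = cray2_mflops / pc_mflops
print(f"{equivalent_pcs:.0f}")  # prints 317
```

On those assumed ratings the Cray-2 comes out at roughly 317 such PCs, so "more than a hundred" is on the conservative side.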

“As [Timothy] rightfully states in the final chapter, there is no real limit to how far you can push this type of AI…”

Conceptually…sure.

But LLMs have all but stalled out in 2024.

We made huge leaps of progress in the last half a decade, but have stalled out hard in the last 12-18 months.

Even if we ignore the fact that using an LLM is theft (should we really ignore it?) our LLM projects aren’t that much better now than they were in 2022, despite the truly staggering amount of hardware and electricity we have been throwing at them.

I’d say there is a pretty clear limit, and we are bumping up against it.

The current AI push seems more about people realizing a big chunk of the global economy is being propped up by it, rather than any real progress being made.

A lot of people are going to be hurt when this bubble bursts…

AI apocalypse hype was actually meant to be a fig leaf to cover up AI winter 2: electric boogaloo. Or are we on number 3 now? I forget

Let me just ask ChatGPT to summarise and tell us the answer….

Are you really qualified to judge that? You are comparing GPT-3.5 (2022) with recent models (3.5-Sonnet, o1)?

Years of hobby research on my own hardware. Lowly dual EPYC with several GPUs.

Then a year+ of graduate level research using “someone else’s servers”.

Then I got so sick of the tech bros and executives I abandoned it.

As I said. We made huge leaps between 2008 and 2021ish.

I haven’t seen much real progress in the last 2-3 years.

It’s all sunk costs.

No one wants to admit that it never really worked well for “real” work.

It’s all just toys.

Some of the toys are really neat (when they aren’t theft…) like the super resolution tools. But they are still just toys.

NVidia and the rest are desperately pumping out hardware with bigger and bigger power budgets, praying that ANYONE makes something real before the money runs out, or society decides it might not be okay to use terawatts of power to make new cat pictures, or awful customer service chat bots.

It’s like watching people smile as they try to sell you an apartment on an island that will start sinking any day now. If they just keep acting like everything is fine, maybe the clients won’t notice their feet getting wet.

I was the first one in Alaska selling the Apple II and Commodore PET computers. At a business show in 1978 I demonstrated the Apple II on which I installed some game programs from David Ahl’s book “BASIC Computer Games”. One of those was an Eliza-like game called “Doctor”. It was a big hit. When people started on that little chatbot they wouldn’t get off for long periods. Unfortunately, although many people wanted to buy Apple II computers, the distributor in Seattle would only send me two a month, when I could have sold two a day.

A sophisticated program with access to an enormous amount of data (a significant amount pirated) can simulate intellect but it still doesn’t think. Also, consider that we think that AI will present us with correct information. Intelligence does not require infallibility. If Einstein makes a mistake is he therefore unintelligent?

Using the term “AI” is a marketing goldmine right now. The fact that it doesn’t really exist is just bad for business.

There was also a book about Artificial Intelligence on the Atari ST in 1987:

L’intelligence artificielle sur ST (Atari ST.)

ISBN 9782868990990

What a fantastic rambling blurb of random opinion these comments are. :-)

I observe:

My car drove itself most (but not all the way home) today. I don’t have to go back far to a car that couldn’t do that.

A lot of the marketing content pushed at me is both AI generated and targeted. A long way from Mrs Marsh and her chalk sticks (this might be an Australian reference to an old TV ad)

An astounding proportion of “routine” graphic design seems to be AI generated. This is fairly new.

From this, and some other things, I infer progress in AI over recent times. Perhaps not the overnight revolution the share market pundits would have me believe. Nonetheless, AI is doing things now that it wasn’t doing when I was cutting demoscene assembler on my C64..

And yes, LLMs are an evolution of Markov chains, but they are not Markov chains and incremental improvement over time IS science.

Now this may well be a simple function of up scaling compute power. The stuff coming out of NVIDIA blows my comp-sci trained mind. But it’s still significant progress when measured in terms of social outcomes.

“My car drove itself most (but not all the way home) today. I don’t have to go back far to a car that couldn’t do that.”

Self-driving cars have existed since the 1950s (with experiments starting in the 1930s).

https://en.wikipedia.org/wiki/History_of_self-driving_cars

If you want some older AI-related literature, go to archive.org and search for “cybernetics”, “thinking machines”, “teachable machines”, “expert systems” and similar. Many concepts behind modern AI were actually conceived in the 1950s and 1960s.

Oh yes, basically everything we are doing today was already conceived by the brilliant people of that era. We have just been waiting for the hardware to catch up so we could actually try some of their wilder ideas.

Actually, the hardware had been there since at least the late 1970s to mid-80s.

The super computers like Cray had more than enough power.

Unfortunately, we often make the mistake of measuring the current state of technology by our own appliances at home.

That’s why I have a bit of a love-hate relationship with the CBM 64.

It had bright users helping each other, but they were too obsessed with the machine and thus biased.

They believed that it was cutting edge, which it never was.

It also depends on how you define cutting edge.

It’s not unheard of for an enthusiast to have a multi-PiB array at home.

100Gbit networking? 200Gbit? 400Gbit? All doable in a HomeLab.

You aren’t going to be installing 10 racks of H100s in your basement…

…but you can rent that rack by the hour today.

(It’s actually crazy to me that I can rent an hour of use on several dozen GPUs if I really need to run hashcat. And it isn’t even that expensive…)

With such easy access to tech that was literally state of the art 18-24 months ago, I’d say we are a LOT closer to cutting edge “at home” than a C64 user was in the 80s.

It’s a lot more like the aristocratic citizen scientists of centuries past.

“It also depends on how you define cutting edge.”

Maybe, yes. But the C64 never was. It was cheaply made and full of bugs.

Like almost everything made by Commodore, maybe.

It focused on mass production first and foremost.

Parts were recycled everywhere (the C64 is partly broken because the VIC-20 had been gutted for parts).

The business model was to sell as many computers as possible, for maximum profits.

Everything else was secondary. Including the well being of the users.

It seemingly was a really bad company, and it showed shortly before bankruptcy.

Nowadays, however, it would be desirable if the intellectual development of users had always kept pace with technological progress. ;)

I mean, you just have to look at the eloquence of then and now.

In the 18th and 19th centuries, people were very good at handling the subtleties of language.

In comparison, our way of expressing ourselves today seems downright neglected.

I think I have to correct myself a little. Not everything was bad at Commodore.

The Amiga development department, for example, criticized Commodore for a number of things. Or so I read.

I was told by a former East German that the GDR had used the term “Kybernetik” (cybernetics) for many computer related topics in school.

Makes sense in a country that has big, state-controlled companies called “Robotron” (not the video game).

Here in normal Germany, we rather used the term “Informatik” (informatics). And “EDV” for IT.

Speaking under correction here.

Cybernetics is now called mechatronics and control theory when it comes to machinery and computers.

“Kybernetik” covers a much wider field than “Informatik”; it’s more of a holistic approach. I’m lazy today, so I’ll just cite Wikipedia: “Cybernetics is the transdisciplinary study of circular processes such as feedback systems where outputs are also inputs. It is concerned with general principles that are relevant across multiple contexts, including in ecological, technological, biological, cognitive and social systems and also in practical activities such as designing, learning, and managing.”

And I thought I could avoid all that AI BS if I simply stick to my C64..

I mean it worked for “Blockchain Technology!!!” and “Cloud Computing!!!” .

? and i thought i could avoid all that ai bs if i simply stick to my c64..

can you elaborate on that ?

I wish I still had my VIC-20.

The difference between now and 1985 is anyone thinking a squishy statistical auto completion black box is any kind of intelligent algorithm.

I was there in 1985 and I wouldn’t have agreed then and I certainly don’t agree now.