Physics simulations based on classical mechanics are fairly easy to run on regular consumer hardware, with real-time approximations a common feature in video games. Moving to the quantum realm makes things more complex, though equilibrium many-body systems are still quite solvable. Where things get interesting is with nonequilibrium quantum systems.

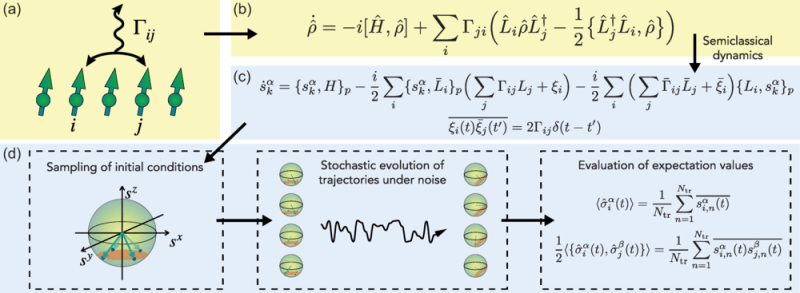

These open systems are subject to energy gains and losses that disrupt their equilibrium. The truncated Wigner approximation (TWA) is a semi-classical method used to solve these, but dissipative spin systems proved tricky. Now, however, [Hosseinabadi] et al. have put forward a TWA framework (PR article) for driven-dissipative many-body dynamics that works on consumer hardware.
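To give a feel for what a TWA calculation looks like in practice, here is a minimal Python sketch of the basic idea: sample initial conditions, evolve each sample with classical equations of motion, then average. This is not the framework from the paper. It assumes a single, closed (non-dissipative) spin-1/2 prepared along +x and precessing in a field along z, with the Hamiltonian H = (ω/2)σᶻ, and it uses the discrete sampling variant of the TWA. All function names and parameters are made up for illustration.

```python
import math
import random

def sample_initial_spin(rng):
    # Spin-1/2 prepared along +x: s_x is fixed at +1, while the two
    # orthogonal components are drawn as +/-1 with equal probability.
    return (1.0, rng.choice((-1.0, 1.0)), rng.choice((-1.0, 1.0)))

def rhs(s, omega):
    # Classical equations of motion for H = (omega / 2) * sigma_z:
    # ds/dt = omega * (z_hat x s)
    sx, sy, _ = s
    return (-omega * sy, omega * sx, 0.0)

def rk4_step(s, omega, dt):
    # One fourth-order Runge-Kutta step for a single trajectory.
    def shifted(base, k, h):
        return tuple(b + h * ki for b, ki in zip(base, k))
    k1 = rhs(s, omega)
    k2 = rhs(shifted(s, k1, 0.5 * dt), omega)
    k3 = rhs(shifted(s, k2, 0.5 * dt), omega)
    k4 = rhs(shifted(s, k3, dt), omega)
    return tuple(
        si + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for si, a, b, c, d in zip(s, k1, k2, k3, k4)
    )

def twa_sigma_x(omega, t_final, n_samples=4000, n_steps=50, seed=0):
    # Average s_x over many independently sampled classical trajectories.
    rng = random.Random(seed)
    dt = t_final / n_steps
    total = 0.0
    for _ in range(n_samples):
        s = sample_initial_spin(rng)
        for _ in range(n_steps):
            s = rk4_step(s, omega, dt)
        total += s[0]
    return total / n_samples

if __name__ == "__main__":
    omega, t = 1.0, 2.0
    print("TWA estimate:", twa_sigma_x(omega, t))
    print("exact value: ", math.cos(omega * t))
```

For this toy problem the classical equations are linear, so the averaged result should match the exact quantum answer cos(ωt) up to sampling noise, which shrinks roughly as one over the square root of the number of trajectories. Interacting and dissipative systems, which are the actual subject of the paper, are where the approximation stops being exact and the bookkeeping gets much harder.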

Naturally, even with such optimizations there is still the issue that the TWA is only an approximation. This raises questions such as how many interactions are required to get a sufficient level of accuracy.

Running these kinds of quantum physics simulations has often been claimed to be the ideal use of qubit-based quantum computers, but as has been demonstrated repeatedly, you can get by with a regular tensor network or even a Commodore 64 if you’re in a pinch.

I love physics, but I feel like this article could do with an implementation of said algorithms on said consumer hardware (and the write-up that goes along with it) to really make it stand out as a Hackaday piece, above a regular science write-up site à la IFLScience.

Okay, well, the research is mainly a framework view. The authors don’t explicitly write any implementation, but provide a consistent procedure with proofs and reasoning for their methodology. Overall, the algorithm or pseudocode, from a computer science perspective, is implied, which is not surprising given that the authors’ area of study is mostly physics/chemistry. That said, someone writing the code for this would make for an interesting article on how they handle the various intricacies of parallelism and coordination.

If I had a dollar for every time my professor told me to use a program to solve physics problems… well. I’d be broke. He hated computers!

Pine for the analog computer days.

I had the opposite problem. I had a meteorology professor who insisted on spending a semester teaching us how to convert Richardson’s Numerical Weather Prediction Method into something that a 386SX computer could handle (it was 1993). Not only did we have to regurgitate the proof as part of the written final, we also had to create a 48-hour prediction off of a two-layer dataset using BASIC, C, or Fortran.