[Maxzillian] sent in a pretty amazing project he’s been beta testing called ReconstructMe. Even though this project is just the result of software developers getting bored at their job, there’s a lot of potential in the 3D scanning abilities of ReconstructMe.

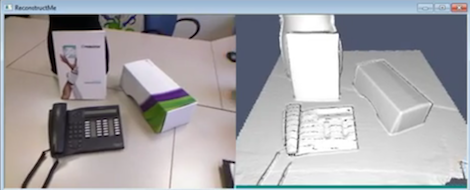

ReconstructMe is a software interface that lets anyone point a Kinect (or other 3D depth camera) at a scene and generate a 3D model on a computer as an .STL or .OBJ file. There are countless applications of this technology, such as scanning objects to duplicate with a 3D printer, or importing yourself into a video game.

There are a few downsides to ReconstructMe: the only 3D sensors supported are the Xbox 360 Kinect and the ASUS Xtion, and the Kinect for Windows isn’t supported yet. Right now, ReconstructMe is limited to scanning objects that fit into a one-meter cube and can only be run from the command line, but it looks like the ReconstructMe team is working on supporting larger scans.

While it’s not quite ready for prime time, ReconstructMe could serve as the basis for a few amazing 3D scanner builds. Check out the video demos after the break.

[youtube=http://www.youtube.com/watch?v=DHK6BLBJHU0&w=470]

[youtube=http://www.youtube.com/watch?fv=yC4wFvsznRg&w=470]

[youtube=http://www.youtube.com/watch?fv=ebnV7witwXg&w=470]

Anyone know if this can capture colour data? I don’t have a Kinect to try, but bloody hell, with .obj export this would be amazing for 3D scanning.

With the MS SDK, use nui.SkeletonEngine.DepthImageToSkeleton to get projected coordinates for each pixel, and use nui.NuiCamera.GetColorPixelCoordinatesFromDepthPixel to get the corresponding pixel in the color frame.
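For anyone not on the MS SDK, the depth-to-3D step is roughly a pinhole back-projection. Here is a minimal Python sketch of that idea, not the SDK's own implementation. The function name and the intrinsics (FX, FY, CX, CY) are assumptions for illustration: nominal values for a 640x480 depth image, not calibrated numbers.

```python
# Assumed nominal intrinsics for a 640x480 depth image (hypothetical values;
# calibrate your own sensor for real work).
FX = 580.0   # focal length along x, in pixels
FY = 580.0   # focal length along y, in pixels
CX = 319.5   # principal point x, in pixels
CY = 239.5   # principal point y, in pixels


def depth_pixel_to_point(u, v, depth_m, fx=FX, fy=FY, cx=CX, cy=CY):
    """Back-project pixel (u, v) with depth in meters to camera-space (x, y, z).

    Uses the plain pinhole model: the ray through the pixel is scaled so
    that its z component equals the measured depth.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # A pixel at the principal point lies on the optical axis.
    print(depth_pixel_to_point(319.5, 239.5, 2.0))
```

Matching each point to a color pixel is a separate step, because the depth and color cameras sit a few centimeters apart. That is the job the second SDK call handles.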

cont’d.

I have a .NET app that renders a point cloud in OpenGL and exports vertex and color data to a ply file. I’m currently working on a new meshing algorithm. If there is enough interest I could clean it up and release it. Windows only though.
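For reference, an ASCII PLY file with per-vertex color is simple enough to write by hand. This is a minimal Python sketch, not the .NET app described above. The function name write_ply and the argument layout are hypothetical; the header fields follow the common PLY convention of float x/y/z and uchar red/green/blue.

```python
def write_ply(path, points, colors):
    """Write an ASCII PLY point cloud.

    points: sequence of (x, y, z) floats
    colors: sequence of (r, g, b) ints in 0..255, one per point
    """
    if len(points) != len(colors):
        raise ValueError("points and colors must have the same length")

    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    with open(path, "w") as f:
        f.write("\n".join(header) + "\n")
        # One line per vertex: position followed by color.
        for (x, y, z), (r, g, b) in zip(points, colors):
            f.write(f"{x} {y} {z} {r} {g} {b}\n")
```

Calling write_ply("cloud.ply", [(0.0, 0.0, 1.0)], [(255, 0, 0)]) should give a one-point file that common mesh viewers can open. The binary PLY variants are more compact for large clouds, but ASCII is easier to inspect.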

w… wow

/me goes to download

Newegg is now selling the Asus Xtion Pro Live.

Can anyone chime in with details on what kind of accuracy and resolution could be expected with a Kinect (or Asus) based system like this? Looks as though the data generated is roughly a 5mm-8mm mesh resolution. It is tougher to state actual point accuracy, but I would hope for something around 2-4mm.

Is there a way to change mesh density/resolution with the Kinect output or is it always fixed? I downloaded the software and am definitely going to keep watch on this. I don’t own a Kinect but I might go buy one just to mess around with this type of thing.

I haven’t had much time to do very much testing, but the resolution is somewhere around 5mm (off the hip guess) or under. Keep in mind that you can make multiple passes (although the longer the scan, the greater the chance for a scanning error) and change the orientation of the sensor to greatly enhance the accuracy of the scan. When I get the time, I plan to scan some even structures to make accuracy comparisons with.

Something else of interest is that I’ve seen lenses sold for the Kinect that shorten its range. This should effectively increase the resolution and accuracy slightly.
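To put a rough number on why working closer helps: for a triangulation-based depth sensor, depth is z = f·b/d (focal length times baseline over disparity), so the smallest depth step is about z²·Δd / (f·b). Below is a back-of-the-envelope Python sketch of that relation. All three default parameters (focal length, baseline, disparity step) are assumed nominal values chosen for illustration, not measured Kinect specs, and the function name is hypothetical.

```python
def depth_step_m(z_m, focal_px=580.0, baseline_m=0.075, disparity_step_px=0.125):
    """Approximate smallest resolvable depth change at distance z_m, in meters.

    Derived from z = f * b / d, which gives dz ~= z^2 * dd / (f * b).
    The default parameters are assumptions, not calibrated values.
    """
    return z_m ** 2 * disparity_step_px / (focal_px * baseline_m)


if __name__ == "__main__":
    for z in (0.5, 1.0, 2.0, 4.0):
        print(f"z = {z:.1f} m -> depth step ~ {depth_step_m(z) * 1000:.1f} mm")
```

Whatever the real parameters are, the step grows with the square of the distance, so halving the working range cuts it to a quarter. This only covers depth quantization; lens distortion from an add-on optic could easily eat some of that gain.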

Hi,

First, thanks for the feedback. I hope a lot of you will give ReconstructMe a try.

As far as the accuracy is concerned, we’ve published some figures in our newsgroup here:

https://groups.google.com/d/msg/reconstructme/6sIXHj_ksTI/ttgj-jSpgFIJ

With the default calibration and OpenNI backend I would assume an accuracy of +/- 4mm (worst case).

We could generate the mesh in much higher resolution, but that doesn’t pay off since the limiting factor is currently the sensor.

We’ve tested the Xbox Kinect, the Asus Xtion Pro Live, and now the Kinect for Windows (not in the release yet). Resolution and accuracy are similar across these devices, although some users indicate that the Asus device generates better results.

Please join our newsgroup if you have technical questions. We’d be happy to answer them.

Best,

Christoph

Hasn’t this been done before? In any case I don’t see it being very useful for most things since these kinds of sensors are way too inaccurate from what I’ve seen.

To my knowledge, no one has offered a turn-key system before.

Useful depends on the application. In my case this caught my interest because I’d like to use it for scanning the empty shell of a car I am building into a LeMons race car. The plan is to scan the shell and use the model for designing a roll cage in a CAD program.

This has been done before, once that I’ve seen, by Microsoft’s laboratories (I forget the link; someone else will doubtless post it soon). It’s nothing like what you’ve seen before.

Here you go:

http://research.microsoft.com/en-us/projects/surfacerecon/

Some robotic platform using Kinect and OpenCV has it implemented (+ color) for building 3D environment maps for the robot.

http://www.youtube.com/watch?v=5o3ABX7xYJU

http://www.youtube.com/watch?v=kvAnGlIokbE

I like this one

http://www.youtube.com/watch?v=9URIR-dEWBM

I’ve used SLAM on ROS (Robot Operating System), specifically RGBD-SLAM, and it is really slow and, if I remember right, RAM hungry, as is the point cloud software.

So it’s not really fast enough yet for real-time 3D mapping. Perhaps if they added OpenCL/CUDA support they could do it faster. I would expect so anyway, since 3D stuff is pretty matrix heavy.

Yes, it’s REALLY RAM hungry (to the point of crashing after a 15-minute scan with 8 GB of RAM), but I would not call it slow, as it is doing the scanning in real time.

Exporting to a mesh is very, very slow though. I thought the PCL (Point Cloud Library) already had GPGPU stuff to make it faster; is RGBD-SLAM just not using it? (I haven’t tried the latest 0.7.0 binaries.)

However the newer SLAM solutions are amazing. Check out DTAM here http://www.youtube.com/watch?v=Df9WhgibCQA

(PDF) http://www.doc.ic.ac.uk/~ajd/Publications/newcombe_etal_iccv2011.pdf

or anything by Andrew Davison

http://www.doc.ic.ac.uk/~ajd/