Of course putting a microcontroller into sleep mode or changing the clock rate has an effect on the power consumption of the chip, but what about different bits of code? Is multiplying two numbers more efficient than adding them, and does ORing two values consume more power than NOPping? [jcw] wanted to compare the power draw of a microcontroller running different loops, so he threw some code on a JeeNode and hooked it up to an oscilloscope.

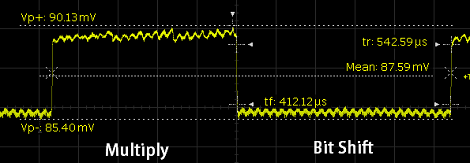

For his test, [jcw] compared two operations: multiply and shift left. The loops run 50,000 and 5,000 times, respectively (bit shifting is really slow on ATMegas, apparently), and he watched the oscilloscope as the JeeNode did its work.

Surprisingly, there is a difference in power consumption between the multiply and shift loops. The shift loop draws 8.4 mA, while the multiply loop draws 8.8 mA. Not much, but clearly visible and measurable. While you’re probably not going to optimize the power draw of a project by only using low-power instructions, it’s still very interesting to watch a microcontroller do its thing.

On the higher-end processors a lot is made about minimising the number of times you update registers, in particular when you change the state of the register i.e. 1->0 or 0->1. If it changes to the same value (as in the case of most of the bits in the bit-shift) then you don’t consume that much.

On the sorts of geometries that are used for micro-controllers the power consumption of the combinatorial logic doesn’t equate to much (on the really small stuff it’s down to the wires and general leakage of the technology – hence very low voltages)
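To make the register-toggling point concrete, here is a minimal C sketch that counts how many bits change state between two 16-bit values. The function name `toggled_bits` is made up for illustration, and bit flips are only a rough stand-in for switching activity, not a power model of any real chip.

```c
#include <stdint.h>

/* Count the bits that differ between two 16-bit values (Hamming distance).
   Hypothetical helper: a crude proxy for how many register bits toggle. */
unsigned toggled_bits(uint16_t before, uint16_t after)
{
    uint16_t diff = before ^ after;       /* 1 wherever a bit changed state */
    unsigned count = 0;

    while (diff) {
        diff &= (uint16_t)(diff - 1);     /* clear the lowest set bit */
        count++;
    }
    return count;
}
```

For example, `toggled_bits(0x0001, 0x0002)` counts two flips (one bit falls, one rises), while writing the same value back counts zero.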

When optimizing, you have to keep in mind the time taken per instruction. If different instructions take different amounts of time, or if you have to combine multiple lower-power instructions to do the same job as one higher-power instruction, you may be using more energy for the same job.

Yes – I recall discussion about powersaving modes on laptops that may not benefit much from large computation due to the longer execution time. Granted they are completely opposite ends of the spectrum comparing a modern CPU to a uC.

It’s always made me wonder a bit though. In the age-old car analogy there is a huge difference: slow and steady is vastly more fuel efficient than fast and short. I’m guessing the mechanical nature and friction at higher speeds have a lot to do with that.

Intel recommends a “hurry up and wait” strategy. More power can be saved if the CPU is run at full speed when work needs to be done, so it can go back to sleep at the lowest state for the longest time possible.

Site is dead, but something tells me he did it wrong: used C or Crapuino or some Basic instead of assembler, not to mention he probably loops after one instruction instead of lining up 1 KB of the same instruction and looping that.

yup. bit shifting on AVR is fast (but instructions only do it by one bit) … he used gcc, which makes a loop to shift the right amount…fail

As documented on the linked site and commented on here, the AVR lacks a barrel shifter, so gcc has no choice but to generate a loop. Using assembly language would make no difference. Also, the reference to “Crapuino” is very out of place since this work was done on a JeeNode, not an Arduino. The purpose of the exercise was not to get high performance, only to measure power consumption in typical operations, so loop unrolling isn’t appropriate. And the site is certainly not dead; there’s a huge archive of great stuff there, which I suggest you read.
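As a rough illustration of what "no barrel shifter" means, a multi-bit shift has to be built out of one-bit shifts. The C sketch below does that explicitly; `shift_left_by` is a hypothetical name, and this is not the code gcc actually emits, only the same idea written out.

```c
#include <stdint.h>

/* Shift a 16-bit value left by `count` places using only one-bit shifts.
   Hypothetical helper: on a core without a barrel shifter, every extra
   bit position costs another pass through a loop like this one. */
uint16_t shift_left_by(uint16_t value, uint8_t count)
{
    while (count--) {
        value = (uint16_t)(value << 1);   /* one single-bit shift per pass */
    }
    return value;
}
```

The running time grows with `count`, which is why a large shift amount makes the loop look slow even though each individual shift is cheap.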

there’s a big difference:

gcc generating a loop, in pseudo – asm:

#define LOOP_COUNT 10

r1 = 0

loop: if r1 >= LOOP_COUNT

goto end

left shift r0 //assuming no second input to specify

//the shift amount, since there’s no barrel shifter

r1++

goto loop

end halt

doing the same thing, manually:

left shift r0

left shift r0

left shift r0

left shift r0

left shift r0

left shift r0

left shift r0

left shift r0

left shift r0

left shift r0

The first one takes about 50 instructions to complete (it’s not 100% optimal for a loop, but good enough to make a point)

The second one takes 10 instructions.

They’re both O(n), but one is n and the other is 5n. n beats 5n in terms of efficiency any day.

Note: yes, this could go to shit with caches, with cached instructions in the loop vs. uncached ones in the unrolled version. Also, the space taken up is O(1) for the loop and O(n) for the unrolled version. It’s a tradeoff, but for a test like this it probably doesn’t matter, especially if he uses the same method for each instruction. The repeated loading of instructions vs. a cached loop might increase power use, but that increase would be part of the baseline for every test, so you could better tell the difference between the power each instruction uses.

I’m just curious what exactly the test case looks like. Specifically the output assembler code. Did he take optimization into account?

“Oh hay, multiplication is more expensive than bitshifting.”

Well YEAH. It was mentioned early in my first microcontrollers class that if you need to multiply or divide an integer by a power of 2, you should probably use bitshifting instead.

Incidentally, this is why I also try to use & operations instead of % operations to catch odd numbers. I haven’t tested it, but something tells me that the fact that a % involves division will make it fairly expensive.

Don’t bother replacing “x%2” with “x&1” if the former reads better to you. An optimizing compiler will produce the same code for both expressions.
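For reference, the two forms of the odd-number test look like this in C. The function names are made up for the example, and unsigned operands are assumed; with signed values, `x % 2 == 1` misses negative odd numbers, so compare against zero instead.

```c
#include <stdbool.h>
#include <stdint.h>

/* Odd test written with the remainder operator (hypothetical name). */
bool is_odd_mod(uint16_t x)
{
    return (x % 2u) != 0;
}

/* Same test written with a bit mask (hypothetical name). */
bool is_odd_and(uint16_t x)
{
    return (x & 1u) != 0;
}
```

Both functions return the same result for every input, so the choice comes down to which one reads better.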

@Ken

That’s comforting to know. I’ve been making a habit of it for long enough that they both read about the same to me, though.

“optimizing compiler will produce”

optimizing compiler might produce

Charliex: Any compiler that misses this does not deserve to be called optimizing!

Compilers have been producing better (e.g. faster) code than experienced assembly language programmers for decades, and they don’t miss obvious bottlenecks like division by a constant. Check out the code your favorite compiler generates for division by a constant (any constant), and you’ll see what I mean.

Note to self: read article first.

Error in article: the multiply required more power than the bitshift (though this is probably offset by the factor of 10 increase in runtime).

My work was with a Motorola HC11.

Hmm. Now I’m curious which processors (the ones at the core of a microcontroller and the ones you install in personal computers, either way) have barrel shifters and which don’t. It seems like it would be a pretty bad oversight for Intel to leave something like that out.

Wolfram,

From my Computer Architectures class I recall the time penalty of OP codes. Some operations take more machine cycles than others. It also makes sense what each command does with the gates of the core, i.e. using more gates, or more changes to gate states will consume more power.

I’m asking you to consider the “tone” of your reply “well YEAH”. I (and I suspect most of HaD readers) did not have a microcontrollers class and so this is new to many of them.

Bit late to change the tone of my OP now, HaD doesn’t have an edit button ;p

It should still be obvious to anyone who thinks about it, though. Multiplication and division are inherently expensive operations, and even done by hand, they are represented as sequential addition and subtraction. Computers don’t have the ability to intuit like humans do. If you go just by silicon chip space, it’s significantly cheaper to handle both operations in software as sequential addition or subtraction. (Multiplication takes up a lot more space than addition, and it reduces the maximum clock speed. Division even more so. I wrote an unclocked divider for my final project at school, ended up multiplexing the outputs to reduce the number of dividers from around 20 to closer to 8, and still 80-90% of the FPGA I was using ended up being occupied with dividers.)

Doing it that way is really slow, though.

I actually believe this is one of the more sophisticated ways of cracking some RSA encryption… by watching the time it takes for a processor to complete its cycles, you can infer what it was calculating. Crazy stuff.

The bit shift operations on AVRs (LSL/LSR/ROL/ROR) all take only one cycle so they aren’t ‘slow’ per se, but they only shift left/right one bit at a time. He’s using 16-bit integers *and* a ridiculous number of shifts (321). Under those circumstances gcc quite reasonably implements the shift as a loop. In addition, an 8-bit x 8-bit multiply only takes 2 cycles, but of course you need several of those plus some additions and shifts to implement a 16-bit x 16-bit multiply, and because of C’s type promotion rules, most arithmetic ops end up being 16-bit, as that’s the size of an int with gcc on AVR.
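Here is a small C sketch of how a 16-bit multiply can be assembled from 8-bit multiplies, as described above. The function name `mul16_from_8bit_parts` is hypothetical, and this only models the arithmetic; it is not the instruction sequence gcc generates. Only the low 16 bits of the product are kept, which matches a 16-bit `int` result, so the high x high partial product is never needed.

```c
#include <stdint.h>

/* Low 16 bits of a * b, built from three 8-bit x 8-bit partial products.
   Hypothetical helper for illustration only. */
uint16_t mul16_from_8bit_parts(uint16_t a, uint16_t b)
{
    uint8_t a_lo = (uint8_t)(a & 0xFF), a_hi = (uint8_t)(a >> 8);
    uint8_t b_lo = (uint8_t)(b & 0xFF), b_hi = (uint8_t)(b >> 8);

    /* low x low lands in bits 0..15 */
    uint16_t low = (uint16_t)((uint16_t)a_lo * b_lo);

    /* the two cross terms land in bits 8..23; only their low byte survives */
    uint16_t cross = (uint16_t)((uint16_t)a_lo * b_hi + (uint16_t)a_hi * b_lo);

    /* high x high would land in bits 16..31, so it is dropped entirely */
    return (uint16_t)(low + (uint16_t)(cross << 8));
}
```

That is three 8-bit multiplies, two additions and a byte-wide shift for one 16-bit result, which is where the extra cycles come from.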

There’s really nothing here other than ‘Making the CPU do work uses energy’ – well, duh.

Performing 321 shifts on a 16-bit register strikes me as a really expensive way of clearing that register. Small wonder it would take 10x as long as multiplication, he’s overloading the register by 2000%. (I really need to get in there and read the whole article to figure out what he was trying to do.)

This is like firing a howitzer at a piece of safety glass to determine how bullet-proof it is. You’re going to fail regardless, and it’s not really going to tell you anything useful about what you’re actually trying to test.

HAD, you got things swapped: multiply consumes more.

The code from the website shifts a 16-bit number by 321 places, so there is a lot of added code because the CPU doesn’t support it directly; it’s more than a simple 8-bit shift instruction.

This is the insight that enables many side-channel attacks against crypto on micros.

http://www.cryptography.com/public/pdf/DPA.pdf

Wow! Thanks for the link.

Besides the obvious implied application of this (energy efficient code), my understanding is that the real magic is in side-channel attacks.

Gating the clock to the multiplier unit perhaps? It would make sense if the manufacturer had implemented it as an iterative multiplier and is using an internal ring oscillator to run it much faster than the rest of the CPU. Just switch it off when not in use.

Power consumption varying with the complexity of operations? Not surprising, though cool to see it documented.

The ATMega doesn’t have a barrel shifter? Now that is surprising, especially since it’s so common to optimize by bit-shifting instead of multiplying/dividing by powers of two. Makes me glad I’m a PIC user.

Barrel shifter…

I HaDn’t heard of such before, I’ll DAGS (Do A Google Search) to find out more.

Except of course that PIC doesn’t have a barrel shifter either…

RLF

Rotate Left f through Carry

The contents of register ‘f’ are rotated one bit to the left through the Carry Flag. If ‘d’ is 0 the result is placed in the W register. If ‘d’ is 1 the result is stored back in register ‘f’.

16-bit PICs do

So does AVR32. But AVR32 and the 16-bit PICs aren’t the same as AVR and PIC, so the relevant information content is zero…

I once heard that on a Commodore 64, it might be possible to make the red LED power light on the top of the console ‘flicker’ or change brightness depending on the code that was running. I never got it to work, but this certainly does shed some light on how it might have worked.

For some applications measuring *power* consumption may not be as important as measuring *energy* consumption. In the example given the multiply drew only 5% more current (thus 5% more power) than using the shifts. But if the multiply completed the job a lot faster than a similar shift based multiply routine, then potentially the micro could return to some very low power sleep mode thus extending battery life.
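The power vs. energy distinction is easy to put into numbers, since energy is voltage times current times time. The C sketch below is only an illustration: the function name is made up, the 8.4 mA and 8.8 mA figures come from the article, and the 3.3 V supply and the run times in the usage note are assumptions, not measurements.

```c
/* Energy in millijoules for a constant supply voltage and current draw.
   Volts x milliamps x seconds gives millijoules.
   Hypothetical helper; none of the inputs are measured here. */
double energy_millijoules(double volts, double milliamps, double seconds)
{
    return volts * milliamps * seconds;
}
```

With an assumed 3.3 V supply, a routine drawing 8.8 mA for a hypothetical 0.1 s would use `energy_millijoules(3.3, 8.8, 0.1)`, about 2.9 mJ, while one drawing 8.4 mA for a hypothetical 1 s would use about 27.7 mJ. The lower-current routine can still cost far more energy if it runs longer.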

Still it would be more efficient to use a single multiply instruction instead of multiple shift instructions. You would expect that to be done by an optimising compiler – but is GCC that smart yet?

He updated the article. Bit shifting is faster.

Don’t forget the other two variables in the equation…

Memory and Cost

Others might argue physical size matters too.

So, you’ve got Power consumption, Speed, Memory required, Cost, and Physical size/weight.

Now maybe, they all might matter if you’re building a satellite in the ’70’s, but if you’re building a couple thousand devices and shipping them by air, it could matter too.