Fresh from Microsoft Research is an ingenious way to reduce interference and decrease the error in a Kinect. Bonus: the technique only requires a motor with an offset weight, or just an oversized version of the vibration motor found in a pager.

As the first commodity 3D depth sensor of its kind, the Kinect really isn’t that good at tracking. In every Kinect demo we’ve ever seen, there are always errors in the 3D tracking or missing data in the point cloud. The Shake ‘n’ Sense, as Microsoft Research calls it, does away with these problems simply by vibrating the IR projector and camera with a single motor.

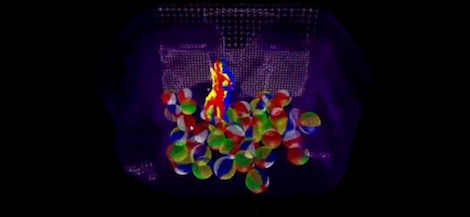

In addition to getting high quality point clouds from a Kinect, this technique also allows for multiple Kinects to be used in the same room. In the video (and title pic for this post), you can see a guy walking around a room filled with beach balls in 3D, captured from an array of four Kinects.

This opens up the doors to a whole lot of builds that were impossible with the current iteration of the Kinect, but we’re thinking this is far too easy and too clever not to have been thought of before. We’d love to see some independent verification of this technique, so if you’ve got a Kinect project sitting around, strap a motor onto it, make a video and send it in.

[youtube=http://www.youtube.com/watch?v=CSBDY0RuhS4&w=470]

This is truly elegant engineering, I love it!

Not wanting to be a spoiler, but this is meant to solve interference between *several* Kinects; from the YouTube text: “Shake ‘n’ Sense is a novel yet simple mechanical technique for mitigating the interference when two or more Kinect cameras point at the same part of a physical scene”

It does not improve performance for a single Kinect, as far as the info in the video goes.

It’s true that it doesn’t improve the quality of a single sensor, but it DOES enable multiple sensors to be used on the same scene (stated in the summary and the movie). Multiple inputs can be combined to provide enhanced sensor quality and increased coverage, and to open up more possibilities for this technology.

Also, this same method would work fine with improved optics in the Kinect 2, allowing for potential support for multiple sensors.

Who’da thunk it, so simple. I wonder if any care needs to be taken to keep the weighted motors out of sync with each other.

I bet you could manage it for sure just by making all the weights vary in mass slightly.

It wouldn’t matter because the cameras aren’t from the same viewpoint so the motion of the pattern from one sensor will be vastly different when viewed from another sensor.

Doesn’t the Kinect come with an accelerometer too so it knows how to level and calculate its view angle? I wonder if the standard driver software will allow the user to bypass the accelerometer so it doesn’t freak out with all the shaking going on. Even better would be if the accelerometer had a fast enough update and fine enough resolution that it knew its deflection at a given moment and could refine where it believes its point cloud is…. Only one way to find out…(digs Kinect out of closet and steals wife’s “back massager”) …. Now that I think about it, how did they come up with this solution? O.o

Look up the “V Motion Project” on vimeo. It was done for an energy drink company, but it uses this principle.

After you’ve been dubstepped out, the writeup is kinda interesting:

http://www.custom-logic.com/blog/v-motion-project-the-instrument/

I imagine this would work for a single Kinect, actually. It’s a physical version of a technique used to increase the number of bits of accuracy for an ADC: introduce noise into your input and average the measurements. Atmel actually has an application note describing it here (PDF)
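For anyone who wants to see the ADC trick in numbers, here is a minimal Python sketch. It assumes an ideal quantizer with a step of 1 and uniform noise of half a step either way; the names and values are made up for illustration and come from neither the Atmel note nor the Kinect. Whether the same gain carries over to a depth sensor is exactly the open question.

```python
import random

def quantize(x, step=1.0):
    """Ideal ADC model: round the input to the nearest multiple of step."""
    return round(x / step) * step

def average_reading(true_value, n_samples, noise_amplitude, rng):
    """Average n_samples quantized readings, adding uniform noise before each one."""
    total = 0.0
    for _ in range(n_samples):
        dither = rng.uniform(-noise_amplitude, noise_amplitude)
        total += quantize(true_value + dither)
    return total / n_samples

rng = random.Random(0)   # fixed seed so the run is repeatable
true_value = 3.3         # hypothetical input that sits between two ADC codes

# Without noise every sample rounds to the same code, so averaging gains nothing.
plain = average_reading(true_value, 4096, 0.0, rng)
# With half a step of noise the samples split between neighbouring codes,
# and the average settles close to the true value.
dithered = average_reading(true_value, 4096, 0.5, rng)

plain_error = abs(plain - true_value)
dithered_error = abs(dithered - true_value)
print(f"no dither: {plain:.3f}  with dither: {dithered:.3f}")
```

The undithered average stays stuck on the nearest code no matter how many samples you take, while the dithered one recovers a fraction of a step.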

Dithering on instruments to increase accuracy isn’t particularly new, although application to the Kinect is obviously novel. This is definitely one of those things that now that you see it you’re surprised it hadn’t been done before.

Basically saccades, innit? Agreeing with those who are saying “How did everyone else not think of that?”

I’m surprised the kinect doesn’t have some latency in the IR sensor compared to the camera that causes problems with accuracy.

Infrared and visible light both travel at the same speed, so if there is any latency, it’s in the data processing.

Dithering…

I dunno…well, maybe…but wait, no — ah, whatever you think!

Is there a way to attenuate the noise so it doesn’t sound like my Xbox is getting an unending stream of text messages?

The vibration motor found in a pager… or for those of us living in 2012, a phone…

Dithering, true, but motion blur is the more important aspect here.

Basically each infrared projector stops putting out a point cloud and starts putting out a motion-blurred point cloud. Because the infrared camera is undergoing the same motion blur, it sees the points it is projecting as true points still, but each other Kinect only sees blurry indistinct IR light, not a point cloud that it’s trying to measure.
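The motion-blur argument can be checked with a toy 1D model. This is only a sketch under loud assumptions: the dot pattern is random, the shake is a whole-pixel shift of up to 4 pixels during one exposure, and the row wraps around at the edges. None of that reflects the real Kinect pattern or optics.

```python
import random

def shift(pattern, offset):
    """Circularly shift a 1D dot pattern by a whole number of pixels."""
    n = len(pattern)
    return [pattern[(i - offset) % n] for i in range(n)]

def exposure(pattern, offsets):
    """Average the pattern over every relative offset seen during one exposure."""
    frames = [shift(pattern, o) for o in offsets]
    return [sum(f[i] for f in frames) / len(frames) for i in range(len(pattern))]

def contrast(image):
    """Variance of pixel values: high for crisp dots, low for a smeared image."""
    mean = sum(image) / len(image)
    return sum((v - mean) ** 2 for v in image) / len(image)

rng = random.Random(1)
# Hypothetical projector pattern: roughly 20% of the pixels are lit dots.
dots = [1.0 if rng.random() < 0.2 else 0.0 for _ in range(400)]
shake = list(range(-4, 5))   # assumed projector displacement during one exposure

# The Kinect's own camera moves with its projector, so the relative offset is always 0.
own_view = exposure(dots, [0] * len(shake))
# A second, independently moving Kinect sees the pattern sweep across its sensor.
other_view = exposure(dots, shake)

print(f"own contrast: {contrast(own_view):.3f}  other contrast: {contrast(other_view):.3f}")
```

In this model the shaking sensor still sees its own dots exactly, while the other sensor sees the same dots smeared into a low-contrast haze.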

Genius!

This technique works with a single kinect too. I wrote a piece of software that ran the motor up and down in slight increments, and captured depth data only while the lens was moving. It was hell on the motor, but it improved the capture quality significantly.

Does this have anything to do with the stochastic resonance phenomenon? http://en.wikipedia.org/wiki/Stochastic_resonance

If Microsoft doesn’t put this into Kinect 2.0 they’re crazy.

I haven’t had a chance to read the source article. Does this work with the stock firmware? Would it improve accuracy in Kinect games?

This makes me think of how a lock-in amplifier works.

This was originally published by Andrew Maimone and Henry Fuchs at the IEEE VR conference this March. I was there and it was a pretty interesting talk, and the general feeling in the crowd was “why didn’t we think of that?”

Here’s a link to the paper from IEEE VR if anyone is interested in more details:

http://www.cs.unc.edu/~fuchs/kinect_VR_2012.pdf

And here I was going to mention that :P

It definitely is a simple solution to the interference issue though. I myself thought that turning the cameras/lasers on/off in sequence rapidly would’ve been the solution people would go for.
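To put a number on what the on/off approach would cost, here is a rough Python sketch of a round-robin schedule. It assumes the emitters could be switched cleanly once per frame and that each sensor runs at a nominal 30 fps; the sensor names and helper functions are hypothetical.

```python
def round_robin_schedule(sensor_names, n_slots):
    """Give each frame slot to exactly one sensor, taking turns in order."""
    return [sensor_names[slot % len(sensor_names)] for slot in range(n_slots)]

def per_sensor_fps(base_fps, n_sensors):
    """Frame rate each sensor is left with when only one emitter is lit per slot."""
    return base_fps / n_sensors

sensors = ["kinect_a", "kinect_b", "kinect_c", "kinect_d"]   # hypothetical names
schedule = round_robin_schedule(sensors, 8)
fps_each = per_sensor_fps(30.0, len(sensors))                # assumed 30 fps nominal rate

print(schedule)
print(f"{fps_each} fps per sensor")
```

With four sensors taking turns, each one is down to a quarter of its frame rate, whereas the shaking trick lets all of them run at full rate.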

+1

Pretty simple and incredibly powerful!!!

Do you think this idea can help kinect to sense fast motions more accurately?

What the hell’s a “pager?” And why do my grandparents keep giving me these sheets of paper with all these numbers on them? I think they call them “chaks” or something like that…

I’ll take care of those for you…. In fact it’ll be easier to dispose of them properly if they just put my name on them….

I can see many Hackaday readers (those who own an Xbox) playing Xbox without force feedback, because they needed the motor for their Kinect.

I’m sorry, but may I ask that they do the voiceover for their video with an actual microphone and, more importantly, with a person who doesn’t have a severe head cold? I found it unbearable and had to turn the sound off.

Now if someone can make a case mod for the Kinect that takes advantage of this and the “glasses” research that improves the scan resolution, that would be awesome. Just another reason for me to get off my butt and go buy a Kinect. Sadly I’ll have to invest in a more powerful netbook to pull it off while on the go ^^;

How about applying super resolution techniques to a multiple Kinect setup?

http://en.wikipedia.org/wiki/Super-resolution

Wow, that’s cool!

Any robot that’s using a Kinect setup is asking to get blinded by interference, especially if it’s an interference attack!