One of the problems future engineers spend a lot of class time solving is the issue of odometry for robots. It’s actually kind of hard to tell how far a robot has traveled after applying power to its wheels, but [John] has a pretty nifty solution to this problem. He converted an optical mouse into an odometry sensor, making for a very easy way to tell how far a robot has traveled regardless of wheels slipping or motors stalling.

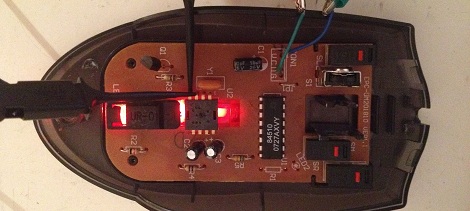

The build began with a very old PS/2 optical mouse he had lying around. Inside this mouse was an MCS-12085 optical sensor connected to a small, useless microcontroller via a serial interface.

After dremeling the PCB and discarding the microcontroller, [John] was left with an optical sensor that records distance at a resolution of 1000 dpi. It reports movement as a signed value from -128 to 127, rolling over every time the sensor moves more than about 3.2 mm (127 counts at 1000 dpi).
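As a rough illustration of the arithmetic, here is a minimal Python sketch of turning those signed readings into a distance. It assumes each reading is a raw byte holding a two's-complement delta since the previous read, and that the sensor is polled often enough that no single delta overflows. The function names are hypothetical and not taken from [John]'s code.

```python
COUNTS_PER_INCH = 1000  # resolution quoted in the post
MM_PER_INCH = 25.4


def to_signed8(raw_byte):
    """Interpret a raw byte (0..255) as a two's-complement value (-128..127)."""
    if not 0 <= raw_byte <= 255:
        raise ValueError("expected a single byte")
    return raw_byte - 256 if raw_byte > 127 else raw_byte


def accumulate_mm(raw_deltas):
    """Sum a sequence of raw delta bytes and convert the total to millimetres.

    Assumption: every byte is the movement since the previous read along one axis.
    """
    total_counts = sum(to_signed8(b) for b in raw_deltas)
    return total_counts * MM_PER_INCH / COUNTS_PER_INCH


if __name__ == "__main__":
    # One full-scale positive reading is a little over 3.2 mm.
    print(round(accumulate_mm([127]), 2))
```

Summing counts first and converting once at the end avoids accumulating rounding error on every read.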

As far as detecting how far a robot has moved, [John] now has the basis for a very simple way to measure odometry without having to deal with wheels slipping or motors stalling. We can’t wait to see this operate inside a proper robot.

Neat! I did a similar build with a propeller a while ago – if your micro can do floating point math, this gives you a fairly decent dead reckoning system if you have the patience to calibrate it. I ended up using two mice though.

http://www.youtube.com/watch?v=YiJOiqOHG1s

Ask me for the SW, I have it somewhere. Very unpolished though.

What if the wheels spin but they are not in contact with the ground?

Just aim it at the ground instead. Though I’m not sure how well that’d work.

Aiming it at the ground is preferred since you can tell if your wheels are slipping and take steps to mitigate it. Pointing two at the ground, one on each side of the vehicle, can help you make and measure more precise corners as well. (The outside of the vehicle travels further than the inside of the turn.) There are better/easier/cheaper ways of measuring wheel rotation than an optical mouse.
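To show how two sensors could measure a corner, here is a minimal dead-reckoning sketch in Python. It uses the standard differential odometry approximation, not anything described in the post: it assumes each sensor reports only its forward displacement, that the sensors sit a known distance apart (the `baseline` parameter, a hypothetical name), and that sideways readings are ignored.

```python
import math


def update_pose(x, y, theta, d_left, d_right, baseline):
    """Advance a pose (x, y, heading in radians) by one pair of readings.

    d_left / d_right: forward distance seen by the left and right sensors.
    baseline: distance between the two sensors, in the same units.
    """
    d_center = (d_left + d_right) / 2.0       # distance moved by the midpoint
    d_theta = (d_right - d_left) / baseline   # outside travels further than inside
    # Use the heading halfway through the step to reduce integration error.
    mid_heading = theta + d_theta / 2.0
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)
    return x, y, theta + d_theta


if __name__ == "__main__":
    # Equal readings on both sides: straight-line motion, no heading change.
    print(update_pose(0.0, 0.0, 0.0, 10.0, 10.0, 100.0))
```

Errors in heading accumulate quickly with this kind of integration, which is one reason calibration of the baseline and sensor scale matters.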

Every method of tracking has faults, which is why bots that need truly accurate positioning combine multiple measurements from a range of sensors. It’s a surprisingly complex problem to figure out which readings are the best under which circumstances and how to turn that info into accurate world info.

Brian’s last paragraph suggests the light/optics are pointed at the ground, not at a wheel, but the first paragraph in John’s blog could be interpreted as saying they are pointed at a wheel surface. If the blog mentions which surface (floor or wheel) is being measured, I’m overlooking it. In my comment directly on the article, my pondering on how well this would work on all surfaces was based on Brian’s write-up.

MCS-12085 seems to be a clone of ADNS-2610.

I could not find MCS-12085 datasheet, but ADNS-2610 datasheet is available. Control registers and raw pixel data register match exactly the description in ADNS-2610.

So one can use raw 18×18 pixel data from MCS-12085.

Good tip. Of course, if the surface is too rough (like carpet, or if the robot will roll over things like socks or your pet’s tail), accuracy can be lower than wheel odometry.

And if you must fall back to that, mice still come to the rescue. The optical sensor can be used to measure wheel rotation, especially if you point it at an appropriate surface glued to their inner face. If you prefer quadrature encoders, old ball mice are a great source of them.

Far out repurposing of an everyday item. Thanks to John for sharing. Diversions are nice for keeping one sane, but back to building Babbage’s machine, OK? ;) Will be interesting to see how well it will work on some carpet types, and most outdoor surfaces, for those who adapt this idea in their robots.

Nice!

Had the same idea but never the time to build it.

Reassuring to see that you can use an old PS2-Mouse for that.

I tried this for a robot contest some years ago. It works very well on a flat surface (the kind that works well for mice), but make it 3D and problems start appearing.

When you change the distance between the sensor and the surface you can see a pointer “jump”, it tells you it traveled a long distance, but it didn’t.

Had the robot tested on a flat surface that had sheets of paper on it. Get the sensor at the edge of a sheet that is just slightly raised and you can get the pointer to oscillate and act like crazy on the screen.

Yep, pretty much why I lost interest. I wonder if it would play nice with a traditional encoder.

I don’t think it’s so much an issue with the sensor itself, or the distance between sensor and surface. I can lift my mouse straight up off my mousepad without moving the pointer; so it handles the change in focus and field of view with no problem.

It’s probably due instead to the offset illuminator LED. Since it casts light at an angle, it will have more visual impact on 3D features as distance increases. Maybe a different illumination scheme would minimize the problem?

Here’s a mouse controlled robot I built in 2006:

http://www.youtube.com/watch?v=yCEJRxcduts

//Edited by Brian…

Done that a few years ago, with a simple optical sensor and a high-end laser sensor used in gamer gear. In both cases you can’t get a very accurate or stable measurement. Often you will have “jumps” like Bogdan says, but there is also some drift depending on the surface, temperature, speed, acceleration, errors as soon as the sensor gets a little dirty, performance degradation when the height varies…

None of these are a problem in a mouse: you provide a feedback loop that negates all these problems (except extreme dirt-induced problems). You see the pointer, and you correct so that it goes where you want. Try this: start at the border of the screen and move the mouse by a known distance without looking at the screen. Take a screenshot, repeat 10 times, compare.

So basically as an absolute sensor it’s not very good :/

As part of a multi-sensor setup with complex fusion algorithm however it can be useful, but that’s another story.

If doing that mouse experiment, make sure to have mouse acceleration turned off; otherwise not only the mouse’s start and end positions matter, but how fast it is moved as well.

I have not actually tried it, but I would have thought, in the short term at least, that a mouse would produce the same result if mouse acceleration is turned off.

Take a look at this page, it might offer more info about doing this. The guy uses his for a mini sumo robot and mentions the pros and cons of dead reckoning with it.

http://imakeprojects.com/Projects/seeing-eye-mouse/

I did this sort of thing back in 2007. First, I hacked the mouse like John did and then created a custom, single-sided PCB to hold the mouse sensor, which is more useful for robotics applications. Since I also used the ADNS-2610, the PCB can still be useful.

http://home.roadrunner.com/~maccody/robotics/mouse_hack/mouse_hack.html

http://home.roadrunner.com/~maccody/robotics/croms-1/croms-1.html

This is a demo of the above mouse-controlled platform; the only feedback is from the dual mouse sensors.

It was controlled by PIC16F877

http://www.youtube.com/watch?v=_dBV5sSMCis&list=PLgLvgZzJEIB0sYTAl4Navv6UpbQMTRd-2&index=1&feature=plpp_video

Amnon

This is a demo of the mouse-controlled platform doing drag compensation; the only feedback is from the dual mouse sensors.

http://www.youtube.com/watch?v=ZSQRYJz6RBY&list=PLgLvgZzJEIB0sYTAl4Navv6UpbQMTRd-2&index=4&feature=plpp_video

And the last one is synchronized movement of two of these platforms together.

More info on the YouTube clips.

http://www.youtube.com/watch?v=dsAOX7nYF7I&list=PLgLvgZzJEIB0sYTAl4Navv6UpbQMTRd-2&index=3&feature=plpp_video

Amnon

I tried to do something like this before, although I used the PS/2 output rather than trying to reprogram it or something. It worked as long as the robot was going slow. At a few inches per second, it worked. But I had it attached to an RC car that wanted to go feet per second, and the mouse would lose track of its location and either report no data or something ridiculous (sometimes both).

Optical mice essentially take a small picture of the ground and see how various spots move to give you X/Y. If the view of the mouse moves completely out of frame between samples, the mouse doesn’t know what is going on.
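That reasoning can be turned into a back-of-the-envelope speed limit. The Python sketch below is only an approximation under stated assumptions: the sensor needs some fraction of each frame to overlap the previous one, and the frame rate and view width used in the example are illustrative placeholders, not figures from any datasheet.

```python
def max_trackable_speed(frame_rate_hz, view_width_mm, min_overlap=0.5):
    """Estimate the top speed (mm/s) before consecutive frames stop overlapping enough.

    frame_rate_hz: frames captured per second (assumed value, check your sensor).
    view_width_mm: width of the patch of ground the sensor sees (assumed value).
    min_overlap: fraction of the frame that must still be shared with the
                 previous frame for tracking to work (hypothetical parameter).
    """
    if not 0.0 <= min_overlap < 1.0:
        raise ValueError("min_overlap must be in [0, 1)")
    # Between two frames the view may shift by at most the non-overlapping part.
    max_shift_mm = view_width_mm * (1.0 - min_overlap)
    return frame_rate_hz * max_shift_mm


if __name__ == "__main__":
    # Placeholder numbers only: 1000 frames/s and a 1 mm wide view.
    print(max_trackable_speed(1000, 1.0), "mm/s")
```

With these placeholder numbers the limit works out to 500 mm/s, which is the right order of magnitude for "inches per second works, feet per second does not", but the real limit depends on the specific sensor and optics.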

As I was on a tight deadline and budget, we ended up flipping the mouse over and using the scroll wheel as an encoder. Lower resolution, more susceptible to slipping (it was less accurate), but it worked well enough for that project.

If I had to do it over again, I would just use some wheel encoders. The wheels slipped less than the scroll wheel, and would probably be more reliable than an optical mouse.

I did some experimenting with LED and Laser-based optical mice sensors back in 2007 when developing some novel odometry methods for an unconventional vehicle.

Here’s a paper I wrote with some results on accuracy and combining the mice sensors with wheel odometry (for an unconventional vehicle):

http://vscl.tamu.edu/papers/AIAA-2007-6325.pdf

The interesting points are that the laser sensors seem to have more consistent inaccuracies (as functions of angle and rotation) than the LED sensors, indicating that they could theoretically be calibrated to pretty good accuracy.

In the end, however, it proved to be too much trouble for our application. The performance on different surfaces and even different levels of dirt on a given surface changed the sensor output.