It was only a matter of time, and now someone’s finally done it. The Oculus Rift is now being used for first person view aerial photography. It’s the closest you’ll get to being in a pilot’s seat while still standing on the ground.

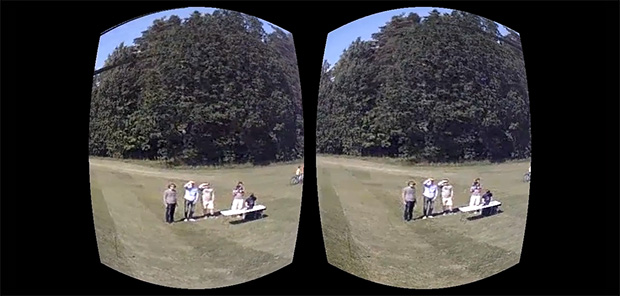

[Torkel] is the CEO of Intuitive Aerial, makers of the huge Black Armor Drone, a hexacopter designed for aerial photography. With the Rift FPV rig, the drone carries a huge payload into the air consisting of two cameras, a laptop and a whole host of batteries. The video from the pair of cameras is encoded on the laptop, sent to the base station via WiFi, and displayed on the Oculus Rift.

Latency is about 120 ms: fairly long, but still very usable for FPV flight. [Torkel] and his team are working on a new iteration of the hardware, where they hope to reduce the payload mass, increase the range of transmission, and upgrade the cameras and lenses.
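To put that latency figure in perspective, here is a rough back-of-the-envelope sketch of how far the craft moves before the pilot sees the frame that was just captured. The airspeeds are illustrative assumptions, not figures from Intuitive Aerial.

```python
def distance_during_latency(speed_m_s: float, latency_ms: float) -> float:
    """Distance in metres the craft covers during one video latency interval."""
    return speed_m_s * latency_ms / 1000.0


if __name__ == "__main__":
    LATENCY_MS = 120.0  # figure quoted in the article
    # Assumed airspeeds in m/s, chosen only for illustration.
    for speed in (5.0, 10.0, 20.0):
        travelled = distance_during_latency(speed, LATENCY_MS)
        print(f"{speed:4.1f} m/s: {travelled:.2f} m travelled before the frame is seen")
```

At a gentle camera-ship pace the gap is small; at racing speeds it grows to a few metres, which is why fast FPV pilots care about every millisecond.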

[youtube=http://www.youtube.com/watch?v=IoXSfpkUEm0&w=580]

[youtube=http://www.youtube.com/watch?v=IRKoUeqTsK8&w=580]

Nice, but 120 ms is a loooooong time for fast FPV action. I got rid of the GoPro in my FPV rig because of the lag on its A/V output, which is shorter than that.

50k for a carbon fiber quad with a gyro camera holder. I bet there are people who pay that... Well played.

+1! They are quite nice though. Coaxial hex, not a quad, so that’s 12 rotors. Mmmmm!

+1 I thought it was a typo when I first clicked through! That’s insane money

You’d better build a motion simulator chair or that’ll make you puke awfully quick.

It really isn’t that bad. Most people work through VR sickness in a couple days. Your body learns to reduce the importance of your vestibular system while strapped into the Rift. At this point I can do Superman style flying demos without reaching for the barf bag XD

That copter can haul some serious weight!

Now they need to put the camera on a pan/tilt and link an accelerometer to the Rift so it looks around in real time as the user moves his head, similar to the FLIR system on helis.
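A minimal sketch of that idea: take the head's yaw and pitch in degrees and map them to servo pulse widths for a pan/tilt mount. The 1000 to 2000 µs range with 1500 µs as center is the common hobby-servo convention, and the angle limits are assumptions; reading the angles from the Rift and sending the pulses over the radio link are left out entirely.

```python
def angle_to_pulse_us(angle_deg, max_angle_deg=90.0, min_us=1000, max_us=2000):
    """Map an angle in [-max_angle_deg, +max_angle_deg] to a servo pulse width (µs).

    Angles outside the range are clamped so the servo is never driven past
    its end stops.
    """
    clamped = max(-max_angle_deg, min(max_angle_deg, angle_deg))
    fraction = (clamped + max_angle_deg) / (2.0 * max_angle_deg)  # 0.0 .. 1.0
    return round(min_us + fraction * (max_us - min_us))


def head_to_servos(yaw_deg, pitch_deg):
    """Return (pan_us, tilt_us) for a head pose; tilt travel is assumed to be +/-45 degrees."""
    pan_us = angle_to_pulse_us(yaw_deg, max_angle_deg=90.0)
    tilt_us = angle_to_pulse_us(pitch_deg, max_angle_deg=45.0)
    return pan_us, tilt_us


if __name__ == "__main__":
    # Looking straight ahead centers both servos.
    print(head_to_servos(0.0, 0.0))
    # Looking hard left and slightly down.
    print(head_to_servos(-90.0, -10.0))
```

In practice the servo lag adds to the video latency, so some smoothing or rate limiting on the angles would probably be needed as well.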

I’m working on my first FPV quadcopter right now. I was thinking about mounting a pair of #16 keychain cameras with 120-degree lenses in a stereoscopic 3D rig. (For recorded video, the FPV would likely be from a separate camera, unless testing shows that using the video-out on one of the cameras doesn’t cause any additional load.)

Getting full stereoscopic FPV sounds hella awesome, especially with the Oculus Rift’s huge FOV if the right cameras and optics are utilized, but here are my thoughts:

1. Aside from their choice of USB-based cameras, I don’t understand what limitation they encountered that made putting a whole computer on the craft more reasonable than beaming the video to a groundstation for processing.

2. HOLY CRAP that’s a huge craft in the first video! That thing could probably lift a friggin CHILD.

3. I think the most amazing application for full immersion stereo FPV would be a micro craft, like ~8 between motors or smaller, with guarded props. The small size, and the FPV’s full immersion and depth perception would allow for flight in tight quarters.

4. I can’t wait to see a later iteration of this tech with a 3D HUD.

5. “We’re living in the future, but I didn’t even notice! I was confused by the lack of hoverboards.”

I would imagine you would get a huge increase in performance and range using 2x 5.8GHz analog transmitters. I have a single one and the picture is incredibly clear from a GoPro (yes, it is composite, but with a quality camera + 5.8GHz it is very, very clear!). Using the channels with the furthest separation and then a pair of USB composite video capture cards would work. Although the advantage they have is that, with it being fully digital, every pixel is sent (so clear object detection can be done externally) and data can be streamed along with the images (rather than having to use an On Screen Display chip with analog, unless you had a 3rd transmitter to send digital information data!!).

Either way I’m impressed with the size and power of that giant copter!

Yes! I haven’t done any flight testing yet, but I’ve fiddled around with my video rig which is very similar to what you’re describing.

I get NTSC/PAL composite video out through the USB port on the keychain camera (Even while it’s recording 720p to the SD card) much like your GoPro, and transmit via 5.8GHz 200mW TX to an RX and a battery-powered 7″ LCD screen. The picture is VERY nice indeed!

And yeah, that’s a very impressive copter they’ve built. Though, hopefully when it’s finished, mine doesn’t sound like a fleet of angry lawnmowers taking flight; I like my hearing. (And considering mine can supposedly draw 100-120 amps @ 14.8v at full throttle, I shudder to think what it takes to power THAT thing!)
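For scale, the current and voltage quoted above work out to roughly 1.5 to 1.8 kW of electrical power. A quick sketch of the arithmetic follows; the 5 Ah pack capacity used for the endurance estimate is purely an assumption for illustration.

```python
def electrical_power_w(voltage_v: float, current_a: float) -> float:
    """Electrical power in watts: P = V * I."""
    return voltage_v * current_a


def full_throttle_minutes(capacity_ah: float, current_a: float) -> float:
    """Idealised endurance in minutes if the pack were drained at a constant current."""
    return capacity_ah / current_a * 60.0


if __name__ == "__main__":
    VOLTAGE_V = 14.8  # pack voltage quoted in the comment
    for current_a in (100.0, 120.0):
        power_w = electrical_power_w(VOLTAGE_V, current_a)
        # 5.0 Ah is an assumed pack size, not a figure from the comment.
        minutes = full_throttle_minutes(5.0, current_a)
        print(f"{current_a:.0f} A: {power_w:.0f} W, about {minutes:.1f} min on a 5 Ah pack")
```

Real flight times are longer because nobody hovers at full throttle, but it gives a feel for why the big hexacopter carries "a whole host of batteries".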

My roommate and I went off on a tangent about the Rift after I showed him Caleb’s Rift attachment video. It was pretty long, but the (funny) good part was using a few attachments and creating Golems from Snow Crash =P. Considering how surprisingly comfortable people are with Google Glass, just take it to the next level with full-on creepy.

From the technology standpoint, you can do so much more with a full visor system such as an AR HUD, though I imagine latency would be a very big problem until the delay from en/decoding was mitigated, so a pretty beefy laptop would be required. To make it usable it would also need a plethora of scripts that translated directly into the HUD overlay and some kind of management system so such displays didn’t clutter up the FPV of the personal AR experience, such as including bio-electric feedback from facial tics and tongue movement or a pico camera for eye-tracking instead of stating obnoxiously “OK, Rift” to get the scripts’ attention…

A few days ago I posted a thread on the oculus forums with a tutorial on how to do this. My setup uses traditional analog video transmitters, meaning much longer range than Intuitive Aerial’s solution. It’s cheaper as well, you can use normal FPV equipment. Latency is about the same, 130ms.

https://developer.oculusvr.com/forums/viewtopic.php?f=20&t=2358

THIS is what I have been waiting for!

We have several Rift developer units at my job just to play with. . . so far about half of us (myself included) get sick using them.

HOWEVER, having depth data for telepresence robots when navigating tight spaces or using a manipulator is super important and I’m glad to see this post.

As an aside, I talk with UCB vision scientists a lot (which pretty much makes me an expert, right?) and apparently VR sickness has more to do with gaze convergence being fixed to a single plane, which confuses your brain when it is presented with simulated depth data via stereo vision. It is less provoked by the vestibular system. In normal human vision, the convergence point changes with the range of the object your gaze is fixed upon. Your brain uses the convergence point as a sort of range finding. This lack of convergence-derived depth data conflicts with the simulated depth data from the 3D VR environment. A researcher is working on an improved goggle that would somehow vary the convergence point as well, which should make the VR experience more natural.

Until then, I will do my best to train away my puke response. . . but I await the day for a headset that accommodates eye movement and focal distance.
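As a rough illustration of how the convergence point works as a range finder, the angle between the two lines of sight can be computed from simple geometry. This is only a sketch: it assumes symmetric fixation on a point straight ahead, and the 63 mm interpupillary distance is an assumed typical value, not something from the researchers mentioned.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle in degrees between the two eyes' lines of sight when fixating
    a point straight ahead at distance_m. ipd_m is the eye separation."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


if __name__ == "__main__":
    # Assumed fixation distances in metres, from arm's length to far away.
    for distance_m in (0.3, 1.0, 3.0, 10.0):
        angle = vergence_angle_deg(distance_m)
        print(f"{distance_m:5.1f} m: {angle:.2f} degrees")
```

The angle changes quickly at close range and barely at all beyond a few metres, which fits the idea that the cue matters most for nearby objects.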

Pedantically yours,

Robot

Thanks. That’s very informative.

I have been flying 3D FPV for 18 months using the ‘3D Cam FPV’ at nghobbies and love it. I think it has to be 3D if you are going to do it but I know many people will be happy with 2D on the large FOV. I see quite a lot of Rift youtube videos of games where the person does not realize it is 2D.

The Rift is a natural target for FPV flight and I have teamed up with Trevor the 3D Cam FPV designer for ‘Transporter3D’ that will enable the Rift to be used for 2D or 3D FPV. He made the first 3D rift FPV flight a month ago…

http://youtu.be/u74rf4ztJTI

http://youtu.be/M7WZXzhsFgY

http://youtu.be/xiqIPjAh0JQ

Still in progress but no laptop needed :-)

phil

I bet someone will make an equivalent quadrotor for about 3000$.

There are kits for gyro servo pan/tilt/roll, or you could design your own completely.

A decently sized quadrotor that can lift 1 pound payload. (includes battery weight)

Two good, yet cheap, low latency usb webcams.

Also, why use a laptop when you can use a RASPBERRY PI!

Unfortunately because of the Pi’s inadequate USB stack/hardware/something-or-other it tends to struggle a little even with 1 webcam at a high enough framerate + res to be usable.. so add two webcams and add in data streaming/processing and I think you may run out of headroom and potentially crash a VERY expensive looking copter!

An alternative would be to use those webcams that have a built-in encoder that generates the stream for you. The Pi then doesn’t need to ‘understand’ the stream, just push it on to wherever needs it (over the wireless network), which would probably work a lot better to be honest :) They are a fair bit more expensive.. they’re sold as Skype webcams, or 720p encoded webcams (something along those lines)

They should run this over LTE

If I might make a suggestion – there is really no reason to use two separate cameras with two separate RF streams. If you want better quality, just get a better camera. Instead of two whole systems to create stereoscopic vision, use a mirrored splitter. There are some high quality ones made for larger DSLRs already for this purpose. Here’s a link showing the basics for how they work:

http://www.lhup.edu/~dsimanek/3d/stereo/3dgallery16.htm

Great stuff!

Are you guys using analog transmission or really digital transmission? If digital, what kind of coding are you using to minimize the latency?