If you need a specific wavelength of light for research purposes, the naïve way of obtaining it is a white light source, a prism, and a small slit that moves across your own personal Dark Side of the Moon album cover. This is actually a terrible idea: not only will you have no reference for exactly which wavelength of light you’re letting through the optical slit, the prism itself will absorb some wavelengths more than others.

The solution is a monochromator, a device that does the same job without all the drawbacks. [Shahriar] got his hands on an old manual monochromator and decided to turn it into a device that performs automatic scans.

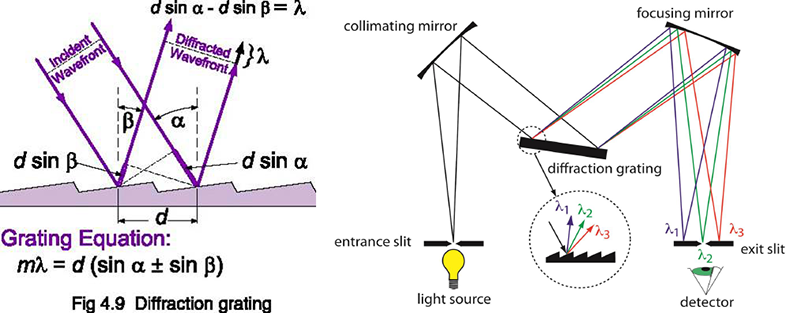

The key to a monochromator is a diffraction grating, a mirrored surface with many fine parallel grooves arranged in a step pattern. These grooves make it possible to separate light according to its wavelength, much like a prism. Unlike a prism, it’s effectively a first surface mirror meaning all wavelengths of light are reflected more or less equally.
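To make the "separates light like a prism" part concrete, here is a minimal Python sketch of the standard grating equation, m·λ = d·(sin α + sin β), where d is the groove spacing, α the angle of incidence and β the diffracted angle. The groove density used below (1200 grooves/mm) is purely an illustrative assumption, not a figure from [Shahriar]'s monochromator.

```python
import math

def diffraction_angle_deg(wavelength_nm, grooves_per_mm, order=1, incidence_deg=0.0):
    """Diffracted angle (degrees) from the grating equation
    m * lambda = d * (sin(alpha) + sin(beta))."""
    # Groove spacing d in nanometres (1 mm = 1e6 nm).
    d_nm = 1e6 / grooves_per_mm
    sin_beta = order * wavelength_nm / d_nm - math.sin(math.radians(incidence_deg))
    if abs(sin_beta) > 1.0:
        raise ValueError("no propagating diffracted beam for these parameters")
    return math.degrees(math.asin(sin_beta))

# Hypothetical 1200 grooves/mm grating, light arriving at normal incidence.
for wavelength in (400, 500, 600, 700):
    angle = diffraction_angle_deg(wavelength, 1200)
    print(f"{wavelength} nm -> {angle:.2f} degrees")
```

Each wavelength leaves the grating at its own angle, so rotating the grating sweeps a different wavelength across the exit slit.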

By adding a stepper motor to the dial of his monochromator, [Shahriar] was able to automatically scan across the entire range of the device. Inside the monochromator is a photomultiplier tube that samples the incoming light and turns it into a voltage. By sampling this voltage and feeding it to MATLAB, [Shahriar] was able to plot the intensity of every wavelength of light within the range of the device. It’s all expertly explained in the video below.
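The scan itself boils down to a "read, step, repeat" loop. The sketch below is in Python rather than MATLAB and is not [Shahriar]'s code; the `FakeMonochromator` class, the 0.5 nm-per-step figure and the single emission line at 546 nm are all made-up stand-ins so the loop can run without hardware.

```python
import math

def scan_spectrum(move_one_step, read_voltage, n_steps, start_nm, nm_per_step):
    """Read the detector, advance the dial one step, and repeat.
    Returns a list of (wavelength_nm, voltage) pairs."""
    spectrum = []
    for i in range(n_steps):
        wavelength = start_nm + i * nm_per_step
        spectrum.append((wavelength, read_voltage()))
        move_one_step()
    return spectrum

class FakeMonochromator:
    """Hypothetical stand-in for the stepper and photomultiplier:
    simulates a source with one Gaussian emission line."""
    def __init__(self, start_nm, nm_per_step, line_nm=546.0, width_nm=2.0):
        self.start_nm = start_nm
        self.nm_per_step = nm_per_step
        self.line_nm = line_nm
        self.width_nm = width_nm
        self.position = 0  # steps taken since the start of the scan

    def step(self):
        self.position += 1

    def read(self):
        wavelength = self.start_nm + self.position * self.nm_per_step
        return math.exp(-0.5 * ((wavelength - self.line_nm) / self.width_nm) ** 2)

fake = FakeMonochromator(start_nm=400.0, nm_per_step=0.5)
spectrum = scan_spectrum(fake.step, fake.read, n_steps=601,
                         start_nm=400.0, nm_per_step=0.5)
peak_nm, peak_v = max(spectrum, key=lambda point: point[1])
print(f"strongest signal at {peak_nm} nm")
```

With real hardware, `move_one_step` would pulse the stepper driver and `read_voltage` would sample the photomultiplier output; the plotting then works directly on the returned list.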

“Unlike a prism, it’s effectively a first surface mirror meaning all wavelengths of light are reflected more or less equally.”

This isn’t true; it depends on the ‘blaze’ of the grating. Also, there are ruled gratings and holographic gratings; masters for the latter are made by an optical process and have sinusoidal surfaces. Holographic gratings generally have lower efficiency but are free from the ghost images caused by periodic errors in ruled gratings. If the monochromator uses a flat grating then the smart hacker would probably want to add a linear CCD to capture the whole spectrum at once. That Verity design uses a curved grating, which means the spectrum isn’t all in focus at the same time on a flat sensor. Curved gratings allow designs with fewer optical surfaces, which are better for UV applications.

Diffraction gratings that aren’t fully characterized are actually far worse than any prism when it comes to the deleterious effects of an unknown spectral transfer function.

And if that isn’t cool enough, this probably is:

http://en.wikipedia.org/wiki/Chirped_pulse_amplification

That’s right. Rainbow lasers.

CPA rocks! I work with these types of systems every day!

.. but it’s also quickly becoming ‘old’ technology. There’s a hybrid scheme that spatially splits the broadened spectrum and amplifies slices of the spectrum, recombining each slice after amplification. These systems could potentially provide *extremely* short pulses at larger peak powers than a conventional CPA system. With this new setup, you effectively have multiple crystals for each wavelength slice, so you no longer have problems with gain saturation, etc.

Monochromators are cool, but you’re an idiot.

Both of your “drawbacks” of prisms are just as present with diffraction gratings.

You should apply to write!

With a good understanding of physics and a positive attitude oh wait I see the problem. nm.

Exactly this, no one in comments seems to get that the writers can’t be experts at everything. I think they do a fairly good job as is and could do with constructive criticisms not personal insults. I prefer to insult the insulters but they hardly understand why… It’s like they have an extreme understanding of their narrow, useless without everything else field and can’t see the big picture. Know it all comments are as arrogant as the character Sheldon in the big bang theory and as ridiculously stupid as that show is, that’s who I imagine insulting the writers.

The “writers” here are so set on the “blah is awesome because X” template that they don’t mind making up complete bullshit which makes this blog look like a joke to anyone with even cursory knowledge of the subject.

when an obvious lie smacks you in the face in the first sentence, how are you supposed to take the rest of the “article” seriously?

What are you *suggesting* though? That HaD should not link to projects they don’t completely understand? That no introduction should be used to bridge readers to the technical details in case there is a mistake?

Not to mention that the “lie” is more likely to be ignorance or a misunderstanding of the subject, which is why commenters with a better attitude than yours can step in to help.

@Marvin

Jeez, maybe just don’t make shit up? How fucking hard would that be?

That crap isn’t in the linked project, so why pull it out of their ass?

Maybe it wasn’t made up. People CAN believe their incorrect knowledge is correct, resulting in copy that is neither a lie nor fabricated.

Do better, deal with deadlines and limited research time, write clearly and succinctly and I’m sure you’ll put all the hackaday wrongs right again.

Be our know it all.

Sometimes I wonder if the Hackaday staff even watch the videos they post. Or if they do, why do they add wrong information that is not in the video?

The most serious drawback to using prisms (or any refractive device) is that they affect the quality of light they pass. They absorb light, and not evenly (absorption lines are present). They are also heavy, and can get too heavy as size increases (not a factor here).

These issues exist with telescopes. This is why large refractor telescopes do not exist. Large lenses sag under their own weight, and their centers can not be supported. At least mirrors can be fully supported from the rear.

Actually, nicely done! Getting from unmodified hardware to something that can produce apparently reasonable raw (uncalibrated) results takes a fair amount of work.

The next step is obviously calibration of the system (compensating for photomultiplier tube sensitivity, monochromator loss, and optical fiber loss) – that’s likely going to require measurements of several known light sources, and estimating the combined response.
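One simple way to picture that calibration step, as a rough Python sketch: measure a reference source whose true spectrum is known, divide to get the combined response (tube, monochromator and fiber lumped together), then divide every later raw scan by that response. The function names and the sample numbers are hypothetical, and a real calibration would average several known sources as described above.

```python
def estimate_response(measured_reference, known_reference):
    """Combined system response per wavelength bin:
    what the instrument reported divided by what was really there."""
    return [m / k for m, k in zip(measured_reference, known_reference)]

def correct_spectrum(raw, response, floor=1e-6):
    """Divide out the response; bins where the system is essentially
    blind (response at or below `floor`) are marked NaN instead."""
    return [r / resp if resp > floor else float("nan")
            for r, resp in zip(raw, response)]

# Made-up numbers: a flat reference source that the instrument
# sees with falling sensitivity across three wavelength bins.
known = [4.0, 4.0, 4.0]
measured = [2.0, 1.0, 0.5]
response = estimate_response(measured, known)

raw_scan = [1.0, 1.0, 1.0]
print(correct_spectrum(raw_scan, response))
```

All lists must share the same wavelength bins, so in practice the reference and the sample are scanned with identical stepper settings.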

Mounting the stepper is something of a hack – a sturdier mount seems called for. I think I would have tried to find a telescoping splined shaft and universal joints first, but might have defaulted to a slide much as you did.

The bandwidth of the PM tube and electronics may influence the scanning speed. You might monitor the wavelength peak of an LED and pulse it to see what the output delay, rise time and fall time look like. Results from the unmodified device would be representative of both devices. Compare to the output from a phototransistor illuminated by the same LED.

Argon-mercury lamps are often used to calibrate spectrometers, they emit something like 9 distinct lines across the spectrum which makes calibration easy.
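A sketch of how such lines could be used for wavelength calibration, in Python: find the stepper position of each peak, then fit a straight line from step count to wavelength. The mercury wavelengths below are standard values for a few of the strong lines; the step positions are invented for illustration, and a linear dial-to-wavelength relationship is an assumption that a real instrument may not satisfy.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# A few well-known mercury emission lines, in nanometres.
HG_LINES_NM = [404.66, 435.83, 546.07, 576.96, 579.07]

# Hypothetical stepper positions at which those peaks were observed,
# synthesized here from an imaginary 0.1 nm/step dial starting at 350 nm.
peak_steps = [(nm - 350.0) / 0.1 for nm in HG_LINES_NM]

slope, intercept = fit_line(peak_steps, HG_LINES_NM)

def steps_to_nm(step):
    """Convert a stepper position to wavelength using the fitted line."""
    return slope * step + intercept

print(f"{slope:.4f} nm per step, dial zero at {intercept:.2f} nm")
```

The more lines that can be matched, the better the fit can reveal any nonlinearity in the drive mechanism.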

I think these monochromators come with silica fibers, so they are good all the way down to UV. It’s kind of hard to profile everything without a known standard source.

Yeah, he did go a little overkill with the micropositioner for the stepper. If I do mine I will get a small ball spline and use the screw hole in the end of the micrometer to drive it.

I don’t think the bandwidth of the tube will be an issue, considering they are used for photon counting in applications like proportional counters and electron microscopes. At least it won’t be while the mirror is driven with a stepper motor.

Very cool. I have one of these Verity monochromators that a friend gave me, and I have thought about doing the same thing. Verity does make a version of the same monochromator with the stepper motor built onto it. I am not sure how they are coupling it; maybe it is a spline setup.

It is nice to see what is inside it so I don’t have to take mine apart!

Very cool project. Not to accurate (not negative), this isn’t a monochromator. It is a scanning spectrophotometer. If you want to see a scanning monochromator, I can post a photo of a Jarrell-Ash I put a stepper on in 1983, but it is just a metal box with a pancake stepper on the side. An external photomultiplier and photon counter made the whole combination a spectrophotometer and less interesting than this project. (Though after the liquid helium cold finger and rotating chopper, xenon arc lamps and quartz optics were added it was probably pretty interesting. Should I write it up some day as an example of what you can do with a 1 MHz 6502?)

What was the cold finger for?

It was the bottom of a dewar and held samples of biological molecules, like rat testosterone that had tryptophan. The whole thing was developed to scan both input and output (two motorized monochromators): hit the stuff with a wavelength and scan the phosphorescence, change the excitation wavelength a nanometer and do it again, and find where the tryptophan peaks were. Then drop the chopper and use shutters to measure phosphorescence lifetimes at the peaks. Between the wavelength shifts and the lifetimes, the environment of the tryptophan helped figure out the folding of the protein. It was all run with an Ohio Scientific 6502 with 28K of RAM and an AMD floating point chip, with 12 bit ADC and DACs added. Written in Forth, which included analysis and driving a simple plotter and a storage display – even the fonts and character generation.

“Not to accurate” –> “To be accurate” Weird typo!

So is the idea that he can generate a plot of wavelength vs intensity with more accuracy than something like a camera sensor? The latter only has three kinds of sensor, which means a reading at any wavelength that is not at the peak sensitivity of one of those three will only be an approximation?

Yeah, the filters over the pixels in a camera sensor are better than a black and white photo, but not by much. That is why earth observing spacecraft have multi-spectral scanners and send a lot more than RGB channels. Ideally you would be able to look at an image and scan through the spectrum. Imagine the data of a single full spectrum image with a resolution of only 10 nm. Without IR and UV. At 390 to 700 nm that would be 31 images, and if you want good data you need 12 or 16 bits per pixel per channel. 400×3000 video would be around 3 GBytes/sec raw. 10 years from now, video data rates like that may be common. What comes after 4K video? I wonder what adding another color channel can do? The whole tri-stimulus color theory is pretty solid, so maybe nothing. How about an IR channel so you can feel the heat from a campfire in a movie? A full multi-spectral imager is a bit more of a problem.
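The arithmetic behind those numbers can be checked with a few lines of Python. The band count follows directly from the 390 to 700 nm range at 10 nm resolution; the frame rate is not stated above, so the 30 frames per second used here is an assumption.

```python
def band_count(start_nm, stop_nm, resolution_nm):
    """Number of spectral images needed to cover the range."""
    return (stop_nm - start_nm) // resolution_nm

def raw_bytes_per_second(width, height, bands, bits_per_sample, frames_per_second):
    """Uncompressed data rate for a multi-spectral video stream."""
    bits = width * height * bands * bits_per_sample * frames_per_second
    return bits // 8

bands = band_count(390, 700, 10)
# 30 fps is an assumed frame rate, not a figure from the comment.
rate = raw_bytes_per_second(400, 3000, bands, 16, 30)
print(f"{bands} bands, {rate / 1e9:.2f} GB/s raw")
```

At 16 bits per sample this lands a little over 2 GB/s, and a frame rate of about 40 frames per second would reach the quoted 3 GBytes/sec.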

You could replace the slit and PMT with a CCD line sensor to pick up all the photons at once.

This is how it is done often these days.

Try one from an old flatbed scanner where you have the electronics already in place.

Check the spectral performance of the CCD first but scanner CCDs are pretty good in the visual range.