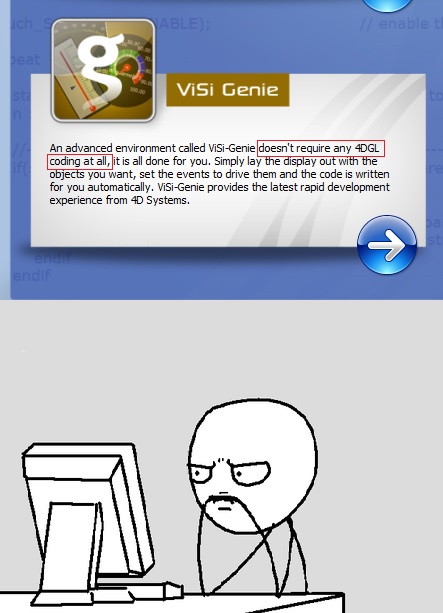

When the 4D Systems display first arrived in the mail, I assumed it would be like any other touch display – get the library and start coding/debugging and maybe get stuff painted on the screen before dinner. So I installed the IDE and driver, got everything talking and then…it happened. There, on my computer screen, were the words that simply could not exist – “doesn’t require any coding at all”.

I took a step back, blinked and adjusted my glasses. The words were still there. I tapped the side of the monitor to make sure the words hadn’t somehow jumbled themselves together into such an impossible statement. But the words remained… doesn’t.require.any.coding.at.all.

They were no different from any of the other words on the IDE startup window – same font and size as all the rest. But they might as well have been as tall as a 1 farad capacitor, for they were the only things I could see. “How could this be?” I said out loud to my computer as the realization that these words were actually there in that order began to set in. “What do you mean, no code…this…this is embedded engineering for crying out loud! We put hardware together, and use code to make everything stick! How does it even talk…communicate…whatever?”

A sardonic grin traced my face as I chose the ViSi-Genie “no code” option – clicking the mouse with a bit more force than necessary. I started a new project and began to mentally prepare a Facebook post on how stupid this IDE was. I had already thought of a good one by the time everything was loaded and I was able to start drawing on the screen with a crayon… er, making a project.

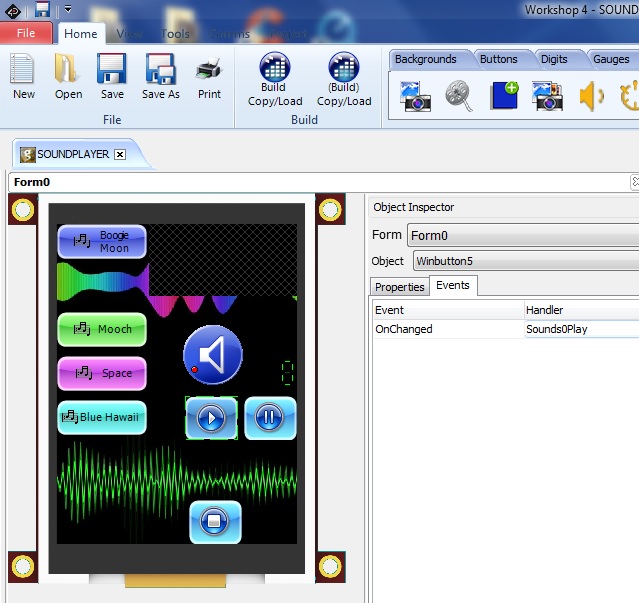

Each screen that gets painted to the display is called a “Form”. You can make as many forms as you want, and apply an image as the background or just leave it as a solid color. To apply an image as the background, one simply loads a JPG and the IDE will format it to fit the screen. A dizzying assortment of buttons, gauges, knobs, levers, etc. can be selected and dragged onto the form.

“OK, so this might not be so bad after all”, I said. I loaded an image and just placed a few interactive objects on the form, then hit the dubious “compile and load” button to see what would happen. It asked me to load the micro SD into the computer (that’s where it stores all images and files), put it back in the display, and poof! Within a few seconds, my image and the objects appeared on the display. I could even interact with them – if I touched a button or moved a knob, it moved in real time!

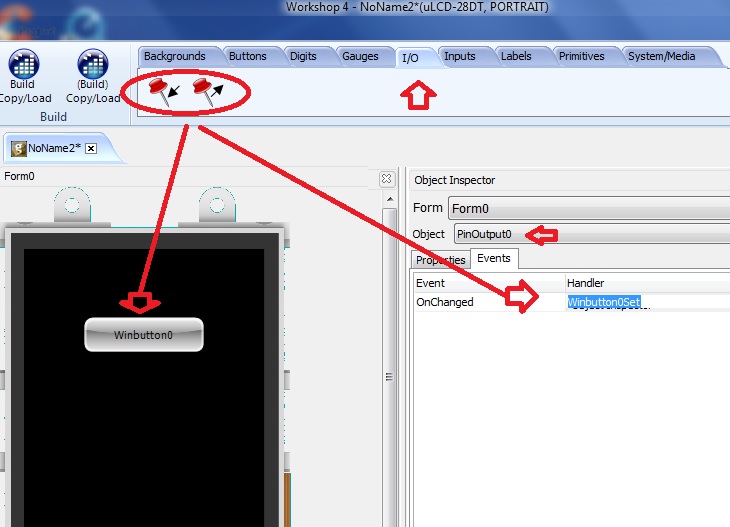

“Ah, but what do these knobs and buttons do in the background? How do you make them do anything without code?” So I went back to the IDE and started to explore the options for the various input devices. It was around this time that my opinion of this “no code” thing started to change. Each button could control an option. One of these options was the “Load Form” option. So I made a few other forms and linked them to the buttons and within minutes, I was touching buttons on the display and loading new forms. Another option was to link objects to one of the five GPIO pins. It only took another couple of minutes before I was lighting LEDs by touching buttons and loading forms by controlling TTL levels on the GPIO pins.
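To make the idea concrete, here is a minimal Python sketch of what a "no code" configuration like this amounts to: a table of forms and buttons, where each button optionally loads another form or toggles a pin. This is not how the 4D Systems IDE or firmware is implemented; the class names, form names and pin numbers are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Button:
    """One on-screen button and the options picked for it (hypothetical model)."""
    name: str
    load_form: Optional[str] = None  # form to switch to when pressed
    gpio_pin: Optional[int] = None   # output pin to toggle when pressed


@dataclass
class Panel:
    """A set of forms plus the currently displayed one."""
    forms: Dict[str, List[Button]]
    current: str
    gpio: Dict[int, bool] = field(default_factory=dict)

    def press(self, button_name: str) -> None:
        """Apply whatever was configured for a button on the current form."""
        for button in self.forms[self.current]:
            if button.name == button_name:
                if button.gpio_pin is not None:
                    previous = self.gpio.get(button.gpio_pin, False)
                    self.gpio[button.gpio_pin] = not previous
                if button.load_form is not None:
                    self.current = button.load_form
                return
        raise KeyError(f"no button {button_name!r} on form {self.current!r}")


def demo_panel() -> Panel:
    """Two invented forms: a main menu and a stats page with a lamp button."""
    return Panel(
        forms={
            "main": [Button("stats", load_form="stats")],
            "stats": [
                Button("back", load_form="main"),
                Button("lamp", gpio_pin=1),
            ],
        },
        current="main",
    )
```

Everything the designer "programs" lives in the `forms` table; the `press` logic never changes. That is roughly the trade being offered: the behavior is data chosen from menus rather than code typed by hand.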

Next thing I knew, I had created my entire Pipboy 3000 project with not a single character of code. Twenty-five forms, over two hundred user buttons, two GPIO inputs and three outputs – with no code. Wow. You can download and play with the IDE for free. Give it a go and let us know what you think.

So by “no code” they actually mean you can’t type the code, but have to use a series of drop-down selection menus?

Tagged: Ad

Is this article sponsored?… By… (?!)

No, we do not post sponsored content.

If you do native Advertisement please state so before AND After the Ad

So, I am assuming this only works on their very high priced proprietary display units?

Of course. 4D Systems displays have microcontrollers built in to provide this functionality. They are also quite expensive.

Actually it’s not that bad, you can almost always source the LCDs cheap, build a PCB and order their microcontroller. The chip is still expensive, but it’s got some nice features to it. You don’t have to buy the module from them.

Yes, super expensive. You trade time and effort for money. I can buy a 4.3″ resistive touch screen LCD for about $35; these guys sell the same thing with their driver added for $170.

I have used these displays before; they are OK for the price and easy to get started with. However, the SD card socket broke on one (it quickly becomes a pain swapping the SD card every time you flash the screen), and it’s hard to do anything more interesting than loading a form or toggling a pin with the screen on its own.

I connected mine to an mbed over serial and used it as a control panel for some home automation.
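For anyone wondering what driving a display like this from a host over serial might involve, here is a small Python sketch of a fixed-length command frame with an XOR checksum. The frame layout (command, object type, object index, 16-bit value, checksum) and the example byte values are assumptions for illustration only; check the display vendor's protocol documentation for the real format.

```python
from functools import reduce
from typing import Tuple

FRAME_LENGTH = 6  # 5 payload bytes + 1 checksum byte (assumed layout)


def xor_checksum(payload: bytes) -> int:
    """XOR all payload bytes together."""
    return reduce(lambda acc, byte: acc ^ byte, payload, 0)


def build_frame(command: int, object_type: int, object_index: int, value: int) -> bytes:
    """Pack a hypothetical 'set object value' message into six bytes."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("value must fit in 16 bits")
    body = bytes([command, object_type, object_index, value >> 8, value & 0xFF])
    return body + bytes([xor_checksum(body)])


def parse_frame(frame: bytes) -> Tuple[int, int, int, int]:
    """Validate a frame and return (command, object_type, object_index, value)."""
    if len(frame) != FRAME_LENGTH:
        raise ValueError("unexpected frame length")
    if xor_checksum(frame[:-1]) != frame[-1]:
        raise ValueError("checksum mismatch")
    command, object_type, object_index, high, low = frame[:5]
    return command, object_type, object_index, (high << 8) | low
```

On the host side you would write the result of `build_frame(...)` to the serial port and run incoming bytes through `parse_frame(...)` to react to touch events. The display handles the drawing, and the host handles the actual home automation logic.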

As a non-functional prop, one could use a much cheaper LCD picture frame with much less mousing around.

HaDvertisment… from Ad a day?

Except a picture frame lacks the ability to navigate.

Some of the picture frames have touch navigation. The cheap stuff has buttons.

Touch navigation mapped to the correct locations? The project this display was used for was a Pip-boy which would have numerous buttons per menu.

Could it have been done with a digital frame? Sure, fairly close, but not what I’d consider to be a true simulation.

Some of the photo frames are running ARM Linux too. I consider this a totally overpriced experience. I’m not sure what’s being sold here, the software or the screen; if the software is free, the screen just isn’t worth $144 of anyone’s money.

A true simulation would have biometric and environmental sensors, so unless you put something similar to the tricorder projects, you are not even close.

This is just a fake prop for the rich kids and they are not living in post nuclear world just trying to stay alive…

Originally I was going to say it was a replication, but recanted due to what you pointed out. I felt that “simulation” reflected it accurately.

you guys are adorable.

What? 144 for a tiny resistive touchscreen?

Sigh – apostrophe :-/.

“GUI’s” would be possessive… for example, the “GUI’s blue color scheme”. Plural would be “GUIs”.

I see that you’re using an all-upper case headline and that might have led you to think that “GUIS” may be confusing, but there are ways around that. An obvious one being “MAKING EMBEDDED USER INTERFACES WITHOUT CODE.”

Grammar Nazi…..*Sigh*

Before trying too hard to look smart, it’s best to know what you’re actually talking about.

This notion that apostrophes can only be used to show possession is an overly simplified idea that keeps getting pushed forward by people who don’t know any better and want to make themselves feel better by putting others down.

Yes, many people incorrectly use an apostrophe to create a plural, however this practice IS acceptable for awkward plurals, for making numbers plural and for abbreviations.

The author was correct in writing “GUI’s”.

No, it is friggin’ NOT acceptable. An apostrophe stands for a letter missed out or a possessive. (In fact, some argue that it also means a letter missed out when used as a possessive – for example “Peter’s dog” actually originated from “Peter, his dog”) The author was NOT correct in writing “GUI’s”. Where’s the letter missed out?

There’s a rule, and you can’t simply change the rules of the English language to suit personal preference. “Awkward” plurals (whatever you mean by that), numbers, abbreviations – these are no exception to the rule. Presumably you’d think that “the 1950’s” would be acceptable? Again, where is the letter missed out or where is the possessive? It’s simply the 1950s! Suppose you were wanting to refer to simply “the fifties” – are you going to write that as “the ’50’s”? The first apostrophe there is correct the second is NOT.

I’m sorry, but this sort of attitude really annoys me. There’s a right way and a wrong way to do things, and this simply isn’t the right way. Sadly, nobody seems to bother nowadays…..

This is the official Hackaday line on grammar: We specifically do not have a style guide, and the biggest influence on our lack of a style guide is the fact that ‘hacker culture’, ‘tech culture’, and ‘computer culture’ has always, always been extremely loose with rules of grammar and spelling. Prescriptivism has never played well here. There are stories that could fill a book, but I’ll just ask you, “split -p soup?”

Now, without a style guide or any codified grammar or spelling, we’re left with coming up with ad-hoc solutions. I ask you, which looks better: EMBEDDED GUIS or EMBEDDED GUI’S? Is it correct? No, absolutely not. Does one option look better than another? Yes, obviously. Is bandit’s suggestion of, “MAKING EMBEDDED USER INTERFACES WITHOUT CODE.” better than either of the two options? Yes. And now we know what to do the next time this question comes up.

> There’s a rule, and you can’t simply change the rules of the English language to suit personal preference. “Awkward” plurals (whatever you mean by that), numbers, abbreviations – these are no exception to the rule.

No. That’s just wrong. Get away from my language, prescriptivist.

Hell yeah, the staff fights back!

I hate to hammer a point, but there’s a difference between a _style_ guide, and what is simply plain wrong. “Style”, in my book, means stuff to do with how content is written and presented – third/first person, fonts, use of abbreviations, names etc. That’s a whole separate thing from punctuation, which simply has to be correct.

I have unfortunately never understood why it is considered “cool” or fashionable to ignore the established rules. How is mis-using the rules of English punctuation, simply for the sake of not following the rules, a good thing or something to be encouraged? If we’re on computer analogies, try missing out a semicolon in a C program, or whitespace in Fortran, and see how fashionably the program behaves then! (Or, rather, the compiler, since it probably won’t get as far as the program running….)

Is it so difficult or onerous to think before one writes its/it’s, and so on?

Now, as for EMBEDDED GUIS or EMBEDDED GUI’S – simple: don’t use all-caps in the first place! Did nobody think about the problems this will cause when referring to abbreviations or acronyms in titles – LED, PI, GPS and so on? If you really must use an all-caps font, which personally I find very hard to read for a heading, then can you at least make the initial letters bigger caps than the rest of them?

While I’m here, let me reply to charliex’s comment as well. “If laws don’t make sense, get ignored, or don’t get used, change the law. english is not fixed.”

1) “If laws don’t make sense”. How, exactly, do the laws governing the use of the apostrophe not make sense? They are perfectly straightforward, if only people would care to follow them. I say again – an apostrophe stands for a letter missed out or a possessive. There’s nothing about that which doesn’t make sense.

2) “get ignored”. So the laws against going through a red stop light, robbing banks, mugging people, etc., which are ignored every day of the week by many people, should be repealed just because some people choose to ignore them?

3) “don’t get used”. Sure, they don’t get used by the people who abuse punctuation, but see point number 2.

4) “English is not fixed”. (While we’re at it, let me also remind you about capital letters at the beginning of sentences and for proper nouns). Yes, words change and morph, and there are regional and international differences. The rules of punctuation are a critical part of the language _structure_, with clearly defined applications. Miss out punctuation and the meaning of sentences changes entirely. As I said in 1), the rules of punctuation work, and work well, if people followed them. You don’t change something simply for the sake of it, as you seem to be suggesting. Try missing out the commas which delineate clauses in Russian and see how far you get.

Again, no, and this is something all grammarians need to realize: there is no overarching authority concerning correct English grammar and punctuation. Spanish? Yeah, there’s a single authority that sets the rules for Spanish. French? Same thing. No such organization exists for English.

This is important, as any argument over English grammar and punctuation ultimately boils down to, “that’s not how I do it.” Most of the time, people who criticize these peculiarities do not take into consideration the internet/hacker/computer culture these grammar foibles come from, and it ultimately ends up being linguistic imperialism. I’m not having that, and anyone who suggests otherwise can GTFO.

I’m not saying “GUI’S” is dumb – I’ve already conceded there is a better phrase we could have used for the headline. However, I have heard no complaints of what would be incorrect grammar and punctuation used on Hackaday when it is the most obvious choice. Did you know that all ship names are supposed to be underlined? Wouldn’t that be stupid on the Internet, where underlined things mean they’re a link? Guess what we do with ship names? We italicize them. It’s incorrect formatting, but no one complains about it because it makes sense. Yes, this is misusing the rules of English punctuation, but doing so in a way that makes sense. It’s not cool, it’s just the way things need to be done.

Hey Brian,

you don’t think it might be prescriptivistic to tell Lindsay to step away from ‘your’ language?

As a native German speaker, I consider it a major disadvantage that the English language has no institution for standardization. (German has, of course ;))

It always puzzled me how that is going on high schools for example.

You just tell the teacher that he has no right to grade you on spelling!?

Anyway the abuse of english is swapping over to my languages as well, which bothers me quite a lot.

For the german speakers: Handy’s in the store!? wtf

http://idiotenapostroph.org/

But now just quoting Hackaday’s line on grammar is as stupid as quoting what english teachers teach as right english.

The point on having standards/rules/laws in a language is about two things:

1. It’s about easy communication. Look at medieval texts if you don’t know what I mean.

Having English as a lingua franca right now might seem contradictory, but English simply has a few well established standards that are easy to get (read: simple) and universal to all of its dialects.

2. The more important thing here is whether or not to change these standards.

Yeah, everyone is free to speak/write how he wants. Let there be an authority or not.

But Lindsay has a point here on not breaking the rules just to break them or because of laziness.

In the end, how we speak shapes how we think. (Try to think about something without verbalizing it in your head.) Now this gives everyone the duty to use his own language carefully, since a change in language will result in a change of thinking.

Also that gives anyone the right to correct someone else, since language, if spoken out, is no personal thing at all. Rather it provides the possibility to change how people think!

A hygiene in language might be considered useful (now you can call me a grammar nazi), since, if nothing else, it makes people choose their words carefully, meaning they are more likely to choose their thoughts carefully.

Breaking the rules with a reasoned cause is of course the most preferable thing.

Maybe Lindsay does not get this because of how our gender education works today.

But this requires knowing the rule and reasoning about it. I can’t see this here.

By the way, I think GUIs works the best.

> In the end, how we speak shapes how we think.

Yes, there is no disagreement there. 1984 was alllll about this, Chomsky’s political stuff is alllll about this. I don’t think anyone would argue that point. However, when you say, “this gives everyone the duty to use his own language carefully”, that’s something I would object to.

Because English has no central authority, subversion of the language cannot be seen as illegitimate. Since the subversion of language can be seen as a political act, codifying language with a (false) central authority thereby limits political expression, with all the groupthink that comes with that.

I’ll give you an example. It’s by negation, but I think the point still stands: In the US, we have something called an ‘estate tax’. Basically, it’s what heirs to an estate pay when they inherit something. An evil genius decided that this should be called a ‘death tax.’ If you do a poll, you will find support for an ‘estate tax’ is huge, but support for a ‘death tax’ is in the single digits. The public perception of tax policy can be shaped simply by choosing what words to use.

Now imagine if something we care about – open expression on the internet, some sort of property rights that a manufacturer can brick a device with an update, or really anything else we (generally) care about gets to the news. Everyone has the right to choose what language is used when discussing that specific topic. Likewise, we have the right to choose the language we use when discussing the same topic. It’s a two-way street, yes, and sometimes we’re going to get the short end of the stick. That’s the path we’re down now, and it’s not changing any time soon.

This doesn’t even cover the culture/identification usage of language. The sociological term you’re gonna want to search for is ‘code switching.’ I mean, hell, you’re on a site called ‘Hack A Day’. Do you have any idea how poorly the word ‘hack’ is looked upon in mainstream press? You can see it with Make / Maker Faire and their complete ignorance of this subculture’s use of the word ‘hack.’ Of all the tech/make/hack/builder sites out there, you don’t think the one that has ‘HACKADAY’ plastered across the top of every page wouldn’t be sensitive to the language this culture uses? We’re committed to this, and while we can’t force a change on anyone, we’re at least going to present something that others can point to and say, ‘see, it’s not just about breaking into bank accounts and hacking the Gibson’

And yeah, as far as GUIS vs. GUI’S go, there was a better option we didn’t take. There’s an option we have to change the headlines to not be all-uppercase. We might take that option, and then this entire dialog will have been moot.

I have to agree with Brian on this one. Language is not a set of rules; in fact, it is the opposite. The way a baby learns to speak is the perfect example. You don’t get taught the rules before you speak; you speak to learn the rules. It is the same thing with writing, but the way you learn them is by inductive logic, not deductive, meaning that following a set of rules would actually keep you from ever speaking or writing. If I or someone else creates something new, whether it’s physical or not, I must call it something in order to convey to you what it means. After all, at one point the words in all languages didn’t exist. Languages and words are an agreed-upon exchange, nothing more. Therefore, if reusing or changing the grammar or structure defines a new structure of the communication, it’s not a bad thing, nor wrong or anything else, just a new set of standards. It is just like when new computer languages are developed: nothing changes, because it’s still machine code in the end, made to communicate, but the efficiency and efficacy of the language change drastically depending on what is to be accomplished. Besides all that, the point Brian brings up about subconscious manipulation is a very valid one. We can intellectualize all we want, but in the end, we are hard-wired emotional beings, and will perceive emotion, sometimes even when none exists. Even using the word hacker in a positive way, versus the negative connotation it has for the rest of society, has its own effects. It reinforces the brain to disassociate with others, and possibly to disassociate with the morals of others, because we have lots of memories saying everyone else thinks this, but we consciously think that. This also has the ability to create cognitive dissonance. Now is this bad? Sometimes. Is it good? Sometimes. So long as it doesn’t develop into a psychosis you’re probably fine, but it will always have unintended side effects. Sometimes cutting yourself off from the herd is a good thing, especially when they’re all about to jump off a cliff (metaphor).

I just remembered another form of language perception and subconscious manipulation. During the 1970’s the word gay was used by pro homosexuality groups in order to cognitively associate homosexuals as being happy, same thing with the word homophobic, derogatory or pejorative word used to describe anyone that disagrees with homosexuality, under any arguments. This causing others listening, watching, etc to positively associate with homosexuality, and negative associate with those against it. The same tactics are used in elections, political parties, animal rights groups against clothes, medicines used and makeup, and pretty much any group that seeks to win by any means necessary, and has no problem lying, deceiving, or manipulating to get the job done. Political correctness being a huge one, propagated by the majority elite, and media.

If laws don’t make sense, get ignored, or don’t get used, change the law. english is not fixed.

I guess the firmware that actually does anything useful (beyond showing more forms – yay, someone reinvented Visual Basic …) then writes itself, as it is “no code”. *sigh*

I have yet to see anyone using these 4GL displays in any project – and they have been around for quite a while (Sparkfun used to carry them as well).

Nice advert, Hackaday …

Is it only me or is the quality of HAD posts going really downhill recently? Guy who has no clue what “ground” means, now this advert …

Well, there have not been as many clocks as usual, if that is what you mean.

I made a handheld Pipboy a couple years ago using a similar (non touch) 4D systems screen. I ran code on the module itself (4D Graphics Language) and used an Arduino to interface with switches and other sensors.

http://hackaday.com/2012/04/24/pip-boy-2000-build-goes-for-function-over-form/

I understand doing stuff without code is kinda interesting but is it really this drool worthy?

How about an article on the 4DGL programming language? It’s dead simple to learn and really pretty powerful. It was the language that I learned the basics of programming with and I’ve used it to build a whole GUI (mapping, logging, audio recording, bluetooth control… everything) for a radio system. It’s really a nice thing that 4D has built.

So can someone recommend a software program that would allow me to “draw” an interface for a home control system, tying on-screen controls and buttons to actions, et cetera? I’m not good at graphic design but would like an option to draw up what it would look like (or import a JPEG), then select an onscreen “button” and define it to perform some action or actions.

http://ugfx.org/

>µGFX is a library to interface all kinds of different displays and touchscreens to embedded devices. The main goal of the project is it to provide a set of feature rich tools like a complete GUI toolkit while keeping the system requirements at a minimum.

That seems to be more geared toward embedded systems. My home controller would be running in Linux on an Atom-based PC. I might consider doing Win7 or XP as well if those tools are reasonably friendly.

Hi, I’m the founder of the µGFX library.

The µGFX library is meant to run on an 8-bit microcontroller as well as on a 2 GHz multicore ARM-based machine.

The advantage of using µGFX over some “conventional Linux GUI” is that you don’t need any GTK nor KDE library. µGFX runs either off the X-Server or the bare FrameBuffer. Hence the performance requirements are pretty low which results in cheaper hardware and less energy consumption.

Furthermore, µGFX provides a remote display feature where your GUI runs locally on your target and your host just connects to it – pretty much like VNC works. This is a feature often used in controlling applications like yours.

If you are already running a full OS, there are much better software tools for building a native GUI. Pretty much all of the compilers with an IDE on the Windows side come with a resource editor to lay out a GUI.

Qt creator. fluid, etc etc http://en.wikipedia.org/wiki/Graphical_user_interface_builder

This is nice, but I get why people call this out as a blatant advertisement.

If I were to prototype something or develop a small series of some units for money, I’d be very interested in this. If it saves me two hours of time it has easily paid for itself. It’s a valid product. And if it is quick and easy to upload graphics and it has storage for those that’s even better. I’m actually more intrigued by the idea to be able to separate the GUI and the stuff that does the actual work almost totally than by writing no code.

Problem number one with this “hadvertisement”, though, is that I am none the wiser about this. All you get in this article is “wow, so great, cannot believe” and “look ma, no code!”. No words about how it works in detail, whether the designer is any good, how the refresh rate is, how much power it draws, how the generated code looks etc etc.

If this is posted in “reviews”, why is there no more information about the product than I would find in any advertisement? Hell, most ads probably would tell me more about the specs than this here does.

Problem number two is that a lot of the old timers on here are probably mildly interested in things like these at best. It’s not a hack. It’s a product directed towards people who need rapid prototyping. I can find those with google and there’s a lot of them – and a lot of really good ones. Using them has no real educational value, though. They don’t help you learn how something ticks, they help you get something done quickly. And they’re priced accordingly.

I personally neither mind nor appreciate reviews of rapid prototyping stuff, but I don’t come for that. But please make it actual reviews then.

Now get back to making swords :)

I think it has its uses… It sounds fast for simple projects – maybe rig up an HMI for your latest gadget in an hour or two. The simplicity also makes it very accessible, for kids and the moderately technical. Even for larger projects, it might save you some time. My only issue is the cost… the SPI display I have on my desk cost $8, compared to ~$150 for this beast.

I can ignore some of the more subtle ads disguised as posts, but this one ain’t even trying. Absolutely nothing to try to hide it, they just hype a severely overpriced product while giving you handy links to buy it.

I was kind of waiting for this to happen ever since HAD got sold. First they do some real nice things (the hackaday prize was awesome), but then they start pushing a little too hard to expand hackaday.io, make some product-focused ‘contests’, site redesign to something more “modern” that is a lot less functional and I guess now we will have obvious advertisements.

I just hope HAD lasts for another couple months.

Yeah, no code required, but last I checked, mucho $$$ required.

Don’t worry, code still required to make it do anything useful, unless the $$$ includes a monkey that will write it for you.

And the code has to be written in some brain dead proprietary language instead of anything standard. On top of that, the display is slow as molasses.

Probably it’s worth taking a look at the uGFX library. It has a similar goal but keeps the control over the code by the user. Just super easy to use API -> http://ugfx.org

Ad!!1!one!

So you paid $145 for a display with built-in GUI functionality, which is well-supported with a comprehensive software suite, as is befitting a device in that price range. And were amazed when its GUI designer allowed you to easily create… a GUI. That’s really all the Pipboy replica is, lots of meaningless GUI with no real functionality behind it.

I’m not disputing that you may in fact be pleased with the experience. Or saying that the product and included tools aren’t excellent. But crikey, I’m not sure which has more saccharine – my coffee or this article!

And although it reads like an ad, I don’t think that was your intent. Rather, your articles to date give the impression that you fall in love with certain concepts. First theoretical AI, now code-free design. Both nice ideals, but unfortunately they often tend to break down when examined more than trivially, and few share your level of exuberance for them. Perhaps this would have been better split into two articles – one grounded and factual review, and another article soliciting discussion of code-free design techniques and tools?

Riiight.. try a few hours working with LabView and let me know how ‘in love’ you are with the idea of ‘no programming required’.

Hah! Some of my university teachers were quite enamoured of LabView. From my experience it’s just fine for small projects, but so very fiddly for anything complex. One of those teachers did insist that my disdain was because of my greater familiarity with other languages.

No coding required means the development system has to make assumptions. Now since there is no such thing as artificial intelligence, the resulting code will always -and I mean always- suck compared to anything written by an average programmer.

Professionals should stay away from this stuff: if they’re scared by the amount of code needed by a user interface they should not work in this field.

I can’t do anything but fully agree with you.

This site seems to be struggling to come up with content these days. It does sound like an ad but it could just be they really don’t know how to sniff out the hacks anymore.

There are 700+ other projects sitting in HaD’s backyard from the last contest that are not explored and are more hack-worthy than this one. I don’t see an issue of lack of content here. There isn’t anything worth hacking when all you do is mouse-click an existing product.

If you want to show an article mouse click, show something more useful. e.g. tutorials to use Atmel Studio, Xilinx, Altera tools for a project from start to finish… BTW everyone can learn something from the scope noob series.

I think we can safely say Hackaday poked the bear. Note to self: never tell coders that “no coding required” is a better option.

obvious ad is obvious…

Wow! I’ve always wanted to use PowerPoint on an expensive embedded system!

As a suit who still knows how to write a bit of code once in a while, “No code required!” has become a warning phrase like easy weight loss or add-blank-to-your-blank-instantly ads. It’s a seductive but very bad idea.

“No code required” generally means that instead of working with text files and keystrokes, you navigate poorly-designed complex IDE screens with mouseclicks. When it works, the end result is somewhere between “fine” and “utter $#@! code.” When it doesn’t quite work, you’re stuck digging into the autogenerated code, at which point you’re not code-free anymore, and possibly stuck working with nasty code. In my experience, it doesn’t quite work enough of the time that on any non-trivial project, you’ll be rolling up your sleeves at some point. Often, once you edit the autogen code, the code-free IDE can’t parse it anymore, and you’re stuck making changes in the text file directly for the rest of time.

On the flip side, “code” isn’t what makes programming hard. You can represent iteration and branching graphically, but you still have to understand what they are, and once you do, is reading the code all that difficult? Simple code is easy to understand. Code that is complex (with or without good reason) is harder to understand, but code qua code has very little to do with that.

We have seen great progress in productivity by things like automatic memory management and piles and piles of libraries that are widely available. We “waste” many times the power of an old PIC just for the bootloader on the Arduino, let alone the rest of the stack, but all that abstraction has freed me from writing repetitive glue code and worrying about low-level hardware details that don’t impact my use case. Yes, it’s bludgeoning a lot of flies to death with a million sledgehammers, but sledgehammers are cheap, and the native hardware is still there if and when there’s a case when I need to work to it more closely.

Those piles of libraries etc are why many programs that do relatively simple tasks are many megabytes in size, or even gigabytes. Why have Microsoft’s Office programs continuously bloated up in each new version? Does the current Word do *that much* more than the earliest version able to use TrueType fonts?

It’s easy to tell the difference between a program carrying the baggage of a lot of unused code that was included in libraries, modules etc and one that was written to do the same job but all its code written just for that job.

Perhaps I’m not fully understanding how these sort of things work, but I see a distinction here between the coding required to display and update the graphical forms/controls/buttons etc., and the actual user code which these buttons perform (e.g. turn LED on when a button is pressed). The clever part of the development environment seems to be the automatic generation of the display/update code – sure, you still need to write the actual code which your program executes, but you don’t have to worry about the (considerable) coding for drawing pixels, framebuffers etc.

It’s just like writing any software on a computer – after all, you (generally!) don’t have to create a button totally from scratch, laying out the font, borders, handling up/down/pressed states etc. – the IDE takes care of all of that for you. All you want to worry about is the code to execute when the button is pressed.
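That split between toolkit code and user code can be sketched in a few lines of Python. The `Toolkit` class below stands in for whatever the IDE generates (drawing, hit-testing, pressed states); it is a made-up placeholder, not any real library's API. The only part the user writes is the handler.

```python
from typing import Callable, Dict, List


class Toolkit:
    """Stand-in for generated GUI code: it owns the buttons and dispatches presses."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[], None]]] = {}

    def on_press(self, button: str) -> Callable[[Callable[[], None]], Callable[[], None]]:
        """Decorator that registers a user function for a named button."""
        def register(handler: Callable[[], None]) -> Callable[[], None]:
            self._handlers.setdefault(button, []).append(handler)
            return handler
        return register

    def simulate_press(self, button: str) -> int:
        """Pretend the button was touched; returns how many handlers ran."""
        handlers = self._handlers.get(button, [])
        for handler in handlers:
            handler()
        return len(handlers)


ui = Toolkit()
led_state = {"on": False}  # stands in for a real output pin


@ui.on_press("led_button")
def toggle_led() -> None:
    """The user's code: the only part that is specific to this project."""
    led_state["on"] = not led_state["on"]
```

Calling `ui.simulate_press("led_button")` flips `led_state["on"]` without the handler knowing anything about pixels or touch coordinates, which is the separation the comment describes.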

FWIW, FTDI also have an embedded graphics controller called EVE (http://www.ftdichip.com/EVE.htm). Seems a similar idea to 4D, although I don’t know about a dev. environment.

The 4DG display is based on the EVE.

Newbie…

For a good example of a rapid prototyping tool with a “no code” take a look at cypress psoc designer (yeah i know i lurve cypress) i recently made a z table for my laser and used it for just laying out gates and such to control the stepper, then letting it do the code gen, which was PERFECTLY FINE.. no kittens died, no drinks spat onto screens and nothing crashed into anything else. It just worked, amazeballs i know.

code generators have been around forever, they can be a useful tool. Typically “Rapid Prototype” is the name that goes along with them for a good reason, but like everything use appropriately. Be wary of people that say never/always, or emagherd code sucks, probably aren’t doing that much other than saying it sucks.

Ever used a WYSIWYG DVD menu authoring program? Create your screens, import them into the program. Draw all your buttons and give them unique names then go through and select what links to what. Those programs also read or can create chapter marks in the VOB files and link buttons to them.

There’s been little, if any, change in the code that operates DVD menus in 17 years because every DVD made is supposed to be able to play on any DVD player, no matter how old.

Hey Bart! Remember Hypercard? It’s back… in pog form!