While most people are moving onto ARMs and other high-spec microcontrollers, [Dave Cheney] is bucking the trend. Don’t worry, it’s for a good reason – he’s continuing work on one of those vintage CPU/microcontroller mashups that implement an entire vintage system in two chips.

While toying around with the project, he found the microcontroller he was using, the ATMega1284p, was actually pretty cool. It has eight times the RAM of the ever-popular 328p, and twice as much RAM as the ATMega2560 found in the Arduino Mega. With 128k of Flash, 4k of EEPROM, 32 IOs, and eight analog inputs, it really starts to look like the chip the Arduino should have been built around. Of course historical choices don’t matter, because [Dave] can just make his own 1284p prototyping board.
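The RAM comparison is simple arithmetic. A quick sketch in Python, using the datasheet memory sizes as I understand them for the three chips (the dictionary layout and the `sram_ratio` helper are just for illustration):

```python
# Datasheet memory sizes in bytes for the three AVRs being compared.
CHIPS = {
    "ATmega328P":  {"flash": 32 * 1024,  "sram": 2 * 1024,  "eeprom": 1 * 1024},
    "ATmega2560":  {"flash": 256 * 1024, "sram": 8 * 1024,  "eeprom": 4 * 1024},
    "ATmega1284P": {"flash": 128 * 1024, "sram": 16 * 1024, "eeprom": 4 * 1024},
}


def sram_ratio(chip, baseline):
    """Return how many times more SRAM `chip` has than `baseline`."""
    return CHIPS[chip]["sram"] / CHIPS[baseline]["sram"]


for other in ("ATmega328P", "ATmega2560"):
    ratio = sram_ratio("ATmega1284P", other)
    print(f"The 1284P has {ratio:.0f}x the SRAM of the {other}")
```

Note that the Mega's chip still wins on Flash (256k against 128k); the 1284p's edge is in RAM.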

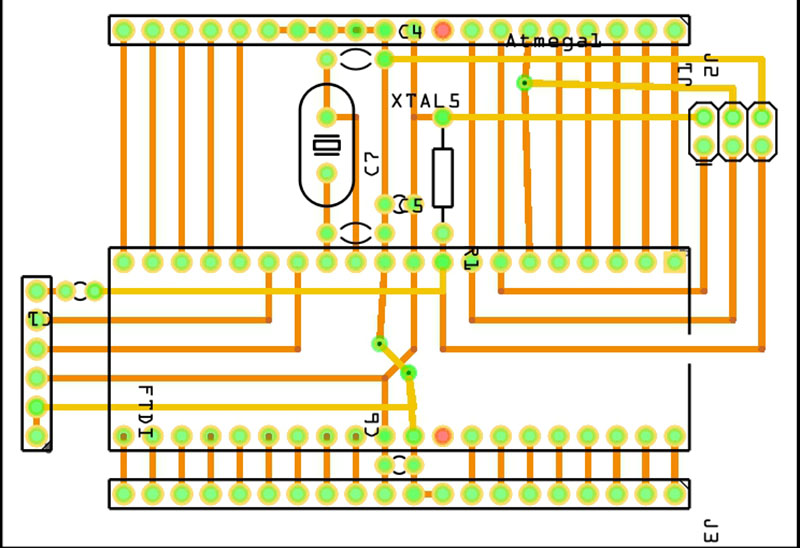

The board is laid out in Fritzing with just a few parts including a crystal, a few caps, an ISP connector, and pins for a serial connector. Not much, but that’s all you need for a prototyping board.

The bootloader is handled by [Maniacbug]’s Mighty 1284 Arduino Support Package. This only supports Arduino 1.0, not the newer 1.5 versions, but now [Dave] has a great little prototyping board that can be put together from perfboard and bare components in a few hours. It’s also a great tool to continue the development of [Dave]’s Apple I replica.

“While most people are moving onto ARMs and other high-spec microcontrollers” … I wouldn’t agree. There are tasks where PIC10F or ATTiny is suitable, there are tasks where you need an ARM processor. For most things people make at home even Arduino Mega is overkill, so I’m sure people will not move to ARMs just because they are more powerful. Otherwise everyone would be using i7 processors and laughing at “humble” micros we see in many projects every day.

I don’t consider a 32 bit ARM to be “overkill” if it cost less than an 8 bit solution, comes in a similar/smaller package, requires fewer external components, and doesn’t use any more power. In other words, it’s not overkill when judged by the parameters that I would consider important for my project.

On the other hand, an i7 CPU would perform considerably worse on the same parameters, so I would consider it overkill.

Not only what you said, but the more people who ratchet up their game and switch to the more powerful processors, the cheaper they will become. IMO We all need to be moving along with the technology tide to keep production costs low. It benefits everyone. AND the software ecosystem will also continue to be relevant if we’re all using the same/similar platforms.

Have you noticed how cars keep getting bigger? Ratcheting up, if you will. I’m referring to cars like the Honda Civic and Toyota Corolla. When they first came out, they were “tiny”, but now 30-40 years later and they are mid-sized cars. I guess the manufacturers believe (probably rightly so) that if a person likes this particular model, when it comes time to replace it, the buyer has put on a few pounds, maybe added a member or two to the family, and lo and behold! the current model of their “favorite” is “just right”. In my best Andy Rooney imitation, “I wonder why I can’t buy a small half ton Japanese pickup anymore? Like the Datsun 610 or 720 I used to own. Now they are all as big as the Ford or Chevy pickups I abandoned decades ago for better gas mileage and reliability.”

I don’t think the volume in the hobbyists market will have any effects on 32(/64)-bit parts as much as Mobile, Computing, Consumer Electronics, Automotives etc. What you might see is that the Chinese vendors are going to have more breakout/eval boards for the advanced parts (demands, pricing & features) which make them more accessible.

The more advanced parts are built on smaller geometries. As such they will be more economical when it comes to speed, memory and peripherals.

Pretty much most of the manufacturers (even ones that had their own line of uC) have converged to licensing ARM (or MIPS) for their 32-bit lineup.

Yes, there will be some growing pains when wandering off the beaten path, but it is well worth it to broaden your horizons.

So the complexity of the development toolchain is not one of the parameters you consider important? In my experience, bringing a 32 bit ARM online is enough work to give an 8 bit micro a distinct advantage if the computing task at hand is otherwise trivial for both architectures.

If you start with the right toolchain, it can make your life a bit easier. I use Keil MDK-ARM demo with a 32kB limit (for windows). It is good enough to get you started. You can also switch the tool chain to GCC if at some point you find that limiting. Not having to fight or configure or even compile the tool chain means you can concentrate on the actual problem on hand.

I have documented the changes to switch the default tool chain to GCC here and also using the default tool chain to compile GCC extensions.

http://hackaday.io/project/1347-fpga-computereval-board/log/9404-compile-k22dx5-under-chibios-30gcc-in-rvct

As I have said in my project log “This is also the first time I am programming the ARM architecture, on a new tool chain and a hard deadline for the contest. What can possibly go wrong? :)”

Can it be done? Let’s say I got the ARM chip to work on first prototype without using a development board and managed to port ChibiOS/RT to that branch.

I use gcc + make for everything, so the tools are almost identical. The only problem is setting up the linker scripts and startup code. Usually they can be found on-line somewhere.

This is a cycle that repeats itself about every ten years.

8-bit CPUs have enough power to be interesting, but are still easy to learn. Every so often, Moore’s Law puts that amount of power into a smaller, less expensive package. Hobbyists discover the new package, and there’s a burst of enthusiasm as a new group of people discover how much fun programming and hardware hacking can be.

As projects that use the new hardware get more complex, the limits of a basic 8-bit platform become more apparent. People who’ve become familiar with that platform have a good technical foundation to start learning the next two or three more complex platforms (which have also become smaller and cheaper), and sure enough.. the additional platform complexity buys more programming/hacking power.

There’s always a population that rates platforms on the more==better scale, so there’s a strong and highly predictable push for changes to the platform deemed minimally-l33t. Before long, it’s a full-scale microprocessor with an operating system, userland, and ‘native language’ with its own whole toolchain.

Thing is, all that extra power comes at the price of additional complexity. As the level of complexity rises, so does the barrier to entry.

The people who started from the original 8-bit platform don’t see that because they’ve spread a whole lot of learning over several years of iterative growth, but the number of new people discovering the joy of hacking ratchets down as the learning curve ratchets up.

It takes conscious effort to recognize the strengths of every platform.. from discrete transistors through FPGAs.. and to respect someone else’s choice to use something other than My Favorite Toolset.

Good hackers make that effort. Their more==better standard involves more people hacking and learning, at whatever level.

That is a valuable insight, well put Mike!

I have done a number of projects with the 644p and the 1284p and love them. Lots of memory, lots of IO, easy to work with, etc. Unfortunately the 1284p comes in at around $9 each in single quantities. I can get a comparable ARM chip for significantly less.

At this point, as lovely as the 1284p is, I would only use it where AVR compatibility is a factor. Not for new work.

Can you name some of those ARM chips that replaced the AVRs for you? I like the AVR ATTiny and ATMega Series, but damn is the RAM limited. Could switching to ARM be a solution?

So for small uses I still use AVRs. The ATtiny10 for example is surprisingly capable for small tasks (1K Flash, 32B RAM), and I use ATtiny 45s, 85s, and 84s.

When it comes to ARM, I use the nRF51822 for Bluetooth projects (Cortex M0 128K/256K Flash, 16K RAM), and for other things I tend to start with the ATSAMD20 (Cortex M0) until I know I need something else. Since that is also the core in the Arduino Zero, you can get some Arduino compatibility that way as well.

The STM32 line is ubiquitous, and generally cheaper than Atmel’s solutions, but I personally don’t like their libraries as much. Though I have certainly used them.

Bear in mind I am not just a hobbyist, I do this work professionally as well. As such I need to keep a larger inventory of microcontrollers around than most. I have 100 ATtiny10s in a bin right now, and at least a dozen of the microcontrollers I use regularly.

Just a quick thank you for your information. I’m about to start a project that I feel might be a bit limited if I went with an AVR. I’m probably well out of my league but hey it keeps me entertained :)

The trinket 3.x series use Freescale MK20DX series. Those are worth looking into along with the usual well supported STM32Fxxx. See also PSoC4 comment I made.

I think you mean the Teensy 3.x. The Trinkets are Atmel based…

That’s true about cost. This is a hobby for me so $9 for the MCU isn’t going to break the bank, but if you’re moving to Cortex-M processors you quickly lose the ability to use 5v parts unless you also move away from the friendly world of DIP parts.

I don’t think it should be a requirement of everyone who wants to tinker with their own digital projects to have to find a solution to mount SMD parts or deal with the constant hassle of interfacing between 5v, 3v3 or 1v8 parts.

It depends. I like to make permanent or semipermanent projects, and while $9 isn’t that expensive, I would still like to get the best bang for my buck.

There are two DIP ARM chips out there, both from NXP: the LPC810 and the LPC1114, which need nothing more than a USB to UART adaptor to program them. SMD doesn’t have to be scary; long before I even considered handling a [T]QFP component I was soldering SOIC chips to protoboards. And of course there are SOIC/[T]QFP to DIP adaptor boards.

You are right about losing 5v, though personally that wasn’t much of a loss. Almost every 5v component/breakout board I had at the time I made the transition also worked at 3.3v. It isn’t the same issue it used to be, especially since 3.3v is rapidly becoming the norm.

As for 1.8v, give it a couple of years and that will be the new black, but for right now there isn’t much accessible to hobbyists that only works down at that range.

Don’t get me wrong, I think you have done a cool thing; but ARM isn’t as bad as you are making it out to be. Give it a try, the water’s warm.

I’m going to agree and amplify @Red; I think that HAD has become a bit obsessed with processor elitism and has lost sight of its supposed mission to present clever/interesting/informative/amazing “hacks” – stuff of all kinds put together in new/unusual/useful ways. Stuff includes something beyond electron switching – don’t forget that the Arduino and related development software was put out there to get “smart” electronics, and therefore motion, sound and blinky lights into…ART PROJECTS. Really.

Let’s not have this site dissolve into “Popular Electronics” anytime soon, despite the new parent company’s commercial motives.

If you are a hobbyist or not price sensitive, then by all means use any processor you like/are familiar with/whatever and more power to you. However it can be easy to get stuck in an AVR/PIC/Whatever world and not be aware of what is happening around you.

Sometimes it is worth learning a new architecture. The 1284p is a really nice processor, but it is a tad expensive if you use them regularly.

1284P prices range from $5 to $8 apiece. That is well into the price range of ARM chips with faster speeds, more capable peripherals and more memory. The other thing is that there is no silly distinction between program and data memory, and no need for macros (e.g. PSTR()) to store data in FLASH.

At the low end, you can get PSoC4 CY8C4013SXI-400 at $0.62 QTY1 from digikey. PSoC® 4200 Prototyping Kit is $4.

As far as I am concerned, it was worth my time to learn to use them, as in the long run they cover a wider range of applications from multiple vendors than a single-sourced 8-bit part.

I have 25 of the 1284p around here still so I will be using them. A very capable chip. They were a bit cheaper back when I bought them than they are now. ARM is nice but I am very comfortable with the AVR line and they are a good fit for many projects.

And if you have the inventory then bully for you. But if you didn’t, would you still be as bullish on the 1284p?

The ATmega1284 is pin compatible with the ATmega644, ATmega16, ATmega32 and probably a lot more. You can buy an ATmega16 / ATmega32 minimum system board with USBasp on eBay for about $6. The ATmega644 and ATmega1284 will drop straight into this board. The USBasp can be used to load the bootloader. By pin compatible I mean power, ground, clock, IO etc.; different devices have different PWM, SPI, UART, ADC etc.

The Arduino IDE gcc toolchain supports all of these devices. You just need to write/edit new boards.txt and pins_arduino.h files. If that’s a challenge then just copy them from any Arduino variant (of the same major version) that supports these devices. Older versions of the Arduino IDE require you to EDIT boards.txt as they only support one boards.txt file.
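For anyone who has not opened boards.txt before, an entry is just a flat list of `id.key=value` lines. Here is a minimal sketch in Python that builds one using the Arduino 1.0 key names as I understand them. The board id `my1284`, the display name, the 1K bootloader size and the fuse bytes are all placeholder assumptions, and a real entry also needs the bootloader path/file and lock-bit keys, so check it against a known-good boards.txt before using it:

```python
def board_entry(board_id, name, mcu, f_cpu_hz, flash_bytes,
                bootloader_bytes, fuses, variant):
    """Build boards.txt lines for one board (Arduino 1.0 style key names)."""
    low, high, ext = fuses
    props = [
        ("name", name),
        ("upload.protocol", "arduino"),
        # Space left for sketches once the bootloader has taken its share.
        ("upload.maximum_size", str(flash_bytes - bootloader_bytes)),
        ("upload.speed", "115200"),
        # Fuse bytes written when the IDE burns the bootloader.
        ("bootloader.low_fuses", f"0x{low:02x}"),
        ("bootloader.high_fuses", f"0x{high:02x}"),
        ("bootloader.extended_fuses", f"0x{ext:02x}"),
        ("build.mcu", mcu),
        ("build.f_cpu", f"{f_cpu_hz}L"),
        ("build.core", "arduino"),
        # Name of the variant folder that holds pins_arduino.h.
        ("build.variant", variant),
    ]
    return "\n".join(f"{board_id}.{key}={value}" for key, value in props)


# Placeholder values throughout: adjust to your board and bootloader.
entry = board_entry(
    board_id="my1284", name="My 1284p board (16 MHz)", mcu="atmega1284p",
    f_cpu_hz=16_000_000, flash_bytes=128 * 1024, bootloader_bytes=1024,
    fuses=(0xFF, 0xDE, 0xFD), variant="standard",
)
print(entry)
```

The `bootloader.*_fuses` keys are the ones the IDE uses when burning a bootloader, so editing them is one way to control fuse settings from inside the Arduino environment.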

Also, there are a lot of variations of pins_arduino.h to match all the variants of board layout / markings, especially for the ATmega1284. If you’re just using one development board for any of these chips then choose / write a pins_arduino.h file to match its layout / markings. If you are using several different boards with these chips and they have different layouts / markings, or you are making your own board, then it’s probably better to use the actual pin numbers (not recommended).

Some caveats:

The clock speed of the hardware MUST match the bootloader. There are many different bootloaders for different speeds.
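One reason the speeds must match is that the bootloader's serial baud rate is derived from the clock frequency it was compiled for. A rough sketch of the arithmetic, assuming the AVR USART in normal-speed asynchronous mode (divisor 16; a bootloader that uses double-speed mode would use 8 instead) and an example baud rate of 57600:

```python
def ubrr_for(f_cpu_hz, baud):
    """Baud-rate register value for normal-speed async mode, nearest integer."""
    return round(f_cpu_hz / (16 * baud)) - 1


def actual_baud(f_cpu_hz, ubrr):
    """Baud rate the USART really produces for a given clock and register value."""
    return f_cpu_hz / (16 * (ubrr + 1))


BUILT_FOR_HZ = 16_000_000   # clock the bootloader was compiled for (example)
WANTED_BAUD = 57_600        # baud rate the upload tool will use (example)

ubrr = ubrr_for(BUILT_FOR_HZ, WANTED_BAUD)

for real_clock in (16_000_000, 8_000_000):
    baud = actual_baud(real_clock, ubrr)
    error = (baud - WANTED_BAUD) / WANTED_BAUD * 100
    print(f"{real_clock / 1e6:.0f} MHz part: {baud:.0f} baud ({error:+.1f}% off)")
```

The register value is baked in at compile time, so the same bootloader on an 8 MHz part talks at half the expected rate and the upload tool never gets a valid reply.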

The clock type (RC, Resonator, Crystal) is set by the fuse bits, and they are written when you load the bootloader, so you have to use the same type of clock generator. The clock generator type CAN be changed after the bootloader has been loaded by simply changing the fuse bits. This can be done with Atmel AVR Studio, and I think there is a way to set different fuse bits before burning the bootloader in the Arduino IDE, but I don’t know how.

Perhaps someone else can answer that question.
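To make the fuse-bit point concrete, here is a small Python sketch that decodes the clock-related bits of a low fuse byte. The CKSEL ranges are transcribed from my reading of the ATmega164/324/644/1284 datasheet's clock-source table, so treat them as an assumption and verify against the datasheet; the function and table names are made up for illustration:

```python
# (lowest CKSEL, highest CKSEL, clock source), per the assumed datasheet table.
CKSEL_SOURCES = [
    (0b0000, 0b0000, "external clock"),
    (0b0010, 0b0010, "calibrated internal RC oscillator"),
    (0b0011, 0b0011, "internal 128 kHz RC oscillator"),
    (0b0100, 0b0101, "low-frequency crystal oscillator"),
    (0b0110, 0b0111, "full-swing crystal oscillator"),
    (0b1000, 0b1111, "low-power crystal oscillator"),
]


def decode_low_fuse(lfuse):
    """Decode the clock-related fields of an AVR low fuse byte."""
    cksel = lfuse & 0x0F  # CKSEL3..0 live in the low nibble
    source = next(
        (name for lo, hi, name in CKSEL_SOURCES if lo <= cksel <= hi),
        "reserved",
    )
    return {
        "clock_source": source,
        # Fuse bits are active-low: a 0 bit means the fuse is programmed.
        "ckdiv8": not (lfuse & 0x80),
        "ckout": not (lfuse & 0x40),
    }


print(decode_low_fuse(0x62))  # factory default, as I understand it
print(decode_low_fuse(0xFF))  # a typical external-crystal setting
```

A ceramic resonator uses the same crystal oscillator settings, with a different start-up time selected by the SUT bits that this sketch ignores.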

I use “Khazama AVR Programmer” with Atmel Studio. It is a GUI for USBasp (that uses AVRDUDE). There is a GUI fuse setting. Never bothered with Arduino here, so can’t tell you what to do inside the environment. You can certainly set the fuses with the AVRDUDE command line.
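For the command-line route, the fuse writes are `-U` memory operations. A minimal Python sketch that assembles the command, assuming a USBasp programmer and a 1284p (`-c usbasp -p m1284p`); the fuse bytes are example values only, so run them through a fuse calculator before writing them to a chip:

```python
import shlex


def avrdude_fuse_command(low, high, ext, programmer="usbasp", part="m1284p"):
    """Return an avrdude argument list that writes the three fuse bytes."""
    args = ["avrdude", "-c", programmer, "-p", part]
    for memory, value in (("lfuse", low), ("hfuse", high), ("efuse", ext)):
        # memory:w:value:m means write an immediate value to that memory.
        args += ["-U", f"{memory}:w:0x{value:02x}:m"]
    return args


# Example fuse bytes, not a recommendation.
cmd = avrdude_fuse_command(0xFF, 0xDE, 0xFD)
print(shlex.join(cmd))
```

Building the argument list rather than a single string means it can be handed straight to `subprocess.run(cmd)` without shell quoting problems.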

There is also a different way of specifying the fuse settings inside GCC. They get passed along in the ELF file.

http://www.nongnu.org/avr-libc/user-manual/group__avr__fuse.html

Really hate to be that guy, what exactly is novel here?

This is something I concocted about two months ago, since I had little time to build an entire robotic arm controller and wanted extra modularity and I had these microcontrollers laying around gathering dust.

https://i.imgur.com/eeQCF9o.jpg

And no, this thing is not Arduino compatible [at least I did not bother to check for compatibility], nor do I care for it to be. The IC has no bootloader and it runs from an external 20MHz crystal.

That being said, props, I guess.

Cheers.

If you are doing a double sided board you might as well do a double sided load and reduce the size of the board considerably – here’s what 30 min of messing with the board layout got me: http://imgur.com/RIJGIZx 2.50″x1.00″, all headers on 0.10″ grid.

Working with the raw processor is somewhat daunting for those who’ve never burned a custom bootloader.

Fortunately, there are always a few pre-made 1284p boards to help beginners work their way up to Dave’s level:

https://thecavepearlproject.org/2020/05/11/build-an-atmega-1284p-based-data-logger/